After Update 2.7.2 / 23.09.1

-

Yes, the dynamic repo system allows us to push update branches based on the exact running version. Since downgrading is not supported, there is no point in ever showing a branch earlier than the running version. So that is indeed expected.
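As a rough illustration of that rule only (this is not Netgate's implementation, which isn't shown here), a POSIX shell sketch that keeps just the branch versions at or above the running version could look like this. The function names `version_ge` and `filter_branches` are hypothetical, and the sketch assumes purely numeric, dot-separated versions such as 23.09.1.

```shell
# Hypothetical sketch: hide update branches older than the running version.

# version_ge A B: succeed (exit 0) if version A >= version B,
# comparing dot-separated numeric components ("23.09" is treated as 23.9).
version_ge() {
    awk -v a="$1" -v b="$2" 'BEGIN {
        na = split(a, x, "."); nb = split(b, y, ".")
        n = (na > nb) ? na : nb
        for (i = 1; i <= n; i++) {
            xi = (i <= na) ? x[i] + 0 : 0   # missing components count as 0
            yi = (i <= nb) ? y[i] + 0 : 0
            if (xi > yi) exit 0
            if (xi < yi) exit 1
        }
        exit 0                              # all components equal
    }'
}

# filter_branches RUNNING CANDIDATE...: print only candidates not older than RUNNING.
filter_branches() {
    running=$1
    shift
    for v in "$@"; do
        if version_ge "$v" "$running"; then
            printf '%s\n' "$v"
        fi
    done
}
```

For example, `filter_branches 23.09.1 23.05.1 23.09 23.09.1` prints only `23.09.1`.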

Steve

-

So my first mistake was thinking today was Monday.

Set the update branch; 23.09.1 was waiting. Pulled the trigger.

No errors, and the system comes back on 23.09.1. Seems good.

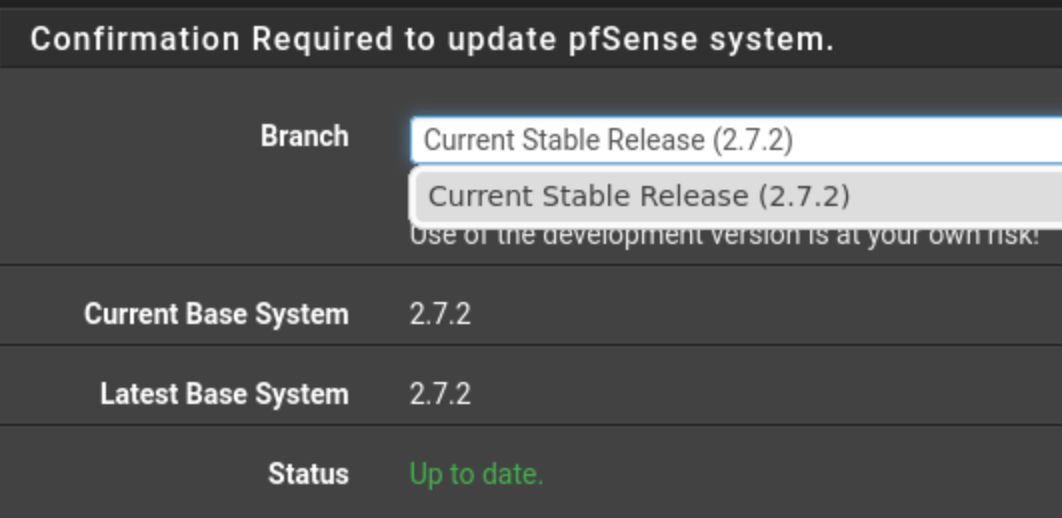

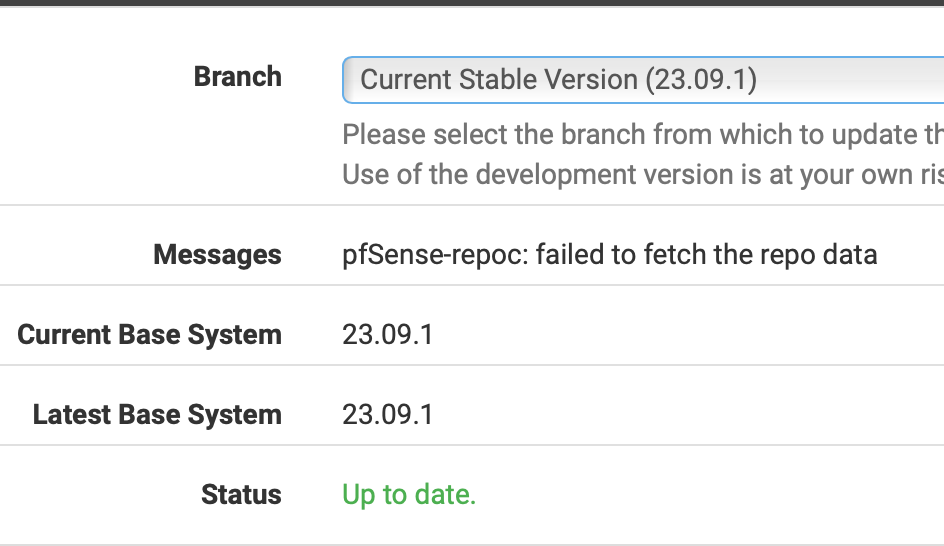

Except now, when I go to the update page, the branch shows correctly and only has the one entry we expect,

but it displays:

In all other respects the system appears "normal" and functioning.

I can see both Installed Packages and Available Packages. Everything looks normal in this regard. pfSense-repoc returns:

pfSense-repoc: failed to fetch the repo data

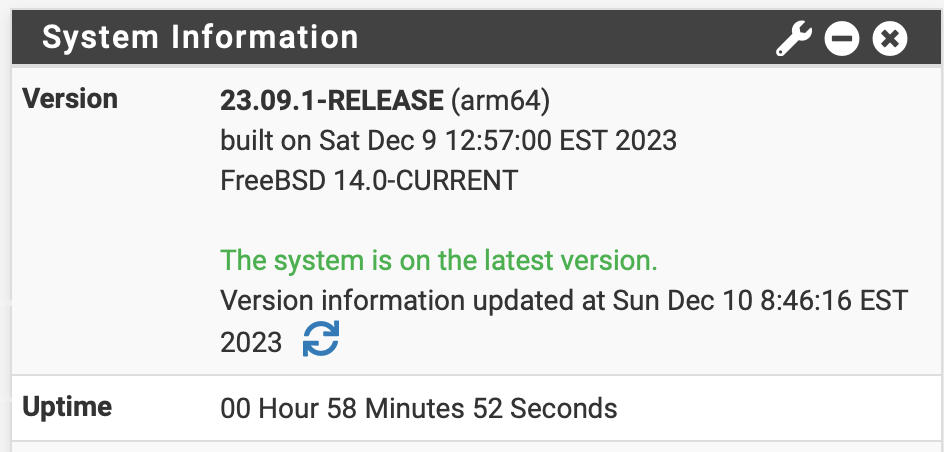

failed to read the repo data.

What's weird is that the Dashboard shows:

I can refresh that on the widget, and it updates the version info's updated time as expected.

Also, just curious about the build time displayed there, as Dec 9 seems to be after the release?

The boot log shows the 6th:

FreeBSD 14.0-CURRENT aarch64 1400094 #1 plus-RELENG_23_09_1-n256200-3de1e293f3a: Wed Dec 6 20:59:18 UTC 2023

Maybe I've just never noticed this difference (if it was there) in all the previous versions.

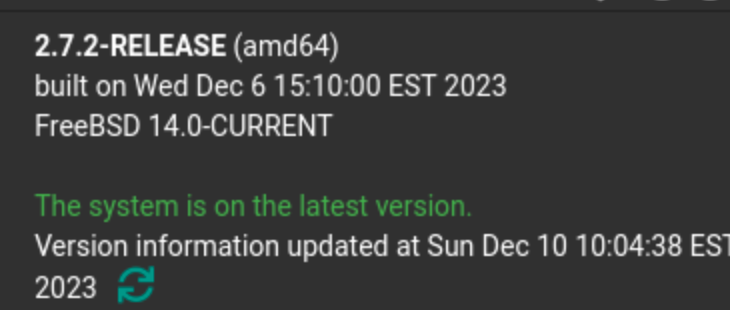

Edit: well, now that is interesting. I loaded up my test VM,

and the built-on date is the same day! (Time zone adjusted, but the time is off by minutes, not days.)

FreeBSD 14.0-CURRENT amd64 1400094 #1 RELENG_2_7_2-n255948-8d2b56da39c: Wed Dec 6 20:45:47 UTC 2023

So the production box seems to be displaying something that is 3 days off, which is more than a time zone difference.

The current Date/Time on both machines is spot on. But clearly one of these things is not like the other.

-

The timestamp on the update check is just whenever it last checked.

The buildtime may show something newer if it has pulled in newer pkgs. Check /etc/version.buildtime.

-

The bigger problem is that a lot of people (including me) seem to have lost the repo earlier this morning. In my case it seems to have happened after the install completed; for others, according to the forum, not so much.

A trace from here gets to:

13 fw1-zcolo.netgate.com (208.123.73.4) 43.020 ms 43.208 ms 42.889 ms

14 * * *

After the trace hits the Netgate colo, it's gone.

The repo came back about 20 minutes after I replied.

-

Build time, so any package?

Because other than the update itself, nothing has been installed. The file contains:

20231209-1757

That matches the date shown on the dashboard, but not the boot line, which says the 6th.

buildtime may show something newer if it's pulled in newer pkgs

So what I think you are saying is that packages on a released build are changing in the background, after the release?

Therefore, folks who updated earlier will have a different system from those who update later. That seems like the potential for a support nightmare.

-

If it's a pfSense package it would show as an available upgrade. We commonly upgrade packages for fixes between releases when required.

Though I would not expect that to show in the system buildtime like that.

-


@stephenw10 said in After Update 2.7.2:

it would show as an available upgrade

Or, looking at the upgrade log from this morning, pfBlockerNG was upgraded automatically from _6 to _7. That doesn't really make much sense either, because this package was available before the build date referenced. _7 was not installed on this system prior to 23.09.1,

but it was upgraded during the update:

>>> Setting vital flag on pfSense... done. >>> Unlocking package pfSense-pkg-Backup... done. >>> Unlocking package pfSense-pkg-System_Patches... done. >>> Unlocking package pfSense-pkg-acme... done. >>> Unlocking package pfSense-pkg-apcupsd... done. >>> Unlocking package pfSense-pkg-pfBlockerNG... done. >>> Upgrading necessary packages... Checking for upgrades (1 candidates): . done Processing candidates (1 candidates): . done Checking integrity... done (0 conflicting) The following 1 package(s) will be affected (of 0 checked): Installed packages to be UPGRADED: pfSense-pkg-pfBlockerNG: 3.2.0_6 -> 3.2.0_7 [pfSense] Number of packages to be upgraded: 1 [1/1] Upgrading pfSense-pkg-pfBlockerNG from 3.2.0_6 to 3.2.0_7... [1/1] Extracting pfSense-pkg-pfBlockerNG-3.2.0_7: .......... done Removing pfBlockerNG components... Menu items... done. Services... done. Loading package instructions... Removing pfBlockerNG... All customizations/data will be retained... done. Saving updated package information... overwrite! Loading package configuration... done.

That now likely explains why DNSBL needed a force reload to get it running after the system came back up. I thought it odd to have to do that after a system upgrade. Normally DNSBL is still intact and just starts. Through all the 23.09 betas and the versions before, I never had pfB auto-update with the system. Normally the packages page "would show as an available upgrade" after the system update, and you'd have to do it manually.
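If you want to see which add-on packages were pulled forward by a system upgrade, a sketch like the following can pick the `Upgrading pfSense-pkg-... from ... to ...` entries out of a saved upgrade log. The function name `addon_upgrades` is hypothetical, the line format is assumed from the log excerpt above only, and the log's location on disk is not covered here.

```shell
# Hypothetical helper: list add-on packages (pfSense-pkg-*) that an upgrade log
# reports as upgraded, as "name old -> new". It scans every field, so it copes
# with a log saved one message per line or pasted as a single line.
addon_upgrades() {
    awk '{
        for (i = 1; i + 5 <= NF; i++) {
            if ($i == "Upgrading" && $(i + 1) ~ /^pfSense-pkg-/ &&
                $(i + 2) == "from" && $(i + 4) == "to") {
                new = $(i + 5)
                sub(/\.+$/, "", new)    # drop the trailing "..." progress marker
                print $(i + 1), $(i + 3), "->", new
            }
        }
    }' "$@"    # reads the named file(s), or stdin when none are given
}
```

Fed the excerpt above, it prints `pfSense-pkg-pfBlockerNG 3.2.0_6 -> 3.2.0_7`; base packages such as `unbound` are skipped because of the `pfSense-pkg-` filter.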

This feels like different handling of installed packages than before. Is it documented?

That opens another question. I have pfBlocker set to keep its configuration during package updates. I imagine that is what is happening/recorded here in the update.log shown:

Removing pfBlockerNG... All customizations/data will be retained... done.

So what happens to someone who doesn't have the option checked? Is their configuration lost, or does the package getting auto-updated during a system upgrade override that package setting and retain the settings anyway? Just trying to determine if someone may be asking "I installed 23.09.1 and all my pfB settings are gone." in the near future.

-

Nothing has changed there. When the upgrade is run, the current packages are pulled in.

Retaining the config is the default setting there, so it's retained even if it's unselected. It should always be retained across an update to the package.

-

Fair enough. Pretty sure back in 22.?? the pfB update didn't appear until checking the packages page, and it had to be updated from there manually. (Maybe that was just because the package wasn't actually released until a short time after the system update in that case.)

_7 was released well before the 23.09.1 release, but had not been installed on this system.

Thanks for the clarity

Though I would not expect that to show in the system buildtime like that.

The _7 release was built and released well before the 9th. It was installed and running on our test machine before that machine was upgraded to 2.7.2 on Friday.

So what we are seeing/suggesting is that a released package is apparently being rebuilt with the same version number after release and slipstreamed into the full release. To us, that is just bad practice.

If someone updates to 23.09.1 a week from now, are they likely to get yet another build date? (If the build has a flaw, for any reason, how do we tell them apart? They are all _7.)

There might be a perfectly good reason for rebuilding a released package, but generally that should/would go hand in hand with a new revision in the version.

-

There is only ever one version of the package in the repo. When you upgrade pfSense versions, it upgrades any pkgs available. So after an upgrade you should always have all the current pkg versions. That has always been the case.

None of that explains the unexpected build timestamp though.

Does the output of:

cat /etc/version.buildtime

match:

pkg-static info -A pfSense | /usr/bin/awk '/build_timestamp/ {print $2;}'
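To put the three timestamps discussed here side by side, a minimal POSIX shell sketch can reduce each format seen in this thread (the /etc/version.buildtime string, the pkg build_timestamp annotation, and the date at the end of the kernel boot line) to YYYY-MM-DD. The helper names `to_date` and `same_day` are hypothetical, and the formats are assumed from the values quoted in this thread only.

```shell
# Hypothetical helper: reduce the timestamp formats quoted in this thread to YYYY-MM-DD.
to_date() {
    case $1 in
        [0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9]-*)
            # /etc/version.buildtime style, e.g. 20231209-1757
            printf '%s\n' "$1" | awk '{ print substr($0, 1, 4) "-" substr($0, 5, 2) "-" substr($0, 7, 2) }' ;;
        [0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]T*)
            # pkg build_timestamp annotation style, e.g. 2023-12-09T19:39:03+0000
            printf '%s\n' "$1" | cut -c1-10 ;;
        *)
            # date part of the kernel boot line, e.g. "Wed Dec 6 20:59:18 UTC 2023"
            printf '%s\n' "$1" | awk '{
                m = index("JanFebMarAprMayJunJulAugSepOctNovDec", $2)  # 1, 4, 7, ...
                printf "%s-%02d-%02d\n", $NF, (m + 2) / 3, $3
            }' ;;
    esac
}

# same_day T1 T2...: succeed only if every timestamp falls on the same calendar day
# (no time-zone conversion is attempted).
same_day() {
    first=$(to_date "$1")
    shift
    for t in "$@"; do
        [ "$(to_date "$t")" = "$first" ] || return 1
    done
    return 0
}
```

On the firewall, the two values can be fed straight from the commands above, e.g. `same_day "$(cat /etc/version.buildtime)" "$(pkg-static info -A pfSense | /usr/bin/awk '/build_timestamp/ {print $2;}')"`; for the kernel, pass only the date portion of the boot line.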

-

Interesting,

So the first one I already posted above (and it still is):

20231209-1757

For the pkg-static one it is:

2023-12-09T19:39:03+0000

and from the boot log it is:

FreeBSD 14.0-CURRENT aarch64 1400094 #1 plus-RELENG_23_09_1-n256200-3de1e293f3a: Wed Dec 6 20:59:18 UTC 2023

The 9th being a Saturday, with the official release before that, and the install on Sunday the 10th.

On the 2.7.2 test box, as mentioned, the dates are more in line. It was installed Friday (the 8th), and all three dates there are on the 6th.

It sounds like the repo perhaps got something different from what was first released, sometime between Saturday and Sunday morning?

The test box is a VM; I wonder what would happen if I went back to the 2.7.1 snapshot and applied the update again now. That might be a test for later, if time permits. Other fish in the pond right now.

(Although I could, I am not going to do this on the production box by going back to the ZFS snapshot. It seems to be working fine. Don't fix it.)

This certainly isn't a crisis, by the way. It just seems odd.

-

Yup, it's just confusing this close to a release. In fact, I believe it's expected. Still digging, though...

-

Don't dig too hard...

It's just odd that once a product is released, the repo would end up with newer builds. A test repo, sure, but production just seems like it should be frozen in time until a new release requires a new repo.

Maybe the "new" build was just to accommodate whatever took the repos offline for most of yesterday. (Although they went away shortly after I did the upgrade, and then remained unavailable for several hours.)

-

I don't really want to keep hijacking the other thread or create a new one for this. So this will be completely out of context on this thread, and people can just scratch their heads and ignore it.

However, over there you said:

Open a feature request for it if you want. However that is the expected behaviour it's not a bug.

Sorry, but anything that allows Joe User to shoot themselves in the foot (and this does) should not be "expected behaviour".

It is the current behaviour, true, and I recognized that right away, but it should not be labeled "expected" just because it is "current".

Your comment, as a Netgate rep, makes me unsure I really care enough about it to create a change request either. It's not really a problem I have: I recognized right away what it was doing and know what it's doing. But Joe U might not, and the position that it is "expected" is typical of so many companies that know full well it has the potential to break stuff for Joe U and elect to call it expected, not a bug. It just baffles me.

My 2 cents.

I've removed my messages on the subject from the other thread.

-

If you add a feature request, then other devs can make comments or suggestions on there.

My own opinion is that if you are able to add custom patches, then we are limited in what we can or should do to prevent foot-shooting. There's nothing to stop someone adding a custom patch that breaks everything, whether by applying or reverting it. We could prevent adding custom patches that way at all, but there are a lot of people using that. We could perhaps improve the warnings around using the package at all.

-

@stephenw10 said in After Update 2.7.2:

limited in what we can or should do

Respectfully, I disagree. You can, as I suggested:

- display a warning on save (of a new one that can't actually apply)

and

during/after an upgrade, scan the patches and make the same determination. Don't just automatically apply the ones set to auto-apply if the changes already exist; flag them in the GUI as not required.
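The second suggestion (checking whether a patch's changes already exist) maps onto a standard trick: a patch that fails a forward dry run but passes a reverse (`-R`) dry run is most likely already applied. A minimal sketch under that assumption follows; `patch_state` is a hypothetical name, and this is not how the System Patches package currently decides anything.

```shell
# Hypothetical sketch of the proposed check, not the System Patches package's logic:
# classify a unified diff against the tree it targets.
# patch_state PATCHFILE DIR -> prints "applies", "already-applied" or "conflict".
patch_state() {
    # -f:   never prompt, and do not guess that a forward patch is "reversed".
    # -F 0: require exact context so fuzz cannot make an applied patch look applicable.
    if patch -d "$2" -p1 -f -F 0 --dry-run < "$1" >/dev/null 2>&1; then
        echo applies
    elif patch -d "$2" -p1 -f -F 0 -R --dry-run < "$1" >/dev/null 2>&1; then
        echo already-applied
    else
        echo conflict
    fi
}
```

A GUI could then show "not required" for `already-applied` instead of auto-applying, and warn on `conflict` at save time. Exact context is stricter than patch's default fuzz, so this may report `conflict` for a patch that would in fact apply with fuzz.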

If I find some cycles, I'd actually consider "patching" this myself. That's how serious the problem could be for our friend Joe U.

There's nothing to stop someone adding a custom patch that breaks everything

That's true, you can't fix stu..pid. But that's not the case here: this is 100% a preventable situation on both counts, and the system should therefore protect itself and remain as flexible as it can within the framework.

lot of people using that

Yup, for sure. Me included. That's the main way I fix stuff that is "broken", that I know will never get fixed, but that is required for operation in our environment. I have a laundry list (actually a set of 8 patches at this time) that get reverted before every major update, re-evaluated with every new release, and reapplied as required. ... I'm not open to discussing what those 8 items are in an open forum.

But if, in some cases, the Redmine issue is closed as "not required", there is generally no point in continuing; it's just easier to fix it and move on.

Over and above those 8, there are Redmine items, for example one we (you and I, as I recall, but I could be wrong on that) have discussed in the past, regarding the Traffic Graph Widget of all things.

That Redmine issue shows affected version 23.05.1, Status New and 0% done. Even though there is a patch on the ticket, there hasn't been a single comment since 10/28/2023 except from one person who commented (a month ago) that "I can reproduce this in version 23.09".

I guess I could also just go on to Redmine and comment saying "still in 23.09.1, but the patch still works." It seems futile, since it hasn't even been looked at in 2 months. Bigger fish and all.

Thanks for the banter,


-

What was seconds, is now in the minutes category.

I can confirm this observation, interestingly not right after the upgrade, though, but 24 hrs after a final reboot.

Here fetching both the installed and available packages takes like 30 seconds.

The Update page takes about 30 seconds to open and a further 30 seconds to check if the installation is up to date or not.

Opening the update settings page then also takes ~30 seconds to load...

I came from 2.7.1, where I didn't notice this behaviour. pfSense is running on a physical box, CPU usage is low, and the bandwidth is fine, so I can't see any bottleneck there…

More an annoyance than a true issue though….

-

Here fetching both the installed and available packages takes like 30 seconds.

Not here; those pages are pretty quick, maybe around 10-15 seconds to open and display the packages.

The Update page takes about 30 seconds to open

Longer here; I really haven't looked into it. This is where the delay is for me, just loading the page. And switching tabs is just as bad, which doesn't make sense.

further 30 seconds to check if the installation is up to date or not.

Once it loads, another 10-20 seconds is typical for checking if there is something available. This would be while the cog is spinning (this seems pretty normal).

I don't really spend a lot of time going to that page, so it's almost never an issue. There is enough noise when a new version is available that it becomes really obvious when it is time to check.

That said, still the same observation today.

So for me this item is filed under the category of "it is what it is".

-

@jrey said in After Update 2.7.2:

That's true, you can't fix stu..pid. But that's not the case here - this is 100% a preventable situation on both counts, and the system should therefore protect itself and remain flexible as much as it can within the framework.

As I say, open a feature request for it. You may find other developers agree with you. It wouldn't be me coding that anyway.

-

@stephenw10 said in After Update 2.7.2:

Still digging though..

Add this to the low-priority "dig" re packages (dig being the reference for Unbound) in the update (23.09.1):

The update log shows:

unbound: 1.18.0 -> 1.18.0_1 [pfSense] Number of packages to be upgraded: 9 [1/9] Upgrading unbound from 1.18.0 to 1.18.0_1... ===> Creating groups. Using existing group 'unbound'. ===> Creating users Using existing user 'unbound'. [1/9] Extracting unbound-1.18.0_1: .......... done

Unbound is working with no issue, but it is logging itself as 1.18.0, and running the command

unbound -V

reports 1.18.0, whereas in the 2.7.2 release there is no mention of Unbound being upgraded to _1. unbound -V also reports 1.18.0 on that version.

Maybe that's the package that got slipped in? Or is it expected? Either way, on 23.09.1 it is not reporting what it says was installed.

We can talk about the curl package too. It was upgraded on 23.09.1 and is now running 8.5.0, but 2.7.2 is still running 8.4.0. Maybe that is also expected?
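To spot which packages one upgrade pulled in that the other didn't (curl and unbound here), the two "Installed packages to be UPGRADED:" listings can be compared mechanically. A minimal sketch, with hypothetical function names `upgrade_rows` and `only_in_first`, assuming the `name: old -> new` entry format shown in these logs; it relies on `grep -o`, which GNU and FreeBSD grep both support but POSIX does not require.

```shell
# Hypothetical helpers for comparing the "Installed packages to be UPGRADED:"
# sections of two pkg upgrade logs, pasted one entry per line or flattened.

# upgrade_rows: read a listing on stdin, print "name old new" per upgraded package.
upgrade_rows() {
    grep -oE '[A-Za-z0-9._+-]+: [^ ]+ -> [^ ]+' |
        awk '{ sub(/:$/, "", $1); print $1, $2, $4 }'
}

# only_in_first LOG1 LOG2: names upgraded in LOG1 but not in LOG2, sorted.
only_in_first() {
    {
        printf '%s\n' "$2" | upgrade_rows | awk '{ print "B", $1 }'
        printf '%s\n' "$1" | upgrade_rows | awk '{ print "A", $1 }'
    } | awk '$1 == "B" { seen[$2] = 1; next } !($2 in seen) { print $2 }' | LC_ALL=C sort
}
```

Passing the 23.09.1 listing quoted in this post as the first argument and the 2.7.2 listing as the second prints `curl`, `pfSense-default-config-serial` and `unbound`; the middle entry only reflects the two boxes using differently named default-config packages.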

From the 23.09.1 upgrade:

Installed packages to be UPGRADED: curl: 8.4.0 -> 8.5.0 [pfSense] isc-dhcp44-relay: 4.4.3P1_3 -> 4.4.3P1_4 [pfSense] isc-dhcp44-server: 4.4.3P1_3 -> 4.4.3P1_4 [pfSense] openvpn: 2.6.5 -> 2.6.8_1 [pfSense] pfSense: 23.09 -> 23.09.1 [pfSense] pfSense-default-config-serial: 23.09 -> 23.09.1 [pfSense] pfSense-repo: 23.09 -> 23.09.1 [pfSense] strongswan: 5.9.11_1 -> 5.9.11_3 [pfSense] unbound: 1.18.0 -> 1.18.0_1 [pfSense]

From the 2.7.2 upgrade:

Installed packages to be UPGRADED: isc-dhcp44-relay: 4.4.3P1_3 -> 4.4.3P1_4 [pfSense] isc-dhcp44-server: 4.4.3P1_3 -> 4.4.3P1_4 [pfSense] openvpn: 2.6.7 -> 2.6.8_1 [pfSense] pfSense: 2.7.1 -> 2.7.2 [pfSense] pfSense-default-config: 2.7.1 -> 2.7.2 [pfSense] pfSense-repo: 2.7.1 -> 2.7.2 [pfSense] strongswan: 5.9.11_2 -> 5.9.11_3 [pfSense]

-

The curl pkg was updated in the repo. Upgrades done after it was updated will have 8.5.0. Anything that was already upgraded can get it using

pkg upgrade

if needed.

The Unbound package had the port revision bumped to pull in a fix to a patch we carry:

https://github.com/pfsense/FreeBSD-ports/commit/4749e98e2dbffb8e725f68e5b437c5056c55a0bc
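The `_1` in `1.18.0_1` is that port revision: FreeBSD package versions follow the `PORTVERSION[_PORTREVISION][,PORTEPOCH]` convention, and only the PORTVERSION part is the upstream release that a program like Unbound reports about itself. A small sketch for splitting the two; `upstream_version` and `port_revision` are hypothetical helper names.

```shell
# Split a FreeBSD package version string, which follows the
# PORTVERSION[_PORTREVISION][,PORTEPOCH] convention, into its parts.

# upstream_version VERSION: the PORTVERSION part, e.g. 1.18.0_1 -> 1.18.0
upstream_version() {
    v=${1%%,*}                  # drop ",PORTEPOCH" if present
    printf '%s\n' "${v%_*}"     # drop "_PORTREVISION" if present
}

# port_revision VERSION: the PORTREVISION part, 0 when there is none
port_revision() {
    v=${1%%,*}
    case $v in
        *_*) printf '%s\n' "${v##*_}" ;;
        *)   printf '0\n' ;;
    esac
}
```

On the firewall, `upstream_version "$(pkg-static query %v unbound)"` should then print the same 1.18.0 that `unbound -V` shows, while `port_revision` exposes the `_1` bump.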