Suricata blocking IPs on passlist, legacy mode blocking both

-

@bmeeks I thought of that earlier and, as a test, raised the Base Advertising Frequency from 1 to 10 on WAN only, but it didn't help there. That was with hardware checksum offloading enabled, though, so I will probably have to redo that test, maybe with an even higher base number, to rule that out. But I think the next step will be to move this Suricata instance from WAN to an internal interface, switch to inline mode, and see if it runs stable on these Netgate 6100s.

I'm not sure disabling hardware checksum offloading did anything in our case either.

Perhaps the combination of running ET Pro rules (longer rule loading time), the amount of traffic at the time Suricata starts, and CARP/HA makes this setup more likely to hit the issue.

@btspce said in Suricata blocking IPs on passlist, legacy mode blocking both:

@bmeeks I thought of that earlier and as a test raised the Base Advertising Frequency from 1 to 10 on wan only but it didn't help there. But that was with hardware checksum offloading enabled. So I will probably have to redo that test and maybe with an even higher base number to rule that out. But I think the next step will be to move this suricata instance from wan to an internal interface and switching to inline and see if it runs stable on these Netgate 6100.

I'm not sure disabling hardware checksum offloading did anything in our case either.

Perhaps the combination of running ET Pro rules (longer rule loading time), amount of traffic at the time of suricata starting and carp/ha makes this setup more likely to hit the issue.

I agree that the presence of CARP/HA is likely the cause of this problem. As I mentioned before, it's not a configuration I've ever tested with Suricata (nor Snort, for that matter). And the more traffic flowing over the interface, the more likely it is that a packet will trigger an alert while one of the interface IPs has been deleted from the Radix Tree (and before it gets added back to the tree).

So, do you not run HA on the internal interfaces? I would think that wherever CARP/HA is in place (WAN, LAN, or elsewhere) that the interface flapping would happen.

While Inline IPS Mode will eliminate permanent blocks of an interface IP, it can still result in traffic interruptions if a DROP rule triggers. But those interruptions should not impact packets associated with the CARP protocol unless a rule fires a false positive on that traffic.

Also be aware that Inline IPS Mode is not available for all NIC types, but it should be available and work for the NICs in the SG-6100 box.

-

@bmeeks We do run HA on internal interfaces as well. Moving the Suricata instance from WAN to one of the internal interfaces is simply to limit the traffic it sees when switching to inline, as the load will increase. But it's not perfect either, because now we have to rearrange or bypass some of the internal traffic that does not need to be scanned by Suricata, to limit the throughput drop on that side. I will probably do the switch this weekend if possible and report back.

We did use inline mode a few years ago on XG-7100 but it wasn't stable enough and legacy mode solved all issues at the time. But there has been a lot of development since then.

-

@btspce said in Suricata blocking IPs on passlist, legacy mode blocking both:

We did use inline mode a few years ago on XG-7100 but it wasn't stable enough and legacy mode solved all issues at the time. But there has been a lot of development since then.

Yes, a lot of work has gone into the netmap device driver over the last couple of years, especially with regard to multiple host rings support in Suricata.

You will almost certainly want to change the Suricata Run Mode from AutoFP to workers on the INTERFACE SETTINGS tab in the Performance section. That will usually work much better with netmap on multi-core CPUs if you also have multi-queue NICs. But experiment with both modes: for a small handful of users, AutoFP has performed better. It depends a lot on the particular NIC.

-

@bmeeks I'm now up and running in inline mode on two internal interfaces, in workers mode. One of the interfaces has VLANs on it.

Hardware checksum offloading and flow control are disabled on the relevant parent interfaces.

Everything works so far, except that both firewalls become CARP master for the VLAN interfaces only. There are no alerts on the interfaces. Any idea on this issue?

The issue is that vlan hardware tagging has to be disabled on the nic for suricata to be able to pass the vlan tags in inline mode.

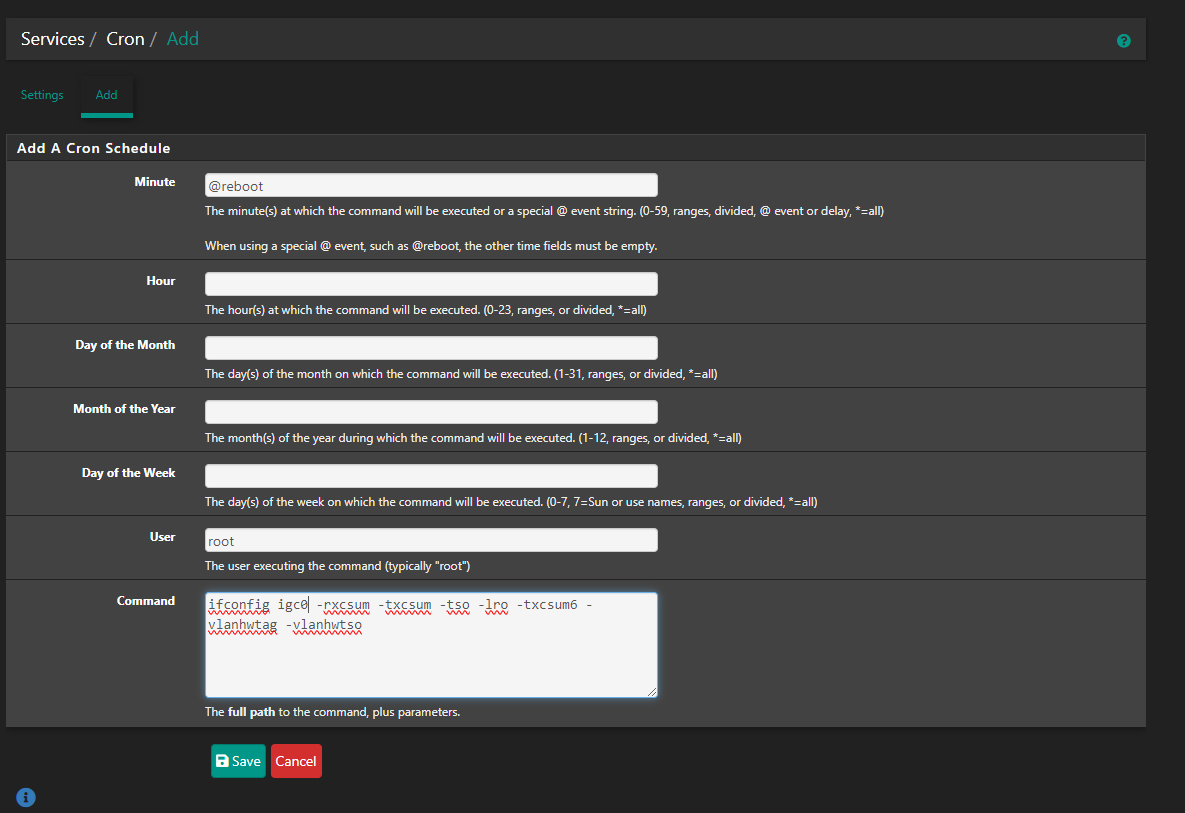

In this case it was interface igc0, so I entered the command below in a shell on both firewalls, and traffic and CARP were instantly happy. Is there any way to set this as a system tunable?

ifconfig igc0 -vlanhwtag
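To confirm the change actually took effect (for example after a reboot), one option is to check the interface's `options=` field, where FreeBSD's `ifconfig` lists the enabled capabilities. The following is a minimal Python sketch under that assumption; the function names, the watched-flag list, and the sample text are illustrative and were not captured from the firewalls in this thread.

```python
import re

# Capabilities discussed in this thread: VLAN hardware tagging (inline mode
# on a VLAN parent) and checksum offloading.
WATCHED_FLAGS = ("VLAN_HWTAGGING", "RXCSUM", "TXCSUM")

def enabled_options(ifconfig_output: str) -> set:
    """Return the flag names from the interface's options=<...> line.

    Anchored to the start of a line so the separate "nd6 options=" line
    is not picked up by mistake.
    """
    match = re.search(r"^[ \t]*options=[0-9a-fA-F]+<([^>]*)>",
                      ifconfig_output, re.MULTILINE)
    if match is None:
        return set()
    return {flag for flag in match.group(1).split(",") if flag}

def still_enabled(ifconfig_output: str, flags=WATCHED_FLAGS) -> list:
    """List which of the watched offload flags are still switched on."""
    enabled = enabled_options(ifconfig_output)
    return sorted(flag for flag in flags if flag in enabled)

# Illustrative text only; on the firewall you would capture the output of
# `ifconfig igc0` instead (e.g. with subprocess.run).
sample = (
    "igc0: flags=8963<UP,BROADCAST,RUNNING,PROMISC,SIMPLEX,MULTICAST> metric 0 mtu 1500\n"
    "\toptions=4e0072b<RXCSUM,TXCSUM,VLAN_MTU,VLAN_HWCSUM>\n"
    "\tnd6 options=29<PERFORMNUD,IFDISABLED,AUTO_LINKLOCAL>\n"
)
print(still_enabled(sample))  # ['RXCSUM', 'TXCSUM']
```

Here VLAN_HWTAGGING is absent, which is the state inline mode on the VLAN parent needs; RXCSUM/TXCSUM still being listed would flag checksum offloading as enabled.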

@stephenw10 Do you know if we can disable VLAN hardware tagging on a NIC through a system tunable or loader setting, or is it something that could be added in the next release?

It is needed for Suricata to work in inline mode on a parent interface with VLANs. VLAN traffic will not work without it.

-

@btspce said in Suricata blocking IPs on passlist, legacy mode blocking both:

The issue is that vlan hardware tagging has to be disabled on the nic for suricata to be able to pass the vlan tags in inline mode.

In this case it was interface igc0 so I entered the below command in a shell on both firewalls and traffic and carp was instantly happy. Is there any way to set this as a system tunable ?

ifconfig igc0 -vlanhwtag

Not as a system tunable, but, along the lines of @kiokoman's suggestion, you can use the earlyshellcmd option to do this at bootup. I formerly did this to make DHCP work properly with my fiber-to-the-home connection, as the ISP's device used VLAN 0 and previous pfSense versions would not see that tag unless you turned off hardware VLAN tagging.

Here is a link describing the process: https://docs.netgate.com/pfsense/en/latest/development/boot-commands.html. You can install the Shellcmd package to make this an easy GUI task. You can configure early shell commands to execute shortly after the firewall boots to turn off (or on) any special NIC hardware features.

Be advised that Inline IPS Mode and VLANs are not exactly best friends, but they do work okay so long as the Suricata instance is running on the parent physical interface. The package now sort of does that behind the scenes when it detects that the interface is a VLAN: it configures Suricata to run on the parent physical interface in promiscuous mode. This VLAN limitation is due to the netmap kernel device, not something Suricata has control over. The current workaround is to use the physical parent interface.

-

@kiokoman @bmeeks Thanks both of you!

I know Suricata was not really made for running traffic with VLAN tags, so this is more of a nice-to-have on these VLANs. All important/production traffic already had its own interface, as we were planning on moving back to Suricata inline once it became stable enough, so that made this easier. If there is any instability, this Suricata instance will be disabled.

I'm having this problem as well. Suricata is on the WAN interface operating in Legacy Mode (pfSense 23.09.1, Suricata 7.0.2_3, custom Supermicro C2758 hardware, IGB interfaces), and as soon as Suricata is enabled the public IP on the WAN is instantly blocked. I have four additional CARP interfaces that get blocked as well. The CARP interfaces aren't 'real' failovers, but are used due to the goofy AT&T fiber setup. At any rate, the WAN interface is not a CARP interface and it is getting blocked too. I've checked the pass list file in the Suricata config folder and the correct IPs/networks are showing up. The suricata.log claims these IP addresses are being added to the pass list. I've tried a custom pass list, I've disabled hardware offloading for the interface, and I've verified there aren't multiple instances of Suricata running. Any advice?

-

@eldog Try moving it to LAN. That also has the advantages of alerting on LAN IPs instead of the NATted WAN, and also not bothering to scan any inbound traffic that will immediately be blocked by the pfSense firewall.

-

I might try that, but I actually prefer it on the WAN. There it can generically block IPs that are poking around and up to no good even if the traffic would never have reached my network.

-

Finally got some additional blocks on the other interfaces, and I can confirm my issue is now resolved. Disabling hardware checksum offloading did the trick, as unlikely or inexplicable a solution as that may be. All my interfaces have now had alerts that blocked only the external IP; the IP listed in the default pass list was not blocked. I'm also no longer seeing IPs deleted from the default pass list, as happened with the earlier interface flapping. The suricata.log on the various interfaces just shows the IPs being added, with no deletions.

Even though it may not make sense, hopefully this solution will help some others that come across it.

-

@sgnoc said in Suricata blocking IPs on passlist, legacy mode blocking both:

Finally got some additional blocks on the other interfaces, and I can confirm my issue is now resolved. Disabling the hardware checksum offloading did the trick, as unlikely or inexplicable as a solution as it may be. All my interfaces have now has alerts that only blocked the external IP. The IP listed in the default pass list was not blocked. Also, not seeing the deleted IPs from the default pass list from previous interface flapping issues. The suricata.log on the various interfaces is just showing adding the IPs and no deletions.

Even though it may not make sense, hopefully this solution will help some others that come across it.

Yes, we can take the win even if we don't fully understand why it works.

I'm still thinking it was the rapid interface IP deletions and additions that were at the root of the unwanted blocking problem. Maybe the hardware checksum setting was interfering with some other part of Suricata's code (not the custom blocking module), and that was causing resets of the interface, which in turn produced the long sequence of deleting and re-adding the interface IP addresses.

One thing I have not cross-checked is whether the number of IP deletion/addition sequences equals the number of worker threads spawned by Suricata. You have a lot of interfaces (with the VPNs and CARP), so there is a lot to digest. I haven't checked this myself, but I'm curious whether, say, the WAN IP (to use just one interface as an example) was deleted and added back a total of 4 times during startup; if I recall correctly, Suricata spawned 4 worker threads in your setup. I wonder if each worker thread, when it launched and started a PCAP process on the interface, caused the interface to be "cycled" by the kernel.
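That cross-check could be scripted along the following lines. This is a minimal Python sketch, and the keywords `deleted`/`added` and the sample lines are placeholders in a made-up format (an assumption, not Suricata's actual log wording); they would need to be adjusted to the messages your suricata.log prints for pass-list changes, and the worker thread count taken from your own startup output.

```python
import re

def count_bounces(log_lines, ip, delete_word="deleted", add_word="added"):
    """Return (deletions, additions) of `ip` seen in the given log lines.

    delete_word/add_word are placeholder keywords (an assumption): set them
    to the exact wording your suricata.log uses for pass-list changes.
    """
    # Match the address as a whole token so 203.0.113.5 != 203.0.113.50.
    ip_re = re.compile(r"(?<![\d.])" + re.escape(ip) + r"(?!\d)")
    deletions = additions = 0
    for line in log_lines:
        if not ip_re.search(line):
            continue
        text = line.lower()
        if delete_word.lower() in text:
            deletions += 1
        elif add_word.lower() in text:
            additions += 1
    return deletions, additions

# Made-up lines in a hypothetical format, standing in for a real log excerpt.
sample_log = [
    "deleted 203.0.113.5 from pass list",
    "added 203.0.113.5 to pass list",
] * 4 + ["added 203.0.113.50 to pass list"]

worker_threads = 4  # hypothetical; read this from your own startup output
deletions, additions = count_bounces(sample_log, "203.0.113.5")
print(deletions, additions, deletions == additions == worker_threads)  # 4 4 True
```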

Later Edit: I went back and looked through the suricata.log file that @sgnoc posted earlier in this thread to check out my new hypothesis, and I think it is correct. Here is the whole story as I now see it. My theory appears to jibe with the log evidence, and it also explains why disabling hardware offloading "fixed" the problem for @sgnoc.

Around 6 months ago, Suricata upstream made some changes to the PCAP module of Suricata, and those changes were released in Suricata 7.x. They added some system ioctl() calls to disable certain hardware offloading if it was found to be enabled when running with the PCAP packet capture method. Legacy Blocking Mode in Suricata on pfSense uses PCAP for acquisition. The system call to disable hardware offloading happens thread-by-thread and interface-by-interface as Suricata starts up, so the more interfaces and worker threads you have, the more pronounced this behavior will be. Worker thread count is controlled by the number of CPU cores. The ioctl() system call apparently caused the kernel to delete and then add back the IP addresses on the interface whose hardware offloading was being disabled. This is likely due to the kernel "resetting" that interface's capabilities as requested. Since these changes were made in Suricata's native code and not in the custom pfSense blocking module, I was not tracking them and was unaware of them. This is why I was initially puzzled that turning off hardware offloading "fixed" the problem.

To see if my theory was correct, I just went back and counted the number of worker threads launched by Suricata on @sgnoc's machine and compared that count to the number of times his WAN IP was deleted and added back on the ix0 interface. Both numbers were "4": four threads were launched, and his WAN IP was deleted and added back four separate times during Suricata startup.

The new Suricata changes first test whether offloading is already disabled, and if it is, no ioctl() system call is made, so the interface does not get reset to change its capabilities. The bounce only happens if offloading is found to be enabled, because then the Suricata PCAP module makes a system ioctl() call attempting to disable the offloading.
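The check-first behaviour and the 4-threads/4-bounces count can be illustrated with a toy Python model. Everything in it is an assumption: `ToyInterface` and `start_workers` are hypothetical names, not Suricata or FreeBSD code, and the model assumes every worker thread checks the offload setting during startup before any disable request has taken effect (one ordering consistent with four threads producing four bounces). It only shows why pre-disabling offloading leaves nothing for the threads to change.

```python
from dataclasses import dataclass

@dataclass
class ToyInterface:
    """Toy stand-in for one interface. NOT Suricata or FreeBSD code."""
    offload_enabled: bool
    bounces: int = 0  # times the IPs were deleted and re-added

    def disable_offload(self) -> None:
        # Assumption from the thread: changing the capability makes the kernel
        # delete and re-add the interface addresses, i.e. one "bounce".
        self.bounces += 1
        self.offload_enabled = False

def start_workers(iface: ToyInterface, worker_count: int) -> int:
    """Model each worker thread's check-then-disable step at startup."""
    # Assumption: all workers read the setting before any disable request has
    # taken effect, so every worker sees the same starting state.
    observed = [iface.offload_enabled for _ in range(worker_count)]
    for saw_enabled in observed:
        if saw_enabled:              # offloading found enabled ...
            iface.disable_offload()  # ... so an ioctl()-like request is made
        # found disabled: no request is made, so no bounce
    return iface.bounces

print(start_workers(ToyInterface(offload_enabled=True), worker_count=4))   # 4
print(start_workers(ToyInterface(offload_enabled=False), worker_count=4))  # 0
```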


-

@bmeeks That all makes a lot of sense given what I was seeing on my end. The only thing that doesn't make sense to me is why I was still getting internal blocking from alerts after the interface flapping stopped. Your explanation of the flapping made sense, with threads catching alerts between the deletion and the re-addition, thus blocking the internal IP in between those actions.

That part makes sense, but what I don't understand is why the blocks continued once the interfaces finished starting and the flapping ceased. I would have thought that the IPs would then be added back and stable, and the internal IPs would no longer be blocked for future alerts, especially since there are no more deletions in the suricata.log after the initial interface startup.

The only thing I can guess, with my limited understanding of the behind-the-scenes interactions you're describing, is that maybe there is some kind of compatibility issue between the hardware and the way the ioctl() calls disable hardware offloading? There must be some reason why the alerts continued to block internal IPs.

I know it doesn't matter much since this solution seems to have fixed everything, but it is still curious to me. It made it more of a challenge too because restarting the system caused the WAN to start working, and then internal interfaces to start blocking internal IPs. Either way, Suricata and the behind the scenes interaction with pfSense definitely do not like hardware offloading!

I'm glad you were able to make some sense of this. Thanks again for your time working with me previously. Happy New Year everyone!

-

@sgnoc said in Suricata blocking IPs on passlist, legacy mode blocking both:

The only thing that doesn't make sense to me is why I was still getting internal blocking from alerts after the interface flapping stopped.

Still puzzled about that myself. The only explanation I currently have (and it's really just a guess) is that the rapid sequence of deletions and additions upfront somehow corrupted or did something to the internal Radix Tree.

The Radix Tree I keep mentioning is a construct provided in the native Suricata binary source code. It is a way to store IP addresses and/or netblocks and then later quickly search through them to find matches. It allows you to test a /32 or /128 IP address by searching for a "best match" in the Radix Tree. The Radix Tree then attempts to find either the specific matching address if it's in the tree, or the netblock that contains that IP address. This is the logic used by the custom blocking module's pass list code. The "best match search" in the Radix Tree returns a value indicating whether the IP was found in the tree or not. Any block by the custom blocking module then flows from that determination.
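To make the "best match" idea concrete, here is a simplified Python sketch of a binary (radix-2) trie doing longest-prefix matching over pass-list entries. It is not Suricata's radix tree, which is written in C, compresses paths, and has its own API; the class and method names here are hypothetical. The last lookup shows what a pass-list check would return during the window after an interface IP has been deleted and before it is added back.

```python
import ipaddress

class PassListTrie:
    """Simplified binary trie for longest-prefix ("best match") lookups.

    Each level consumes one address bit; a node holding the key "net" marks
    the end of a stored prefix. Separate roots keep IPv4 and IPv6 apart.
    """

    def __init__(self):
        self._roots = {4: {}, 6: {}}

    @staticmethod
    def _bits(value: int, width: int, count: int):
        # Yield the first `count` bits of `value`, most significant first.
        for i in range(count):
            yield (value >> (width - 1 - i)) & 1

    def _walk(self, net, create: bool):
        node = self._roots[net.version]
        for bit in self._bits(int(net.network_address), net.max_prefixlen, net.prefixlen):
            if create:
                node = node.setdefault(bit, {})
            else:
                node = node.get(bit)
                if node is None:
                    return None
        return node

    def add(self, cidr: str) -> None:
        net = ipaddress.ip_network(cidr, strict=False)
        self._walk(net, create=True)["net"] = net

    def remove(self, cidr: str) -> bool:
        net = ipaddress.ip_network(cidr, strict=False)
        node = self._walk(net, create=False)
        # Only the end-of-prefix marker is dropped; empty branches are kept.
        return node is not None and node.pop("net", None) is not None

    def best_match(self, ip: str):
        """Return the most specific stored network containing ip, or None."""
        addr = ipaddress.ip_address(ip)
        node = self._roots[addr.version]
        best = node.get("net")
        for bit in self._bits(int(addr), addr.max_prefixlen, addr.max_prefixlen):
            node = node.get(bit)
            if node is None:
                break
            best = node.get("net", best)
        return best

trie = PassListTrie()
for cidr in ("192.168.1.0/24", "192.168.1.1/32", "203.0.113.5/32", "2001:db8::/32"):
    trie.add(cidr)

print(trie.best_match("192.168.1.77"))  # 192.168.1.0/24
print(trie.best_match("192.168.1.1"))   # 192.168.1.1/32
print(trie.best_match("198.51.100.7"))  # None
trie.remove("203.0.113.5/32")           # simulate the interface IP being deleted
print(trie.best_match("203.0.113.5"))   # None -> an alert now would lead to a block
```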

The Radix Tree code has function calls to add, delete, and search for IP addresses or netblocks. All of those are used by the custom blocking module. I also implemented read/write locking in my custom blocking module so that the Radix Tree is locked when being written to. That happens when adding or removing an IP. When a thread is simply searching the tree, a read lock is taken to prevent another thread (most often the interface monitoring thread) from modifying the tree until the reader is finished. Thus the Radix Tree should be protected from a situation where one thread is searching the tree while another thread is simultaneously adding or removing an IP.
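Here is a minimal Python sketch of the read/write locking pattern described above, assuming a writer-preferring lock built on `threading.Condition` (the standard library has no readers-writer lock). `ReadWriteLock` and `GuardedPassList` are hypothetical names, and a plain set of networks stands in for the Radix Tree; the real module is C code with its own locking primitives.

```python
import ipaddress
import threading
from contextlib import contextmanager

class ReadWriteLock:
    """Many concurrent readers or one writer; waiting writers go first."""

    def __init__(self):
        self._cond = threading.Condition()
        self._readers = 0
        self._writer = False
        self._waiting_writers = 0

    @contextmanager
    def read(self):
        with self._cond:
            while self._writer or self._waiting_writers:
                self._cond.wait()
            self._readers += 1
        try:
            yield
        finally:
            with self._cond:
                self._readers -= 1
                if self._readers == 0:
                    self._cond.notify_all()  # wake a waiting writer

    @contextmanager
    def write(self):
        with self._cond:
            self._waiting_writers += 1
            while self._writer or self._readers:
                self._cond.wait()
            self._waiting_writers -= 1
            self._writer = True
        try:
            yield
        finally:
            with self._cond:
                self._writer = False
                self._cond.notify_all()

class GuardedPassList:
    def __init__(self, cidrs):
        self._lock = ReadWriteLock()
        self._nets = {ipaddress.ip_network(c, strict=False) for c in cidrs}

    def contains(self, ip: str) -> bool:
        addr = ipaddress.ip_address(ip)
        with self._lock.read():  # searching: shared lock
            return any(addr in net for net in self._nets)

    def bounce(self, cidr: str) -> None:
        """Delete and re-add a prefix inside ONE write-locked section."""
        net = ipaddress.ip_network(cidr, strict=False)
        with self._lock.write():  # modifying: exclusive lock
            self._nets.discard(net)
            self._nets.add(net)

pl = GuardedPassList(["192.168.1.0/24", "203.0.113.5/32"])
misses = []

def worker():  # plays the role of an alerting worker thread
    for _ in range(2000):
        if not pl.contains("203.0.113.5"):
            misses.append(1)

def monitor():  # plays the role of the interface monitoring thread
    for _ in range(200):
        pl.bounce("203.0.113.5/32")

threads = [threading.Thread(target=worker) for _ in range(4)]
threads.append(threading.Thread(target=monitor))
for t in threads:
    t.start()
for t in threads:
    t.join()
print(len(misses))  # 0
```

Because the delete and re-add in this sketch happen inside a single write-locked section, readers can never observe the gap. If the kernel reports the deletion and the addition as separate events, each would presumably be applied under its own write lock, so a lookup landing between them could still miss: the lock protects the tree's structure, not that logical window.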

If the search logic in the Radix Tree were faulty, you would expect every single search attempt to fail, and most likely fail the same way every time. You would not expect a search to succeed 80% of the time and fail 20% of the time. That's the most puzzling part.

-

FYI, I removed the CARP interfaces from my configuration and the problem went away, even on non-CARP addresses. I have a separate pfSense installation on almost identical hardware that does not use CARP, and I have never had a problem with it. That seems to point the finger at CARP generically.

-
