FRR seeing IPsec tunnels disappearing

-

I have an IPsec VTI deployment with FRR (BGP) running.

Everything works as expected, but I have been noticing that all my IPsec tunnels that have BGP running fail at the exact same time.

- These are tunnels to diverse locations.

- Gateway monitoring is healthy, so I don't believe it's a local issue.

- As an experiment I switched one IPsec tunnel to WireGuard, and when there is a routing flap only the IPsec tunnels are impacted, so I now believe it's something specific to IPsec.

Combing through the system logs, something stuck out:

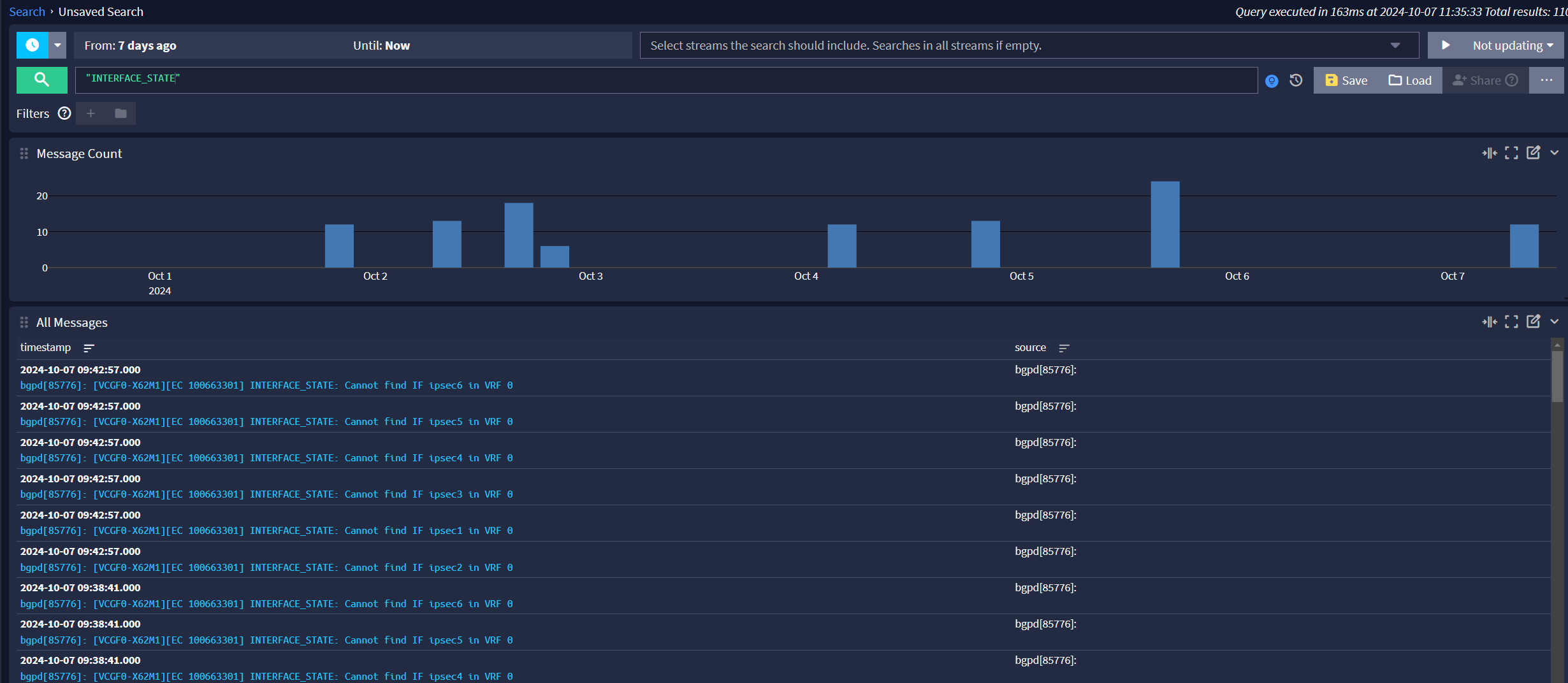

[VCGF0-X62M1][EC 100663301] INTERFACE_STATE: Cannot find IF ipsec5 in VRF 0

[VCGF0-X62M1][EC 100663301] INTERFACE_STATE: Cannot find IF ipsec4 in VRF 0

[VCGF0-X62M1][EC 100663301] INTERFACE_STATE: Cannot find IF ipsec3 in VRF 0

There is no specific timing that I can see (assuming there's some scheduled clean-up job).

I have a suspicion that IPsec (charon) is adjusting, re-applying, or removing and re-adding the IPsec configuration, as this seems to relate to Redmine 14483. I would need additional assistance in troubleshooting, but I think the two are closely related. The only difference is that the Redmine issue is reproducible by simply making ANY change in IPsec (e.g. updating a description), which causes a traffic outage for all IPsec tunnels, whereas this requires no administrator involvement, so I'm not sure how to reproduce it.

If so, it makes sense why WireGuard tunnels with FRR are never impacted.

edit: The IPsec implementation is deeply broken on some level, but I'm surprised it was never noticed until I opened the Redmine. These types of issues shouldn't be possible, and it is scary that today's issue requires no change in the IPsec configuration to trigger the outage.

-

Nothing is logged at that time in the IPSec or System logs?

If the interfaces really do appear to go AWOL to FRR I'd expect to see something. And for the interfaces to be removed entirely it would have to be something significant, like IPSec restarting.

-

@stephenw10

Nothing is sticking out in the IPsec logs. What should I change in the logging to see more? There are a lot of options, but I'm not sure which ones would be relevant.

-

Anything that would cause the interface to disappear should be shown by the default IPSec log settings.

I'd expect it to be in the system log too. Nothing else in the routing log either? Just FRR suddenly unable to find the interfaces?

-

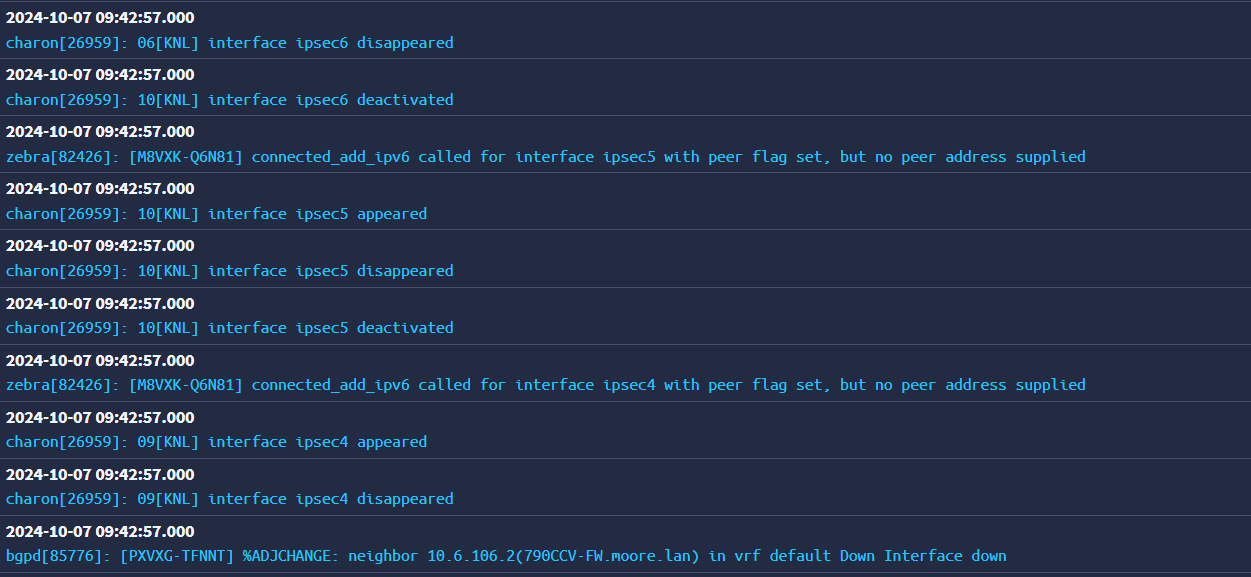

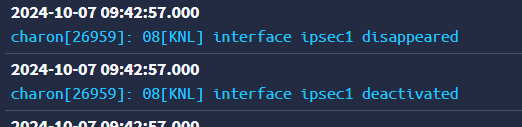

IPsec acknowledges that the interface went away.

It's even saying that it disappeared.

Deactivated and Disappeared are red flags to me.

-

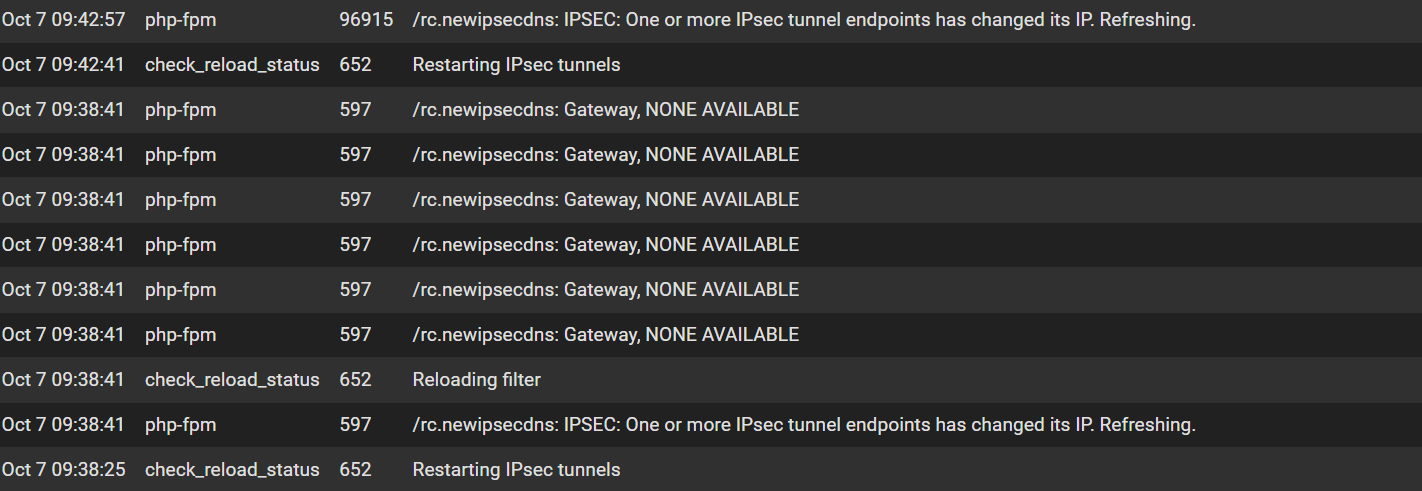

I think I found the restart event with its cause.

ipsecdns

edit: Oh man... so I do have a few IPsec tunnels using the DNS name of a remote gateway instead of an IPv4 address. My theory is that the IP changes, the ipsecdns process picks it up, and restarts all tunnels. I really hope that's not the case, but if so that's really bad.

-

Mmm, indeed. IIRC there's something specific about the way FRR interacts with it there.

Looks like it may be related to this:

https://redmine.pfsense.org/issues/10503

Though in your case no gateway actually goes down?

-

@stephenw10 No gateways go down.

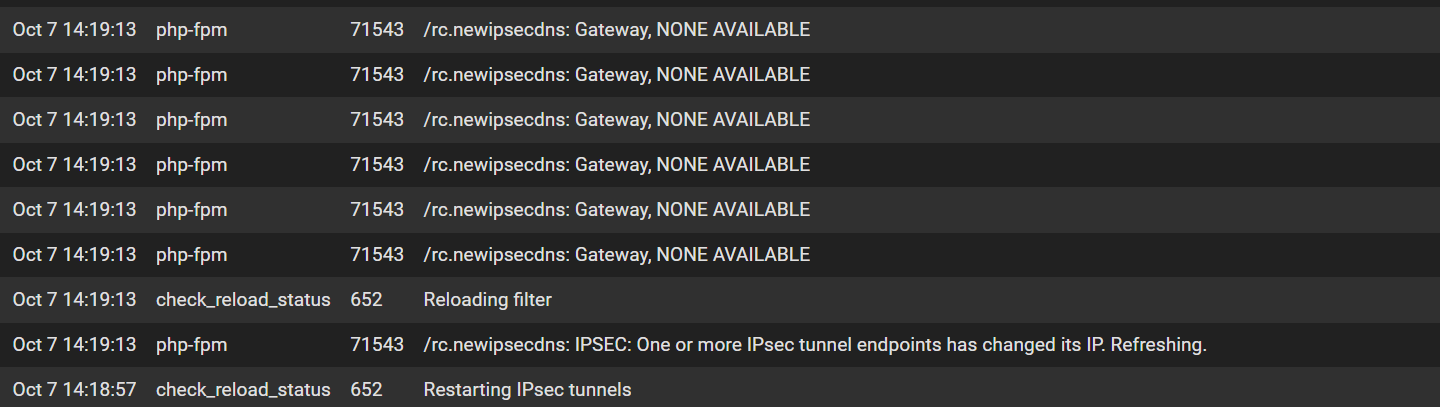

The incident happened just now, and what's good is that I now know what to look for.

Is there a way to find out which gateway is changing its IP?

Also, should I open a Redmine?

-

Hmm, if it's actually a gateway I'd expect to see that logged there and in the gateways log.

If it's just a remote IPSec node that changed IP that's probably in the resolver log.

-

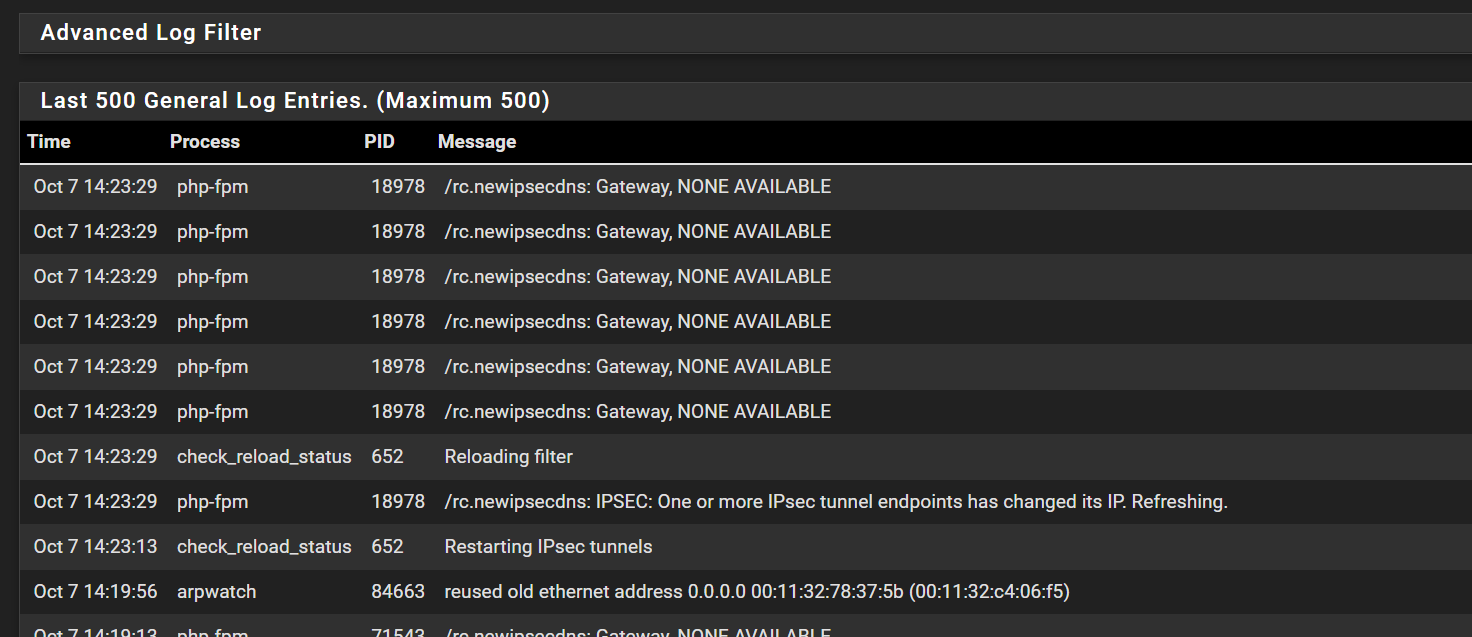

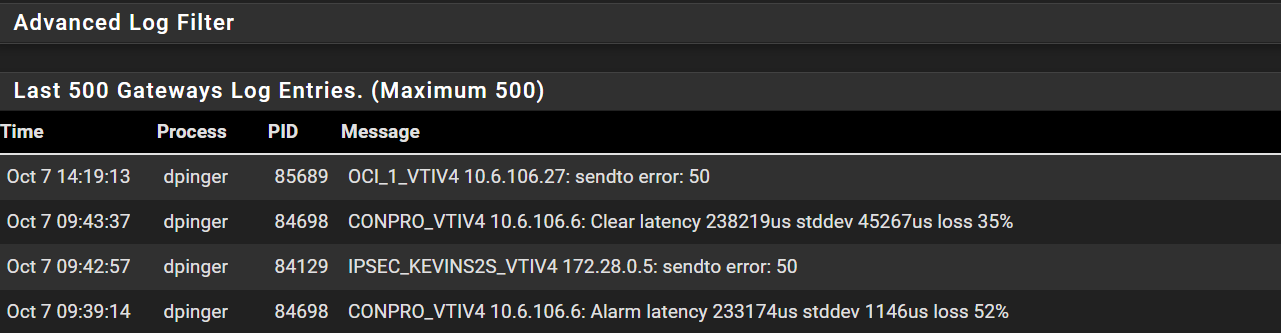

The only entries in the gateway log are the following. There is packet loss, but nothing showing a complete loss. Considering all VPN tunnels bounce, these error messages make sense.

The resolver log shows nothing useful. I see pfSense checking the local cache for DNS, but I don't see any related errors.

-

Hmm, it does seem to have triggered something at 14:19:13 though. Was there anything in the system log leading up to that? I can just about see there was a newipsecdns call then.

-

@stephenw10 Yep a restart event

-

Hmm, so in both cases the first thing logged is 'Restarting IPsec tunnels' ?

That would normally be triggered by something else. Were any tunnels being renewed at that point?

-

@stephenw10 That is correct, that is the first thing logged.

-

Is it possible that coincided with the renew time for the tunnel using an FQDN remote endpoint?

-

I believe it does, for both incidents, although the change of IP and the restart are a few minutes apart, so it doesn't seem to occur right away.

Incident one. Time of restart event was around 09:38

./pfblockerng/dns_reply.log:DNS-reply,Oct 7 09:32:25,resolver,A,A,300,vpn.server4u.in,127.0.0.1,124.123.66.69,IN

./pfblockerng/dns_reply.log:DNS-reply,Oct 7 09:37:25,resolver,A,A,300,vpn.server4u.in,127.0.0.1,103.127.188.125,IN

./pfblockerng/dns_reply.log:DNS-reply,Oct 7 09:42:41,resolver,A,A,300,vpn.server4u.in,127.0.0.1,124.123.66.69,IN

Incident two: 14:18

./dns_reply.log:DNS-reply,Oct 7 14:14:03,resolver,A,A,300,vpn.networkzz.co.in,127.0.0.1,210.89.55.63,IN <---

./dns_reply.log:DNS-reply,Oct 7 14:18:33,resolver,A,A,300,vpn.networkzz.co.in,127.0.0.1,202.88.209.151,IN

I'm happy that we found something that is reproducible.
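For anyone wanting to line up DNS answer changes against the restart times, here is a minimal Python sketch that scans dns_reply.log lines for a hostname whose answer differs from the previous one. It assumes the comma-separated layout seen in the lines above (timestamp in the second field, hostname in the seventh, answer in the ninth); the function name `find_ip_changes` is made up for illustration and is not part of pfBlockerNG.

```python
def find_ip_changes(lines):
    """Return (timestamp, hostname, old_ip, new_ip) each time a hostname's answer changes."""
    last_seen = {}  # hostname -> most recent answer
    changes = []
    for line in lines:
        fields = line.strip().split(",")
        # Skip anything that is not a DNS-reply record in the assumed layout.
        if len(fields) < 10 or not fields[0].endswith("DNS-reply"):
            continue
        timestamp, hostname, answer = fields[1], fields[6], fields[8]
        previous = last_seen.get(hostname)
        if previous is not None and previous != answer:
            changes.append((timestamp, hostname, previous, answer))
        last_seen[hostname] = answer
    return changes


# The three lines from incident one, as posted above.
sample = [
    "./pfblockerng/dns_reply.log:DNS-reply,Oct 7 09:32:25,resolver,A,A,300,vpn.server4u.in,127.0.0.1,124.123.66.69,IN",
    "./pfblockerng/dns_reply.log:DNS-reply,Oct 7 09:37:25,resolver,A,A,300,vpn.server4u.in,127.0.0.1,103.127.188.125,IN",
    "./pfblockerng/dns_reply.log:DNS-reply,Oct 7 09:42:41,resolver,A,A,300,vpn.server4u.in,127.0.0.1,124.123.66.69,IN",
]

for change in find_ip_changes(sample):
    print(change)
```

On the incident-one lines this reports two changes, at 09:37:25 and 09:42:41, which brackets the restart seen around 09:38.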

-

Hmm, OK so did those endpoints actually change? Are they FQDNs that resolve to several IPs?

I'd guess there is some timeout there that has to add up over those 4 mins.

Either way I agree it should not affect all IPSec tunnels.

-

@stephenw10 said in FRR seeing IPsec tunnels disappearing:

Hmm, OK so did those endpoints actually change? Are they FQDNs that resolve to several IPs?

Yep, those endpoints do resolve to several IPs. One of those I know for sure because I remember setting it up recently.

I did open a Redmine for it for tracking purposes. I don't think there is any workaround for this other than getting into the weeds of how IPsec is configured/built.

-

Hmm, do they all resolve to several IPs? Conversely, do you have any that only resolve to one IP and that don't cause this?

Like, is this being triggered because it's resolving to a different IP address every time, or just because it is re-resolving at all?
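One way to tell those two cases apart is to resolve each remote FQDN a few times and see whether the answer set changes between lookups. The following is a rough Python sketch under that assumption; `observe_answers` and `summarize` are hypothetical helper names, and the demo uses a stub resolver with the two addresses from the log above so it runs without network access. Dropping the `resolve=` argument uses the system resolver via `socket.getaddrinfo`, which may return cached answers.

```python
import socket
import time


def resolve_ipv4(hostname):
    """Resolve hostname to a sorted list of IPv4 addresses via the system resolver."""
    infos = socket.getaddrinfo(hostname, None, family=socket.AF_INET,
                               type=socket.SOCK_STREAM)
    return sorted({info[4][0] for info in infos})


def observe_answers(hostname, attempts=3, delay=0.0, resolve=resolve_ipv4):
    """Resolve hostname `attempts` times, `delay` seconds apart; return each answer set."""
    observations = []
    for i in range(attempts):
        observations.append(tuple(resolve(hostname)))
        if i < attempts - 1:
            time.sleep(delay)
    return observations


def summarize(observations):
    """Report whether the answers differed between lookups and every IP seen."""
    unique_ips = sorted({ip for obs in observations for ip in obs})
    return {
        "answers_changed": len(set(observations)) > 1,
        "unique_ips": unique_ips,
    }


# Demo with a stub resolver that alternates between two addresses,
# mimicking an FQDN that hands out a different IP on successive lookups.
_fake_answers = iter([["124.123.66.69"], ["103.127.188.125"], ["124.123.66.69"]])
demo = observe_answers("vpn.server4u.in", attempts=3,
                       resolve=lambda name: next(_fake_answers))
print(summarize(demo))
```

An FQDN for which `answers_changed` stays false over a long enough window, yet still coincides with restarts, would point at the re-resolution itself rather than the changing address.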