6100 Failed eMMC replaced with NVme but now no longer reboots

-

Hello,

So far this year I have had two 6100 storage fail and have been trying to get the units running again with a NVMe drive.

The unit detects the drive, installs the OS fine but then when it comes to rebooting ether after a software update or from the WebGUI/CLI the unit just hangs.

The console output shows this when you reboot:

System is going to be upgraded. Rebooting now. Waiting (max 60 seconds) for system process `vnlru' to stop... done Waiting (max 60 seconds) for system process `syncer' to stop... Syncing disks, vnodes remaining... 0 0 0 0 0 done All buffers synced. Uptime: 1m23s uhub0: detachedThis is happening on both units using a variety of different drives.

If you power cycle the unit, it will boot normally and be fine, until the next reboot/update.

Anyone run into this and have a fix ?

Thanks

-

Unfortunately that looks like: https://redmine.pfsense.org/issues/15110

What drives did you try?

Did you test them in both m.2 slots?

Steve

-

Hello Steve

We tried both a second hand Intel Optane 16GB M10 and Kioxia 256GB NVME as these all we had with ether B or M+B keyed.

Both of these boot and don't reboot.

Also got a new Transcend 128GB M+B Key which doesn't get detected at all.

The errors do seem exactly like in that ticket.

To me it feels like the device is waiting for the eMMC to sync but it never does due to it being corrupted and gets stuck in a loop.

Thanks

-

Hmm, well I've tested those 16G Optane drives and never seen an issue with them.

It still boots fine from a full power cycle though?

Does it ever show anything from the BIOS/blinkboot at reboot?

-

A andrew_cb referenced this topic on

-

Correct, power cycling the unit will make it boot correctly but reboot just shows the output above. I left it for 24 hours just to see if it would eventually restart, but it stays on uhub0: detached

-

Hmm, well that Optane drive really should work. I have two here that I've used in several devices without issue.

Possibly the reboot issue is due to the eMMC as you say. Though it's hard to see why.

Does it show the eMMC present in the boot logs when it does boot?

-

It does on one unit, you can see the eMMC showing up but trying to gpart to delete any partitions causes an a IO/error.

On the other unit the eMMC doesn't show up at all even in the bootmenu and both are acting the same when it comes to rebooting with it just stopping and not restarting the unit. If you Reroot instead of reboot it does restart fine but then this isn't a full restart.

-

Do either halt correctly? With the led switching the slow orange flash?

-

When halting the system the orange light stays on when the output reads:

Netgate pfSense Plus is now shutting down ... net.inet.carp.allow: 0 -> 0 pflog0: promiscuous mode disabled Waiting (max 60 seconds) for system process `vnlru' to stop... done Waiting (max 60 seconds) for system process `syncer' to stop... Syncing disks, vnodes remaining... 0 0 done All buffers synced. Uptime: 1m26s ix3: link state changed to DOWN ix3: link state changed to UP uhub0: detachedThis is similar to when you reboot, the lights depend on when the system reaches uhub0: detached. If they are on then they stay in that state or off.

-

If it halts correctly you should see only the green-circle LED fixed orange.

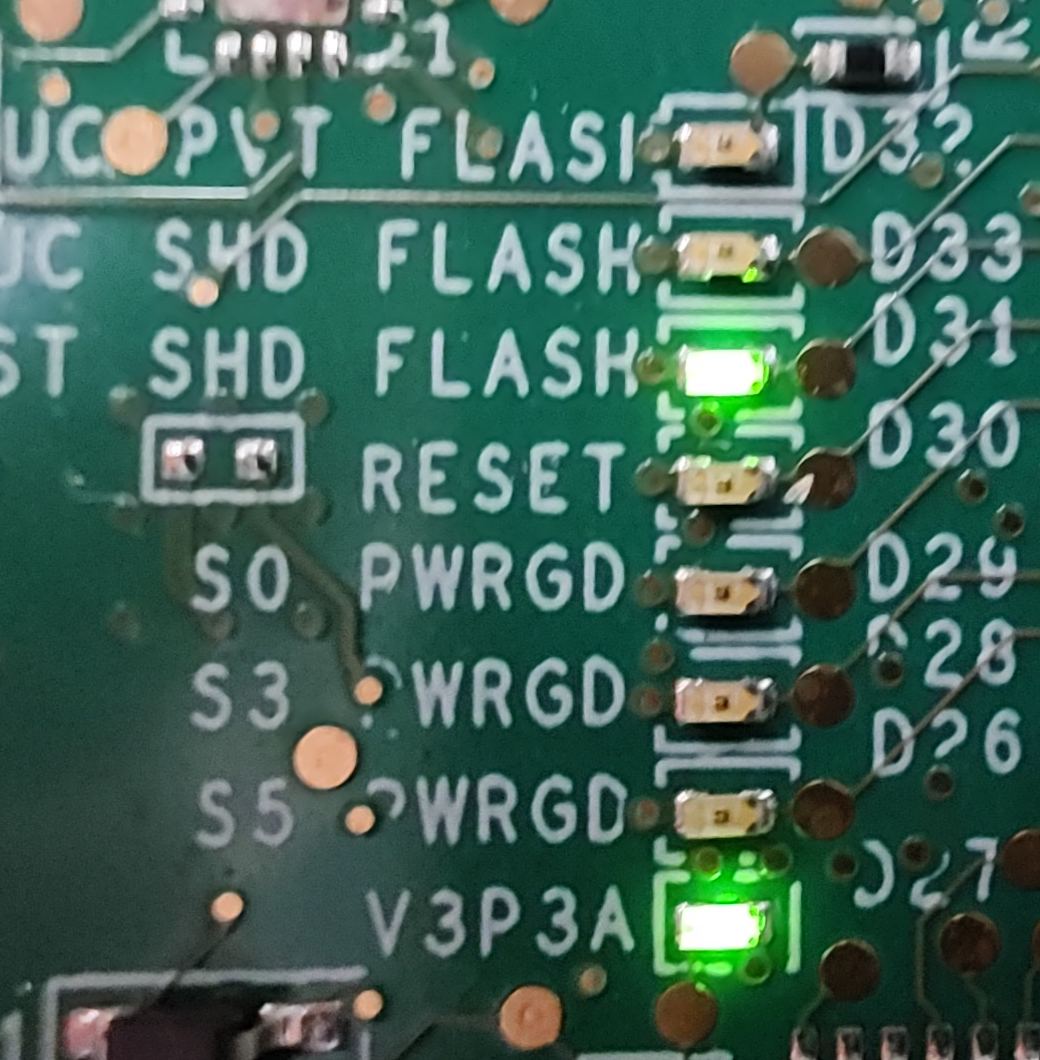

The other thing you can check are the internal diagnostic LEDs on the board next to the SIM slots. After correctly halting they should show only

V3P3AandHOST_SHD_FLASHlit.It seems like it might actually be hanging at shutdown not at boot. Which would explain why it boots fine after a hard reset.

-

Leds on halt are V3PA and HOST_SHD_FLASH

Status LEDS are just a single orange solid and no flashing:

-

Hmm, it is shutting down correctly there then. Or at least appears to be.

Does it boot back from there if you power it up by pressing the PWR button?

-

No activity when trying to restart by pressing the power button. Just stays in the current state.

-

Hmm, that is odd. Momentary press? 1s press? 3s press? All of them?

-

Tried all the sequences of presses, long press did restart the unit but still this isn't ideal to have someone go into a remote server room and do this after each reboot.

One of the units had stopped putting any console output so we went the next drastic step and that is remove the eMMC from the board.

Since doing this, this unit now reboots fine into the external storage and reboots fine so it does seem like the issue is with the eMMC, we are going to repeat this process with the other unit to see if it also solves its rebooting issue.

My only real concern is since doing this almost all our 6100 in the field are reporting there eMMC are at end of life, some units are less than 6 months old and are satellite offices which don't do a lot of traffic. I just hope that we aren't going to have a cascade of failing eMMC drives.

-

If it's less than 6m old that should be in warranty so would be eligible to be replaced.

That's good info on removing the eMMC though.

-

Yup, I have 2 of these now. Both with 'Innodisk' 128GB nVME drives. If we have to physically remove the eMMC...thats not cool. There should be a way to disable it via firmware or something. BIOS maybe, (if an interface to change config exists on these). Issue in general is pretty messed up though...

-

My only real concern is since doing this almost all our 6100 in the field are reporting there eMMC are at end of life, some units are less than 6 months old and are satellite offices which don't do a lot of traffic. I just hope that we aren't going to have a cascade of failing eMMC drives.

Sorry to hear that you are going through this! I am in the same situation - 6 devices with failed eMMC storage and another 10 of 30 that are at or over 100% estimated wear.

It is mind-blowing that you have units failing at less than 6 months - I guess I should consider myself lucky that mine seem to last 18-24 months before they start dying.I also have 2 units with the same no-power on issue. With one, I had just finished installing pfSense to a USB stick and it had booted and I was logged-in, but on the next reboot it just stopped responding completely - no console anymore. I wonder if removing the eMMC would get it working again.

Are you running any packages on the failed units? Most of mine are remote/small office units that just run Zabbix, and yet they have impending eMMC failure.

Check out my thread for more information and discussion about failing storage in Netgate devices.

-

@stbellcom Run these commands in the Command Prompt or console to check the eMMC health of your devices:

pkg install -y mmc-utils; rehashmmc extcsd read /dev/mmcsd0rpmb | egrep 'LIFE|EOL'The Type_A and Type_B wear values are hex values that you need to multiply by 10 to get the wear percentage.

The Pre-EOL value is 0x01 for under 80%, 0x02 for >80%, and 0x03 for >90% consumed reserve blocks.

https://docs.netgate.com/pfsense/en/latest/troubleshooting/disk-lifetime.html

-

A andrew_cb referenced this topic on

-

We are running pfblockerng which is known to cause a lot of writes, but I am running a non-standard setup. Maxmind DB lists are generated externally and are pulled down weekly and most of the logging is disable.

We only use it to maintain geo-blocking lists and no other features.

The unit mentioned above at the remote office has no incoming forwards so doesn't have pfblockerng installed on it. Yet it still says 0x0b. It does have a IPSEC tunnel back to the main office.

The two units that have failed so far are both sites which do the highest traffic. They average around 5TB a month.

Almost all our units are show 0x0b on Type_A and Type_B with only one showing 0x05. For interest's sake I grabbed a brand-new unit out of the box and it did show 0x01 which is what I would expect.