pfSense breaks when I plug a device into an additional interface? (ESXi 5.0)

-

Running ESXi 5.0 Enterprise Plus (legitimately licensed) and pfSense 2.0-RELEASE (amd64) built on Tue Sep 13 17:05:32 EDT 2011.

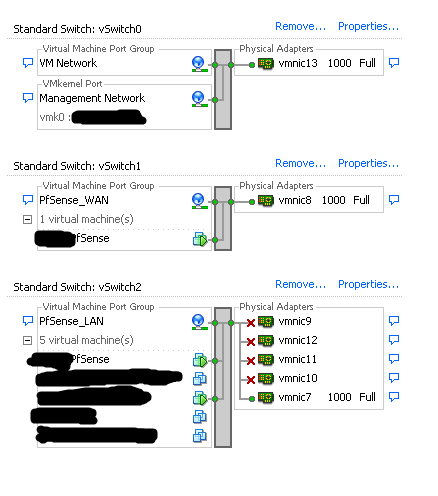

Everything works fine, except that I have an additional (supported) quad-port NIC that I want to attach to a virtual switch. I can add the NICs fine (so there are 5 interfaces total); however, when I plug anything into the additional NICs the whole thing breaks. I was hoping to add interfaces to the vSwitch so that the hypervisor would handle the switch traffic, rather than adding 4 additional vSwitches and 4 additional virtual interfaces to pfSense (which would spike CPU usage).

Hopefully the picture makes it clear. Right now I have vmnic7 plugged into a switch, and that switch handles all the traffic. I would like to eliminate that switch and plug devices (such as a NAS) directly into vmnic9-12. When I plug anything into vmnic9-12, pfSense breaks and the LAN stops working.

What is the best way to configure this? Am I going to need to add virtual interfaces 1:1 in pfSense for each additional physical NIC? Or pass the quad-port card directly through to pfSense?

The switch is a gigabit switch, as are the NICs, and I'm trying to avoid having to upgrade to 10G hardware on this machine. If I can use the additional NICs and get 4 Gbit/s of throughput, I'll be safe for a while.

Thx for help/suggestions.

I played around with it a bit more. I was able to plug my NAS into vmnic7 and my switch into vmnic11 without anything breaking; however, when I plug my laptop into vmnic9 it breaks again. I'm confused: I thought the hypervisor/virtual switch was supposed to handle these interfaces, not pfSense.

-

I ran into a similar problem, and it had to do with the negotiated speed on the device I was plugging into the ESXi vSwitch. I would play with the negotiated speed (maybe change from the default auto-negotiate to 100/1000 Mbps full duplex). It sounds more like something is poisoning your network than pfSense breaking. I have a very similar setup, so if this doesn't help, please feel free to post more here.

-

You're breaking networking at the hypervisor level. Putting multiple NICs on a single vSwitch doesn't turn those physical NICs into one big switch. What it does depends on how you have it configured, but basically it expects that the entire network is reachable via any of those NICs. What is likely happening is that when you plug in one of the others, the vSwitch changes over to using only that NIC.

Short of creating one vSwitch per NIC and bridging them all together on the firewall (which I wouldn't recommend for performance reasons if you expect gigabit wire speed through your internal network), I don't know that you can do exactly what you're looking to accomplish at the ESX level. I've never seen anyone attempt a setup like that; all our ESX boxes and all the customer ones I've worked on connect to significant physical networks. You may have better luck asking on the VMware forum, since this is an issue at the hypervisor level that has no relation to the firewall. I doubt there is an option to accomplish what you're looking to do.
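The behaviour described above can be sketched as a toy model. This is an illustration only, not VMware's implementation: the `forward` function, the `pfsense-lan` port name, and the assumption that the pfSense LAN port is pinned to vmnic7 are all hypothetical. The one rule it encodes is that a frame arriving on a physical uplink is either handed to a VM or dropped, never sent out of another uplink.

```python
def forward(ingress, dst, vm_uplink):
    """Return the port a frame leaves through, or None if it is dropped.

    ingress   -- where the frame enters (an uplink name or a VM port name)
    dst       -- name of the destination host
    vm_uplink -- hypothetical mapping: VM port name -> the uplink it uses
    """
    if ingress in vm_uplink:
        # Frame comes from a VM.
        if dst in vm_uplink:
            return dst                 # VM-to-VM traffic stays inside the vSwitch
        return vm_uplink[ingress]      # everything else leaves via that VM's uplink
    # Frame comes from a physical uplink.
    if dst in vm_uplink:
        return dst                     # delivered to the VM
    return None                        # never forwarded uplink-to-uplink


# Assumption for illustration: the pfSense LAN port currently uses vmnic7.
vm_uplink = {"pfsense-lan": "vmnic7"}

# NAS on vmnic9 talking to a laptop on vmnic10: the frame is dropped.
print(forward("vmnic9", "laptop", vm_uplink))

# pfSense replying to the NAS: the reply leaves on vmnic7, not on vmnic9.
print(forward("pfsense-lan", "nas", vm_uplink))
```

In this model a device cabled straight into vmnic9 can send frames to pfSense but never sees the answers, which would look like the LAN "breaking".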

Looking a bit closer at your expectation that this would spare you an upgrade to 10G by getting you 4G of throughput: that's just not going to happen. NICs are never going to achieve the forwarding performance of a switch. If you put those NICs into a real switch and balance across them, you may get close to that, depending on workload and a variety of other specifics.
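To show why the result depends on workload, here is a minimal sketch of hash-based link selection. It assumes a simple policy that XORs the source and destination IP addresses and takes the result modulo the number of links. The exact hash a given switch or ESX version uses is not stated in this thread, and the `pick_uplink` name and the IP addresses are made up for illustration.

```python
import ipaddress


def pick_uplink(src_ip, dst_ip, uplink_count):
    """Pick a link index by XOR-ing the two addresses, modulo the link count."""
    src = int(ipaddress.ip_address(src_ip))
    dst = int(ipaddress.ip_address(dst_ip))
    return (src ^ dst) % uplink_count


nas = "192.168.1.20"  # hypothetical NAS address
for client in ("192.168.1.10", "192.168.1.11", "192.168.1.12", "192.168.1.13"):
    # Each client/NAS pair always maps to the same one of the 4 links.
    print(client, "->", pick_uplink(client, nas, 4))
```

Under this assumption, any single pair of hosts always lands on one link, so one transfer never goes faster than 1 Gbit/s. The aggregate only approaches 4 Gbit/s when many different pairs happen to spread across all four links.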

-

I'm just speculating here, but could it be that you're getting broadcast storms and switching loops (MAC address table havoc in the switch) when plugging cables from the switch into ports 9-12?
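For anyone unfamiliar with what a switching loop does, here is a toy simulation. It is a generic sketch, not a model of this particular setup: the switch names and the `frames_in_flight` function are invented, and it assumes plain switches that flood a broadcast out of every port except the one it arrived on, with no spanning tree running.

```python
from collections import defaultdict


def frames_in_flight(links, source, hops):
    """Count copies of one broadcast still travelling after each hop."""
    neighbours = defaultdict(set)
    for a, b in links:
        neighbours[a].add(b)
        neighbours[b].add(a)

    # Each in-flight frame is (switch it arrives at, switch it came from).
    in_flight = [(n, source) for n in sorted(neighbours[source])]
    history = [len(in_flight)]
    for _ in range(hops - 1):
        # Flood out of every port except the one the frame arrived on.
        in_flight = [
            (nxt, here)
            for here, came_from in in_flight
            for nxt in sorted(neighbours[here])
            if nxt != came_from
        ]
        history.append(len(in_flight))
    return history


chain = [("sw1", "sw2"), ("sw2", "sw3")]  # no loop
loop = chain + [("sw3", "sw1")]           # one extra cable closes the loop

print(frames_in_flight(chain, "sw1", 5))  # the broadcast dies out
print(frames_in_flight(loop, "sw1", 5))   # the broadcast circulates forever
```

In the looped topology a single broadcast never leaves the network, so every new broadcast adds to the load. A capture on a mirror port would show the same frames repeating endlessly.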

Are you using a smart/managed switch with portchannel/etherchannel (Cisco language) setup?

Could you elaborate on your setup a bit?

Which switch are you using, and how are those switch ports set up (any STP, portfast, or BPDU specifics)?

How are the other vSwitches connected? Anything special?

Any VLANs? Are they trunked into pfSense or chosen in the vSwitch config?

One thing I could recommend is to activate port mirroring, or something equivalent, on your switch (in essence, everything going through the switch also goes to that port), hook up your PC to that port, and check what happens in Wireshark when you connect the cables (if it's your network that fails and not the pfSense machine itself). I've seen some truly wicked things happen while fiddling with a network, things I could not predict but that were obvious once I saw what happened to the traffic.

-

Upon some further research, I found that for every uplink (physical NIC) you add to a virtual switch, ESX expects that they all reach the same network no matter which uplink is used. To use a software switch within ESX and do what you are looking to do, you would have to upgrade to the Cisco Nexus 1000V series switch within ESX, which would allow it to actually work like a physical switch.

-

I accidentally destroyed ESX 4.0 networking on a single host after connecting all 8 available cables (2 x quad-port gigabit network cards) to a single switch.

I did nothing more than plug all the cables into a single switch. It worked on 5 hosts. It didn't work on 1 host.

I don't know why it was responding from one part of the company (ICMP OK) and unreachable from another part (ICMP unreachable).

I think this was a driver failure in ESX, since I was able to get all 8 ports working in LACP mode under a Linux live CD distro.

I had to reinstall, going from ESX to ESXi 4.0; then it worked with all 8 ports in link aggregation mode with IP hash.

Hope this helps someone one day.