HFSC explained - decoupled bandwidth and delay

-

When discussing HFSC, delay is measured as the time between the last bit being received and the last bit being transmitted. This particular type of delay is technically referred to as "transmission delay"; http://en.m.wikipedia.org/wiki/Transmission_delay

Understanding transmission delay is essential to understanding HFSC. It can be calculated using:

D = N ÷ R

D is the delay expressed in seconds (multiply × 1000 for milliseconds)

N is the number of bits (packet size)

R is the bitrate (bits per second)

A connection's bitrate, or the bitrate allocated to a queue, directly affects the length of time (delay) it will take to completely transmit a packet, from the first bit to the last. HFSC does things differently by allowing you to separately define the delay and average bitrate. The wording used in the HFSC paper is "decoupled delay and bandwidth allocation".
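A minimal Python sketch of the formula above, for anyone who wants to plug in their own numbers. The function name is made up for illustration; the two sample calls use the 80byte/640bit packet and the 25kbit/sec and 480kbit/sec rates discussed in this post.

```python
def transmission_delay_ms(packet_bits: int, bitrate_bps: float) -> float:
    """Return D = N / R, converted from seconds to milliseconds."""
    if bitrate_bps <= 0:
        raise ValueError("bitrate must be positive")
    return packet_bits / bitrate_bps * 1000.0


if __name__ == "__main__":
    # An 80-byte (640-bit) packet at 25 kbit/s and at 480 kbit/s.
    print(f"{transmission_delay_ms(640, 25_000):.2f} ms")
    print(f"{transmission_delay_ms(640, 480_000):.2f} ms")
```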

Real-time example:

Usually, you can only allocate bandwidth and delay together. For example, let's say you allocate 25kbit/sec (HFSC m2 param) to NTP's queue (do not underestimate this speed. My observed average over an hour was ~10kbit/sec.). My NTP packets are ~80bytes/640bits in size and are sent every 1-5min. Transmitting at 25kbit/sec, one 80byte/640bit packet would take ~26ms to send (640 / 25000). By only setting m2 you are not decoupling bandwidth and delay. By allocating 25kbit/sec you have doomed the NTP packets to a 26ms minimum delay. You could over-allocate more bandwidth to improve delay, but this is inefficient and wastes precious bandwidth. This is the drawback of the coupling of bandwidth with delay allocation.

By configuring the m1 and d parameters you can decouple bandwidth and delay. The m1 parameter defines the initial bitrate (which also determines delay, as explained above) upon packet receipt and the d parameter defines, in milliseconds, how long the m1 bitrate will be made available. My upload speed is 666kbit/sec but I limit it to 600kbit/sec. Real-time is limited to 80% of your configured limit (I think), which is 480kbit/sec. If we calculate the amount of time it would take for a 480kbit/sec connection to send an 80byte/640bit NTP packet, it is ~1.3ms (640 / 480000). With this information we set NTP's queue as follows:

Real-time

m1=480Kb

d=2 (as long as it is larger than the calculated minimum above it should be valid.)

m2=25Kb

With these settings our NTP packets will be limited to a 25kbit/sec average, but they will have the delay of a 480kbit/sec connection. This means the NTP packets are guaranteed to be transmitted within 2ms (should be ~1.3ms) instead of ~26ms with our earlier "coupled" example, which only used the m2 parameter. This is what decoupled delay and bandwidth allocation offers.
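To compare the "coupled" and "decoupled" cases side by side, here is a rough Python sketch. It only models the service curve itself (m1 for d milliseconds, then m2) and asks how long the curve takes to reach one packet's worth of bits; it is not how the scheduler is implemented, and the function name is an assumption for illustration.

```python
def time_to_serve_ms(bits: float, m2_bps: float,
                     m1_bps: float = 0.0, d_ms: float = 0.0) -> float:
    """Time for a two-piece service curve to reach `bits`.

    The curve rises at m1_bps for the first d_ms milliseconds and at
    m2_bps afterwards. Leaving m1_bps and d_ms at zero gives the plain
    linear (m2-only) curve.
    """
    burst_bits = m1_bps * d_ms / 1000.0  # bits covered by the first segment
    if m1_bps > 0 and bits <= burst_bits:
        return bits / m1_bps * 1000.0
    return d_ms + (bits - burst_bits) / m2_bps * 1000.0


if __name__ == "__main__":
    ntp_bits = 640
    coupled = time_to_serve_ms(ntp_bits, m2_bps=25_000)
    decoupled = time_to_serve_ms(ntp_bits, m2_bps=25_000,
                                 m1_bps=480_000, d_ms=2)
    print(f"m2 only:      {coupled:.1f} ms")
    print(f"m1 + d + m2:  {decoupled:.2f} ms")
```

The packet fits inside the first segment (480kbit/sec for 2ms covers 960 bits), which is why d=2 is large enough for a 640bit packet.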

Real-time should only be used for services that require low-delay guarantees (NTP and VOIP, for example). Indiscriminate (over)usage of real-time may interact in unforeseen ways with link-share allocations unless you precisely understand the differences between real-time and link-share. If you are not using real-time's m1 and d parameters you should probably be using link-share.

It is important to realize that these delays, in a worst-case scenario, may be additionally delayed by the amount of time it takes to send a MTU-sized packet. So MTU tuning may be important. In my case that is ~17ms with a 1280byte MTU. (Thanks for this info Harvy66!)
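The extra worst-case wait is just the transmission delay of one MTU-sized packet at the rate the interface is limited to. A quick Python check, assuming my 600kbit/sec limit and 1280byte MTU (the function name is only for illustration):

```python
def mtu_blocking_delay_ms(mtu_bytes: int, link_bps: float) -> float:
    """Time to finish sending one MTU-sized packet that is already in flight."""
    return mtu_bytes * 8 / link_bps * 1000.0


if __name__ == "__main__":
    extra = mtu_blocking_delay_ms(1280, 600_000)
    print(f"extra worst-case delay: {extra:.1f} ms")
```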

Any questions, comments, corrections, or critiques are (mostly) welcome. :)

Regarding corrections and critiques, please cite your sources, otherwise your post may be ignored. :-[

Links:

http://www.cs.cmu.edu/~hzhang/HFSC/HierarchicalScheduler.ps.gz <- Slide-show by HFSC's author

http://man7.org/linux/man-pages/man7/tc-hfsc.7.html <- Simplifies some of HFSC's math

http://serverfault.com/questions/105014/does-anyone-really-understand-how-hfsc-scheduling-in-linux-bsd-works

http://www.iijlab.net/~kjc/software/TIPS.txt <- ALTQ tips from ALTQ author

http://linux-ip.net/articles/hfsc.en/

http://www.cs.cmu.edu/~hzhang/HFSC/ <- HFSC author's website

http://linux-tc-notes.sourceforge.net/tc/doc/sch_hfsc.txt <- Good tutorial

http://wiki.openwrt.org/doc/howto/packet.scheduler/sch_hfsc

http://www.cs.cmu.edu/~hzhang/papers/SIGCOM97.pdf <- Original HFSC paper

http://www.dtic.mil/dtic/tr/fulltext/u2/a333257.pdf <- Longer version of HFSC paper

Some versions of the HFSC papers say "Mbytes/s" while others say "Mbps" when referring to connection speeds. Mbps, megabits per second, is the correct version. The version from the author's site (the one I link to; "SIGCOM97.pdf") says "Mbps". Be aware of this.

-

Advanced real-time VOIP configuration

Disclaimer: This post is more theoretical than the first post because it gets into some undocumented capabilities (decoupled bandwidth and delay applied to simultaneous sessions/streams in the same class/queue). The possibility of me having misunderstood the HFSC paper is greater in this post. I also have zero experience with VOIP. Please report any errors.

Say you want to efficiently improve your VOIP setup. You have 7 VOIP lines and a 5Mbit upload. Each VOIP packet is 160 bytes (G.711), with an average bitrate of 64Kbit/s per line (a 160byte packet sent every 20ms). We want to improve our worst-case delay (which also improves worst-case jitter and overall call quality) from 20ms to 5ms, so we calculate the bitrate needed to achieve that:

160 bytes × 8 = 1280 bits

1280 bits × (1000ms ÷ 5ms) = 256000 bits/s

So, to send a 160 byte packet within 5ms we need to allocate 256Kbit/s. This gives us our m1 (256Kb) and our d (5).

Now we need to calculate our maximum average bitrate:

7 lines × 64Kbit/s = 448Kbit/s

Just to be safe, we allocate bandwidth for an extra line, so

8 lines × 64Kbit/s = 512Kbit/s

This gives us our m2 (512Kb).

To make sure that your m1 (the per packet delay) can always be fulfilled by your connection, make sure that the m1 (256Kb) multiplied by the maximum number of simultaneous sessions (7 or 8) is less than your maximum upload. 2048Kbit (256Kb × 8) is less than 5000Kbit, good.

Our finalized configuration is:

m1=256Kb

d=5

m2=512Kb

This configuration will guarantee a 7.4ms (5ms + MTU transmission delay) worst-case delay for each packet, with a limit of 8 simultaneous calls (512Kbit/s). We get low-delay, low-jitter calls as though we had allocated 2048Kbit/s of bandwidth, but we actually only allocated 512Kbit/s of bandwidth.
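The whole VOIP calculation can be redone in a few lines of Python, which makes it easy to try other line counts or delay targets. This is only a sketch of the arithmetic in this post: the function and key names are invented, and the 1500byte MTU is an assumption (it is what makes the 7.4ms figure work out on a 5Mbit upload).

```python
def plan_voip(packet_bytes: int, line_bps: float, lines: int,
              target_ms: float, link_bps: float,
              mtu_bytes: int = 1500) -> dict:
    """Derive m1, d, m2 and the worst-case delay for a VOIP real-time curve."""
    packet_bits = packet_bytes * 8
    m1 = packet_bits * 1000.0 / target_ms   # rate that sends one packet in target_ms
    m2 = lines * line_bps                   # long-term average for all lines
    peak = m1 * lines                       # every line bursting at m1 at once
    if peak >= link_bps:
        raise ValueError("m1 x lines must stay below the upload speed")
    mtu_ms = mtu_bytes * 8 / link_bps * 1000.0
    return {"m1_bps": m1, "d_ms": target_ms, "m2_bps": m2,
            "peak_bps": peak, "worst_case_ms": target_ms + mtu_ms}


if __name__ == "__main__":
    # 160-byte G.711 packets, 64 Kbit/s per line, 8 lines, 5 ms target, 5 Mbit upload.
    plan = plan_voip(160, 64_000, 8, 5, 5_000_000)
    for key, value in plan.items():
        print(f"{key}: {value:g}")
```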

The only negative side-effect is that all other traffic might have an increased latency of a few milliseconds. I am unclear on the precise latency increase, but the worst-case scenario (all virtual-circuits active) would take 2048Kbit/s of your total 5Mbit/s for only 5ms.

Comments are welcomed. :)

-

Here is a quick and easy "hands-on" example of using HFSC's decoupled delay and bandwidth allocation. We will use upper-limit to add a 10ms delay to ping replies. Just ping pfSense from your LAN, before and after, to see results.

In the traffic-shaper, on your LAN interface, create an HFSC leaf queue called "qPing". Configure qPing as follows:

Bandwidth=1Kb

Upper-limit.m1=0Kb

Upper-limit.d=10

Upper-limit.m2=100Kb

Create a firewall rule that assigns all LAN Network->LAN Address ICMP Echo/Reply (ping/pong) into "qPing".

Before:

PING pfsense.wlan (192.168.1.1) 56(84) bytes of data.

64 bytes from pfsense.wlan (192.168.1.1): icmp_seq=1 ttl=64 time=0.225 ms

64 bytes from pfsense.wlan (192.168.1.1): icmp_seq=2 ttl=64 time=0.200 ms

After:

PING pfsense.wlan (192.168.1.1) 56(84) bytes of data.

64 bytes from pfsense.wlan (192.168.1.1): icmp_seq=1 ttl=64 time=11.3 ms

64 bytes from pfsense.wlan (192.168.1.1): icmp_seq=2 ttl=64 time=11.9 ms

According to our "qPing" configuration, packets will be delayed an additional 10ms and the queue's total bandwidth will be held at or below 100Kbit/sec.

I hope that this shows how (a packet's) delay is a separate function from bandwidth. Delay can be increased, or decreased (limited by your link's max bitrate), without affecting throughput. Bandwidth and delay are "decoupled". :)

-

My limited understanding of "decoupled bandwidth and delay" is in reference to older traffic shaping algorithms that require delaying packets in order to shape them. HFSC can track bandwidth consumption without artificially creating a backlog of packets.

Decoupled bandwidth and delay means the amount of bandwidth assigned to a queue does not affect its delay characteristics.

When most people think of what's going on, they think of two or more packets arriving at the same time and the traffic shaper deciding which packet to forward next. If you actually look at most modern network connections, you rarely see two packets at the same time except above 80% link utilization. Packets are small and are quickly forwarded.

This doesn't always apply. For very slow link rates, like below 100Mb/s, the time taken to forward a 1500byte packet can be substantial, such that the chance of two packets interfering with each other is much higher. But even for many people with 10Mb connections, the link rate is typically 1Gb and only the provisioned rate is 10Mb.

Transmitting at 25kbit/sec, one 80byte/640bit packet would take ~26ms to send (640 / 25000)

Packets are transmitted at link rate, but averaged over time to your desired rate. With your example 80byte packets, on a 1Gb link, it would be transmitted in 0.00000064 seconds or 0.00064 milliseconds. This is why averages are dangerous when thinking about micro-time scale events.
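Here is a small Python sketch of the difference between "time on the wire" and "average rate", using the same 80byte packet. The function names are just for illustration. It shows that a 25kbit/sec average can be met by sending each packet at full link speed and then staying quiet until the next one is due.

```python
def wire_time_ms(packet_bytes: int, link_bps: float) -> float:
    """Time the packet actually occupies the link."""
    return packet_bytes * 8 / link_bps * 1000.0


def spacing_for_average_ms(packet_bytes: int, average_bps: float) -> float:
    """Gap between packet starts that yields the given average bitrate."""
    return packet_bytes * 8 / average_bps * 1000.0


if __name__ == "__main__":
    on_wire = wire_time_ms(80, 1_000_000_000)
    spacing = spacing_for_average_ms(80, 25_000)
    print(f"on the wire at 1Gb/s: {on_wire:.5f} ms")
    print(f"one packet every {spacing:.1f} ms averages 25 kbit/s")
    print(f"link busy {on_wire / spacing:.6%} of the time")
```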

If packets are coming in faster than the buffer can be drained, then "buffering" will start to occur. With HFSC, this will act just like older algorithms, but when packets are enqueuing slower than they're being dequeued, HFSC has the advantage of not needing to delay.

All queues with HFSC have a "service curve". If you only have m2 set, then you have a linear service curve. If you have m1 and d set, then you can have a non-linear service curve, like a burst.

The stuff above is my understanding, which is probably some mixture of correct.

One thing to absolutely remember is to rate limit your interface. Here's a quote from another site. This is in reference to setting the parent queue rate.

The first line is simply a comment. It reminds one that our total upload bandwidth is 20Mb/s (megabits per second). You never want to use exactly the total upload speed, but a few kilobytes less.

Why? You want to use your queue as the limiting factor in the connection. When you send out data and you saturate your link the router you connect to will decide what packets go first and that is what we want HSFC to do. You can not trust your upstream router to queue packets correctly.

So, we limit the upload speed to just under the total available bandwidth. "Doesn't that waste some bandwidth then?" Yes, in this example we are not using 60KB/s, but remember we are making sure the upstream routers sends out the packets in the order we want, not what they decide. This makes all the difference with ACK packets and will actually increase the available bandwidth on a saturated connections.

-

Even when buffering occurs, latency due to buffering is still a bit difficult to understand when trying to think about micro-time scales at our normal experience at the macro level.

When monitoring my queues during bursty data transfers, I sometimes see my queue reaching nearly 200 packets for a brief moment. Mind you, I have CoDel enabled, which means if packets keep sitting in the queue for longer than 5ms, it will start dropping them. Even with a 200 packet backlog on my 95Mb/s rate limited connection, zero packets are being dropped. These packets are 1500 bytes each because I am doing HTTP transfers. These backlogs only last for a brief moment. Once TCP stabilizes, the backlog quickly drops to 0, even while my connection is at nearly 100% utilization.

Modern TCP stacks not only track packet-drops, but also variation in delays. Because HFSC will start to delay packets as you approach saturation, HFSC creates a smoothing effect. This test was done with a single major flow; normally you would not see this with many interacting flows. 200 packets at 1500 bytes and 95Mb/s is still about 25ms of backlog (2,400,000 bits ÷ 95Mb/s), but that still isn't too bad for a momentary burst, and my other queues are still isolated from this queue. My NTP and game packets will still slip right past that backlog like nothing was there. Yes, if one of those 1500byte HTTP packets were being dequeued, my NTP packet will need to wait, but at a link rate of 1Gb/s, that is only a 0.012ms dequeue time.
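The backlog numbers can be checked with a few lines of Python. This is plain arithmetic with an invented function name: the first call is the time to drain 200 full-size packets at the 95Mb/s shaped rate (it works out to roughly 25ms), and the second is how long one 1500byte packet holds the 1Gb/s link.

```python
def drain_time_ms(packets: int, packet_bytes: int, rate_bps: float) -> float:
    """Time to transmit a backlog of equally sized packets at a given rate."""
    return packets * packet_bytes * 8 / rate_bps * 1000.0


if __name__ == "__main__":
    backlog = drain_time_ms(200, 1500, 95_000_000)
    head_of_line = drain_time_ms(1, 1500, 1_000_000_000)
    print(f"200-packet backlog at 95Mb/s: {backlog:.1f} ms")
    print(f"one 1500-byte packet at 1Gb/s: {head_of_line:.3f} ms")
```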

My tests are done with relatively few light flows and a single or few large flow(s). This does not perfectly reflect the real world like a business with many users. Another issue with my tests is I have a 100Mb provisioned internet connection, but my actual link rate is 1Gb/s. If I had 1Gb provisioned and I was up near 100% utilization, jitter may act differently.

Just as HFSC has a smoothing effect between queues and for a queue as a whole, fq_codel has a smoothing effect between flows within a queue. HFSC+fq_codel sounds like it should be a perfect marriage. The "smoothness" created by HFSC during backlog will be affected by kernel scheduling. On my machine, I am seeing 2,000 ticks per second per core, which is 0.5ms.

The coolest part about all of this is even though I regularly download or upload large bursts that saturate my connection, I still get zero packets dropped. In my opinion, HFSC has benefits beyond just traffic shaping in the regular sense, it also seems to have a bandwidth stabilization effect that reduces the need for packets getting dropped. I've checked my queue stats after a month of usage, 0 dropped packets, terabytes transferred, and according to the RRD the connection was maxed out several times, and codel making sure there is never more than a 5ms backlog.

High utilization

Stable bandwidth

Queue isolation

No buffer bloat

Sub 0.5ms of jitter at 100% utilization. I've seen as low as ~0.15ms

-

I found a paper that describes delay and bandwidth coupling as the effect of better latency for higher bandwidth queues:

In this way bandwidth and delay are not decoupled because high priority classes get a better service from both the viewpoints of delay (high priority classes are served first) and bandwidth (excess bandwidth is shared among high priority classes first).

http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.118.663&rep=rep1&type=pdf

Assuming this same definition, HFSC has "decoupled" bandwidth and delay such that low bandwidth queues can have just as good delay characteristics, which is important for low bandwidth latency sensitive flows.

-

My limited understanding of "decoupled bandwidth and delay" is in reference to older traffic shaping algorithms that require delaying packets in order to shape them. HFSC can track bandwidth consumption without artificially creating a backlog of packets.

When most people think of what's going on, they think of two or more packets arriving at the same time and the traffic shaper deciding which packet to forward next. If you actually look at most modern network connections, you rarely see two packets at the same time except above 80% link utilization. Packets are small and are quickly forwarded.

This doesn't always apply. For very slow link rates, like below 100Mb/s, the time taken to forward a 1500byte packet can be substantial, such that the chance of two packets interfering with each other is much higher. But for even many people with 10Mb connections, their link rates are typically 1Gb, but only provisioned 10Mb.

Transmitting at 25kbit/sec, one 80byte/640bit packet would take ~26ms to send (640 / 25000)

Packets are transmitted at link rate, but averaged over time to your desired rate. With your example 80byte packets, on a 1Gb link, it would be transmitted in 0.00000064 seconds or 0.00064 milliseconds. This is why averages are dangerous when thinking about micro-time scale events.

Check out my link to the tc-hfsc manpage and see for yourself when HFSC considers a service-curve backlogged.

Bitrate averages can definitely be confusing depending on granularity of time, but since "kern.hz=1000" is the default, let us assume 1ms. This means HFSC can only control the bitrate as measured over a 1 millisecond time span. This will be acceptable for most people. I assume if you understand HFSC that you are aware of kern.hz's importance and know how to change it if needed.
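To put numbers on the timer granularity, here is a tiny Python sketch. It assumes the simplified picture described above, where the scheduler's view of time advances once per kernel tick; the function names are made up for illustration.

```python
def tick_ms(hz: int) -> float:
    """Length of one kernel timer tick in milliseconds."""
    if hz <= 0:
        raise ValueError("hz must be positive")
    return 1000.0 / hz


def bits_per_tick(rate_bps: float, hz: int) -> float:
    """Bits a queue may send per tick to hold the given average bitrate."""
    return rate_bps / hz


if __name__ == "__main__":
    for hz in (1000, 2000):
        print(f"kern.hz={hz}: tick = {tick_ms(hz)} ms, "
              f"25 kbit/s = {bits_per_tick(25_000, hz)} bits per tick")
```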

HFSC makes guarantees backed up by simulations and mathematics. When I tell HFSC to send at 25Kbit/sec, I trust that it will do that. Without proof otherwise, I will trust HFSC.

If packets are coming in faster than the buffer can be drained, then "buffering" will start to occur. With HFSC, this will act just like older algorithms, but when packets are enqueuing slower than they're being dequeued, HFSC has the advantage of not needing to delay.

All queues with HFSC have a "service curve". If you only have m2 set, then you have a linear service curve. If you have m1 and d set, then you can have a non-linear service curve, like a burst.

The stuff above is my understanding, which is probably some mixture of correct.

One thing to absolutely remember is to rate limit your interface. Here's a quote from another site. This is in reference to setting the parent queue rate.

The first line is simply a comment. It reminds one that our total upload bandwidth is 20Mb/s (megabits per second). You never want to use exactly the total upload speed, but a few kilobytes less.

Why? You want to use your queue as the limiting factor in the connection. When you send out data and you saturate your link the router you connect to will decide what packets go first and that is what we want HSFC to do. You can not trust your upstream router to queue packets correctly.

So, we limit the upload speed to just under the total available bandwidth. "Doesn't that waste some bandwidth then?" Yes, in this example we are not using 60KB/s, but remember we are making sure the upstream routers sends out the packets in the order we want, not what they decide. This makes all the difference with ACK packets and will actually increase the available bandwidth on a saturated connections.

Artificially limiting your upload below your real-world speed is a common thing in any QoS. This is to assure delay and packet prioritization is controlled by your QoS's intelligent queue and not some stupid FIFO buffer at your modem or ISP.

-

I found a paper that describes delay and bandwidth coupling as the effect of better latency for higher bandwidth queues:

In this way bandwidth and delay are not decoupled because high priority classes get a better service from both the viewpoints of delay (high priority classes are served first) and bandwidth (excess bandwidth is shared among high priority classes first).

http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.118.663&rep=rep1&type=pdf

Assume this same definition, HFSC has "decoupled" bandwidth and delay such that low bandwidth queues can have just as good delay characteristics, which is important for low bandwidth latency sensitive flows.

Whether or not to use decoupled delay and bandwidth allocation is up to the user. I am simply trying to explain what it is and what the advantages are over the standard "coupled" method.

-

(For the sake of clarity, please stop using "dequeueing time" and "delay" interchangeably since the HFSC paper defines them as two different things.)

How does your connection act with no traffic shaping at all?

Regarding 100% utilization and no backlog, where are you seeing that?

When I say backlog, I am referring to what HFSC considers a backlog.

-

Things that are different with traffic shaping off

- Bandwidth reaches maximum slightly faster

- Maximum bandwidth has a more distinct peak and is less rounded

- Maximum bandwidth goes above my provisioned rate and has a bit of rebound where it bounces back below max, then slowly creeps back up. It has more up and down without HFSC and a slightly lower average.

- Bandwidth in general is more bursty and some sources can send bursts way too quickly and cause loss

- I used to get increased jitter during saturation with ping spikes of 10ms-20ms before packetloss, but it seems my ISP somewhat recently changed something such that latency is relatively stable. I assume an fq_codel-like AQM. I have no discernible difference in latency between complete saturation and idle, only an increase in packetloss.

One big difference is that without HFSC, YouTube sends 1Gb microbursts at me, which can cause packetloss. I assume that HFSC limits how fast the packets are received by my computer, which limits how quickly my computer ACKs the data. My ISP's traffic shaper is not very quick and I can receive 2,000+ 1500byte packets in a small fraction of a second if the connection is mostly idle at the time the burst starts. ACKing too many packets during a strong burst causes YouTube to become too aggressive and flood my connection for a brief moment. This is mostly a non-issue since the assumed AQM change, but could also be attributed to my new 100Mb provisioned rate instead of my old 50Mb.

-


Interesting. Thanks for the detailed answer.

I would think the receipt of bursts that cause packet loss would be more related to TCP settings than HFSC. I would assume it is all related though.

I have never seen HFSC referred to as an AQM. pfSense includes RED, RIO, CoDel, and ECN, which are AQMs though. Do you have sources saying HFSC is an AQM?

You mentioned 0% packet loss earlier; are you sure of that? Here is a quote from RFC5681 - TCP Congestion Control: https://tools.ietf.org/html/rfc5681

Also, note that the algorithms specified in this document work in terms of using loss as the signal of congestion. Explicit Congestion Notification (ECN) could also be used as specified in [RFC3168].

Honestly, I am a networking newbie and my knowledge outside of the topic of this thread is very spotty and very limited. Understanding and properly employing HFSC's decoupled delay and bandwidth allocation is hard enough without going off-topic and possibly overwhelming or further confusing someone who is attempting to understand HFSC. I am just saying, let's not get too far off-topic. :)

-

I wasn't trying to refer to HFSC as an AQM, sorry. I am under the impression that my ISP recently enabled an AQM because when I got upgraded to 100Mb, I started to do my normal suite of basic load tests to find my stable max bandwidth when I noticed that my ping was staying perfectly flat during the entire test, but I would get increasing packetloss. I've done a lot of load tests and I've never seen that happen before without enabling HFSC.

The burst of packetloss at the beginning of a YouTube stream only happened when I had HFSC disabled. When I enabled it, the loss went away and so did the 10ms-20ms of jitter that accompanied the loss.

The 0% dropped packets is based on what I am seeing in PFTop as reported for all queues on my firewall. Even when I saturate my connection, the dropped packet count does not go up, unless there is a bug/feature of the FreeBSD/pfSense CoDel implementation that does not report dropped packets. Even without CoDel, I sized my buffers to be sufficiently large to not have packetloss. The whole annoying YouTube burst issue was causing my 2,500 FIFO buffer to overflow, and even sometimes my 5,000 buffer.

I understand that overly large buffers are bad, but the issue I was having was that even though I had a 50Mb connection, the data was coming in at 1Gb for a brief moment. If my buffer was not large enough to capture those packets, the 2,500 buffer queue was dropping enough packets in a row to negatively affect my ability to load YouTube and Google. I had to increase my buffer size out of necessity; it was very annoying. Then I learned about CoDel, enabled it, and I haven't seen a dropped packet according to PFTop since.

I too am under the impression that the primary way TCP detects congestion is via packetloss, but I was also reading about "interrupt coalescing" on my Intel i350, and one of the things Intel mentioned was issues with striking a balance of low latency interrupt handling without interrupt flooding. The article that I found specifically mentioned that the throughput of many TCP stacks can be negatively affected by delaying interrupts for even tens of microseconds. The article was from around 2005 if I remember correctly, and Intel said they have worked with major TCP stacks to lessen the impact of delayed interrupts. I'm not entirely sure how microseconds will make a difference on internet ping times, but I figured if microseconds could make a difference in some situations, then milliseconds could also make a difference.

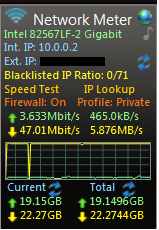

Here's an image from a few years back when I first started testing my brand-new 50/50 connection. I had no traffic shaping, I was plugged directly into my switch, no firewall, Win7 with default TCP settings. This simple test was me downloading a file from an FTP server in New York City around 9pm. That is nearly 1,000 miles away from me, one way trip. Look at how flat that transfer is. That one blip of dropping to zero is because my HD started to thrash, no SSD back then and only 4GB of memory. I do not see the classic "saw tooth" pattern at all. I also noticed at the time that my ping stayed "crazy flat" and I had "really low" packetloss. Unfortunately I don't remember the exact number. All I remember is I was coming from cable to "dedicated fiber" and I was flabbergasted.

This isn't a perfect image to represent what I'm going after, but it does kind of show no dropped packets, low queues, and high throughput. The P2P traffic is hundreds of flows from many peers. A sustained single flow typically has a much lower number of queued packets, nearing 0, in my experience.

-

I found a paper that describes delay and bandwidth coupling as the effect of better latency for higher bandwidth queues:

In this way bandwidth and delay are not decoupled because high priority classes get a better service from both the viewpoints of delay (high priority classes are served first) and bandwidth (excess bandwidth is shared among high priority classes first).

http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.118.663&rep=rep1&type=pdf

Assume this same definition, HFSC has "decoupled" bandwidth and delay such that low bandwidth queues can have just as good delay characteristics, which is important for low bandwidth latency sensitive flows.

As I re-read your post I realize I am unclear what the intended purpose of your post is. I assume it is to debate what "decoupled" means or how HFSC achieves decoupled delay and bandwidth. Apologies if I have misunderstood, I am simply trying to be as clear and precise as possible when describing HFSC.

Your first sentence is true. If bandwidth and delay are coupled, the higher the connection's bitrate, the lower the delay and vice versa (lower bitrate, higher delay).

The official HFSC paper states:

In this section, we first define the service curve QoS model and motivate the advantage of using a non-linear service curve to decouple delay and bandwidth allocation.

So, non-linear service curves decouple delay and bandwidth.

A non-linear (therefore 2-piece) service curve can only be accomplished by setting the "m1", "d", and "m2" parameters.

A linear (1-piece) service curve is accomplished by exclusively setting the "m2" parameter.

(You could theoretically have a linear, 2-piece curve by setting m1 and m2 to the same value, but why…?)

HFSC does not automatically decouple low-bandwidth queues, or any queues, without being explicitly configured to do so by properly setting the values of the m1, d, and m2 parameters.
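To make the linear vs. 2-piece difference concrete, here is a rough sketch that treats a service curve purely as a guarantee and asks how long it takes for that guarantee to cover one packet. The function name is mine, the 25kbit/sec and 480kbit/sec figures come from the NTP example in the first post, and the 2ms value for d is only an illustrative assumption. It models the curve itself, not the HFSC scheduler.

```python
def time_to_serve(bits, m2, m1=None, d_ms=0.0):
    """Seconds until a service curve has guaranteed `bits` of service.

    m1 and m2 are slopes in bits/s; d_ms is the length of the m1 segment
    in milliseconds. With m1 omitted the curve is linear (m2 only).
    """
    if m1 is None or d_ms <= 0:
        return bits / m2                      # linear, 1-piece curve
    d = d_ms / 1000.0
    first_segment_bits = m1 * d               # service guaranteed by time d
    if m1 > 0 and bits <= first_segment_bits:
        return bits / m1                      # covered inside the m1 segment
    return d + (bits - first_segment_bits) / m2


packet_bits = 80 * 8  # the 80-byte NTP packet from the first post

# Linear curve: only m2 is set.
linear = time_to_serve(packet_bits, m2=25_000)

# 2-piece curve: same m2, plus m1 and d (d=2ms is an assumed value).
two_piece = time_to_serve(packet_bits, m2=25_000, m1=480_000, d_ms=2)

print(f"linear:  {linear * 1000:.1f} ms")
print(f"2-piece: {two_piece * 1000:.1f} ms")
```

Both curves have the same long-term rate (m2), but only the 2-piece one covers the packet in roughly a millisecond, which is the decoupling being discussed.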

Let me know what things are unclear or incorrect in the thread's first post and I will fix them.

Btw, I hate you for having that 100Mbit fiber connection. :'(;)

-

I decided to google "non linear service curve" and "delay", and I see a lot of topics saying that you said: A non-linear service curve is used to decouple delay and "rate"

I can only see one of two situations that can result from this

- You must define a non-linear curve (burst) in HFSC in order to decouple delay and bandwidth

- You can define linear curves, but they turn into non-linear as part of HFSC's scheduling as bandwidth is "consumed"

#1 seems the simplest and simple is highly correlated with correct.

In my defense, whatever black magic is going on in HFSC, it's freaking awesome. I only have linear service curves. Ping wise, you can't even tell I'm maxing out my connection. It was 50Mb and was rate limited to 48Mb. The big red arch is BitTorrent and the bursts in between are me running a speedtest in my browser.

My gut still tells me that HFSC is keeping delay and bandwidth separate in my case. No matter how hard I load my connection, I can keep packet loss and jitter to effectively zero. I cannot measure a latency difference between idle and full load, even when I have large bursty swings between queues, all the while maintaining high utilization of my connection.

-

Good post! (lol @ HFSC black magic)

An example of decoupled delay and bandwidth allocation directly saving money (this is mentioned in the HFSC paper) is in the corporate VOIP sector. With the "coupled" method, you would need to purchase/install more bandwidth to guarantee VOIP latencies because you would need to over-allocate (waste) bandwidth to decrease delay. With the "decoupled" method you can guarantee low-delay with minimum bandwidth allocation.

Most home users really have no need for HFSC. With a single low-delay dependent service like VOIP, PRIQ is much simpler and will give VOIP very similar latencies.

Regarding your gut… is he even literate? :P

Admittedly, I really only understand a few things about HFSC, so it could be causing your connection to be god-like, but without something to support that assertion, I will assume otherwise.

-

This quote from the HFSC paper is useful because it clarifies differences between the parameters used in the paper (u-max, d-max, r), and the parameters that pfSense uses (m1, d, m2).

Each session i is characterized by three parameters: the largest unit of work, denoted u-max, for which the session requires delay guarantee, the guaranteed delay d-max, and the session's average rate r. As an example, if a session requires per packet delay guarantee, then u-max represents the maximum size of a packet. Similarly, a video or an audio session can require per frame delay guarantee, by setting u-max to the maximum size of a frame. The session's requirements are mapped onto a two-piece linear service curve, which for computation efficiency is defined by the following three parameters: the slope of the first segment m1, the slope of the second segment m2, and the x-coordinate of the intersection between the two segments x. The mapping (u-max, d-max, r) -> (m1, x, m2) for both concave and convex curves is illustrated in Figure 8.

The paper uses u-max (packet/frame size) in its examples, but pfSense uses m1 (bandwidth).
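As a rough illustration of that mapping, here is how I read Figure 8 of the paper, written out as a small function. The function name and the choice of units (bits, milliseconds, bits/s) are my own, and the example values are only for illustration, so treat this as a sketch rather than anything pfSense itself runs.

```python
def map_to_curve(umax_bits, dmax_ms, rate_bps):
    """Map the paper's (u-max, d-max, r) to (m1, d, m2).

    Returns m1 and m2 in bits/s and d in milliseconds.
    """
    # Rate needed to deliver u-max bits within d-max.
    burst_rate = umax_bits * 1000 / dmax_ms
    if burst_rate > rate_bps:
        # Concave curve (m1 > m2): the first segment delivers u-max by d-max.
        return burst_rate, dmax_ms, rate_bps
    # Convex curve (m1 < m2): the first segment is flat, and the m2 segment
    # starts late enough that u-max is delivered exactly at d-max.
    d_ms = dmax_ms - umax_bits * 1000 / rate_bps
    return 0.0, d_ms, rate_bps


# A 160-byte packet that must go out within 5ms on a 64Kbit/s session.
print(map_to_curve(160 * 8, 5, 64_000))

# The same packet with a relaxed 40ms bound gives a convex curve.
print(map_to_curve(160 * 8, 40, 64_000))
```

The first call yields m1 > m2 (decreased delay) and the second yields m1 < m2 (increased delay), which matches the two cases described for the m1/d/m2 notation.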

When glancing at a configuration using m1, d, and m2, it is obvious whether the parameters are meant to decrease delay (m1 > m2) or increase delay (m1 < m2).

The u-max/d-max notation is not as intuitive.

-

Advanced real-time VOIP configuration

Disclaimer: This post is more theoretical than the first post because it gets into some undocumented capabilities (decoupled bandwidth and delay applied to simultaneous sessions/streams in the same class/queue). The possibility of me having misunderstood the HFSC paper is greater in this post. I also have zero experience with VOIP. Please report any errors.

Say you want to efficiently improve your VOIP setup. You have 7 VOIP lines and a 5Mbit upload. Each VOIP packet is 160 bytes (G.711), with an average bitrate of 64Kbit/s per line (a 160byte packet sent every 20ms). We want to improve our worst-case delay (which also improves worst-case jitter and overall call quality) from 20ms to 5ms, so we calculate the bitrate needed to achieve that:

160 bytes × 8 = 1280 bits

1280 bits × (1000ms ÷ 5ms) = 256000 bits/s

So, to send a 160 byte packet within 5ms we need to allocate 256Kbit/s. This gives us our m1 (256Kb) and our d (5).

Now we need to calculate our maximum average bitrate:

7 lines × 64Kbit/s = 448Kbit/s

Just to be safe, we allocate bandwidth for an extra line, so

8 lines × 64Kbit/s = 512Kbit/s

This gives us our m2 (512Kb).

To make sure that your m1 (the per packet delay) can always be fulfilled by your connection, make sure that the m1 (256Kb) multiplied by the maximum number of simultaneous sessions (7 or 8) is less than your maximum upload. 2048Kbit (256Kb × 8) is less than 5000Kbit, good.

Our finalized configuration is:

m1=256Kb

d=5

m2=512Kb

This configuration will guarantee a 7.4ms (5ms+MTU transmission delay) worst-case delay for each packet, with a limit of 8 simultaneous calls (512Kbit/s). We get low-delay, low-jitter calls as though you had allocated 2048Kbit/s of bandwidth, but you actually only allocated 512Kbit/s of bandwidth.
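For anyone who wants to redo this arithmetic with their own numbers, here is a small sketch of the calculation above. The variable names are mine, and the 1500-byte MTU is an assumption (it is the value that makes the 7.4ms figure work out on a 5Mbit upload).

```python
PACKET_BYTES = 160        # G.711 packet size used above
TARGET_DELAY_MS = 5       # desired worst-case per-packet delay (d)
LINE_RATE_BPS = 64_000    # average bitrate of one VOIP line
LINES = 8                 # 7 lines plus one spare
UPLOAD_BPS = 5_000_000    # 5Mbit upload
MTU_BYTES = 1500          # assumed MTU of a competing packet already on the wire

packet_bits = PACKET_BYTES * 8

# m1: bitrate needed to send one packet within the target delay.
m1 = packet_bits * 1000 // TARGET_DELAY_MS

# m2: long-term average for all lines together.
m2 = LINES * LINE_RATE_BPS

# Sanity check: every line bursting at m1 at once must still fit the link.
peak = m1 * LINES
fits = peak < UPLOAD_BPS

# Worst case: wait for one full-size packet, then our own 5ms.
mtu_delay_ms = MTU_BYTES * 8 * 1000 / UPLOAD_BPS
worst_case_ms = TARGET_DELAY_MS + mtu_delay_ms

print(f"m1={m1} bit/s, d={TARGET_DELAY_MS} ms, m2={m2} bit/s")
print(f"peak={peak} bit/s, fits in upload: {fits}")
print(f"worst-case delay: {worst_case_ms:.1f} ms")
```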

The only negative side-effect is that all other traffic might have an increased latency of a few milliseconds. I am unclear on the precise latency increase, but it should be small, since the worst-case scenario (all virtual-circuits active) would take 2048Kbit/s of your total 5Mbit/s for only 5ms.

-

You could over-allocate more bandwidth to improve delay, but this is inefficient and wastes precious bandwidth.

Yes and no. Fixed bandwidth traffic like VoIP does not expand to fill the bandwidth it is given, and any unused bandwidth will be shared with the other queues. Even if you set the bandwidth to 256Kb and VoIP used it all, if it did not have that bandwidth, a non-linear service curve would do nothing to help because it would be bandwidth starved.

A non-linear service curve used to decouple delay and bandwidth is only useful if the flows in that queue use no more than the bandwidth allocated to it. In theory, you could just give that queue 256Kb. In practice, this opens you up to that queue potentially using more bandwidth than expected, which could harm the bandwidth of the other queues. This really is a management tool that says "I will give you only this amount of bandwidth, but I will make sure your latency stays good, as long as you don't try to go over".

This really is a management tool that says "I don't trust you to manage yourself, and if you don't manage yourself, you're going to have a bad time, but if you do, you'll have good latency".

The only reason I point this out is it may be less useful for home users than business/power users. Much simpler to just tell a home user to give VoIP enough bandwidth with a linear service curve since all unused bandwidth gets shared anyway. Not to say this is how it should be told all the time, just be careful about your current audience, if you think they're good enough to properly micromanage their traffic.

-

I dragged this over from another thread

I feel fairly confident that the d (duration), for the purpose of latency sensitive traffic, should be set to your target worst latency. m1 should be thought of not as bandwidth, but as the size in bits of the total number of packets received during that duration. m2 would be set as the average amount of bandwidth consumed.

Incorrect. m1 and m2 define bandwidth.

This is where I'm a little confused. While m1 and m2 define bandwidth, the over-all average may not *exceed m2, even though m1 is larger. And the way the one paper calculated what bandwidth to use in m1 was to take the total desired burst amount, which was 160 bytes, convert it to bits (1280), and divide by d, which was 5ms. That resulted in 256Kb, which they used as their "bandwidth".

*Exceed: I'm not sure if there is a hard or soft limit, such that it may or may not temporarily exceed, as long as the average is approximately held within some exact bound related to m1 and d.
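One way to look at the "average" question is to compute what the curve itself guarantees over longer and longer windows. This is only a sketch of the curve's arithmetic with helper names I made up. It uses m1=256Kb and d=5 from the calculation above and an assumed m2 of 64Kb (one G.711 line), and it says nothing about whether the scheduler enforces m2 as a hard or soft limit.

```python
def guaranteed_bits(t_s, m1, d_ms, m2):
    """Bits guaranteed by a 2-piece curve after t_s seconds of backlog."""
    d = d_ms / 1000.0
    if t_s <= d:
        return m1 * t_s
    return m1 * d + m2 * (t_s - d)


def average_rate(t_s, m1, d_ms, m2):
    """Average guaranteed rate (bits/s) over the first t_s seconds."""
    return guaranteed_bits(t_s, m1, d_ms, m2) / t_s


M1, D_MS, M2 = 256_000, 5, 64_000  # M2 is an illustrative single-line rate

for window in (0.005, 0.020, 1.0, 60.0):
    rate = average_rate(window, M1, D_MS, M2)
    print(f"{window:>6.3f} s window: {rate:,.0f} bit/s average")
```

Over the first 5ms the average equals m1, but the m1 segment only ever contributes m1 × d = 1280 bits (one packet), so over any longer window the average sinks toward m2.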

-

I'm thinking out loud here, so add thoughts if you want.

"Decoupled delay and bandwidth"

There seem to be two different but related ideas of this concept.

- HFSC m1 and d settings. HFSC is so good at providing bandwidth that it can accurately replicate a synchronous link of a given rate. Using m1 and d can decouple bandwidth and delay by allowing a low bandwidth queue to experience the reduced delays of a high bandwidth queue, assuming it does not try to over-consume bandwidth.

- Some traffic shapers add additional delay to the lower bandwidth queues because their algorithms behave similarly to weighted round robin and process higher bandwidth queues first. HFSC does not process higher bandwidth queues first; it is constantly interleaving among the queues, trying to maintain the service curves.

To me it seems that HFSC has two types of decouplings of delay and bandwidth.

-