Improve Custom refresh pattern

-

Been trying to add Nvidia updates (using GeForce Experience) to the refresh pattern, but not having any success.

I have tried

    #nvidia updates
    refresh_pattern -i download.nvidia.com/.*\.(cab|exe|ms[i|u|f]|[ap]sf|wm[v|a]|dat|zip) 4320 80% 432000 reload-into-ims
    #nvidia updates
    refresh_pattern -i international-gfe.download.nvidia.com/.*\.(cab|exe|ms[i|u|f]|[ap]sf|wm[v|a]|dat|zip) 4320 80% 432000 reload-into-ims
    #nvidia updates
    refresh_pattern -i international-gfe.download.nvidia.com.global.ogslb.com/.*\.(cab|exe|ms[i|u|f]|[ap]sf|wm[v|a]|dat|zip) 4320 80% 432000 reload-into-ims

I get TCP_TUNNEL/200 international-gfe.download.nvidia.com:443

Are nvidia updates not cacheable?

-

It's using HTTPS, so unless you've got MITM enabled, I guess not. Personally I gave up using Squid as it seems to be slower than other proxies at the moment. This can sometimes reduce the caching benefits.
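To illustrate why those patterns never fire, here is a rough Python sketch. It assumes Python's `re` behaves like Squid's regex engine for this simple pattern, and the plain-HTTP URL is a made-up example path, not a real Nvidia download. With a `TCP_TUNNEL` entry, the proxy only sees the `host:port` target of the CONNECT request, so there is no URL path for the pattern to match and no response body for Squid to store.

```python
import re

# First pattern from the original post; re.IGNORECASE mirrors the -i flag.
# Assumption: Python's `re` is close enough to Squid's regex engine here.
NVIDIA_PATTERN = re.compile(
    r"download.nvidia.com/.*\.(cab|exe|ms[i|u|f]|[ap]sf|wm[v|a]|dat|zip)",
    re.IGNORECASE,
)


def could_match(request_target: str) -> bool:
    """Return True if the refresh_pattern regex matches the request target."""
    return NVIDIA_PATTERN.search(request_target) is not None


# Hypothetical plain-HTTP request: the full URL is visible to the proxy.
plain_http = "http://international-gfe.download.nvidia.com/some/path/driver.exe"

# What the proxy sees for HTTPS without MITM: only the CONNECT target.
connect_target = "international-gfe.download.nvidia.com:443"

print(could_match(plain_http))      # the pattern has a path to work with
print(could_match(connect_target))  # no "/" and no file extension to match
```

As a side note, `ms[i|u|f]` is a character class, so it also accepts a literal `|` after `ms`. That is harmless here, but `ms[iuf]` is probably what was intended.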

On top of that, most pages are quite dynamic.

-

I gave up on caching, period. I use squid as a base for squidguard for URL filtering only.

-

@KOM:

I gave up on caching, period. I use squid as a base for squidguard for URL filtering only.

Same, although I'm using E2 Guardian for filtering now. It's been way better than SquidGuard, as it allows you to block pages based on their contents rather than just relying on a blacklist. It's also been significantly faster than Squid for me.

-

Hmmm. I avoided E2 because I didn't want to futz around on our production firewall with some hacky kludge that had to be put together by hand like some IKEA furniture. I also assumed it was a heavy package like pfBlocker. Maybe I'm totally wrong, but I'm happy enough with squid/squidguard for my uses.

-

@KOM:

Hmmm. I avoided E2 because I didn't want to futz around on our production firewall with some hacky kludge that had to be put together by hand like some IKEA furniture. I also assumed it was a heavy package like pfBlocker. Maybe I'm totally wrong, but I'm happy enough with squid/squidguard for my uses.

No, it's actually quite the opposite. Initially I was quite sceptical too, but most of the issues with FreeBSD have been ironed out. It's very easy to give it a try. It works by adding an extra repository, and then the package appears in the usual package manager. It's simple enough to add/remove. But if you're going to be enabling MITM and doing anything advanced, I definitely recommend playing around with it outside of your production environment first. Ever since V5, it's been running really well and has been scaling well. In terms of being a heavy package, it depends what you do with it. You can set up this beast to do some pretty advanced stuff, like editing HTTPS pages on the fly, which will use more CPU resources than typical filtering.

Personally I use it because it allows you to have groups, with MITM enabled for some groups and Splice All for others. It works really well for my use case, where wireless users bring their own devices and I can't deploy a CA. They use Splice All, whereas all the other machines have the CA installed on them. On top of that, I love the fact that you do not have to rely on a blacklist in order to deem content appropriate or inappropriate. Would love to see this as the default in pfSense one day. :)

But yeah, if SquidGuard works for you, go for it. When I was using SquidGuard it gave me far more issues. SquidGuard itself pretty much needs a full rewrite, and is currently sort of in limbo without proper development or updates.

-

Are there going to be any more updates to the Improve Custom refresh pattern?

-

Refresh patterns are not really my area of expertise. What would be ideal is if a GitHub page were added to the Squid package, so the list could be loaded from Dynamic and Update Content Custom refresh_patterns. That way it could be maintained more by the community (by users who know more about refresh patterns). Maybe this could be added when they update Squid to 4.5.

Refresh patterns are also a bit hit and miss, with dynamic content being harder to cache.
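For anyone unsure what the three numbers in a line like `4320 80% 432000` actually do, here is a simplified Python sketch of the freshness check as I understand it from the summary in Squid's configuration documentation. It is an approximation only: it ignores explicit `Expires`/`Cache-Control` headers and options such as `reload-into-ims`, and the function and parameter names are my own.

```python
from typing import Optional


def is_fresh(age: float, min_age: float, percent: float, max_age: float,
             lm_age: Optional[float] = None) -> bool:
    """Simplified refresh_pattern freshness check (all times in minutes).

    age     -- how long the object has been in the cache
    min_age -- the 'min' field of refresh_pattern
    percent -- the 'percent' field (e.g. 80 for 80%)
    max_age -- the 'max' field
    lm_age  -- (Date - Last-Modified) when the object was fetched,
               or None if the response had no Last-Modified header
    """
    # Anything older than 'max' is stale.
    if age > max_age:
        return False
    # With Last-Modified available, compare the LM-factor to the percentage.
    if lm_age is not None and lm_age > 0:
        return (age / lm_age) < (percent / 100.0)
    # Otherwise fall back to the 'min' field.
    return age < min_age


# Values from the pattern in the first post: 4320 80% 432000
print(is_fresh(60, 4320, 80, 432000, lm_age=1000))   # LM-factor 0.06
print(is_fresh(900, 4320, 80, 432000, lm_age=1000))  # LM-factor 0.90
print(is_fresh(5000, 4320, 80, 432000))              # no Last-Modified, age > min
```

Dynamic pages usually send explicit expiry headers or no `Last-Modified` at all, which is part of why these heuristics help far less with them than with static downloads.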

-

I had posted a thread about having started a community GitHub collaborative effort, asking for help contributing to it, only to have @aGeekhere reply with a link to this thread. So I guess that's now a thing, by my own sheer coincidental effort haha.

If anyone wants to help with this GitHub collaborative effort, which is COMPLETELY UNOFFICIAL TO SQUID, please let me know so I can add you as a contributor. By sheer coincidence, my goal was the same as what this thread sought to achieve. I AM NOT AT ALL related to Squid; I just want the same thing everyone else in this thread is after. Ironic, but I'm loving the amusement this is giving me haha.

https://github.com/mmd123/squid-cache-dynamic_refresh-list

-

An update to the refresh pattern which fixes Invalid regular expression, WARNINGS and UPGRADES

https://github.com/mmd123/squid-cache-dynamic_refresh-list

-

Thank you!! This works great even in 2022

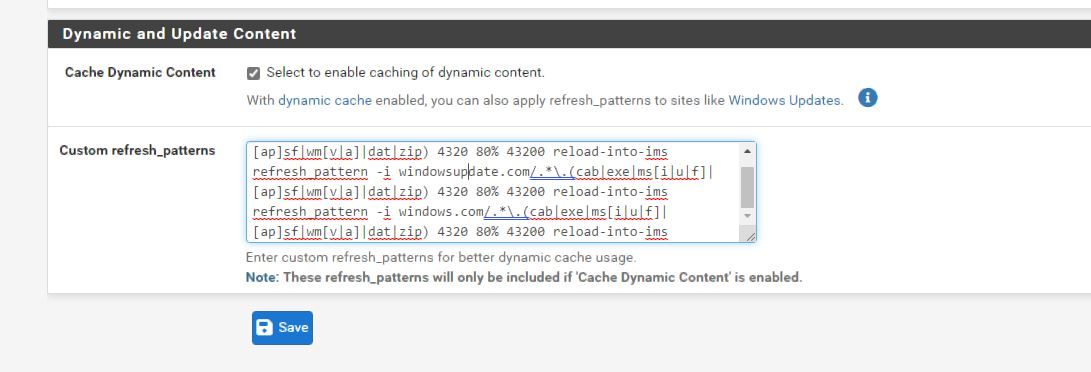

(Image: GUI Refresh added)

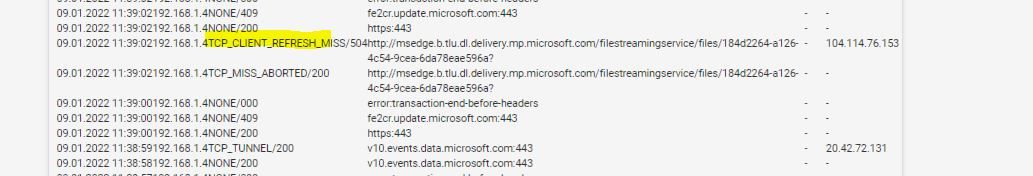

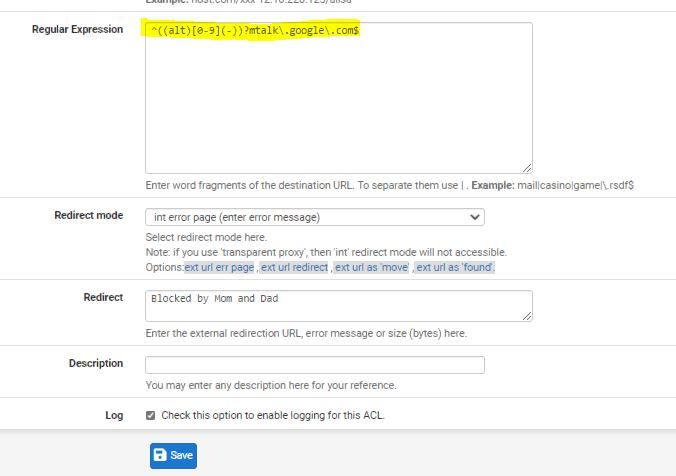

(Image: Showing a refresh is now marked as "miss" so ready for next time)

Side Note: Have you tried blocking by regular expression in SquidGuard? I did that for m.talk.google. I had been researching a way to do this, and last night I found a bit of information on Reddit.

Reason 1. I do not have mtalk installed or in use on any system.

Reason 2. It runs all the time nonstop on any system you have Chrome installed on.

Reason 3. It passed traffic even when not using Chrome.

(Image: SquidGuard regular expression)

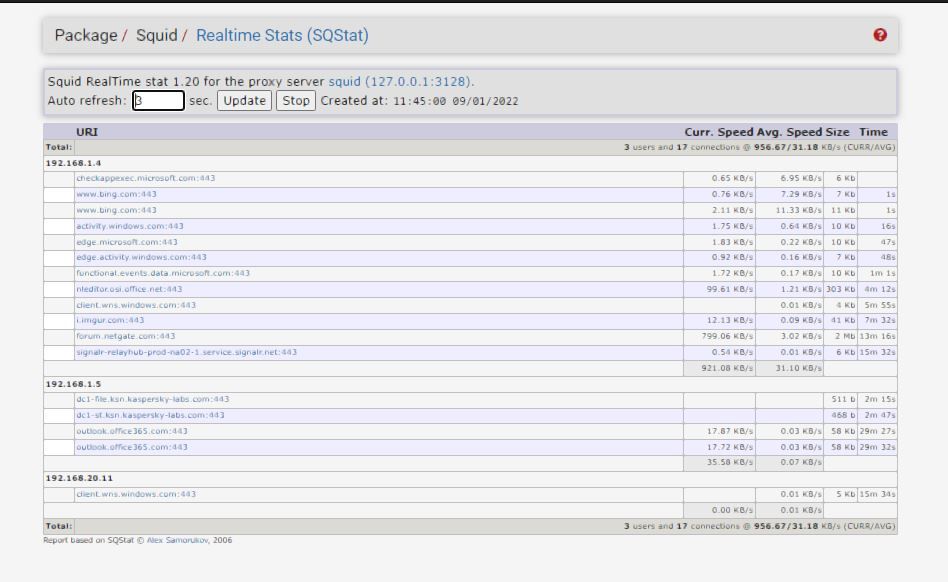

(Image: Mtalk gone!!!)

-

Anyone figure out a way to use this with containers that are used with Docker? A catch and guard for approved containers?

-

@jonathanlee what exactly is it that you want to cache this way for Docker images? Are you wanting to cache the apt packages, or what is it you're after? If it's the apt packages, then I'm pretty confident that they already get cached, regardless of whether they're in Docker or not, because of some of the previous refresh patterns covered. Also, my apologies: I actually haven't been messing with this for a while due to other network complications. I had to uninstall Squid entirely, so I'm a little late responding to any of this.

-

Hello, thank you for the response. Yes, I am trying to cache this way for Docker VM containers/images. Mainly, from a security standpoint, I would like to know if Kali's pentesting toolkit/container is downloaded. Kali is an amazing pentesting tool, however in the wrong hands it can be scary. For one, I would like to have the ability to block that specific container from unapproved use, similar to what SquidGuard does with unwanted URLs. The goal here is to start to compartmentalize Docker containers and other 3rd party VM containers, with a tracking system whose labels function similarly to SquidGuard's "Blacklisting" options. I really would like to learn more about blocking specific Docker containers from being downloaded at all over a firewall with the help of dynamic caching. Working with custom patterns is very new to me. However, for cybersecurity, this is pure gold for current container-based security issues.

With containers now even having the ability to sandbox themselves on user or antivirus discovery, similar to Windows 10's Sandbox software, these unapproved containers are becoming a cybersecurity issue. Today's rapid internet speeds and full VM deployments inside of containers are becoming the norm, as well as a real talking point for cybersecurity. Kali's Docker container would be the main item I want to block to learn with. Once a container is downloaded and in use on a system, that system sometimes cannot see it, or even scan that container with antivirus software, as this container/VM sometimes resides in RAM on some versions. Memory today has reached levels of 16GB and up on some laptops. I for one used to run an 8088 CPU that had a 10MB HDD and MS-DOS 3.11 on it. Now the volatile memory sizes of today create a new issue that plagues our electronic devices if left unchecked.

The main goal is to have the proxy offer container-based security options and measures. This proxy caching looks like a major start toward a solution for such a major issue. Older content accelerators of the past with some new software can fix a lot of container issues. Yes, not all containers are an issue, but like any there are always some bad ones we would like to keep a close eye on.

-

@jonathanlee said in Improve Custom refresh pattern:

Older content accelerators of the past with some new software can fix a lot of container issues. Yes, not all containers are an issue, but like any there are always some bad ones we would like to keep a close eye on.

"Older content accelerators used in the past with some new software can fix a lot of container issues. Yes, not all containers are an issue, but like anything there are always some bugs we would like to keep a close eye on."

-

@jonathanlee assuming you're using pfSense AND Squid, and not just Squid by itself on something like Ubuntu, it might be possible to use the ACL tab to block or restrict access to the specific domains used for the Kali Docker image, and/or find the URLs that the Kali Docker image contacts for updates and block it that way. At this point, though, I feel this is less a job for Squid refresh patterns and more a job for an actual firewall, given you're after blocking and not so much caching, since you want to disallow access to Kali. Unless I am mistaken, this would be better served on the firewall side with access control lists. If you only want to allow certain authorized hosts to use Kali, then you want access control lists to lock down access to specific IP addresses, ranges, or hosts.
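To make the idea concrete, here is a small Python sketch of the decision such an access control list would make. This is not Squid or pfSense code. The blocked domain and the authorized subnet are hypothetical placeholders, and the leading-dot matching is only similar in spirit to how domain ACLs treat subdomains.

```python
import ipaddress

# Hypothetical placeholders: substitute the domains you actually observe
# in your proxy logs and the hosts you want to authorize.
BLOCKED_DOMAINS = {".blocked.example"}
AUTHORIZED_CLIENTS = [ipaddress.ip_network("192.168.1.0/28")]


def domain_matches(host: str, rule: str) -> bool:
    """A leading dot matches the domain itself and all of its subdomains."""
    host = host.lower().rstrip(".")
    if rule.startswith("."):
        return host == rule[1:] or host.endswith(rule)
    return host == rule


def is_allowed(client_ip: str, host: str) -> bool:
    """Allow everything except blocked domains requested by unauthorized clients."""
    if not any(domain_matches(host, rule) for rule in BLOCKED_DOMAINS):
        return True
    addr = ipaddress.ip_address(client_ip)
    return any(addr in net for net in AUTHORIZED_CLIENTS)


print(is_allowed("192.168.1.5", "registry.blocked.example"))   # authorized host
print(is_allowed("192.168.1.50", "registry.blocked.example"))  # outside the /28
print(is_allowed("192.168.1.50", "example.org"))               # domain not blocked
```

The point is only the order of checks: match the destination first, then decide by client address, which is the same shape as a deny rule with an allow-list of hosts in front of it.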

-

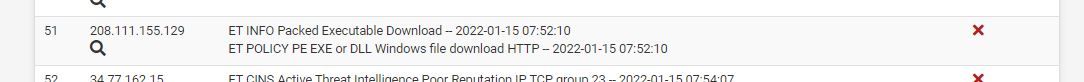

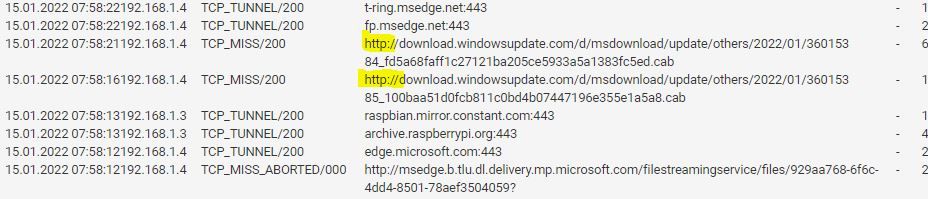

@high_voltage spot on. I added custom URL blocking for Docker, RubyGems, and Hotjar, as well as expressions and blocking of URL shorteners. Thanks for your reply. I also keep having Windows 10 trying to update all of a sudden over HTTP, and the firewall is blocking that as a policy violation.

It's weird; this update was working before. What's confusing is that I can click on that link and it will download directly as well.

I was able to direct the Raspberry Pi to update only over HTTPS using a different mirror. But Windows just keeps trying HTTP.

-

@jonathanlee have you made sure that you cleared out the Squid disk cache after making any and all changes? (Again, assuming you're using Squid on pfSense, which in hindsight I would hope you are, given you're posting here on the pfSense support forum, DUH.) Though, in hindsight, you might not need to do that, since you're not messing with refresh patterns this time but with ACLs instead. Not sure if this would be useful in this case or not (my mind is on 20 different projects right now as I write this reply), but if things ARE being weird, maybe try resetting the states table in pfSense? I have had oddball situations where, after paying a ton of attention to them (more than I should have, to be honest), I finally said "F it" and reset the firewall states table to test whether that cleared them up, and it did, so maybe try that?

Apologies for the hastily typed reply, but as I said, my mind is on 20 different projects at the moment.

-

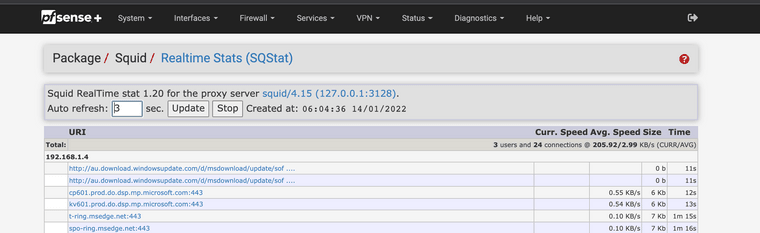

@high_voltage thanks for the reply,

Yes, this Netgate 2100 MAX firewall is running Squid on pfSense with custom refresh patterns and SquidGuard. I also reset the disk cache, and I have WPAD set up for both on and off the Wi-Fi with a smartphone. I even added SSL certificates to everything. I forced all traffic into the proxy ports 3128-3129, and everything works: Hulu, Disney Plus, Amazon Video. I watch X-Files a lot lately over Hulu with this config.

What's weird is that I can take that link and manually download the update in the browser, but somehow the system won't download the update. It's almost as if it's paused; it just gets to 0. My Raspberry Pi was doing this also. I changed the mirrors to only use HTTPS downloads under some settings, and that now updates fine. Again, Windows 10 Pro is not using HTTPS for the antivirus signature updates right now.

I am still new to these refresh options. When you force traffic over the proxy, port 80 still works for everything else; I can access HTTP via web URLs. It can see SquidGuard blocks and will display viruses on ClamAV tests. This thing is a tank. This last configuration fix will make it work perfectly. Why does Microsoft use HTTP for updates? Most of the internet moved to HTTPS. It's weird, right? I am about to factory default it and try again. I also tested NAT with a port redirect. I tested using just transparent mode. Everything works except the Windows 10 Pro updates. It worked last night; however, Microsoft started using HTTPS for a couple of hours.

-

@jonathanlee I'll be totally honest, part of why I recently disabled Squid was similar issues. For whatever reason, Linux updates broke recently when using Squid. Not sure why, but this definitely seems to be an issue right now.