Improve Custom refresh pattern

-

@jonathanlee I'll be totally honest, part of why I recently disabled Squid was for similar issues. For whatever reason, Linux updates broke recently when using Squid. Not sure why, but this definitely seems to be an issue right now.

-

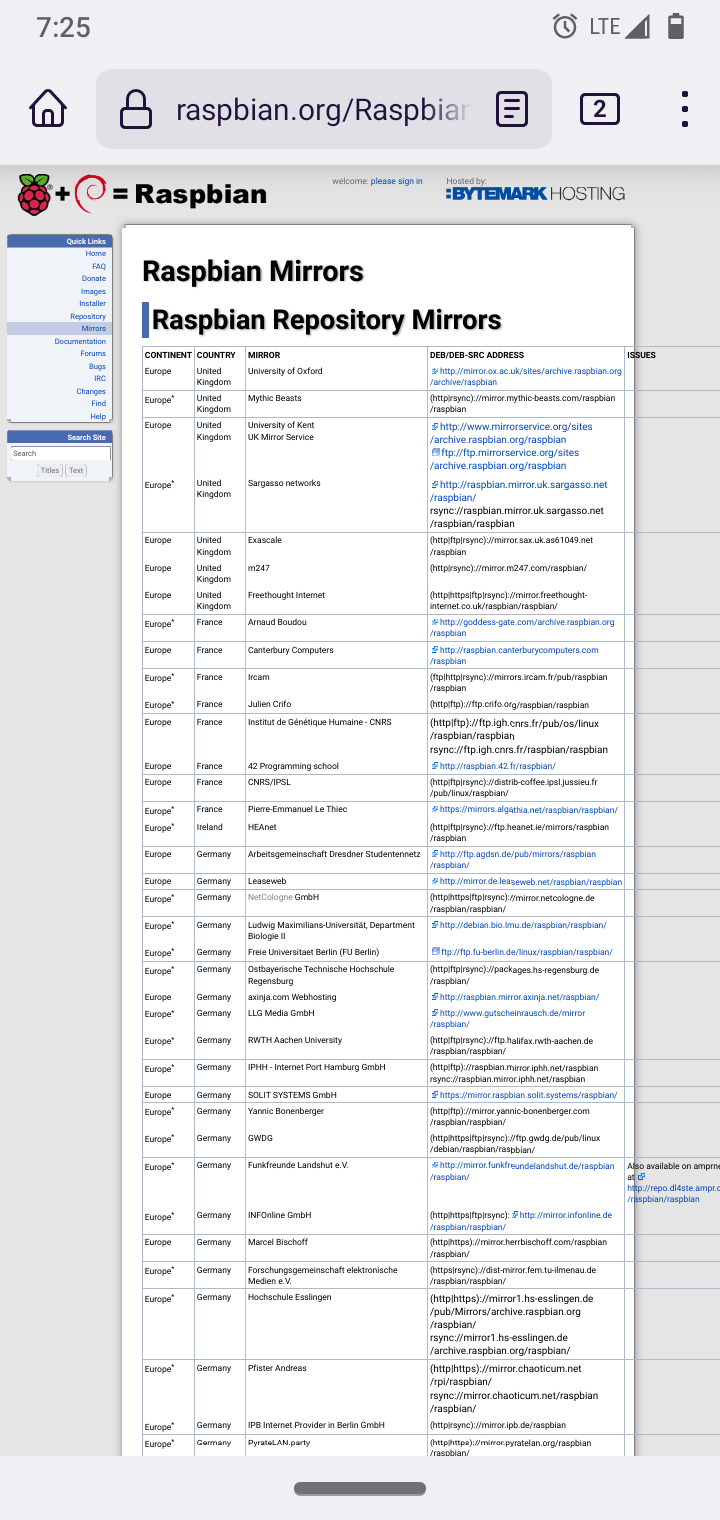

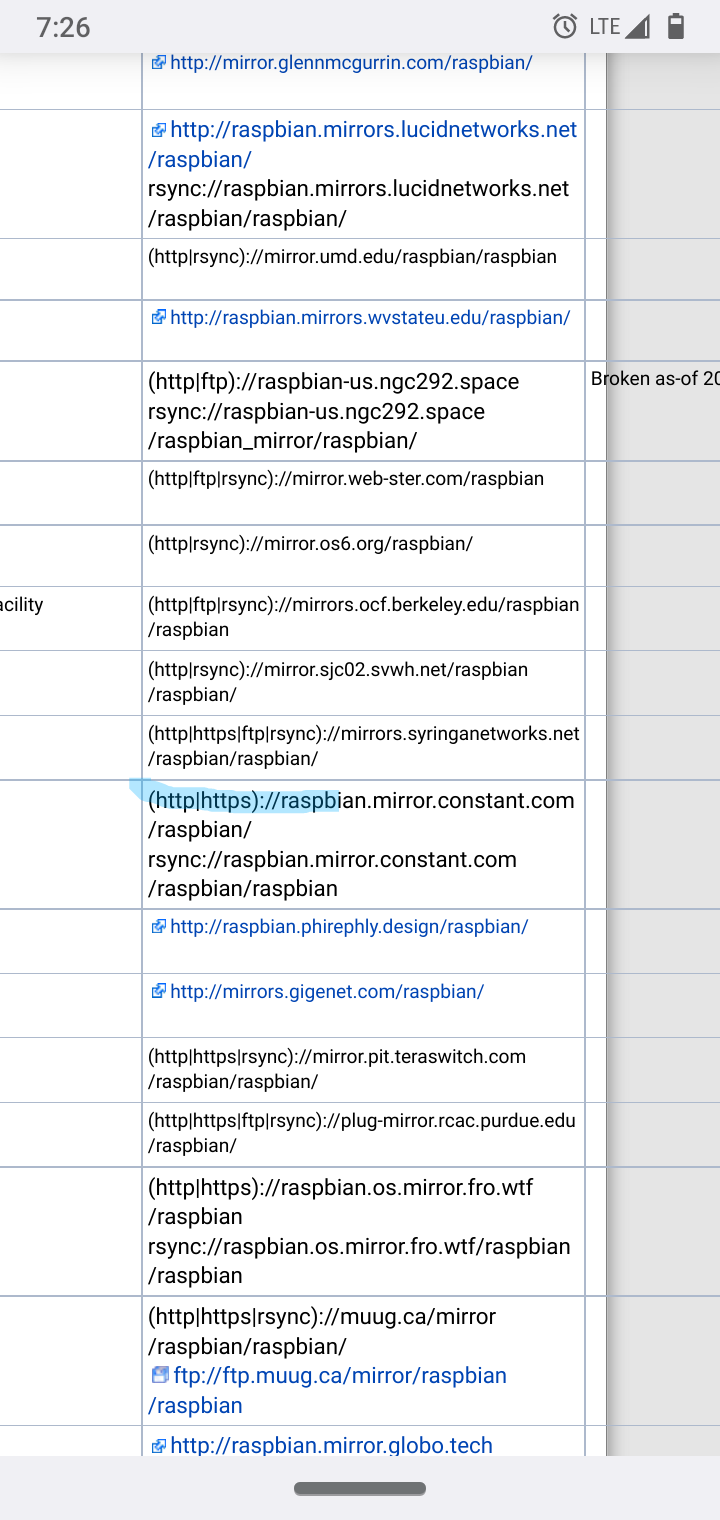

@high_voltage I got my Raspberry Pi to work with a different mirror: I edited the sources to one that allowed https. When I run apt-get update it uses a different mirror now; I use the constant com's mirror.

Edit this file:

/etc/apt/sources.list

Add an https source from the update mirrors. For example, on Raspberry Pi Linux I changed it to an https source.

Check out other countries; some, like Germany, are almost all https.
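
The sources.list change described above can be sketched in Python. This is only a minimal sketch, assuming the chosen mirror really does serve HTTPS (that has to be checked per mirror). The function name `to_https` and the hostname `mirror.example.org` are hypothetical placeholders, and the code rewrites text only rather than touching /etc/apt/sources.list directly.

```python
def to_https(sources_text: str) -> str:
    """Rewrite http:// mirror URLs to https:// in sources.list-style text.

    Comment lines are left untouched. Assumes every mirror listed
    actually supports HTTPS, which is not true of all mirrors.
    """
    out = []
    for line in sources_text.splitlines():
        if line.lstrip().startswith("#"):
            out.append(line)  # keep comments exactly as they are
        else:
            out.append(line.replace("http://", "https://"))
    return "\n".join(out)


# Hypothetical entries; mirror.example.org is a placeholder, not a real mirror.
sample = (
    "deb http://mirror.example.org/raspbian/ bullseye main contrib\n"
    "# deb-src http://mirror.example.org/raspbian/ bullseye main"
)
print(to_https(sample))
```

After changing the real file, apt-get update should show the https mirror being contacted.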

-

@jonathanlee it has got to work the same for refreshers for other Linux flavors also.

-

@jonathanlee that was the only way to get Linux updates to work with Squid for me. It was doing the same thing as Windows updates: Squid would show http, and when you looked at SquidGuard's live connections it would only show 0.

-

Made new post with this specific issue.

https://forum.netgate.com/topic/169166/warning-possible-bypass-attempt-found-multiple-slashes-where-only-one-is-expected-http-dl-delivery-mp-microsoft-com-filestreamingservice-files/3?_=1642466910316

-

Here it is, per your request: a Windows 10 update cached and delivered to another machine. Notice the HIT.

(IMAGE: Windows dynamic refresh patterns to work recently)
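
To check for the HIT without a screenshot, the access log can be scanned with a few lines of Python. This is a rough sketch, assuming Squid's native access.log format, where the fourth whitespace-separated field is the result code and HTTP status (for example TCP_HIT/200 or TCP_MISS/200). The function name `is_cache_hit` and the sample log lines are hypothetical.

```python
def is_cache_hit(log_line: str) -> bool:
    """Return True if a native-format access.log line records a cache hit.

    Treats any result code containing "HIT" (TCP_HIT, TCP_MEM_HIT, ...)
    as a hit. Lines with fewer than four fields are ignored.
    """
    fields = log_line.split()
    if len(fields) < 4:
        return False
    result_code = fields[3].split("/")[0]
    return "HIT" in result_code


# Hypothetical log lines for illustration only.
lines = [
    "1642466910.316     12 192.168.1.44 TCP_HIT/200 1048576 GET "
    "http://example.com/update.cab - HIER_NONE/- application/octet-stream",
    "1642466911.101    850 192.168.1.41 TCP_MISS/200 1048576 GET "
    "http://example.com/update.cab - HIER_DIRECT/203.0.113.10 application/octet-stream",
]
hits = [line for line in lines if is_cache_hit(line)]
print(f"{len(hits)} of {len(lines)} requests were served from cache")
```

On pfSense the log would typically be read from the path set by `access_log` in the Squid config.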

-

@jonathanlee For what it's worth, I literally only discovered 2 weeks ago that a good chunk of my problems were due to transparent Squid and ClamAV. Having ClamAV set to scan all mode was causing random issues I cannot even begin to pinpoint. Setting it to scan web only fixed everything, but with scan all mode set, apt packages would fail when trying to receive header information. Even http connections failed because of this.

-

@high_voltage I think it is the same as if you were to do a ClamAV scan on Kali Linux. So many packages and tools come up as issues when they are in fact only pen-testing tools. In pfSense, curl and many other items are included in packages and may also scan as false positives, since they are not on a client machine but part of a firewall. It should have a scan Squid cache option; that is what should be scanned, right? Think about the number of items stored in the content accelerator that could be invasive. Why does Squid not include scanning the local cache as an option?

-

@jonathanlee no, I mean it broke traffic entirely.

-

@high_voltage wow that's different. I had issues where I needed to clear the cache before the traffic would flow again, almost like a container was in the cache.

-

@jonathanlee Huh? last time I posted in this thread was 4 years ago.

-

@kom sorry I thought you wanted to see a Windows 10 update run that was cached.

-

@jonathanlee Perhaps four years ago I did. I don't remember since it's been four years. I don't even use squid anymore. It's completely useless other than as a base for squidguard URL filtering.

-

@kom I respectfully disagree with "useless". I use it for HTTPS caching, anti-virus scanning of HTTPS and HTTP websites, dynamic caching, URL filtering, and blocking. Don't get me wrong, it is rather complicated to understand; however, the vast ability it has to customize a network environment by need is what sets it apart. It can do many things. It is just a challenge to learn. It has also protected my system from many hidden issues that ClamAV stops and reports with HTTPS, alongside PUP detection, and it generates clear reports. It's mirrored analytics down to a granular level.

-

@kivimart is it working for Squid version 4.45?

-

@dmalick You can use the latest here https://github.com/mmd123/squid-cache-dynamic_refresh-list

Yes it works with the latest squid

-

@ageekhere it is working thanks

-

@dmalick keep in mind that sometimes, if changes in a website are very small, it will still load old information if you use SSL intercept and have it set up to cache https. I have had an issue with a time card that would not load a new line because the watermark was too low to require a refresh; however, a different machine would see the new time card. Just be aware that things are still a work in progress.

-


@jonathanlee my configuration (is it fine?):

    # This file is automatically generated by pfSense
    # Do not edit manually !
    http_port 192.168.1.3:3128 ssl-bump generate-host-certificates=on dynamic_cert_mem_cache_size=50MB cert=/usr/local/etc/squid/serverkey.pem cafile=/usr/local/share/certs/ca-root-nss.crt capath=/usr/local/share/certs/ cipher=EECDH+ECDSA+AESGCM:EECDH+aRSA+AESGCM:EECDH+ECDSA+SHA384:EECDH+ECDSA+SHA256:EECDH+aRSA+SHA384:EECDH+aRSA+SHA256:EECDH+aRSA+RC4:EECDH:EDH+aRSA:HIGH:!RC4:!aNULL:!eNULL:!LOW:!3DES:!MD5:!EXP:!PSK:!SRP:!DSS tls-dh=prime256v1:/etc/dh-parameters.2048 options=NO_SSLv3,SINGLE_DH_USE,SINGLE_ECDH_USE
    icp_port 0
    digest_generation off
    dns_v4_first on
    pid_filename /var/run/squid/squid.pid
    cache_effective_user squid
    cache_effective_group proxy
    error_default_language en
    icon_directory /usr/local/etc/squid/icons
    visible_hostname pfSense.local.dev
    cache_mgr admin@localhost
    access_log /var/squid/logs/access.log
    cache_log /var/squid/logs/cache.log
    cache_store_log none
    netdb_filename /var/squid/logs/netdb.state
    pinger_enable on
    pinger_program /usr/local/libexec/squid/pinger
    sslcrtd_program /usr/local/libexec/squid/security_file_certgen -s /var/squid/lib/ssl_db -M 4MB -b 2048
    tls_outgoing_options cafile=/usr/local/share/certs/ca-root-nss.crt
    tls_outgoing_options capath=/usr/local/share/certs/
    tls_outgoing_options options=NO_SSLv3,SINGLE_DH_USE,SINGLE_ECDH_USE
    tls_outgoing_options cipher=EECDH+ECDSA+AESGCM:EECDH+aRSA+AESGCM:EECDH+ECDSA+SHA384:EECDH+ECDSA+SHA256:EECDH+aRSA+SHA384:EECDH+aRSA+SHA256:EECDH+aRSA+RC4:EECDH:EDH+aRSA:HIGH:!RC4:!aNULL:!eNULL:!LOW:!3DES:!MD5:!EXP:!PSK:!SRP:!DSS
    tls_outgoing_options flags=DONT_VERIFY_PEER
    sslcrtd_children 25
    sslproxy_cert_error allow all
    sslproxy_cert_adapt setValidAfter all
    sslproxy_cert_adapt setValidBefore all
    sslproxy_cert_adapt setCommonName all
    logfile_rotate 7
    debug_options rotate=7
    shutdown_lifetime 3 seconds
    forwarded_for delete
    via off
    httpd_suppress_version_string on
    uri_whitespace strip
    cache_mem 3072 MB
    maximum_object_size_in_memory 51200 KB
    memory_replacement_policy heap GDSF
    cache_replacement_policy heap LFUDA
    minimum_object_size 0 KB
    maximum_object_size 512 MB
    cache_dir aufs /var/squid/cache 20000 32 256
    offline_mode off
    cache_swap_low 90
    cache_swap_high 95
    acl donotcache dstdomain "/var/squid/acl/donotcache.acl"
    cache deny donotcache
    cache allow all
    # Add any of your own refresh_pattern entries above these.
    refresh_pattern ^ftp: 1440 20% 10080
    refresh_pattern ^gopher: 1440 0% 1440
    refresh_pattern -i (/cgi-bin/|\?) 0 0% 0
    refresh_pattern . 0 20% 4320
    #Remote proxies
    # Setup some default acls
    # ACLs all, manager, localhost, and to_localhost are predefined.
    acl allsrc src all
    acl safeports port 21 70 80 210 280 443 488 563 591 631 777 901 8080 3128 3129 1025-65535
    acl sslports port 443 563 8080
    acl purge method PURGE
    acl connect method CONNECT
    # Define protocols used for redirects
    acl HTTP proto HTTP
    acl HTTPS proto HTTPS
    # SslBump Peek and Splice
    # http://wiki.squid-cache.org/Features/SslPeekAndSplice
    # http://wiki.squid-cache.org/ConfigExamples/Intercept/SslBumpExplicit
    # Match against the current step during ssl_bump evaluation [fast]
    # Never matches and should not be used outside the ssl_bump context.
    #
    # At each SslBump step, Squid evaluates ssl_bump directives to find
    # the next bumping action (e.g., peek or splice). Valid SslBump step
    # values and the corresponding ssl_bump evaluation moments are:
    # SslBump1: After getting TCP-level and HTTP CONNECT info.
    # SslBump2: After getting TLS Client Hello info.
    # SslBump3: After getting TLS Server Hello info.
    # These ACLs exist even when 'SSL/MITM Mode' is set to 'Custom' so that
    # they can be used there for custom configuration.
    acl step1 at_step SslBump1
    acl step2 at_step SslBump2
    acl step3 at_step SslBump3
    acl allowed_subnets src 192.168.1.44/32 192.168.1.41/32
    acl whitelist dstdom_regex -i "/var/squid/acl/whitelist.acl"
    acl sslwhitelist ssl::server_name_regex -i "/var/squid/acl/whitelist.acl"
    acl blacklist dstdom_regex -i "/var/squid/acl/blacklist.acl"
    http_access allow manager localhost
    http_access deny manager
    http_access allow purge localhost
    http_access deny purge
    http_access deny !safeports
    http_access deny CONNECT !sslports
    # Always allow localhost connections
    http_access allow localhost
    request_body_max_size 0 KB
    delay_pools 1
    delay_class 1 2
    delay_parameters 1 -1/-1 -1/-1
    delay_initial_bucket_level 100
    delay_access 1 allow allsrc
    # Reverse Proxy settings
    # Custom options before auth
    # Always allow access to whitelist domains
    http_access allow whitelist
    # Block access to blacklist domains
    http_access deny blacklist
    # Set YouTube safesearch restriction
    acl youtubedst dstdomain -n www.youtube.com m.youtube.com youtubei.googleapis.com youtube.googleapis.com www.youtube-nocookie.com
    request_header_access YouTube-Restrict deny all
    request_header_add YouTube-Restrict none youtubedst
    ssl_bump peek step1
    ssl_bump splice sslwhitelist
    ssl_bump bump all
    # Setup allowed ACLs
    http_access allow allowed_subnets
    # Default block all to be sure
    http_access deny allsrc
    icap_enable on
    icap_send_client_ip on
    icap_send_client_username on
    icap_client_username_encode off
    icap_client_username_header X-Authenticated-User
    icap_preview_enable on
    icap_preview_size 1024
    icap_service service_avi_req reqmod_precache icap://127.0.0.1:1344/squid_clamav bypass=on
    adaptation_access service_avi_req allow all
    icap_service service_avi_resp respmod_precache icap://127.0.0.1:1344/squid_clamav bypass=on
    adaptation_access service_avi_resp allow all
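
For anyone wondering which of the refresh_pattern lines in this configuration applies to a given URL, here is a small Python sketch of the lookup. It assumes Squid's documented behavior that the first matching refresh_pattern wins, and it uses Python's `re` module as a stand-in for Squid's own regex engine, which is close enough for these four simple patterns but not identical in general. The names `DEFAULT_PATTERNS` and `match_refresh_pattern` are hypothetical.

```python
import re

# (regex, case_insensitive, min_minutes, percent, max_minutes), in file order.
# Copied from the four refresh_pattern lines in the configuration above.
DEFAULT_PATTERNS = [
    (r"^ftp:", False, 1440, 20, 10080),
    (r"^gopher:", False, 1440, 0, 1440),
    (r"(/cgi-bin/|\?)", True, 0, 0, 0),
    (r".", False, 0, 20, 4320),
]


def match_refresh_pattern(url, patterns=DEFAULT_PATTERNS):
    """Return (regex, min, percent, max) for the first pattern matching url."""
    for regex, case_insensitive, min_m, percent, max_m in patterns:
        flags = re.IGNORECASE if case_insensitive else 0
        if re.search(regex, url, flags):
            return regex, min_m, percent, max_m
    return None  # nothing matched (cannot happen while the "." rule is present)


for url in (
    "ftp://example.com/file.iso",
    "http://example.com/index.php?id=1",
    "http://example.com/update.cab",
):
    print(url, "->", match_refresh_pattern(url))
```

Because the first match wins, custom entries have to sit above the catch-all `.` rule to take effect, which is what the "Add any of your own refresh_pattern entries above these" comment in the generated file is pointing at.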