Pfsense not responding to large packet pings

-

I'd still love to know why you're trying to send such huge packets. I've never seen anyone attempt that. Though I agree, I expect it to work.

Try looking at `netstat -s`. Do you see fragments dropped?

    [22.01-DEVELOPMENT][root@6100.stevew.lan]/root: netstat -sp ip
    ip:
        1139040 total packets received
        0 bad header checksums
        0 with size smaller than minimum
        0 with data size < data length
        0 with ip length > max ip packet size
        0 with header length < data size
        0 with data length < header length
        0 with bad options
        0 with incorrect version number
        0 fragments received
        0 fragments dropped (dup or out of space)
        0 fragments dropped after timeout
        0 packets reassembled ok
        1135659 packets for this host
        3070 packets for unknown/unsupported protocol
        0 packets forwarded (0 packets fast forwarded)
        10 packets not forwardable
        0 packets received for unknown multicast group
        0 redirects sent
        1181042 packets sent from this host
        4 packets sent with fabricated ip header
        0 output packets dropped due to no bufs, etc.
        15 output packets discarded due to no route
        0 output datagrams fragmented
        0 fragments created
        0 datagrams that can't be fragmented
        0 tunneling packets that can't find gif
        0 datagrams with bad address in header

Steve
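To avoid reading the whole dump, the fragment-related counters can be filtered out of the `netstat` output. This is only a small sketch: the function name `frag_counters` is made up for illustration, and it assumes the counter wording shown above.

```shell
#!/bin/sh
# frag_counters: hypothetical helper, not part of pfSense or FreeBSD.
# Reads `netstat -s` style text on stdin and keeps only the lines that
# mention fragments or reassembly.
frag_counters() {
    grep -E 'fragment|reassembled'
}

# Intended use on the firewall (not run here):
#   netstat -sp ip | frag_counters
```

Running it before and after a single large ping and comparing the numbers should show whether the fragments reach the IP stack at all.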

-

Morning guys. Thanks again for the replies.

I am not using the VM NIC or drivers for them. I'm using the Linux drivers for the real card (see link above to information and specs).

I see 0 fragments dropped when I check using that command.

    tcp:
        83035 packets sent
            36238 data packets (41227594 bytes)
            582 data packets (243226 bytes) retransmitted
            6 data packets unnecessarily retransmitted
            0 resends initiated by MTU discovery
            38414 ack-only packets (0 delayed)
            0 URG only packets
            0 window probe packets
            3 window update packets
            7969 control packets
        74803 packets received
            32446 acks (for 41228656 bytes)
            600 duplicate acks
            0 acks for unsent data
            29375 packets (14627752 bytes) received in-sequence
            26 completely duplicate packets (2050 bytes)
            0 old duplicate packets
            0 packets with some dup. data (0 bytes duped)
            11 out-of-order packets (5806 bytes)
            0 packets (0 bytes) of data after window
            0 window probes
            10 window update packets
            11 packets received after close
            304 discarded for bad checksums
            0 discarded for bad header offset fields
            0 discarded because packet too short
            0 discarded due to full reassembly queue
        550 connection requests
        6711 connection accepts
        0 bad connection attempts
        0 listen queue overflows
        83 ignored RSTs in the windows
        7261 connections established (including accepts)
            4975 times used RTT from hostcache
            4975 times used RTT variance from hostcache
            247 times used slow-start threshold from hostcache
        7452 connections closed (including 466 drops)
            4703 connections updated cached RTT on close
            4703 connections updated cached RTT variance on close
            30 connections updated cached ssthresh on close
        0 embryonic connections dropped
        29794 segments updated rtt (of 15066 attempts)
        2225 retransmit timeouts
            179 connections dropped by rexmit timeout
        0 persist timeouts
            0 connections dropped by persist timeout
        0 Connections (fin_wait_2) dropped because of timeout
        0 keepalive timeouts
            0 keepalive probes sent
            0 connections dropped by keepalive
        1855 correct ACK header predictions
        22033 correct data packet header predictions
        6712 syncache entries added
            19 retransmitted
            32 dupsyn
            0 dropped
            6711 completed
            0 bucket overflow
            0 cache overflow
            1 reset
            0 stale
            0 aborted
            0 badack
            0 unreach
            0 zone failures
        6712 cookies sent
        0 cookies received
        116 hostcache entries added
            0 bucket overflow
        29 SACK recovery episodes
        59 segment rexmits in SACK recovery episodes
        84944 byte rexmits in SACK recovery episodes
        563 SACK options (SACK blocks) received
        125 SACK options (SACK blocks) sent
        0 SACK scoreboard overflow
        0 packets with ECN CE bit set
        0 packets with ECN ECT(0) bit set
        0 packets with ECN ECT(1) bit set
        0 successful ECN handshakes
        0 times ECN reduced the congestion window
        0 packets with matching signature received
        0 packets with bad signature received
        0 times failed to make signature due to no SA
        0 times unexpected signature received
        0 times no signature provided by segment
        0 Path MTU discovery black hole detection activations
        0 Path MTU discovery black hole detection min MSS activations
        0 Path MTU discovery black hole detection failures
    TCP connection count by state:
        0 connections in CLOSED state
        6 connections in LISTEN state
        0 connections in SYN_SENT state
        0 connections in SYN_RCVD state
        1 connection in ESTABLISHED state
        0 connections in CLOSE_WAIT state
        0 connections in FIN_WAIT_1 state
        0 connections in CLOSING state
        3 connections in LAST_ACK state
        0 connections in FIN_WAIT_2 state
        3 connections in TIME_WAIT state
    udp:
        783358 datagrams received
        0 with incomplete header
        0 with bad data length field
        0 with bad checksum
        12958 with no checksum
        464 dropped due to no socket
        560538 broadcast/multicast datagrams undelivered
        0 dropped due to full socket buffers
        0 not for hashed pcb
        222356 delivered
        1574821 datagrams output
        0 times multicast source filter matched
    sctp:
        0 input packets
            0 datagrams
            0 packets that had data
            0 input SACK chunks
            0 input DATA chunks
            0 duplicate DATA chunks
            0 input HB chunks
            0 HB-ACK chunks
            0 input ECNE chunks
            0 input AUTH chunks
            0 chunks missing AUTH
            0 invalid HMAC ids received
            0 invalid secret ids received
            0 auth failed
            0 fast path receives all one chunk
            0 fast path multi-part data
        0 output packets
            0 output SACKs
            0 output DATA chunks
            0 retransmitted DATA chunks
            0 fast retransmitted DATA chunks
            0 FR's that happened more than once to same chunk
            0 output HB chunks
            0 output ECNE chunks
            0 output AUTH chunks
            0 ip_output error counter
        Packet drop statistics:
            0 from middle box
            0 from end host
            0 with data
            0 non-data, non-endhost
            0 non-endhost, bandwidth rep only
            0 not enough for chunk header
            0 not enough data to confirm
            0 where process_chunk_drop said break
            0 failed to find TSN
            0 attempt reverse TSN lookup
            0 e-host confirms zero-rwnd
            0 midbox confirms no space
            0 data did not match TSN
            0 TSN's marked for Fast Retran
        Timeouts:
            0 iterator timers fired
            0 T3 data time outs
            0 window probe (T3) timers fired
            0 INIT timers fired
            0 sack timers fired
            0 shutdown timers fired
            0 heartbeat timers fired
            0 a cookie timeout fired
            0 an endpoint changed its cookiesecret
            0 PMTU timers fired
            0 shutdown ack timers fired
            0 shutdown guard timers fired
            0 stream reset timers fired
            0 early FR timers fired
            0 an asconf timer fired
            0 auto close timer fired
            0 asoc free timers expired
            0 inp free timers expired
        0 packet shorter than header
        0 checksum error
        0 no endpoint for port
        0 bad v-tag
        0 bad SID
        0 no memory
        0 number of multiple FR in a RTT window
        0 RFC813 allowed sending
        0 RFC813 does not allow sending
        0 times max burst prohibited sending
        0 look ahead tells us no memory in interface
        0 numbers of window probes sent
        0 times an output error to clamp down on next user send
        0 times sctp_senderrors were caused from a user
        0 number of in data drops due to chunk limit reached
        0 number of in data drops due to rwnd limit reached
        0 times a ECN reduced the cwnd
        0 used express lookup via vtag
        0 collision in express lookup
        0 times the sender ran dry of user data on primary
        0 same for above
        0 sacks the slow way
        0 window update only sacks sent
        0 sends with sinfo_flags !=0
        0 unordered sends
        0 sends with EOF flag set
        0 sends with ABORT flag set
        0 times protocol drain called
        0 times we did a protocol drain
        0 times recv was called with peek
        0 cached chunks used
        0 cached stream oq's used
        0 unread messages abandonded by close
        0 send burst avoidance, already max burst inflight to net
        0 send cwnd full avoidance, already max burst inflight to net
        0 number of map array over-runs via fwd-tsn's
    ip:
        87076069 total packets received
        0 bad header checksums
        0 with size smaller than minimum
        1 with data size < data length
        0 with ip length > max ip packet size
        0 with header length < data size
        0 with data length < header length
        0 with bad options
        0 with incorrect version number
        70 fragments received
        0 fragments dropped (dup or out of space)
        0 fragments dropped after timeout
        23 packets reassembled ok
        844447 packets for this host
        0 packets for unknown/unsupported protocol
        48034746 packets forwarded (12779678 packets fast forwarded)
        2157 packets not forwardable
        0 packets received for unknown multicast group
        0 redirects sent
        983998 packets sent from this host
        0 packets sent with fabricated ip header
        0 output packets dropped due to no bufs, etc.
        0 output packets discarded due to no route
        810 output datagrams fragmented
        2826 fragments created
        0 datagrams that can't be fragmented
        0 tunneling packets that can't find gif
        0 datagrams with bad address in header
    icmp:
        6483 calls to icmp_error
        0 errors not generated in response to an icmp message
        Output histogram:
            echo reply: 3139
            destination unreachable: 6464
            time exceeded: 19
        0 messages with bad code fields
        0 messages less than the minimum length
        0 messages with bad checksum
        0 messages with bad length
        0 multicast echo requests ignored
        0 multicast timestamp requests ignored
        Input histogram:
            echo reply: 82
            destination unreachable: 63
            echo: 3139
            time exceeded: 51
        3139 message responses generated
        0 invalid return addresses
        0 no return routes
        ICMP address mask responses are disabled
    igmp:
        0 messages received
        0 messages received with too few bytes
        0 messages received with wrong TTL
        0 messages received with bad checksum
        0 V1/V2 membership queries received
        0 V3 membership queries received
        0 membership queries received with invalid field(s)
        0 general queries received
        0 group queries received
        0 group-source queries received
        0 group-source queries dropped
        0 membership reports received
        0 membership reports received with invalid field(s)
        0 membership reports received for groups to which we belong
        0 V3 reports received without Router Alert
        2 membership reports sent
    ipsec:
        0 inbound packets violated process security policy
        0 inbound packets failed due to insufficient memory
        0 invalid inbound packets
        0 outbound packets violated process security policy
        0 outbound packets with no SA available
        0 outbound packets failed due to insufficient memory
        0 outbound packets with no route available
        0 invalid outbound packets
        0 outbound packets with bundled SAs
        0 spd cache hits
        0 spd cache misses
        0 clusters copied during clone
        0 mbufs inserted during makespace
    ah:
        0 packets shorter than header shows
        0 packets dropped; protocol family not supported
        0 packets dropped; no TDB
        0 packets dropped; bad KCR
        0 packets dropped; queue full
        0 packets dropped; no transform
        0 replay counter wraps
        0 packets dropped; bad authentication detected
        0 packets dropped; bad authentication length
        0 possible replay packets detected
        0 packets in
        0 packets out
        0 packets dropped; invalid TDB
        0 bytes in
        0 bytes out
        0 packets dropped; larger than IP_MAXPACKET
        0 packets blocked due to policy
        0 crypto processing failures
        0 tunnel sanity check failures
    esp:
        0 packets shorter than header shows
        0 packets dropped; protocol family not supported
        0 packets dropped; no TDB
        0 packets dropped; bad KCR
        0 packets dropped; queue full
        0 packets dropped; no transform
        0 packets dropped; bad ilen
        0 replay counter wraps
        0 packets dropped; bad encryption detected
        0 packets dropped; bad authentication detected
        0 possible replay packets detected
        0 packets in
        0 packets out
        0 packets dropped; invalid TDB
        0 bytes in
        0 bytes out
        0 packets dropped; larger than IP_MAXPACKET
        0 packets blocked due to policy
        0 crypto processing failures
        0 tunnel sanity check failures
    ipcomp:
        0 packets shorter than header shows
        0 packets dropped; protocol family not supported
        0 packets dropped; no TDB
        0 packets dropped; bad KCR
        0 packets dropped; queue full
        0 packets dropped; no transform
        0 replay counter wraps
        0 packets in
        0 packets out
        0 packets dropped; invalid TDB
        0 bytes in
        0 bytes out
        0 packets dropped; larger than IP_MAXPACKET
        0 packets blocked due to policy
        0 crypto processing failures
        0 packets sent uncompressed; size < compr. algo. threshold
        0 packets sent uncompressed; compression was useless
    pim:
        0 messages received
        0 bytes received
        0 messages received with too few bytes
        0 messages received with bad checksum
        0 messages received with bad version
        0 data register messages received
        0 data register bytes received
        0 data register messages received on wrong iif
        0 bad registers received
        0 data register messages sent
        0 data register bytes sent
    carp:
        0 packets received (IPv4)
        0 packets received (IPv6)
        0 packets discarded for wrong TTL
        0 packets shorter than header
        0 discarded for bad checksums
        0 discarded packets with a bad version
        0 discarded because packet too short
        0 discarded for bad authentication
        0 discarded for bad vhid
        0 discarded because of a bad address list
        0 packets sent (IPv4)
        0 packets sent (IPv6)
        0 send failed due to mbuf memory error
    pfsync:
        0 packets received (IPv4)
        0 packets received (IPv6)
        0 clear all requests received
        0 state inserts received
        0 state inserted acks received
        0 state updates received
        0 compressed state updates received
        0 uncompressed state requests received
        0 state deletes received
        0 compressed state deletes received
        0 fragment inserts received
        0 fragment deletes received
        0 bulk update marks received
        0 TDB replay counter updates received
        0 end of frame marks received
        /0 packets discarded for bad interface
        0 packets discarded for bad ttl
        0 packets shorter than header
        0 packets discarded for bad version
        0 packets discarded for bad HMAC
        0 packets discarded for bad action
        0 packets discarded for short packet
        0 states discarded for bad values
        0 stale states
        0 failed state lookup/inserts
        0 packets sent (IPv4)
        0 packets sent (IPv6)
        0 clear all requests sent
        0 state inserts sent
        0 state inserted acks sent
        0 state updates sent
        0 compressed state updates sent
        0 uncompressed state requests sent
        0 state deletes sent
        0 compressed state deletes sent
        0 fragment inserts sent
        0 fragment deletes sent
        0 bulk update marks sent
        0 TDB replay counter updates sent
        0 end of frame marks sent
        0 failures due to mbuf memory error
        0 send errors
    arp:
        2989 ARP requests sent
        47409 ARP replies sent
        203553 ARP requests received
        1558 ARP replies received
        205111 ARP packets received
        2481 total packets dropped due to no ARP entry
        1229 ARP entrys timed out
        0 Duplicate IPs seen
    ip6:
        23482557 total packets received
        0 with size smaller than minimum
        0 with data size < data length
        0 with bad options
        0 with incorrect version number
        0 fragments received
        0 fragments dropped (dup or out of space)
        0 fragments dropped after timeout
        0 fragments that exceeded limit
        0 atomic fragments
        0 packets reassembled ok
        160041 packets for this host
        17515875 packets forwarded
        0 packets not forwardable
        959 redirects sent
        866221 packets sent from this host
        0 packets sent with fabricated ip header
        0 output packets dropped due to no bufs, etc.
        0 output packets discarded due to no route
        0 output datagrams fragmented
        959 fragments created
        0 datagrams that can't be fragmented
        0 packets that violated scope rules
        108127 multicast packets which we don't join
        Input histogram:
            hop by hop: 13610
            TCP: 14062747
            UDP: 9169861
            fragment: 959
            ESP: 36
            ICMP6: 235344
        Mbuf statistics:
            480 one mbuf
            two or more mbuf:
                lo0= 27250
            23454827 one ext mbuf
            0 two or more ext mbuf
        0 packets whose headers are not contiguous
        0 tunneling packets that can't find gif
        0 packets discarded because of too many headers
        282 failures of source address selection
        source addresses on an outgoing I/F
            273468 link-locals
            559008 globals
        source addresses on a non-outgoing I/F
            3431 globals
            282 addresses scope=0xf
        source addresses of same scope
            273468 link-locals
            318195 globals
        source addresses of a different scope
            244244 globals
        Source addresses selection rule applied:
            835907 first candidate
            282 same address
            562439 appropriate scope
    icmp6:
        955 calls to icmp6_error
        0 errors not generated in response to an icmp6 message
        0 errors not generated because of rate limitation
        Output histogram:
            unreach: 951
            time exceed: 4
            echo reply: 5
            router solicitation: 2
            router advertisement: 27400
            neighbor solicitation: 64021
            neighbor advertisement: 29642
            MLDv2 listener report: 16
        0 messages with bad code fields
        0 messages < minimum length
        0 bad checksums
        0 messages with bad length
        Input histogram:
            unreach: 1
            echo: 5
            router solicitation: 2339
            router advertisement: 32621
            neighbor solicitation: 29642
            neighbor advertisement: 60940
        Histogram of error messages to be generated:
            0 no route
            0 administratively prohibited
            0 beyond scope
            941 address unreachable
            10 port unreachable
            0 packet too big
            4 time exceed transit
            0 time exceed reassembly
            0 erroneous header field
            0 unrecognized next header
            0 unrecognized option
            959 redirect
            0 unknown
        5 message responses generated
        0 messages with too many ND options
        0 messages with bad ND options
        0 bad neighbor solicitation messages
        0 bad neighbor advertisement messages
        0 bad router solicitation messages
        0 bad router advertisement messages
        0 bad redirect messages
        0 path MTU changes
    ipsec6:
        0 inbound packets violated process security policy
        0 inbound packets failed due to insufficient memory
        0 invalid inbound packets
        0 outbound packets violated process security policy
        0 outbound packets with no SA available
        0 outbound packets failed due to insufficient memory
        0 outbound packets with no route available
        0 invalid outbound packets
        0 outbound packets with bundled SAs
        0 spd cache hits
        0 spd cache misses
        0 clusters copied during clone
        0 mbufs inserted during makespace
    rip6:
        0 messages received
        0 checksum calculations on inbound
        0 messages with bad checksum
        0 messages dropped due to no socket
        0 multicast messages dropped due to no socket
        0 messages dropped due to full socket buffers
        0 delivered
        0 datagrams output

Maybe it's a bad driver?

Not sure if the driver is loaded in VMware ESXi or in pfSense now. I can't remember, and I also don't know many Linux commands. I think it might have been loaded in VMware, and ESXi passes the device through to the VM.

-

@gemeenaapje

Do you avoid the "Why do you need 65K packets" question intentionally?

/Bingo

-

@bingo600 Oh sorry, not at all. Thought I had already mentioned.

It's an old software system which streams medical images and videos.

All I know is that the system has problems when large packets don't get through to the destination in a stable manner. So while investigating it, I just wanted to understand it better myself. Hence I started playing with my own network at home and found I have similar problems with the pfSense box. It might also explain why I get regular network warnings when playing computer games.

Finding a solution for my work isn't a big issue - I just tell the hospital involved to look into it, as we're not responsible for their network.

But for my home network I'd love to figure it out and learn in the process.

-

@gemeenaapje said in Pfsense not responding to large packet pings:

Might also explain why I get regular network warnings when playing computer games too.

Your game is not putting 65k sized data on the wire..

Also for work - what size is this old stuff putting on the wire... I have a really hard time believing it's 65k either..

-

@gemeenaapje said in Pfsense not responding to large packet pings:

fibre from the ISP and the other glass fibre going to a 10gb/s switch.

Are all the interfaces Ethernet?

I only ask because many years ago we had a mix of FDDI & 100baseT in the same Chipcom chassis. FDDI had a frame size of 4.5k bytes, and as a result we had to drop the frame size on every FDDI client.

-

@johnpoz said in Pfsense not responding to large packet pings:

I have a really hard time believing its 65k either..

Yep, even with jumbo frames, you're not likely to see that. The most I've seen that a switch can handle is 16K and typical use is 9K.

-

IIRC, we had 4K frames on token ring, when I was at IBM.

-

@gemeenaapje said in Pfsense not responding to large packet pings:

70 fragments received

That doesn't look like enough...

What are the NICs you are passing through? How do they appear in pfSense in `pciconf -lv`, for example?

I could definitely believe this is some NIC hardware offloading or driver issue.

The frame size is not really relevant here. I would expect to be able to pass a 65K packet in fragments over a link of any MTU size (within reason!), as long as it's correctly fragmented and reassembled, which seems to be failing here.
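As a rough sanity check on the fragment counters, the number of fragments a single large ping should produce can be worked out by hand. The sketch below assumes plain IPv4 with a 20-byte header and no options, plus the 8-byte ICMP echo header; `fragments_needed` is a made-up helper name used only for illustration.

```shell
#!/bin/sh
# fragments_needed PAYLOAD MTU
# Hypothetical helper: estimates how many IPv4 fragments one ICMP echo
# with PAYLOAD data bytes needs on a link with the given MTU.
fragments_needed() {
    payload=$1
    mtu=$2
    ip_data=$((payload + 8))               # ICMP echo header adds 8 bytes
    per_frag=$(( (mtu - 20) / 8 * 8 ))     # room after a 20-byte IPv4 header,
                                           # rounded down to a multiple of 8
    echo $(( (ip_data + per_frag - 1) / per_frag ))   # ceiling division
}

fragments_needed 65500 1500   # prints 45
```

So one 65500-byte ping over a 1500-byte MTU should show up as roughly 45 received fragments on its own, which gives a yardstick for the "fragments received" counter.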

Steve

-

@stephenw10 said in Pfsense not responding to large packet pings:

The frame size is not really relevant here

Very true..

-

@stephenw10 Hi Steve.

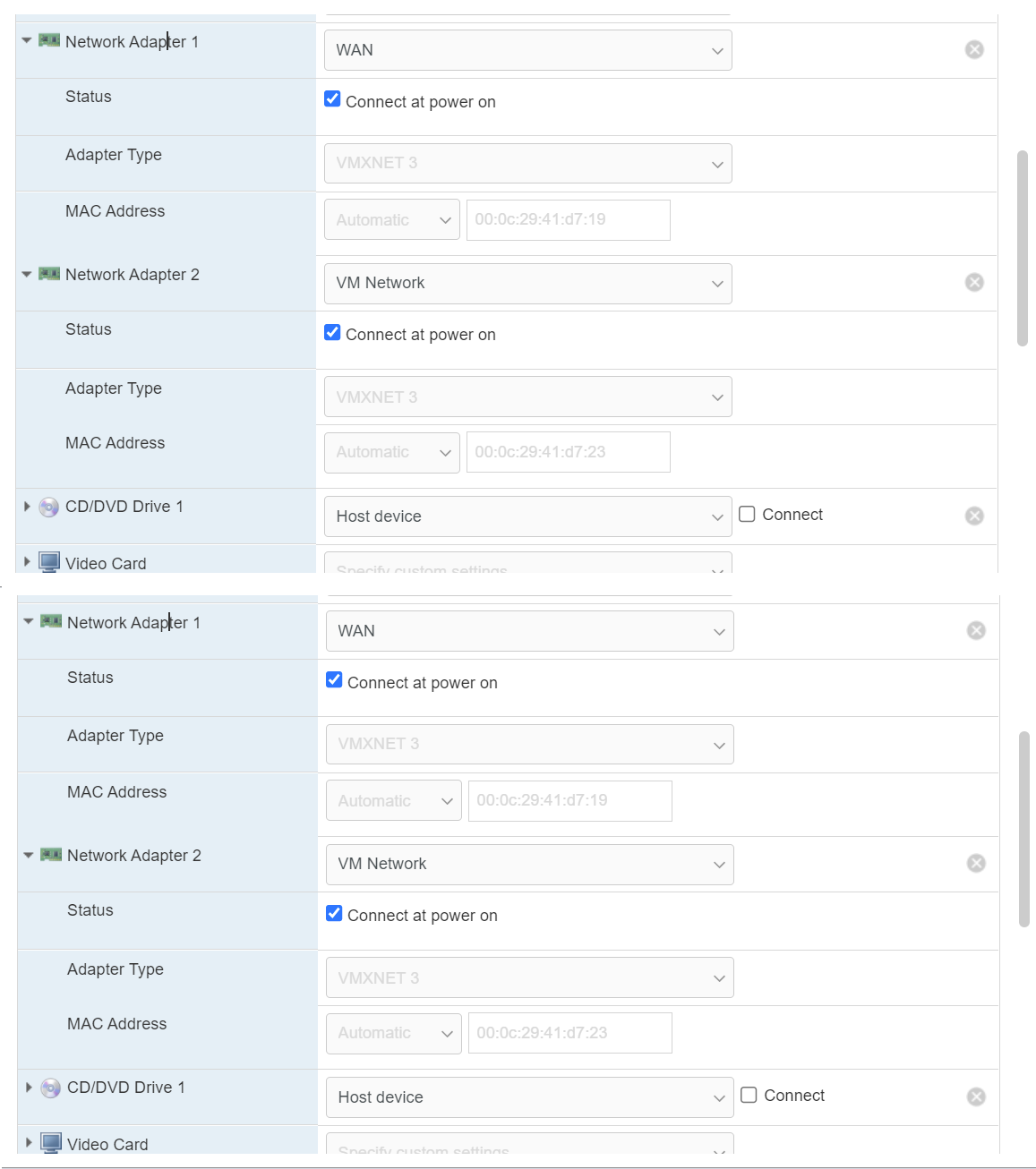

Here's the results of that command....

    hostb0@pci0:0:0:0: class=0x060000 card=0x197615ad chip=0x71908086 rev=0x01 hdr=0x00
        vendor = 'Intel Corporation'
        device = '440BX/ZX/DX - 82443BX/ZX/DX Host bridge'
        class = bridge
        subclass = HOST-PCI
    pcib1@pci0:0:1:0: class=0x060400 card=0x00000000 chip=0x71918086 rev=0x01 hdr=0x01
        vendor = 'Intel Corporation'
        device = '440BX/ZX/DX - 82443BX/ZX/DX AGP bridge'
        class = bridge
        subclass = PCI-PCI
    isab0@pci0:0:7:0: class=0x060100 card=0x197615ad chip=0x71108086 rev=0x08 hdr=0x00
        vendor = 'Intel Corporation'
        device = '82371AB/EB/MB PIIX4 ISA'
        class = bridge
        subclass = PCI-ISA
    atapci0@pci0:0:7:1: class=0x01018a card=0x197615ad chip=0x71118086 rev=0x01 hdr=0x00
        vendor = 'Intel Corporation'
        device = '82371AB/EB/MB PIIX4 IDE'
        class = mass storage
        subclass = ATA
    intsmb0@pci0:0:7:3: class=0x068000 card=0x197615ad chip=0x71138086 rev=0x08 hdr=0x00
        vendor = 'Intel Corporation'
        device = '82371AB/EB/MB PIIX4 ACPI'
        class = bridge
    none0@pci0:0:7:7: class=0x088000 card=0x074015ad chip=0x074015ad rev=0x10 hdr=0x00
        vendor = 'VMware'
        device = 'Virtual Machine Communication Interface'
        class = base peripheral
    vgapci0@pci0:0:15:0: class=0x030000 card=0x040515ad chip=0x040515ad rev=0x00 hdr=0x00
        vendor = 'VMware'
        device = 'SVGA II Adapter'
        class = display
        subclass = VGA
    mpt0@pci0:0:16:0: class=0x010000 card=0x197615ad chip=0x00301000 rev=0x01 hdr=0x00
        vendor = 'Broadcom / LSI'
        device = '53c1030 PCI-X Fusion-MPT Dual Ultra320 SCSI'
        class = mass storage
        subclass = SCSI
    pcib2@pci0:0:17:0: class=0x060401 card=0x079015ad chip=0x079015ad rev=0x02 hdr=0x01
        vendor = 'VMware'
        device = 'PCI bridge'
        class = bridge
        subclass = PCI-PCI
    pcib3@pci0:0:21:0: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib4@pci0:0:21:1: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib5@pci0:0:21:2: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib6@pci0:0:21:3: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib7@pci0:0:21:4: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib8@pci0:0:21:5: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib9@pci0:0:21:6: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib10@pci0:0:21:7: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib11@pci0:0:22:0: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib12@pci0:0:22:1: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib13@pci0:0:22:2: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib14@pci0:0:22:3: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib15@pci0:0:22:4: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib16@pci0:0:22:5: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib17@pci0:0:22:6: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib18@pci0:0:22:7: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib19@pci0:0:23:0: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib20@pci0:0:23:1: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib21@pci0:0:23:2: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib22@pci0:0:23:3: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib23@pci0:0:23:4: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib24@pci0:0:23:5: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib25@pci0:0:23:6: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib26@pci0:0:23:7: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib27@pci0:0:24:0: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib28@pci0:0:24:1: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib29@pci0:0:24:2: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib30@pci0:0:24:3: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib31@pci0:0:24:4: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib32@pci0:0:24:5: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib33@pci0:0:24:6: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    pcib34@pci0:0:24:7: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01
        vendor = 'VMware'
        device = 'PCI Express Root Port'
        class = bridge
        subclass = PCI-PCI
    uhci0@pci0:2:0:0: class=0x0c0300 card=0x197615ad chip=0x077415ad rev=0x00 hdr=0x00
        vendor = 'VMware'
        device = 'USB1.1 UHCI Controller'
        class = serial bus
        subclass = USB
    ehci0@pci0:2:3:0: class=0x0c0320 card=0x077015ad chip=0x077015ad rev=0x00 hdr=0x00
        vendor = 'VMware'
        device = 'USB2 EHCI Controller'
        class = serial bus
        subclass = USB
    vmx0@pci0:3:0:0: class=0x020000 card=0x07b015ad chip=0x07b015ad rev=0x01 hdr=0x00
        vendor = 'VMware'
        device = 'VMXNET3 Ethernet Controller'
        class = network
        subclass = ethernet
    vmx1@pci0:11:0:0: class=0x020000 card=0x07b015ad chip=0x07b015ad rev=0x01 hdr=0x00
        vendor = 'VMware'
        device = 'VMXNET3 Ethernet Controller'
        class = network
        subclass = ethernet
    bxe0@pci0:19:0:0: class=0x020000 card=0x3382103c chip=0x168e14e4 rev=0x10 hdr=0x00
        vendor = 'Broadcom Inc. and subsidiaries'
        device = 'NetXtreme II BCM57810 10 Gigabit Ethernet'
        class = network
        subclass = ethernet
    bxe1@pci0:19:0:1: class=0x020000 card=0x3382103c chip=0x168e14e4 rev=0x10 hdr=0x00
        vendor = 'Broadcom Inc. and subsidiaries'
        device = 'NetXtreme II BCM57810 10 Gigabit Ethernet'
        class = network
        subclass = ethernet

Sorry, I was also mistaken: the VM NICs are connected in the guest. As backups, I think; it's been so long since I set them up that I forget.

For the HP SFP+ NIC, one connects the server to the main switch (fibre). The other connects straight in from my fibre ISP

-

Do you see the same thing if you use the vmx interfaces instead or is it just on the bxe NICs?

Do you have all the hardware off-loading disabled?
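One way to check what the driver actually has enabled, independent of the GUI settings, is to read the `options=` line that `ifconfig` prints for the interface. The sketch below is an illustration only: `offload_flags` is a made-up name, and the list of flag names it looks for is an assumption about which capabilities count as offloading here.

```shell
#!/bin/sh
# offload_flags: hypothetical helper.
# Reads `ifconfig <interface>` output on stdin and prints, one per line,
# the offload-related capabilities found on the interface's options= line.
# The "nd6 options=" line is skipped because it does not start with options=.
offload_flags() {
    sed -n 's/^[[:space:]]*options=[0-9a-f]*<\([^>]*\)>.*/\1/p' |
        head -n 1 |
        tr ',' '\n' |
        grep -E '^(RXCSUM|TXCSUM|RXCSUM_IPV6|TXCSUM_IPV6|TSO4|TSO6|LRO|VLAN_HWTSO)$' || true
}

# Intended use on the firewall (not run here):
#   ifconfig bxe0 | offload_flags
```

An empty result would suggest that checksum, TSO and LRO offloading are all off on that interface; any names printed are still active.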

-

@stephenw10 said in Pfsense not responding to large packet pings:

Do you see the same thing if you use the vmx interfaces instead or is it just on the bxe NICs?

Do you have all the hardware off-loading disabled?

I tried unticking the "disable" options (so enabling offload) one by one, but that didn't help. So I disabled offloading again.

The router is being used for a web server I run at home, so I can't risk changing the settings and swapping NICs or my customers will get upset. It also took me so long just to get this working with all the VLAN tags and stuff coming from the ISP.

Is there anything else I could test?

-

@gemeenaapje said in Pfsense not responding to large packet pings:

Is there anything else I could test?

Yeah ;)

Change the title of the thread, as it is pretty clear by now that "big packets" is a hardware/driver issue.

Plenty of proof shown above that pfSense itself can go to 65xxx.

@gemeenaapje said in Pfsense not responding to large packet pings:

Also took me so long ....

Well, let's say you're not finished yet.

Go bare bone with pfSense, exclude the VM from the configuration and you're ok.

The VM support might be able to tell you if very big packets are possible..

-

@gertjan Can't change title, too much time has passed it says.

Other VMs respond fine to 65500 over the network. It's just pfsense which is using the direct passthrough of the additional NIC card.

It must be the drivers then, I just don't know where to start with it :-/ Linux is so difficult.

Having the VM gives me a huge advantage over bare bone in that I can, if necessary, quickly roll back broken upgrades or changes. I've done this many times and it reduces downtime for my business.

-

Ok something just came back to me!

I'm using the drivers on the pfsense box, not the VM. So the pfsense OS controls the drivers.

The commands for the drivers are here:

https://www.freebsd.org/cgi/man.cgi?query=bxe&sektion=4

I see settings there for offloading too.

Is it necessary for me to change some of these settings or should the normal web config page for pfsense control these too?

Here's a dump of some settings:

    hw.bxe.udp_rss: 0
    hw.bxe.autogreeen: 0
    hw.bxe.mrrs: -1
    hw.bxe.max_aggregation_size: 32768
    hw.bxe.rx_budget: -1
    hw.bxe.hc_tx_ticks: 50
    hw.bxe.hc_rx_ticks: 25
    hw.bxe.max_rx_bufs: 4080
    hw.bxe.queue_count: 4
    hw.bxe.interrupt_mode: 2
    hw.bxe.debug: 0
    dev.bxe.1.queue.3.nsegs_path2_errors: 0
    dev.bxe.1.queue.3.nsegs_path1_errors: 0
    dev.bxe.1.queue.3.tx_mq_not_empty: 0
    dev.bxe.1.queue.3.bd_avail_too_less_failures: 0
    dev.bxe.1.queue.3.tx_request_link_down_failures: 1
    dev.bxe.1.queue.3.bxe_tx_mq_sc_state_failures: 0
    dev.bxe.1.queue.3.tx_queue_full_return: 0
    dev.bxe.1.queue.3.mbuf_alloc_tpa: 64
    dev.bxe.1.queue.3.mbuf_alloc_sge: 1020
    dev.bxe.1.queue.3.mbuf_alloc_rx: 4080
    dev.bxe.1.queue.3.mbuf_alloc_tx: 0
    dev.bxe.1.queue.3.mbuf_rx_sge_mapping_failed: 0
    dev.bxe.1.queue.3.mbuf_rx_sge_alloc_failed: 0
    dev.bxe.1.queue.3.mbuf_rx_tpa_mapping_failed: 0
    dev.bxe.1.queue.3.mbuf_rx_tpa_alloc_failed: 0
    dev.bxe.1.queue.3.mbuf_rx_bd_mapping_failed: 0
    dev.bxe.1.queue.3.mbuf_rx_bd_alloc_failed: 0
    dev.bxe.1.que

-

@gemeenaapje said in Pfsense not responding to large packet pings:

Here's a dump of some settings:

Looks like these settings belong in /boot/loader.conf.local
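Before changing any tunables, it may be worth checking whether the driver's own counters report problems. The sketch below assumes the `name: value` layout of the dump quoted above and treats any counter whose name contains "fail" or "error" as interesting; `nonzero_errors` is a made-up name.

```shell
#!/bin/sh
# nonzero_errors: hypothetical helper.
# Reads `sysctl` style "name: value" lines on stdin and prints only the
# failure/error counters whose value is not zero.
nonzero_errors() {
    awk -F': ' '/fail|error/ && $2 + 0 != 0 { print }'
}

# Intended use on the firewall (not run here):
#   sysctl dev.bxe | nonzero_errors
```

In the part of the dump that was posted, the only line this would keep is `dev.bxe.1.queue.3.tx_request_link_down_failures: 1`.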

-

@gertjan Looks like it's already disabled....

    bxe1: flags=8943<UP,BROADCAST,RUNNING,PROMISC,SIMPLEX,MULTICAST> metric 0 mtu 1500
        description: LAN
        options=120b8<VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,WOL_MAGIC,VLAN_HWFILTER>
        ether 10:60:4b:b4:a0:44
        inet6 fe80xxxxxxxxxxxa044%bxe1 prefixlen 64 scopeid 0x4
        inet6 200xxxxxxxxxxxxx prefixlen 64
        inet 192.168.2.2 netmask 0xffffff00 broadcast 192.168.2.255
        media: Ethernet autoselect (10Gbase-SR <full-duplex>)
        status: active
        nd6 options=21<PERFORMNUD,AUTO_LINKLOCAL>

-

Strange, it looks like offloading (and other) settings are different for WAN vs LAN.

I've no idea what I should be using though.

    bxe0: flags=8843<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST> metric 0 mtu 1508
        options=527bb<RXCSUM,TXCSUM,VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,TSO4,TSO6,LRO,WOL_MAGIC,VLAN_HWFILTER,VLAN_HWTSO>
        ether 10:60:4b:b4:a0:40
        inet6 fe80:***************40%bxe0 prefixlen 64 scopeid 0x3
        media: Ethernet autoselect (10Gbase-SR <full-duplex>)
        status: active
        nd6 options=21<PERFORMNUD,AUTO_LINKLOCAL>
    bxe1: flags=8943<UP,BROADCAST,RUNNING,PROMISC,SIMPLEX,MULTICAST> metric 0 mtu 1500
        description: LAN
        options=120b8<VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,WOL_MAGIC,VLAN_HWFILTER>
        ether 10:60:4b:b4:a0:44
        inet6 fe80xxxxxxxxxxxa044%bxe1 prefixlen 64 scopeid 0x4
        inet6 200xxxxxxxxxxxxx prefixlen 64
        inet 192.168.2.2 netmask 0xffffff00 broadcast 192.168.2.255
        media: Ethernet autoselect (10Gbase-SR <full-duplex>)
        status: active
        nd6 options=21<PERFORMNUD,AUTO_LINKLOCAL>

-

Do they have the same capabilities? Try:

    ifconfig -vvvma

Are those vmxnet NICs currently assigned to anything in the pfSense VM?

If not try assigning one to something and see if that responds to large packets.

This seems likely to be an issue with the bxe driver or the NIC itself but we need to confirm that by, for example, showing vmx is not affected.
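To compare interfaces, the same set of ping sizes can be tried against the address on each one. This is a minimal sketch: `largest_ping_ok` and the `PING` override variable are made up for illustration, and it assumes a `ping` that accepts `-c` for the count and `-s` for the payload size.

```shell
#!/bin/sh
# largest_ping_ok HOST SIZE...
# Hypothetical helper: sends one echo request per listed payload size and
# prints the largest size that got a reply (0 if none did).
# PING can be set to substitute another command for ping.
largest_ping_ok() {
    host=$1
    shift
    best=0
    for size in "$@"; do
        if ${PING:-ping} -c 1 -s "$size" "$host" >/dev/null 2>&1; then
            [ "$size" -gt "$best" ] && best=$size
        fi
    done
    echo "$best"
}
```

For example, `largest_ping_ok 192.168.2.2 1472 1473 8000 32000 65500` run from a LAN client against the bxe1 address, and then against an address on a vmx interface, would show whether the failure starts at the first fragmented size (1473 with a 1500 MTU) and whether it is specific to bxe.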

Steve