Iperf testing, same subnet, inconsistent speeds.

-

@erasedhammer said in Iperf testing, same subnet, inconsistent speeds.:

I am working on getting iperf on my synology to do a full proper test.

I compiled 3.10.1 myself to run on my DS918+, but it seems you can also get it in this package.

-

@johnpoz

Ha! I did not realize there was a package. I just ripped the iperf3 ARM binaries out of a Debian 10 package and tossed them on my Synology. Here are the results. My slowness definitely appears to be my disk array (7.2K RPM, 4-disk RAID 10). pfSense is definitely not the problem, and neither are my cables.

```
iperf 3.7
Linux host 5.11.0-41-generic #45~20.04.1-Ubuntu SMP Wed Nov 10 10:20:10 UTC 2021 x86_64
Control connection MSS 1448
Time: Mon, 13 Dec 2021 20:23:53 GMT
Connecting to host 10.10.1.3, port 4444
      Cookie: yumri2t7so3e7y7mnhkgjagiwbdnbbizmwgn
      TCP MSS: 1448 (default)
[  5] local 10.10.0.2 port 56430 connected to 10.10.1.3 port 4444
Starting Test: protocol: TCP, 1 streams, 131072 byte blocks, omitting 0 seconds, 10 second test, tos 0
[ ID] Interval           Transfer     Bitrate         Retr  Cwnd
[  5]   0.00-1.00   sec   114 MBytes   959 Mbits/sec    0    404 KBytes
[  5]   1.00-2.00   sec   112 MBytes   941 Mbits/sec    0    404 KBytes
[  5]   2.00-3.00   sec   112 MBytes   941 Mbits/sec    0    404 KBytes
[  5]   3.00-4.00   sec   112 MBytes   937 Mbits/sec    0    441 KBytes
[  5]   4.00-5.00   sec   112 MBytes   941 Mbits/sec    0    441 KBytes
[  5]   5.00-6.00   sec   112 MBytes   941 Mbits/sec    0    441 KBytes
[  5]   6.00-7.00   sec   112 MBytes   941 Mbits/sec    0    441 KBytes
[  5]   7.00-8.00   sec   112 MBytes   941 Mbits/sec    0    441 KBytes
[  5]   8.00-9.00   sec   112 MBytes   941 Mbits/sec    0    441 KBytes
[  5]   9.00-10.00  sec   112 MBytes   942 Mbits/sec    0    441 KBytes
- - - - - - - - - - - - - - - - - - - - - - - - -
Test Complete. Summary Results:
[ ID] Interval           Transfer     Bitrate         Retr
[  5]   0.00-10.00  sec  1.10 GBytes   943 Mbits/sec    0             sender
[  5]   0.00-10.00  sec  1.10 GBytes   941 Mbits/sec                  receiver
CPU Utilization: local/sender 1.6% (0.0%u/1.6%s), remote/receiver 15.0% (0.7%u/14.3%s)
snd_tcp_congestion cubic
rcv_tcp_congestion cubic

iperf Done.
```

```
iperf 3.7
Linux host 5.11.0-41-generic #45~20.04.1-Ubuntu SMP Wed Nov 10 10:20:10 UTC 2021 x86_64
-----------------------------------------------------------
Server listening on 4444
-----------------------------------------------------------
Time: Mon, 13 Dec 2021 20:25:10 GMT
Accepted connection from 10.10.1.3, port 40810
      Cookie: tdn5vofg3yiecbjgvfaxay4wiimdqd4w4rvf
      TCP MSS: 0 (default)
[  5] local 10.10.0.2 port 4444 connected to 10.10.1.3 port 40812
Starting Test: protocol: TCP, 1 streams, 131072 byte blocks, omitting 0 seconds, 10 second test, tos 0
[ ID] Interval           Transfer     Bitrate
[  5]   0.00-1.00   sec   108 MBytes   906 Mbits/sec
[  5]   1.00-2.00   sec   112 MBytes   941 Mbits/sec
[  5]   2.00-3.00   sec   112 MBytes   941 Mbits/sec
[  5]   3.00-4.00   sec   112 MBytes   941 Mbits/sec
[  5]   4.00-5.00   sec   112 MBytes   942 Mbits/sec
[  5]   5.00-6.00   sec   112 MBytes   942 Mbits/sec
[  5]   6.00-7.00   sec   112 MBytes   941 Mbits/sec
[  5]   7.00-8.00   sec   112 MBytes   941 Mbits/sec
[  5]   8.00-9.00   sec   111 MBytes   933 Mbits/sec
[  5]   9.00-10.00  sec   112 MBytes   941 Mbits/sec
[  5]  10.00-10.04  sec  4.23 MBytes   941 Mbits/sec
- - - - - - - - - - - - - - - - - - - - - - - - -
Test Complete. Summary Results:
[ ID] Interval           Transfer     Bitrate
[  5] (sender statistics not available)
[  5]   0.00-10.04  sec  1.09 GBytes   937 Mbits/sec                  receiver
rcv_tcp_congestion cubic
```

-

@erasedhammer said in Iperf testing, same subnet, inconsistent speeds.:

ARM binaries out of a Debian 10 package and tossed them on my Synology.

What Synology do you have? I was not aware you could just copy the binaries over from a Linux distro ;) That would have saved so much time compared to compiling it myself for DSM 7 ;) hehe. I found that package myself after I spent a couple of hours getting the dev environment set up, etc.

Those speeds look fine! But you're only seeing 40-60 MB/s in a file copy? Are you just using a normal SMB file copy?

A maxed-out gigabit link should easily be able to do 100 MB/s.
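For reference, the ceiling for a single TCP stream over gigabit can be worked out from framing overhead alone. A minimal Python sketch, assuming a 1500-byte MTU, IPv4 without options, and TCP timestamps enabled (which matches the "MSS 1448" the Linux hosts report in the iperf3 output above):

```python
# Rough ceiling for single-stream TCP goodput over gigabit Ethernet.
# Assumptions: 1500-byte MTU, IPv4 with no options, TCP with the 12-byte
# timestamp option (hence the 1448-byte MSS seen in the iperf3 output).

LINE_RATE_BPS = 1_000_000_000  # 1000BASE-T line rate, bits per second

MTU = 1500
IP_HEADER = 20
TCP_HEADER = 20
TCP_TIMESTAMPS = 12

# Per-frame cost on the wire beyond the MTU-sized packet:
# 14-byte Ethernet header + 4-byte FCS + 8-byte preamble/SFD + 12-byte inter-frame gap
WIRE_OVERHEAD = 14 + 4 + 8 + 12


def tcp_goodput_bps(mtu: int = MTU) -> float:
    """Maximum TCP payload bits per second when every segment is full-sized."""
    payload = mtu - IP_HEADER - TCP_HEADER - TCP_TIMESTAMPS  # bytes of data per frame
    on_wire = mtu + WIRE_OVERHEAD                             # bytes the frame occupies on the wire
    return LINE_RATE_BPS * payload / on_wire


if __name__ == "__main__":
    bps = tcp_goodput_bps()
    print(f"{bps / 1e6:.1f} Mbit/s")       # decimal megabits, as iperf3 reports bitrate
    print(f"{bps / 8 / 1e6:.1f} MB/s")     # decimal megabytes per second
    print(f"{bps / 8 / 2**20:.1f} MiB/s")  # 1024-based, what iperf3 labels "MBytes"
```

This lands at about 941 Mbit/s, or roughly 112 "MBytes" per one-second interval, which is exactly what the clean iperf3 runs in this thread show. So a realistic large-file copy over gigabit tops out somewhere around 110-117 MB/s, not 125.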

edit: I have someone streaming something off my NAS right now (Plex), but I just copied a close to 2GB file from the NAS to my PC and saw roughly 230 MB/s overall.

That is over the 2.5GbE connection.

Over the gigabit connection:

-

@johnpoz

RS819 with DSM 7. Synology already had a lot of the dependencies on there; I just needed the iperf3 binary, libiperf.so.0, and libsctp.so.1.

For the copies, it's SMB3 from an Ubuntu 20.04 PC (using rsync). Yesterday I was doing a backup of some local vmdk files, and 12GB was just chugging along at 40 MB/s, flat out stuck at that speed.

My local drives are Samsung 980 Pros and the NAS has 4 Seagate IronWolf Pro 4TB drives. I agree the speeds should be higher.

-

@erasedhammer Well, it sure doesn't seem to be your wire speed. Those numbers look great for gigabit.

Maybe try something other than rsync, like a plain SMB or NFS file copy?

-

Copying files through the Dolphin file manager over SMB3 gives the same speed.

-

@erasedhammer Did this slow down recently? Were you previously seeing 100 MB/s file copies?

-

@johnpoz I would love to help, but I cannot see the opening posts of the conversation or some of the details that have been posted.

What makes this forum remove some of the initial posts so we can only see later replies (some of which quote earlier answers I cannot see either)?

-

@johnpoz

I went back and reviewed network interface metrics over the past year; I replaced my DS218 with the RS819 back in March. All the historical data for the RS819 shows it never exceeded 500 Mbit/s, while the DS218 data shows it hitting 930 Mbit/s regularly.

One thing that may be the issue: the RS819 has an "Adaptive Load Balancing" feature using its two RJ45 1Gig ports (I guess a fake LAGG?). It doesn't require any support from the connected switch.

But then again, I believe iperf3 should have shown something if the fake LAGG were the problem.

-

@keyser said in Iperf testing, same subnet, inconsistent speeds.:

What makes this forum remove some of the initial posts so we can only see later replies (some of which quote earlier answers I cannot see either)?

Huh? I don't see any deleted posts, and I can see the first post, etc. Do you happen to have the OP blocked?

edit: Could you post a screenshot of the area where you think something is missing? Or I can post a screenshot of the whole thread, and you could point out what you're missing.

pic of thread

-

@johnpoz Okay, that was weird... I didn't block the OP, and in the end I tried Firefox and it worked fine.

So I cleared my cache for the site completely in Chrome and presto, everything is visible.

How that can happen is beyond me, but it's working now. Thanks for posting the picture so I could see it was my browser view that was "screwed up" :-)

-

@erasedhammer said in Iperf testing, same subnet, inconsistent speeds.:

I've been trying for a while to nail down why my LAN speeds (PC to NAS) were always stuck at around 40-60 MB/s.

Doing some iperf testing between my PC and pfSense (so, the same local subnet):

PC to pfSense:

```
iperf 3.7
Linux host 5.11.0-41-generic #45~20.04.1-Ubuntu SMP Wed Nov 10 10:20:10 UTC 2021 x86_64
Control connection MSS 1448
Time: Mon, 13 Dec 2021 18:52:12 GMT
Connecting to host 10.10.0.1, port 4444
      Cookie: phntfxguuude3t4vnhys7yqikgmhupgl6ygc
      TCP MSS: 1448 (default)
[  5] local 10.10.0.2 port 53916 connected to 10.10.0.1 port 4444
Starting Test: protocol: TCP, 1 streams, 131072 byte blocks, omitting 0 seconds, 10 second test, tos 0
[ ID] Interval           Transfer     Bitrate         Retr  Cwnd
[  5]   0.00-1.00   sec   113 MBytes   947 Mbits/sec    0    153 KBytes
[  5]   1.00-2.00   sec   112 MBytes   942 Mbits/sec    0    153 KBytes
[  5]   2.00-3.00   sec   112 MBytes   940 Mbits/sec    0    153 KBytes
[  5]   3.00-4.00   sec   112 MBytes   940 Mbits/sec    0    153 KBytes
[  5]   4.00-5.00   sec   112 MBytes   942 Mbits/sec    0    153 KBytes
[  5]   5.00-6.00   sec   112 MBytes   940 Mbits/sec    0    153 KBytes
[  5]   6.00-7.00   sec   112 MBytes   943 Mbits/sec    0    153 KBytes
[  5]   7.00-8.00   sec   112 MBytes   940 Mbits/sec    0    153 KBytes
[  5]   8.00-9.00   sec   112 MBytes   942 Mbits/sec    0    153 KBytes
[  5]   9.00-10.00  sec   112 MBytes   940 Mbits/sec    0    153 KBytes
- - - - - - - - - - - - - - - - - - - - - - - - -
Test Complete. Summary Results:
[ ID] Interval           Transfer     Bitrate         Retr
[  5]   0.00-10.00  sec  1.10 GBytes   942 Mbits/sec    0             sender
[  5]   0.00-10.00  sec  1.10 GBytes   941 Mbits/sec                  receiver
CPU Utilization: local/sender 3.5% (0.4%u/3.0%s), remote/receiver 67.7% (12.9%u/54.9%s)
snd_tcp_congestion cubic
rcv_tcp_congestion newreno

iperf Done.
```

pfSense to PC:

```
iperf 3.10.1
FreeBSD host 12.2-STABLE FreeBSD 12.2-STABLE plus-RELENG_21_05_2-n202579-3b8ea9b365a pfSense amd64
Control connection MSS 1460
Time: Mon, 13 Dec 2021 18:52:49 UTC
Connecting to host 10.10.0.2, port 4444
      Cookie: rwbdamlfvghiksxmgxi27ii2u4leuthzhab3
      TCP MSS: 1460 (default)
[  5] local 10.10.0.1 port 64301 connected to 10.10.0.2 port 4444
Starting Test: protocol: TCP, 1 streams, 131072 byte blocks, omitting 0 seconds, 10 second test, tos 0
[ ID] Interval           Transfer     Bitrate          Retr  Cwnd
[  5]   0.00-1.00   sec  71.8 MBytes  71.8 MBytes/sec  3283   24.1 KBytes
[  5]   1.00-2.00   sec  53.9 MBytes  54.0 MBytes/sec  2376   27.0 KBytes
[  5]   2.00-3.00   sec  71.4 MBytes  71.4 MBytes/sec  3194   1.41 KBytes
[  5]   3.00-4.00   sec  70.8 MBytes  70.8 MBytes/sec  3267   18.4 KBytes
[  5]   4.00-5.00   sec  73.3 MBytes  73.3 MBytes/sec  3180   1.41 KBytes
[  5]   5.00-6.00   sec  64.8 MBytes  64.8 MBytes/sec  2952   25.6 KBytes
[  5]   6.00-7.00   sec  69.1 MBytes  69.1 MBytes/sec  3275   2.83 KBytes
[  5]   7.00-8.00   sec  52.5 MBytes  52.5 MBytes/sec  2537   1.41 KBytes
[  5]   8.00-9.00   sec  69.9 MBytes  69.9 MBytes/sec  3296   25.6 KBytes
[  5]   9.00-10.00  sec  68.6 MBytes  68.6 MBytes/sec  3144   1.41 KBytes
- - - - - - - - - - - - - - - - - - - - - - - - -
Test Complete. Summary Results:
[ ID] Interval           Transfer     Bitrate          Retr
[  5]   0.00-10.00  sec   666 MBytes  66.6 MBytes/sec  30504             sender
[  5]   0.00-10.21  sec   666 MBytes  65.2 MBytes/sec                   receiver
CPU Utilization: local/sender 57.0% (1.7%u/55.3%s), remote/receiver 12.0% (1.7%u/10.3%s)
snd_tcp_congestion newreno
rcv_tcp_congestion cubic

iperf Done.
```

pfSense interface information:

```
ix1: flags=8943<UP,BROADCAST,RUNNING,PROMISC,SIMPLEX,MULTICAST> metric 0 mtu 1500
	description: Admin
	options=e138bb<RXCSUM,TXCSUM,VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,WOL_UCAST,WOL_MCAST,WOL_MAGIC,VLAN_HWFILTER,RXCSUM_IPV6,TXCSUM_IPV6>
	capabilities=f53fbb<RXCSUM,TXCSUM,VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,TSO4,TSO6,LRO,WOL_UCAST,WOL_MCAST,WOL_MAGIC,VLAN_HWFILTER,VLAN_HWTSO,NETMAP,RXCSUM_IPV6,TXCSUM_IPV6>
	ether 00:08:a2:0f:13:b1
	inet6 fe80::208:a2ff:fe0f:13b1%ix1 prefixlen 64 scopeid 0x2
	inet 10.10.0.1 netmask 0xfffffff0 broadcast 10.10.0.15
	media: Ethernet autoselect (10Gbase-SR <full-duplex,rxpause,txpause>)
	status: active
	supported media:
		media autoselect
		media 1000baseSX
		media 10Gbase-SR
	nd6 options=21<PERFORMNUD,AUTO_LINKLOCAL>
	plugged: SFP/SFP+/SFP28 10G Base-SR (LC)
	vendor: QSFPTEK PN: QT-SFP-10G-T SN: QT202003110117 DATE: 2020-11-25
	module temperature: 51.25 C Voltage: 3.30 Volts
	RX: 0.40 mW (-3.98 dBm) TX: 0.50 mW (-3.01 dBm)

	SFF8472 DUMP (0xA0 0..127 range):
	03 04 07 10 00 00 01 00 00 00 00 06 67 00 00 00
	1E 1E 00 1E 51 53 46 50 54 45 4B 20 20 20 20 20
	20 20 20 20 00 00 1B 21 51 54 2D 53 46 50 2D 31
	30 47 2D 54 20 20 20 20 47 32 2E 33 03 52 00 20
	00 3A 00 00 51 54 32 30 32 30 30 33 31 31 30 31
	31 37 20 20 32 30 31 31 32 35 20 20 68 F8 03 3F
	00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00
	00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00
```

Physical topology: my PC is connected to a dumb gigabit switch, which is connected through a ~50 ft Cat5e cable to an RJ45 SFP+ transceiver on my Netgate XG-7100.

The tests seem to be almost identical, but why is the "download" to my PC not hitting full gigabit?

Your problem is the SFP+ RJ45 transceiver in your pfSense. You can do full GigE from the NAS to and from pfSense, but you can only do full GigE from your PC to pfSense, not from pfSense to your PC.

I have had millions of issues with SFP+ transceivers (especially 10GbE) in several pfSense boxes where one direction is fine and the other is not.

I realize yours is an RJ45 1GbE SFP+ adapter, but it still plugs in as a 10GbE transceiver, so I would expect it to be sensitive to the very same problems.

Try wiring your PC to one of the 1GbE switch ports instead. Then your NAS <-> pfSense <-> PC iperf and SMB file copies will show full GigE in both directions :-)

-

Did I miss something? The original post shows about 30k retransmits (the Retr column). That is a dirty connection. iperf has done its job and pointed to the problem.
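To put those ~30k retransmits in proportion, they can be compared against the approximate number of segments sent. A rough Python sketch, assuming every segment carried a full MSS and that iperf3's "MBytes"/"GBytes" are 1024-based; it is an estimate, not what iperf3 itself computes:

```python
# Rough TCP retransmission rate from an iperf3 sender summary.
# Assumptions: every segment carried a full MSS of payload, and iperf3's
# "MBytes"/"GBytes" in the Transfer column are 1024-based. Estimate only.

MIB = 2**20
GIB = 2**30


def retransmit_ratio(transfer_bytes: float, mss: int, retransmits: int) -> float:
    """Retransmitted segments as a fraction of (estimated) segments sent."""
    segments = transfer_bytes / mss
    return retransmits / segments


if __name__ == "__main__":
    # pfSense -> PC summary: 666 MBytes, MSS 1460, Retr 30504
    dirty = retransmit_ratio(666 * MIB, 1460, 30504)
    # NAS -> PC summary from the later test: 1.10 GBytes, MSS 1448, Retr 11
    clean = retransmit_ratio(1.10 * GIB, 1448, 11)
    print(f"pfSense -> PC: {dirty:.2%}")
    print(f"NAS -> PC:     {clean:.4%}")
```

Under these assumptions the pfSense-to-PC run retransmits roughly 6% of its segments, while the NAS-to-PC run is around a thousandth of a percent, which is why one reads as a dirty link and the other as clean.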

For reference, I have trouble getting a copy on all-flash NetApps to run faster than about 2 to 3 Gb/s when copying large files. These systems are running clean LACP 2x10Gb. In aggregate they easily exceed 10Gb, but when reading/writing to a single file system they are limited by how the file table works: block allocation is single-threaded.

Assuming you are all flash, it is still consumer-level hardware not backed by plenty of cache. Windows and Linux are not optimized to make file transfers super fast. Just a guess, but I doubt Synology NAS systems are highly optimized Linux systems, meaning you are limited by other things in the OS and file system management.

Watch out for the write cliff with SSDs. They all run at blazing speed, then hit a cliff and performance falls off dramatically.

Networking and pfSense are a hobby; storage has fed the family for 20 years.

-
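Separately from the file-system limit, link aggregation itself balances traffic per flow rather than per packet, which is why "in aggregate" matters. A simplified Python sketch modelled on the layer3+4 transmit hash policy described in the Linux bonding documentation; real switches and storage arrays use their own hash functions, and the name `layer34_link` is hypothetical:

```python
import ipaddress


def layer34_link(src_ip: str, dst_ip: str, sport: int, dport: int, n_links: int) -> int:
    """Choose a member-link index for one flow (simplified layer3+4 style hash)."""
    h = (sport << 16) | dport  # the two port fields taken as one 32-bit word
    h ^= int(ipaddress.IPv4Address(src_ip)) ^ int(ipaddress.IPv4Address(dst_ip))
    h ^= h >> 16
    h ^= h >> 8
    return h % n_links


if __name__ == "__main__":
    # A single flow (one iperf3 stream, one file copy) hashes the same way every
    # time, so it is pinned to one member link and capped at that link's speed.
    one_flow = {layer34_link("10.10.0.2", "10.10.1.3", 56430, 4444, 2) for _ in range(5)}
    print(one_flow)
    # Many flows with different source ports spread across both links, which is
    # how the aggregate can exceed a single member link in total.
    links = [layer34_link("10.10.0.2", "10.10.1.3", p, 445, 2) for p in range(50000, 50100)]
    print(links.count(0), links.count(1))
```

The design point is that keeping a flow on one link avoids reordering its packets, at the cost of capping any single flow at one member's speed.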

@andyrh said in Iperf testing, same subnet, inconsistent speeds.:

Did I miss something? The original post shows about 30k retries. That is a dirty connection. iperf has done its job and pointed to the problem.

Exactly, that is very likely caused by the SFP+ to RJ45 transceiver.

For the record: one of these NASes with 4 spinning drives in RAID 5/6 will easily do 112 MB/s (full GigE) in any somewhat sequential workload, even when copying thousands of files, as long as they are 1 MB+ in size and the drives do not get bogged down in file table updates.

-

The NAS and PC are connected to a dumb switch. The SFP connection doesn't come into play when the PC is talking to the NAS.

-

Are you sure? Why do we have only the ix1 info then? That could be connected to the switch, no?

-

@stephenw10 said in Iperf testing, same subnet, inconsistent speeds.:

Are you sure?

No, not really. But it seems more logical. And if the SFP were problematic, he would have seen that issue when testing between the PC and NAS.

Where would the NAS be connected if the PC were directly connected to pfSense? He makes no mention of a bridge, etc., and he says the PC and NAS are on the same network.

The port on his pfSense is the uplink from the switch.

And when I stated that pfSense is not part of the conversation between the PC and NAS, he agreed, etc. So to me, the PC and NAS are connected to the switch, like any normal setup.

Look at his tests between the PC and NAS: his wire speed is not the cause of his slow file copies.

-

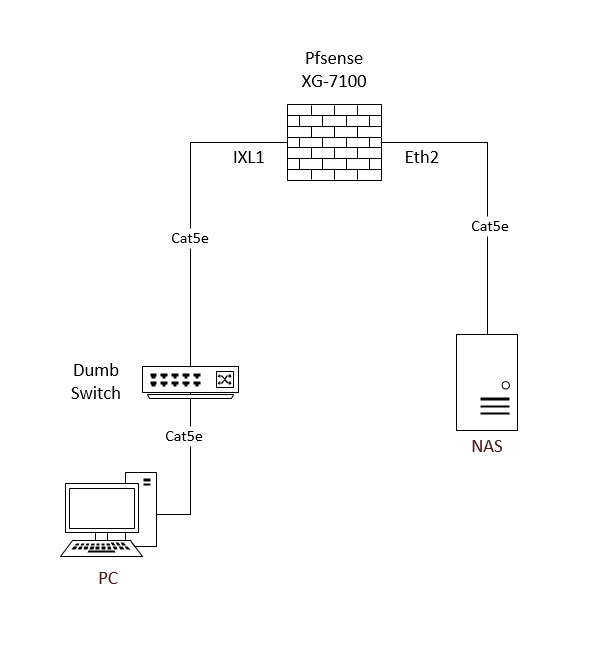

I should probably clarify, My setup is not exactly standard.

My NAS is connected to the built in marvel switch on my XG-7100. My PC is connected to a 5 port dumb switch, which is then connected to the SFP+ port on pfsense.

Here's a drawing so we don't get confused.

-

Just to add another data point: I tried using scp to copy files of random junk (500MB and 1GB) to both the Synology flash disk and the RAID array, and got the same speeds:

1GB scp to RAID array:

500MB to /tmp:
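Random data is a sensible choice for test files like these because a compressing transfer (for example `ssh -C` or `rsync -z`) cannot shrink it and inflate the numbers. A minimal Python sketch for generating such a file in chunks; the function name `make_random_file` and the 4 MiB chunk size are arbitrary choices for illustration:

```python
import os


def make_random_file(path: str, size_bytes: int, chunk: int = 4 * 2**20) -> None:
    """Write size_bytes of incompressible random data to path, one chunk at a time."""
    remaining = size_bytes
    with open(path, "wb") as f:
        while remaining > 0:
            n = min(chunk, remaining)
            f.write(os.urandom(n))  # fresh random bytes for every chunk
            remaining -= n
```

For example, `make_random_file("junk_1g.bin", 2**30)` writes a 1 GiB file without holding it all in memory at once.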

For the original iperf3 test from the Synology, I actually copied the results from the server side, so they omitted the retransmits. Here are the PC-to-NAS and NAS-to-PC iperf tests again:

PC to NAS:

```
NAS# iperf3 -V -s -p 4444
PC# iperf3 -V -c 10.10.1.3 -p 4444
```

Output from PC:

```
iperf 3.7
Linux PC 5.11.0-41-generic #45~20.04.1-Ubuntu SMP Wed Nov 10 10:20:10 UTC 2021 x86_64
Control connection MSS 1448
Time: Tue, 14 Dec 2021 18:45:40 GMT
Connecting to host 10.10.1.3, port 4444
      Cookie: v3oma5g64ia6jxp36hk4grhdfn2sb3j6xval
      TCP MSS: 1448 (default)
[  5] local 10.10.0.2 port 35896 connected to 10.10.1.3 port 4444
Starting Test: protocol: TCP, 1 streams, 131072 byte blocks, omitting 0 seconds, 10 second test, tos 0
[ ID] Interval           Transfer     Bitrate         Retr  Cwnd
[  5]   0.00-1.00   sec   114 MBytes   957 Mbits/sec    0    355 KBytes
[  5]   1.00-2.00   sec   112 MBytes   938 Mbits/sec    0    355 KBytes
[  5]   2.00-3.00   sec   113 MBytes   946 Mbits/sec    0    373 KBytes
[  5]   3.00-4.00   sec   112 MBytes   939 Mbits/sec    0    373 KBytes
[  5]   4.00-5.00   sec   112 MBytes   939 Mbits/sec    0    373 KBytes
[  5]   5.00-6.00   sec   112 MBytes   942 Mbits/sec    0    393 KBytes
[  5]   6.00-7.00   sec   113 MBytes   947 Mbits/sec    0    393 KBytes
[  5]   7.00-8.00   sec   112 MBytes   940 Mbits/sec    0    410 KBytes
[  5]   8.00-9.00   sec   112 MBytes   941 Mbits/sec    0    410 KBytes
[  5]   9.00-10.00  sec   112 MBytes   941 Mbits/sec    0    410 KBytes
- - - - - - - - - - - - - - - - - - - - - - - - -
Test Complete. Summary Results:
[ ID] Interval           Transfer     Bitrate         Retr
[  5]   0.00-10.00  sec  1.10 GBytes   943 Mbits/sec    0             sender
[  5]   0.00-10.00  sec  1.10 GBytes   941 Mbits/sec                  receiver
CPU Utilization: local/sender 1.5% (0.0%u/1.5%s), remote/receiver 18.7% (0.8%u/17.8%s)
snd_tcp_congestion cubic
rcv_tcp_congestion cubic

iperf Done.
```

NAS to PC:

```
PC# iperf3 -V -s -p 4444
NAS# iperf3 -V -c 10.10.0.2 -p 4444
```

Output from NAS:

```
iperf 3.6
Linux NAS 4.4.180+ #42218 SMP Mon Oct 18 19:16:01 CST 2021 aarch64
Control connection MSS 1448
Time: Tue, 14 Dec 2021 18:46:41 GMT
Connecting to host 10.10.0.2, port 4444
      Cookie: 5cn3v22hqr5wpyglpotmt2g63zf7kfxyntov
      TCP MSS: 1448 (default)
[  5] local 10.10.1.3 port 41532 connected to 10.10.0.2 port 4444
Starting Test: protocol: TCP, 1 streams, 131072 byte blocks, omitting 0 seconds, 10 second test, tos 0
[ ID] Interval           Transfer     Bitrate         Retr  Cwnd
[  5]   0.00-1.00   sec   114 MBytes   957 Mbits/sec    0    375 KBytes
[  5]   1.00-2.00   sec   111 MBytes   935 Mbits/sec    0    375 KBytes
[  5]   2.00-3.00   sec   112 MBytes   941 Mbits/sec    0    375 KBytes
[  5]   3.00-4.00   sec   112 MBytes   941 Mbits/sec    0    375 KBytes
[  5]   4.00-5.00   sec   111 MBytes   934 Mbits/sec    0    375 KBytes
[  5]   5.00-6.00   sec   112 MBytes   943 Mbits/sec    0    375 KBytes
[  5]   6.00-7.00   sec   111 MBytes   935 Mbits/sec    0    375 KBytes
[  5]   7.00-8.00   sec   112 MBytes   941 Mbits/sec    0    375 KBytes
[  5]   8.00-9.00   sec   112 MBytes   941 Mbits/sec    0    375 KBytes
[  5]   9.00-10.00  sec   112 MBytes   940 Mbits/sec   11    314 KBytes
- - - - - - - - - - - - - - - - - - - - - - - - -
Test Complete. Summary Results:
[ ID] Interval           Transfer     Bitrate         Retr
[  5]   0.00-10.00  sec  1.10 GBytes   941 Mbits/sec   11             sender
[  5]   0.00-10.03  sec  1.09 GBytes   936 Mbits/sec                  receiver
CPU Utilization: local/sender 7.1% (0.0%u/7.1%s), remote/receiver 16.2% (2.0%u/14.3%s)
snd_tcp_congestion cubic
rcv_tcp_congestion cubic

iperf Done.
```

NAS to PC did have a few retransmits, never more than 20 per interval. Running it a second time, I get only 10-20 retransmits across all the intervals.
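Since the Retr count only shows up on the sending side (which is why the server-side copy earlier had none), it can help to capture the client's machine-readable output with iperf3's `-J` (JSON) flag. A minimal Python sketch, assuming the `end.sum_sent` / `end.sum_received` layout that iperf3 3.x writes for TCP tests; the script name `summarize_iperf.py` and the JSON file name are hypothetical:

```python
import json
import sys


def summarize(iperf_json: str) -> dict:
    """Extract throughput and retransmits from iperf3 -J output of a TCP test.

    Assumes the end.sum_sent / end.sum_received layout of iperf3 3.x JSON;
    the retransmit count is only known on the sending side.
    """
    end = json.loads(iperf_json)["end"]
    sent = end["sum_sent"]
    received = end["sum_received"]
    return {
        "sender_mbps": sent["bits_per_second"] / 1e6,
        "receiver_mbps": received["bits_per_second"] / 1e6,
        "retransmits": sent.get("retransmits"),  # None if the field is absent
    }


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (hypothetical file names):
    #   iperf3 -c 10.10.0.2 -p 4444 -J > nas_to_pc.json
    #   python3 summarize_iperf.py nas_to_pc.json
    with open(sys.argv[1]) as f:
        print(summarize(f.read()))
```

Run it against output captured on the client, which is the sender in iperf3's default direction.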

I am at a loss as to what the bottleneck is here. I get the same speeds to the Synology's onboard flash as to the RAID array, and it's not full gigabit, yet iperf shows the network is not the problem?

It seems that if I use any actual application that transfers data (ssh, rsync, SMB), I don't see full gigabit...
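One way to separate storage from the network (and from ssh/SMB overhead) is to time a sequential write directly on the NAS volume, with no network involved. A rough Python sketch, assuming a Python 3 interpreter is available on the host you test (not guaranteed on DSM); the function name `write_throughput_mb_s` is hypothetical, and because it reuses one random buffer, a compressing or deduplicating file system could report optimistic numbers:

```python
import os
import time


def write_throughput_mb_s(path: str, size_bytes: int, chunk: int = 4 * 2**20) -> float:
    """Time a sequential write of size_bytes to path (fsync'd) and return MB/s."""
    buf = os.urandom(chunk)  # one random buffer, reused for every chunk
    start = time.perf_counter()
    with open(path, "wb") as f:
        written = 0
        while written < size_bytes:
            n = min(chunk, size_bytes - written)
            f.write(buf[:n])
            written += n
        f.flush()
        os.fsync(f.fileno())  # wait until the data has been handed to the disk
    elapsed = time.perf_counter() - start
    return size_bytes / elapsed / 1e6  # decimal megabytes per second
```

If a multi-gigabyte write to the RAID volume alone already stalls near 40-60 MB/s, the disks or file system are the limit; if it runs well above gigabit speed, the bottleneck is more likely in the transfer path (protocol or CPU) than in storage.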

-

@erasedhammer said in Iperf testing, same subnet, inconsistent speeds.:

I am at a loss as to what the bottleneck is here. I get the same speeds to the Synology's onboard flash as to the RAID array, and it's not full gigabit, yet iperf shows the network is not the problem?

It seems that if I use any actual application that transfers data (ssh, rsync, SMB), I don't see full gigabit...

Please read my earlier replies. Your issue is the SFP+ transceiver.