[solved] pfSense (2.6.0 & 22.01) is very slow on Hyper-V

-

Are you able to test FreeBSD 12.3 in a similar config?

-

@stephenw10 I can't. Is this already patched in the current release? I can't tell for sure; it would be helpful to know.

-

No, that patch is not in 22.01 or 2.6.

https://github.com/pfsense/FreeBSD-src/tree/RELENG_2_6_0/sys/dev/hyperv/pcib

It's not yet in 22.05/2.7 either.

Steve

-


@dd said in After Upgrade inter (V)LAN communication is very slow (on Hyper-V).:

@bob-dig They have a new version, 22.1, which is based on FreeBSD 13, and it's working OK. I have tried it. I think the problem with pfSense 2.6 is that it's based on FreeBSD 12.3. The same problem exists with pfSense 2.7.0-DEVELOPMENT, which is on FreeBSD 12.3 too. I think a fix will not be available for a long time, but they must do something, because right now pfSense 2.6 (and Plus) is not usable on Hyper-V.

I can confirm this 100%; I tried it myself. I could also enable all offloading there.

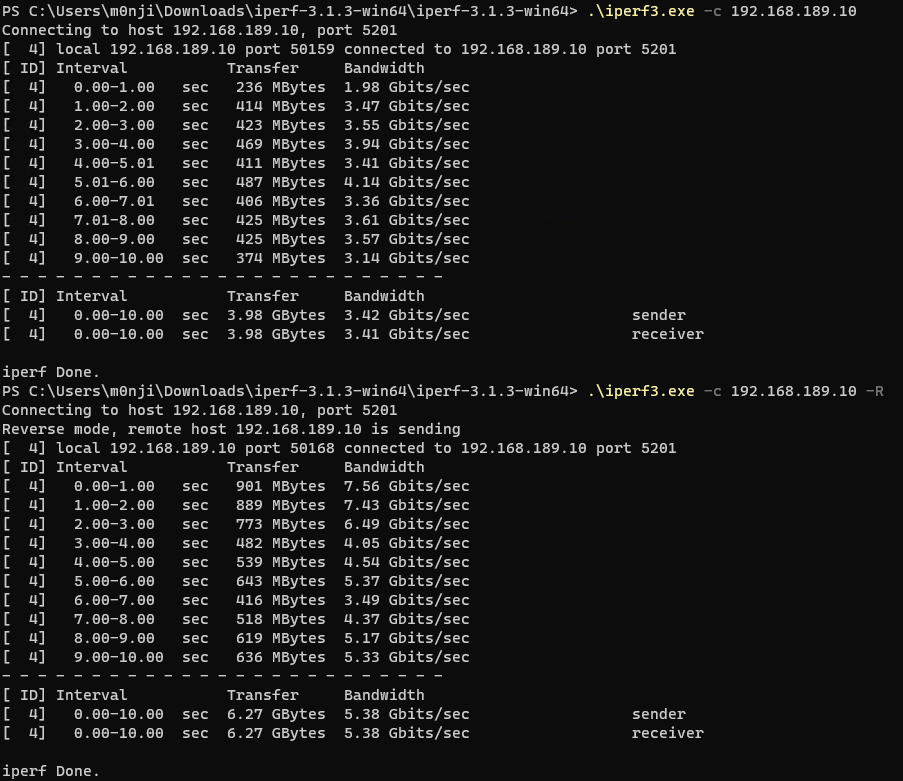

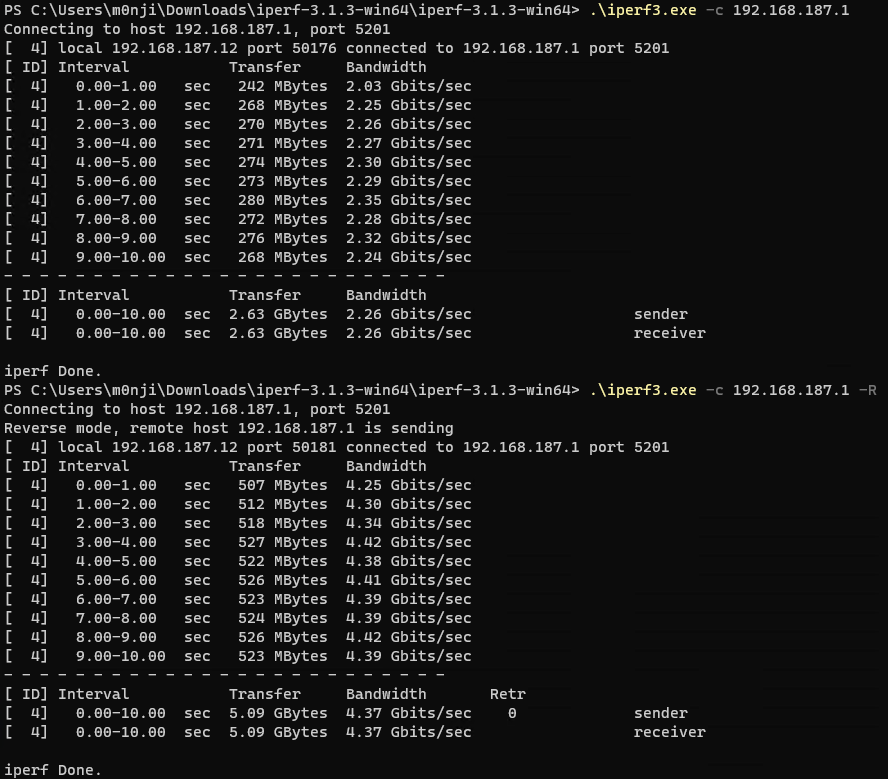

This is from a VM with 4 Cores on another VLAN (and host).

On both, I had to disable VMQ on the virtual NICs in Hyper-V; otherwise there were some error messages in the console.

So no problem with FreeBSD 13 on Hyper-V (Server 2022) with the other thingy.

-


Can anyone seeing this test FreeBSD 12.3 directly?

This could be a simple fix if it's something we are setting in pfSense. Though I'm not sure what it could be.

Steve

-

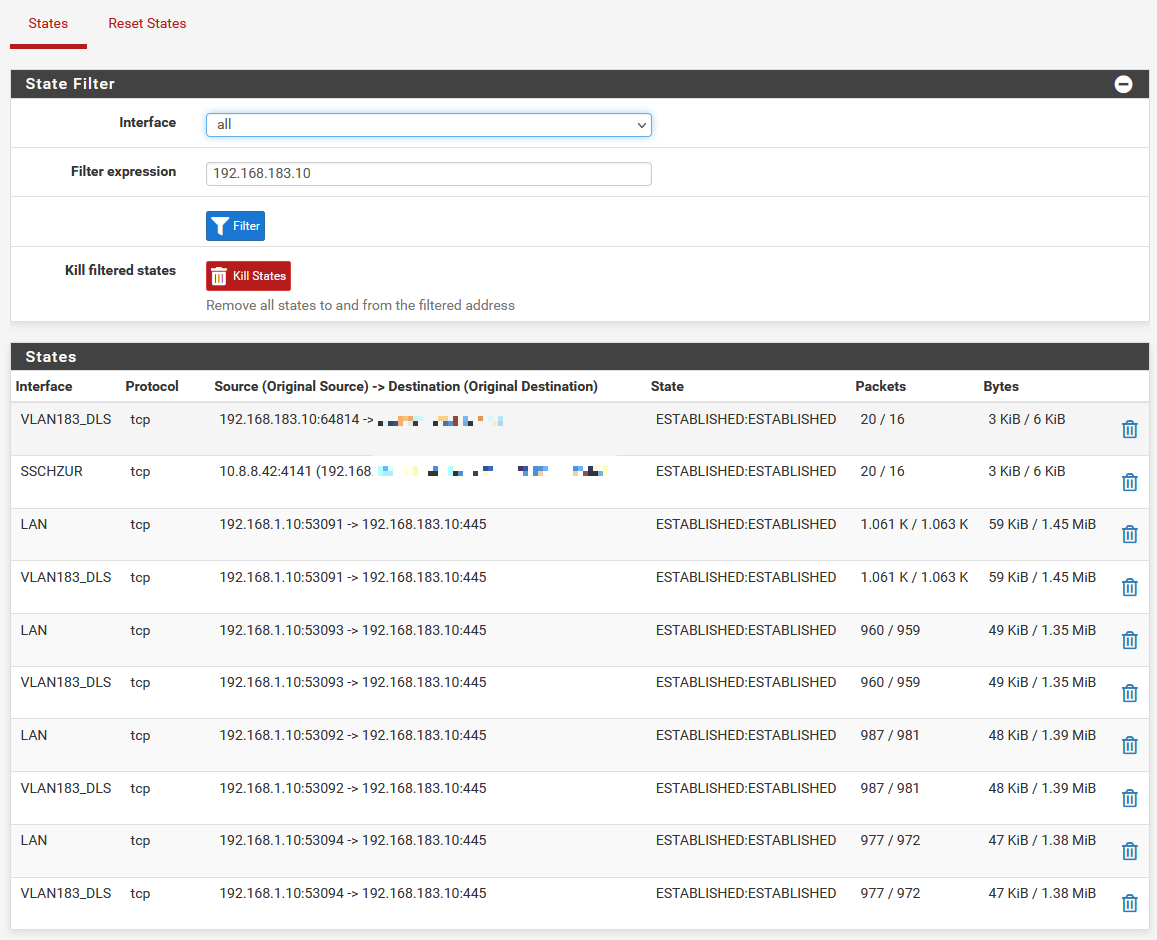

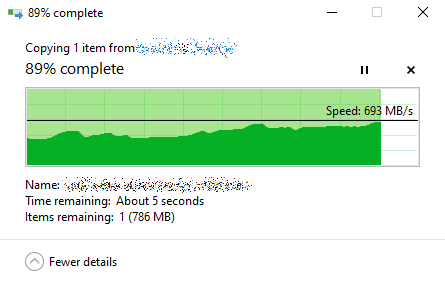

Just did a simple test.

The first screenshot shows two Windows VMs connected through pfSense 2.6.0 (fresh, clean install), all running on Hyper-V.

The second screenshot shows one Windows VM connected to a clean FreeBSD 12.3 install, all running on Hyper-V.

-

Do you see the same thing using `iperf3 -c 192.168.189.10 -R` as you do if you run the client on 192.168.189.10? That opens the states the other way, so it would be interesting to see if it fails in the opposite direction.

Steve
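A minimal sketch of the comparison suggested above, assuming `iperf3` is on the PATH of the client machine and an `iperf3 -s` server is already listening on the far side of the router. It runs the same client twice, once normally and once with `-R` (reverse mode, the server sends), asks iperf3 for JSON output with `-J`, and reads the receiver-side rate from `end.sum_received.bits_per_second`, assuming the JSON layout of current iperf3 releases. The helper names are hypothetical.

```python
import json
import subprocess


def received_bps(iperf_json: str) -> float:
    """Return receiver-side throughput in bits/s from `iperf3 -J` output."""
    report = json.loads(iperf_json)
    # For TCP tests iperf3 puts the end-of-run totals under end.sum_received.
    return float(report["end"]["sum_received"]["bits_per_second"])


def run_iperf3(host: str, reverse: bool = False, seconds: int = 10) -> float:
    """Run one iperf3 client test against `host` and return received bits/s."""
    cmd = ["iperf3", "-c", host, "-t", str(seconds), "-J"]
    if reverse:
        cmd.append("-R")  # reverse mode: the server sends, the client receives
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return received_bps(result.stdout)


def compare_directions(host: str) -> None:
    """Print throughput for both directions through the router under test."""
    forward = run_iperf3(host)
    reverse = run_iperf3(host, reverse=True)
    print(f"forward: {forward / 1e6:.1f} Mbit/s")
    print(f"reverse: {reverse / 1e6:.1f} Mbit/s")
    # A large ratio means one direction through the router is collapsing.
    slower = max(min(forward, reverse), 1.0)  # avoid dividing by zero
    print(f"ratio:   {max(forward, reverse) / slower:.1f}x")
```

Calling `compare_directions("192.168.189.10")` from a VM on the other side of pfSense, and then repeating it with the client and server roles swapped, would show whether the slow direction follows the side that opened the states.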

-

Dammit, if only I had known about the -R thing earlier.

-

@stephenw10

Are you sure you want an iperf test when the test does not even leave the VM? But no problem:

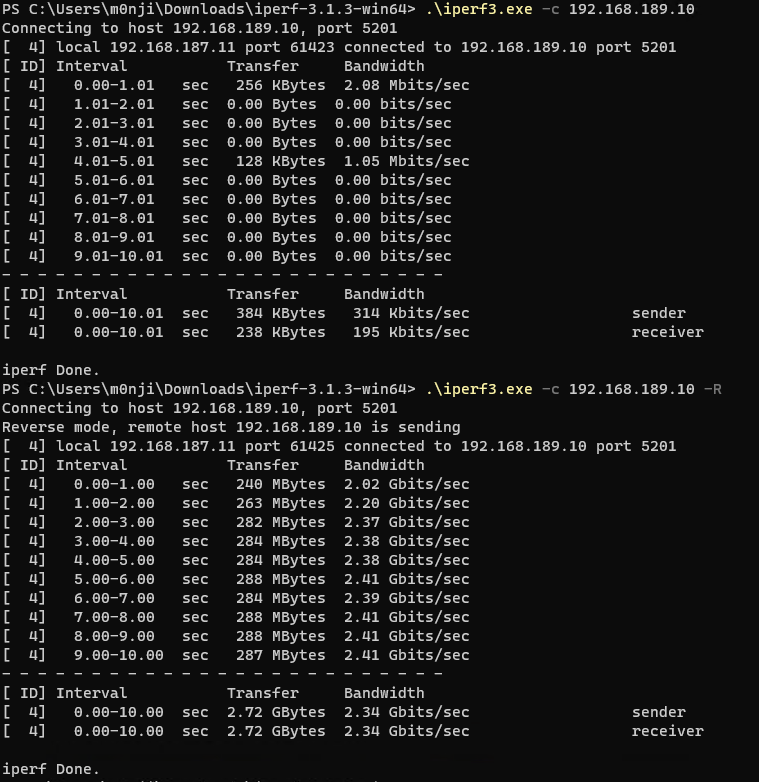

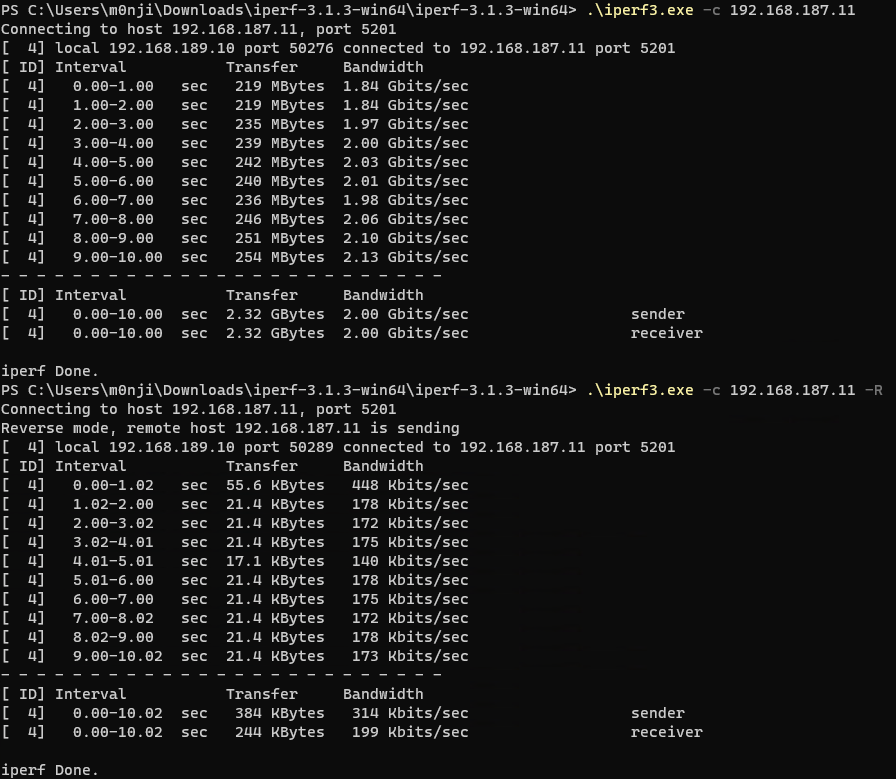

Here are the tests again between both VMs with pfSense in between, and also a test between both VMs without pfSense (on the same subnet).

-

If it helps, I also ran a test from the Windows VM directly to the pfSense 2.6.0 VM.

That's kind of interesting. It means the problem only exists when pfSense is in between, doing routing, but not when pfSense is the direct target/source. This would also explain why I don't see these weird results when I run the test against the FreeBSD VM (first post, second screenshot).

-

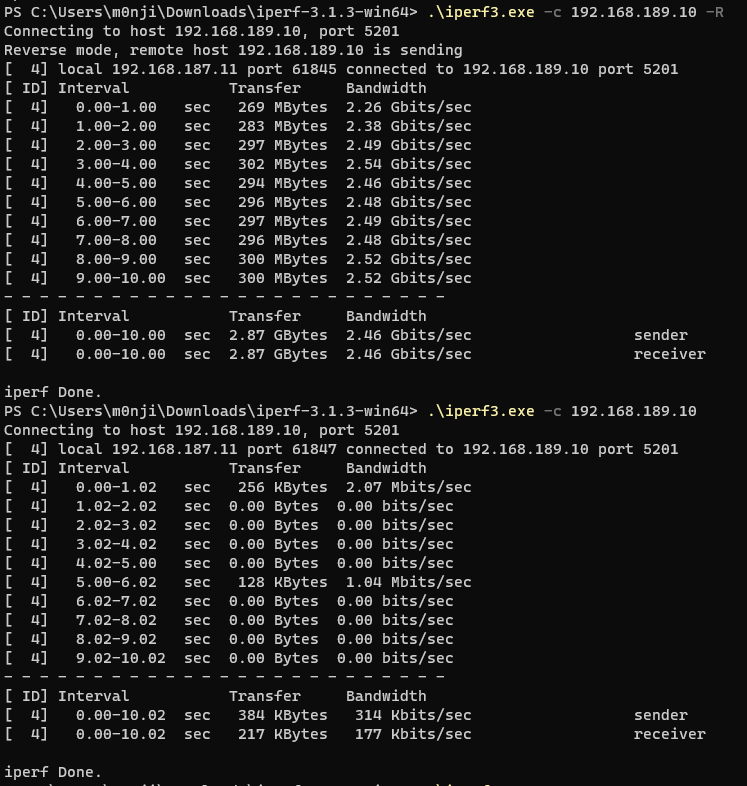

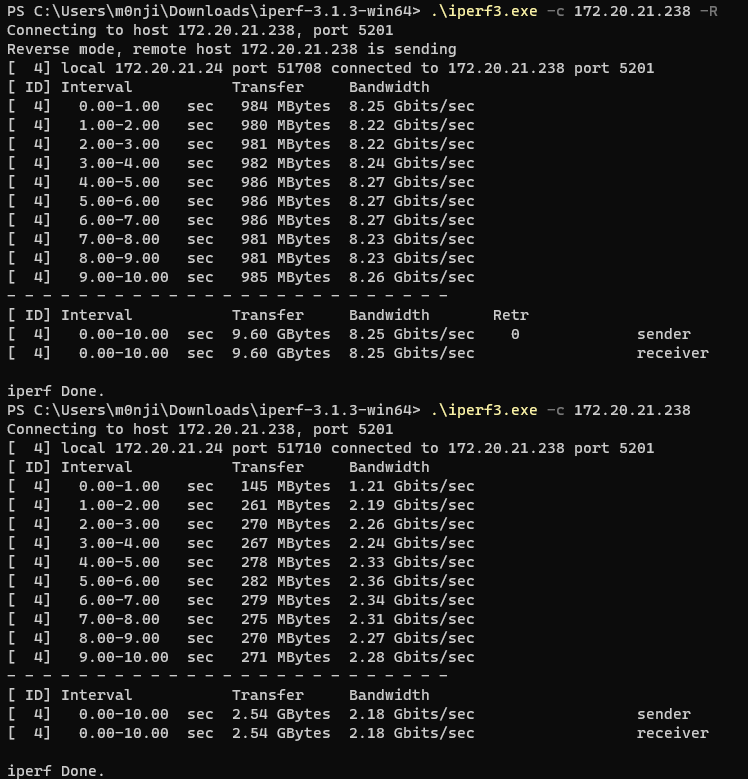

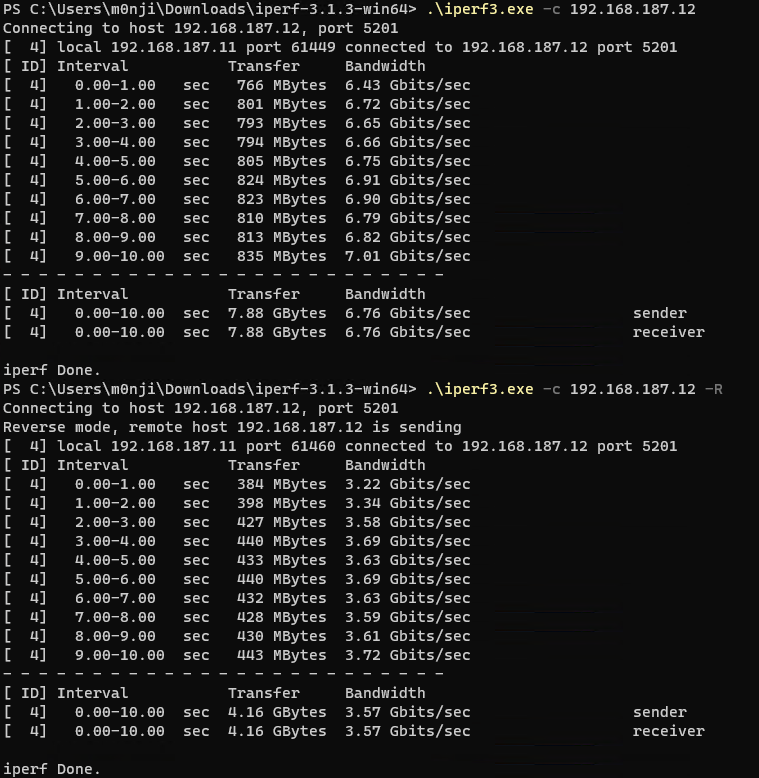

OK, I did some further testing, and I think I can confirm that the problem is FreeBSD 12.3-related, not pfSense-specific.

I configured two FreeBSD VMs, one 12.3 and one 13.0, with routing enabled (`gateway_enable="YES"`) so that they could stand in for pfSense.

I then did iperf tests:

- Win VM (192.168.189.10) --> FreeBSD 12.3 VM (192.168.187.250 / 192.168.189.250) --> Win VM (192.168.187.11)

```
PS C:\Users\m0nji\Downloads\iperf-3.1.3-win64\iperf-3.1.3-win64> .\iperf3.exe -c 192.168.187.11
Connecting to host 192.168.187.11, port 5201
[  4] local 192.168.189.10 port 58723 connected to 192.168.187.11 port 5201
[ ID] Interval           Transfer     Bandwidth
[  4]   0.00-1.00   sec   254 MBytes  2.13 Gbits/sec
[  4]   1.00-2.00   sec   268 MBytes  2.25 Gbits/sec
[  4]   2.00-3.00   sec   282 MBytes  2.37 Gbits/sec
[  4]   3.00-4.00   sec   302 MBytes  2.53 Gbits/sec
[  4]   4.00-5.00   sec   304 MBytes  2.55 Gbits/sec
[  4]   5.00-6.00   sec   304 MBytes  2.55 Gbits/sec
[  4]   6.00-7.00   sec   297 MBytes  2.49 Gbits/sec
[  4]   7.00-8.00   sec   302 MBytes  2.54 Gbits/sec
[  4]   8.00-9.00   sec   294 MBytes  2.47 Gbits/sec
[  4]   9.00-10.00  sec   301 MBytes  2.52 Gbits/sec
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval           Transfer     Bandwidth
[  4]   0.00-10.00  sec  2.84 GBytes  2.44 Gbits/sec                  sender
[  4]   0.00-10.00  sec  2.84 GBytes  2.44 Gbits/sec                  receiver

iperf Done.
PS C:\Users\m0nji\Downloads\iperf-3.1.3-win64\iperf-3.1.3-win64> .\iperf3.exe -c 192.168.187.11 -R
Connecting to host 192.168.187.11, port 5201
Reverse mode, remote host 192.168.187.11 is sending
[  4] local 192.168.189.10 port 58726 connected to 192.168.187.11 port 5201
[ ID] Interval           Transfer     Bandwidth
[  4]   0.00-1.01   sec  55.6 KBytes   449 Kbits/sec
[  4]   1.01-2.01   sec  21.4 KBytes   175 Kbits/sec
[  4]   2.01-3.00   sec  21.4 KBytes   178 Kbits/sec
[  4]   3.00-4.01   sec  21.4 KBytes   173 Kbits/sec
[  4]   4.01-5.01   sec  17.1 KBytes   140 Kbits/sec
[  4]   5.01-6.01   sec  21.4 KBytes   175 Kbits/sec
[  4]   6.01-7.01   sec  21.4 KBytes   175 Kbits/sec
[  4]   7.01-8.01   sec  21.4 KBytes   176 Kbits/sec
[  4]   8.01-9.01   sec  15.7 KBytes   128 Kbits/sec
[  4]   9.01-10.01  sec  20.0 KBytes   163 Kbits/sec
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval           Transfer     Bandwidth
[  4]   0.00-10.01  sec   384 KBytes   314 Kbits/sec                  sender
[  4]   0.00-10.01  sec   237 KBytes   194 Kbits/sec                  receiver

iperf Done.
```

- Win VM (192.168.189.10) --> FreeBSD 13.0 VM (192.168.187.251 / 192.168.189.251) --> Win VM (192.168.187.11)

```
PS C:\Users\m0nji\Downloads\iperf-3.1.3-win64\iperf-3.1.3-win64> .\iperf3.exe -c 192.168.187.11
Connecting to host 192.168.187.11, port 5201
[  4] local 192.168.189.10 port 49997 connected to 192.168.187.11 port 5201
[ ID] Interval           Transfer     Bandwidth
[  4]   0.00-1.00   sec   268 MBytes  2.25 Gbits/sec
[  4]   1.00-2.00   sec   300 MBytes  2.51 Gbits/sec
[  4]   2.00-3.00   sec   316 MBytes  2.65 Gbits/sec
[  4]   3.00-4.00   sec   316 MBytes  2.65 Gbits/sec
[  4]   4.00-5.00   sec   318 MBytes  2.67 Gbits/sec
[  4]   5.00-6.00   sec   316 MBytes  2.65 Gbits/sec
[  4]   6.00-7.00   sec   317 MBytes  2.66 Gbits/sec
[  4]   7.00-8.00   sec   319 MBytes  2.68 Gbits/sec
[  4]   8.00-9.00   sec   319 MBytes  2.67 Gbits/sec
[  4]   9.00-10.00  sec   316 MBytes  2.65 Gbits/sec
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval           Transfer     Bandwidth
[  4]   0.00-10.00  sec  3.03 GBytes  2.60 Gbits/sec                  sender
[  4]   0.00-10.00  sec  3.03 GBytes  2.60 Gbits/sec                  receiver

iperf Done.
PS C:\Users\m0nji\Downloads\iperf-3.1.3-win64\iperf-3.1.3-win64> .\iperf3.exe -c 192.168.187.11 -R
Connecting to host 192.168.187.11, port 5201
Reverse mode, remote host 192.168.187.11 is sending
[  4] local 192.168.189.10 port 49999 connected to 192.168.187.11 port 5201
[ ID] Interval           Transfer     Bandwidth
[  4]   0.00-1.00   sec   326 MBytes  2.73 Gbits/sec
[  4]   1.00-2.00   sec   324 MBytes  2.72 Gbits/sec
[  4]   2.00-3.00   sec   337 MBytes  2.83 Gbits/sec
[  4]   3.00-4.00   sec   340 MBytes  2.85 Gbits/sec
[  4]   4.00-5.00   sec   337 MBytes  2.83 Gbits/sec
[  4]   5.00-6.00   sec   340 MBytes  2.85 Gbits/sec
[  4]   6.00-7.00   sec   341 MBytes  2.86 Gbits/sec
[  4]   7.00-8.00   sec   342 MBytes  2.87 Gbits/sec
[  4]   8.00-9.00   sec   342 MBytes  2.87 Gbits/sec
[  4]   9.00-10.00  sec   342 MBytes  2.87 Gbits/sec
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval           Transfer     Bandwidth
[  4]   0.00-10.00  sec  3.29 GBytes  2.83 Gbits/sec                  sender
[  4]   0.00-10.00  sec  3.29 GBytes  2.83 Gbits/sec                  receiver

iperf Done.
```
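To compare runs like the ones above without eyeballing them, a small parser can pull the `receiver` summary line out of each pasted iperf3 text output and compute how much faster the forward run was than the `-R` run. This is a hypothetical helper, not part of iperf3; it assumes the plain-text summary format shown above (a number followed by `Kbits/sec`, `Mbits/sec` or `Gbits/sec`, then the word `receiver`).

```python
import re

# Multipliers for the bandwidth units iperf3 prints in its text summary.
UNITS = {"bits/sec": 1.0, "Kbits/sec": 1e3, "Mbits/sec": 1e6, "Gbits/sec": 1e9}

# Matches the end of a summary line such as "... 237 KBytes   194 Kbits/sec   receiver".
SUMMARY = re.compile(r"([\d.]+)\s+([KMG]?bits/sec)\s+receiver")


def receiver_bps(output: str) -> float:
    """Return the receiver-side bandwidth (bits/s) from iperf3 text output."""
    match = SUMMARY.search(output)
    if match is None:
        raise ValueError("no receiver summary line found")
    value, unit = match.groups()
    return float(value) * UNITS[unit]


def asymmetry(forward_output: str, reverse_output: str) -> float:
    """How many times faster the forward run was than the reverse (-R) run."""
    return receiver_bps(forward_output) / receiver_bps(reverse_output)
```

With the FreeBSD 12.3 receiver lines above (2.44 Gbits/sec forward, 194 Kbits/sec reverse) the ratio comes out at roughly 12,600x, while the FreeBSD 13.0 runs (2.60 vs 2.83 Gbits/sec) give about 0.92, i.e. both directions at line rate.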

-

Ah, nice. Ok, I wonder what changed in 12.3 then. Hmm.

@m0nji said in After Upgrade inter (V)LAN communication is very slow (on Hyper-V).:

Are you sure you want an iperf test when the test does not even leave the VM?

No, sorry, I meant swap the iperf server and client machines to see whether opening the states the other way changes which direction is slow. It seems very likely it will, though.

Steve

-

Let's hope Netgate will ditch 12.3 sooner rather than later...

-

Maybe it's been mentioned already, but I have read on altaro.com that the VMQ feature can be problematic. I have it disabled and have no issues, but I'm also running an i340-T4 on Server 2012 R2. Maybe MS has fixed it since. YMMV.

-

@rmh-0 Thanks, that also solved it in my environment. Happy to be back to normal performance.

-

Because none of this helped in my case, I tried passing a quad-port Intel 1G NIC through to pfSense with DDA (Discrete Device Assignment). This worked, so pfSense no longer uses any virtual NICs at all. My test Windows VM still uses a virtual adapter, but its traffic now has to go through a physical switch every time... With that, everything works as it should. Now I hope I can pass my 10G NIC through to pfSense with DDA too.

-

Just for some additional info:

We run pfSense in HA (CARP) on two Hyper-V Gen2 VMs. Both run on Hyper-V 2022, both on HPE DL360 Gen10 hardware, and both with two HPE FlexFabric 10Gb 2-port 556FLR-SFP NICs that are teamed in a vSwitch SET with dynamic load balancing on the NICs (Switch Embedded Teaming, no LACP). We always disable RSC in our install scripts, as it gave us nothing but trouble. VMQ is not enabled for the pfSense VMs either, as in the past it didn't work with VMQ anyway. For the love of God, I cannot replicate this issue of slow inter-VLAN traffic.

However, a colleague company we work with has this issue on their internal network. There, pfSense is a Gen1 VM on a DL360 Gen9, with a SET team on the four regular internal HPE 331i NICs, but with Hyper-V port balancing. They have two clustered Hyper-V nodes, and their pfSense 2.6.0 VM is on one of them. They have a hardware NAS accessible through SMB. Accessing that NAS (on a different VLAN) is slow as molasses, BUT ONLY when the VM accessing the NAS is on the same host as the pfSense VM. When we move that VM, or pfSense, to the other node, poof, everything is back up to line speed. When we move the two VMs back together, it's turdspeed again.

Likewise, when physical machines in another VLAN access an SMB share on a VM, access is super slow if that VM runs on the same Hyper-V host as pfSense 2.6. When we move that VM to another host, it all works fine. So in this specific environment, inter-VLAN routing performance only seems to be bad when the VMs involved reside on the same host as pfSense.

Needless to say, it all works fine with 2.5.x.

The one thing I see in common with at least one other person in this thread is that the problematic pfSense runs on HPE Gen9 hardware. Is anyone else with these issues running on Gen9? If you use a SET team, what is the load-balancing mode?

-

@cannyit you fixed your slow WAN speed, but not the inter-VLAN routing?!

I think this needs two separate threads, because we are talking about two different problems.

-

@rgijsen No, I was using a lab system (Ryzen-based Windows 11 Pro with Hyper-V enabled and a 10Gig ASUS NIC).
