QoS / Traffic Shaping / Limiters / FQ_CODEL on 22.05

-

If you only have one WAN and one LAN and no VPNs, then matching inbound on LAN may be OK. One of the main reasons to do it outbound on WAN is that there is no chance of catching local traffic in the limiter (to/from the firewall, to/from other LANs, VPNs, other unrelated WANs, etc.). There is a ton of room for error there, so for most people it's much easier to take care of it outbound on WAN instead.

Sure, you can set up a lot more rules to pass traffic to the other destinations without the limiter, but you end up adding so much extra complexity that it's just not worth the effort to avoid floating rules when they're a much cleaner solution.

-

Ok @jimp thanks for the advice. I'll probably have time this weekend to pave my box and try with stock 2.4.5, 2.5 / 2.6 and 22.01 to see if this is a config problem or some edge case (I am known for those...)

If I can't sort it by then I'll probably just plunk down for TAC so I can work on it with you guys.

-

@jimp Today I did 2 things:

- updated to 22.05.a.20220331.1603 (no change)

- factory reset my box to all defaults, then set up ONLY the limiters and floating rule in accordance with the official guide and re-tested. Sadly I got the same results (wildly fluctuating speeds, failed speed tests, C or D grade on bufferbloat tests)

Without the limiters enabled, I get a perfect 880/940 result on various speed tests, and everything basically works well, except when my upload gets saturated. Then latency spikes to >200 ms and we start having problems with VoIP, Zoom, Teams, etc.

I'm at the end of my rope... my "WAF" score is very low right now and I need to fix this. I'm totally willing to buy TAC to continue troubleshooting, but do you think that will be helpful? I can't imagine this is a config issue at this point, given the factory reset... could this possibly be a hardware problem?? (using a 6100)

-

What limits are you setting for your circuit? What happens if you set them a lot lower? For example, if you have a 1G/1G line what happens if you set them at 500/500? 300/300?

I wouldn't expect results like you are seeing unless the limits are higher than what the circuit is actually capable of pushing, so it isn't doing much to help because it doesn't realize the circuit is loaded.
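Setting the limiter somewhat below the real throughput is what makes the limiter, rather than the ISP or ONT, the bottleneck, so the queue builds where the shaper can see and manage it. A minimal sketch, assuming you size the limiter as a percentage of *measured* (not nominal) throughput; the 95% and 85% figures are illustrative rather than an official recommendation, 50% and 30% mirror the 500/300 test points above, and `limiter_mbit` is a hypothetical helper:

```sh
#!/bin/sh
# Hypothetical helper (not part of pfSense): prints PERCENT of the measured
# throughput, in whole Mbit/s, as a candidate limiter bandwidth.
# Usage: limiter_mbit MEASURED_MBIT PERCENT
limiter_mbit() {
    measured=$1
    percent=$2
    # POSIX shell arithmetic is integer-only; truncating to whole Mbit/s
    # is precise enough for a bandwidth setting.
    echo $(( measured * percent / 100 ))
}

# Measured rates from this thread: 880 down / 939 up on a 1G circuit.
for pct in 95 85 50 30; do
    echo "${pct}%: down $(limiter_mbit 880 "$pct") / up $(limiter_mbit 939 "$pct") Mbit/s"
done
```

If the problem disappears at the lower settings, that points toward the limits being set above what the circuit can actually push.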

It's also possible the queue lengths are way too low for the speed.

-

It's a 1G FIOS circuit; in the real world I consistently get 880 down and 939 up. Latency to 8.8.8.8 is 4 ms.

```
[22.05-DEVELOPMENT][root@r1.lan]/root: ping 8.8.8.8
PING 8.8.8.8 (8.8.8.8): 56 data bytes
64 bytes from 8.8.8.8: icmp_seq=0 ttl=118 time=4.097 ms
64 bytes from 8.8.8.8: icmp_seq=1 ttl=118 time=4.315 ms
64 bytes from 8.8.8.8: icmp_seq=2 ttl=118 time=4.118 ms
64 bytes from 8.8.8.8: icmp_seq=3 ttl=118 time=4.004 ms
^C
--- 8.8.8.8 ping statistics ---
4 packets transmitted, 4 packets received, 0.0% packet loss
round-trip min/avg/max/stddev = 4.004/4.133/4.315/0.113 ms
```

I played around with the queue length. I tried leaving it empty (default), as well as 3000 and then 5000. I didn't try higher than that.
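For a sense of scale, one common sizing heuristic (an assumption here, not something pfSense or this thread prescribes) is the bandwidth-delay product: rate times RTT, expressed in packets. With FQ_CODEL the AQM manages delay on its own, so the queue limit mainly has to be large enough that packets aren't tail-dropped before CoDel gets a chance to act. A minimal sketch assuming 1500-byte packets; `bdp_packets` is a hypothetical helper:

```sh
#!/bin/sh
# Hypothetical helper: bandwidth-delay product expressed in full-size packets.
# 1 Mbit/s sustained for 1 ms is 1000 bits = 125 bytes, hence the factor 125.
# Usage: bdp_packets RATE_MBIT RTT_MS [PACKET_BYTES]
bdp_packets() {
    rate=$1
    rtt=$2
    mtu=${3:-1500}
    bytes=$(( rate * rtt * 125 ))
    # Round up so a partial packet still counts as a slot in the queue.
    echo $(( (bytes + mtu - 1) / mtu ))
}

# 939 Mbit/s upload with the 4 ms RTT to 8.8.8.8 shown above:
bdp_packets 939 4        # 313
# The same link to a server 100 ms away:
bdp_packets 939 100      # 7825
```

The result swings by a factor of 25 depending on which RTT you plug in (4 ms to a nearby anycast resolver versus a distant test server), so the RTT you assume matters more than the exact formula.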

I also had the same thought as you (let's just see if the limiter is even working at all), so I tried setting it much lower, e.g. 50 Mbit or 100 Mbit, and that didn't work (as seen in my screenshots from the post above).

-

@luckman212 Are you seeing any sort of activity in "Diagnostics > Limiter Info" if you watch it during a speed test? Because it sure sounds as if traffic is somehow not even being directed through your limiters, right?

-

@thenarc I do see activity but tbh not quite sure what to look for. I also do see the CoDel Limiter in Floating Rules matching some states.

I had thought that maybe some of my outbound NAT or policy-based routing rules on the LAN were interfering with this; that's why I did the factory reset, to rule that out. I've been playing around with this script and watching it from the console, since it refreshes faster than Diags > Limiter Info, but again nothing jumps out; the bandwidth on the pipes looks correct, etc...

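```sh
#!/bin/sh
# Redraw the limiter state every 0.5 s (faster than Diagnostics > Limiter Info).
_do() {
    clear
    cat /tmp/rules.limiter      # limiter config file written by pfSense
    echo
    echo "PIPES"
    echo "====="
    ipfw pipe show
    echo
    echo "QUEUES"
    echo "======"
    ipfw queue show
    echo
    echo "SCHED"
    echo "====="
    ipfw sched show
    sleep 0.5
}

# Loop until interrupted with Ctrl-C.
while true; do
    _do
done
```

-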

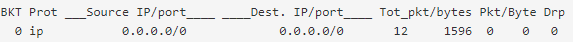

@luckman212 Yeah, in fairness I'm not sure exactly what to look for either, aside from just "more than nothing". For example, I see non-zero values in my output for Tot_pkt/bytes:

But seeing matches on the floating rule seems like positive confirmation as well. It's definitely a different problem from the one I've been having myself: my limiters are definitely working (insofar as they're limiting throughput as expected), but I still get catastrophic packet loss and latency on downloads.

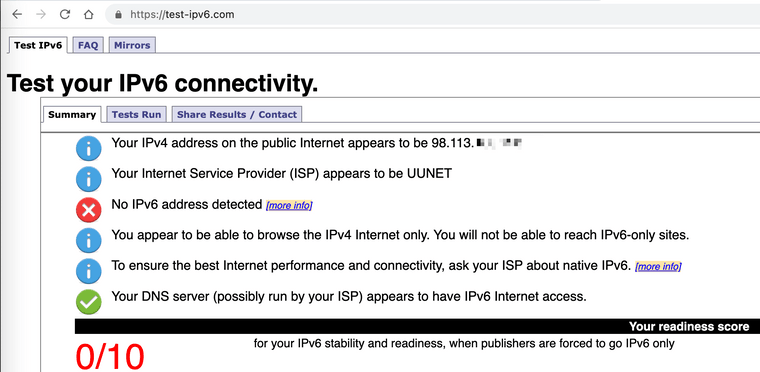

Anyway, grasping at straws here, but I do see that your rule is IPv4 only; is there any chance at all you've got an IPv6 WAN IP and the speed test is using IPv6? Seems highly unlikely; I don't think most speed tests will, but at the moment that's the only idea I've got.
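One rough way to rule this in or out is to tally the address families of the destinations the test talks to (for example, addresses copied out of the state table); an IPv4-only floating rule can only ever match the inet ones. A minimal sketch, assuming you already have bare addresses one per line (stripping ports or other decoration is left out); `count_families` is a hypothetical helper:

```sh
#!/bin/sh
# Hypothetical helper: reads bare IP addresses (one per line) on stdin and
# prints how many are IPv4 (inet) versus IPv6 (inet6). A colon only occurs
# in IPv6 literals, so that is the test used to tell them apart.
count_families() {
    awk '
        NF == 0 { next }          # ignore blank lines
        /:/     { v6++; next }    # IPv6 literal
                { v4++ }          # everything else is treated as IPv4
        END     { printf "inet=%d inet6=%d\n", v4 + 0, v6 + 0 }
    '
}

# Example with documentation addresses (RFC 5737 / RFC 3849):
printf '%s\n' 192.0.2.10 2001:db8::1 198.51.100.7 | count_families
# inet=2 inet6=1
```

Any non-zero inet6 count means some of that traffic cannot be hitting an inet-only limiter rule.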

-

@thenarc said in QoS / Traffic Shaping / Limiters / FQ_CODEL on 22.0x:

Seems highly unlikely,

waveform.com definitively does use IPv6.

-

@thenarc said in QoS / Traffic Shaping / Limiters / FQ_CODEL on 22.0x:

speed test is using IPv6

Comcast's test does too; there is a small gear icon in the upper right to switch to IPv4.

In particular, I've found speeds through Hurricane Electric IPv6 are way lower than over IPv4.

@luckman212 If the limiter isn't applying then the rule isn't matching. Are you clearing states between making rule/limiter changes? Do the states agree with what you expect? For example a web site file download is an outbound state (device to web server) and the download just returns on that state. (Or from the perspective of the web server's router it would be an inbound connection/state.)

-

Definitely no IPv6 here! I've been waiting 12 years for Verizon to roll it out for residential FIOS customers. See this 45+ page thread on DSLreports.

@SteveITS yes, I am clearing states via `pfctl -F state` between runs. I don't know how many connections the waveform bufferbloat test opens, for example (I'd assume more than one, probably dozens), so it's hard to know for sure whether the number of states is correct.

-

@jimp I just went ahead and bought a TAC Pro sub. Order SO22-30515. Hope I can get some assistance next week.

-

An update for anyone following along:

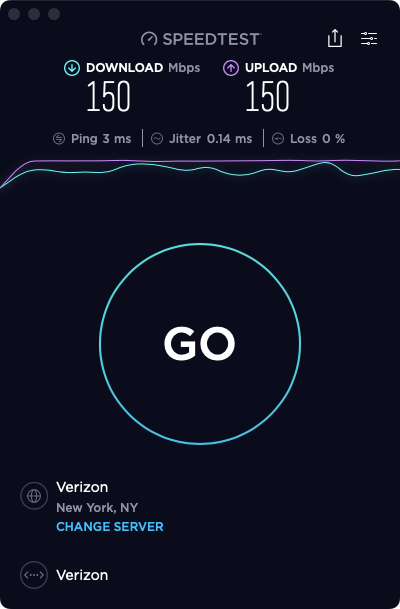

Today I unboxed a brand new 6100, flashed `22.01-RELEASE` onto it, and made only ONE configuration change from the default factory config: creating 2 limiters/queues and adding the floating rule exactly as per the official docs. I set the bandwidth to 150 Mbps for testing, to ensure I'd be able to easily see whether the limiters were working.

Guess what? It worked flawlessly.
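The low-limit trick boils down to a simple pass/fail check: if a speed test comes in well above the configured limit, traffic isn't going through the limiter at all. A minimal sketch; the 10% tolerance for measurement noise is an assumption, results must be whole Mbit/s, and `limiter_applied` is a hypothetical name:

```sh
#!/bin/sh
# Hypothetical helper: succeeds (exit 0) when a measured speed-test result is
# at or below the configured limiter bandwidth plus a tolerance, i.e. the
# limiter appears to be shaping the traffic; fails (exit 1) otherwise.
# Usage: limiter_applied LIMIT_MBIT MEASURED_MBIT [TOLERANCE_PCT]
limiter_applied() {
    limit=$1
    measured=$2
    tol=${3:-10}                              # default: allow 10% overshoot
    ceiling=$(( limit * (100 + tol) / 100 ))  # integer Mbit/s
    [ "$measured" -le "$ceiling" ]
}

# With a 150 Mbit/s limiter, ~148 Mbit/s looks shaped; 880 clearly does not.
for result in 148 880; do
    if limiter_applied 150 "$result"; then
        echo "$result Mbit/s: limiter in effect"
    else
        echo "$result Mbit/s: traffic is bypassing the limiter"
    fi
done
```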

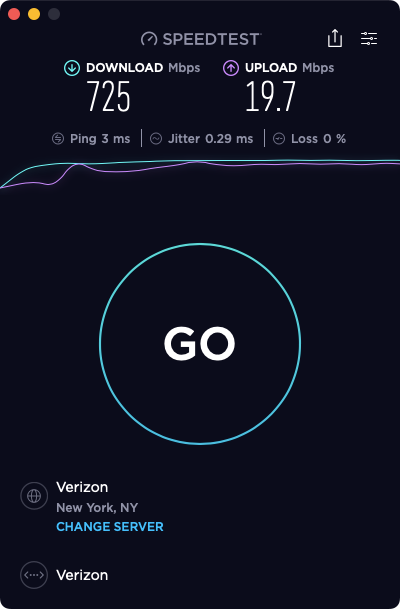

Next, I went to System > Update and updated to 22.05.a.20220403.0600. No other changes were made.

After rebooting, I re-tested and got this (which matches my original problem throughout this thread):

I diffed the `config.xml` files from before and after the 22.05 upgrade to be sure no other changes were made behind the scenes (there were none). So now I am even more convinced there's either a bug in 22.05, or something has changed in the `ipfw` that ships with it that requires some sort of syntax change which hasn't been accounted for.

-
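The before/after config comparison is easy to repeat around any upgrade. A minimal sketch using `cmp -s` for the yes/no answer and `diff -u` to show what changed; `same_config` and the backup file name are hypothetical, while `/cf/conf/config.xml` is where pfSense normally keeps the live config:

```sh
#!/bin/sh
# Hypothetical helper: compares two saved copies of config.xml.
# Prints "identical" and exits 0 if they match byte for byte; otherwise
# prints "changed:" followed by a unified diff and exits 1.
same_config() {
    if cmp -s "$1" "$2"; then
        echo "identical"
    else
        echo "changed:"
        diff -u "$1" "$2"
        return 1
    fi
}

# Example (the "before" path is hypothetical; save a copy before upgrading):
#   cp /cf/conf/config.xml /root/config-before-upgrade.xml
#   ... upgrade and reboot ...
#   same_config /root/config-before-upgrade.xml /cf/conf/config.xml
```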

Issue report here:

https://redmine.pfsense.org/issues/13026

-

Since this seems to be just an issue with how the ruleset syntax is generated, is there a way I can manually run a fixup command or hand-edit the rules to fix this problem right now on 22.05? I have a somewhat urgent need to use limiters now, and since 22.05 is still at least 2 months away and I can't roll back my config anymore (too many changes, and it's not backwards-compatible with 22.01), it would be very helpful.

-


Just adding some notes from redmine...

Currently this bug (#13026: Limiters do not work) appears to be blocked by the following 2 bugs:

- #12579: Utilize dnctl(8) to apply changes without reloading filter

- #13027: Input validation prevents adding a floating match rule with limiters and no gateway

#12579 says "#12003 should be merged first", but even though its progress is at 0%, it appears a patch has been merged. #13027 also has a merge request pending. The target version for #13027 is 22.09; hopefully we won't have to wait that long to have functioning limiters again!

@jimp is there any movement on this (imo) important bug? Thanks

-

@luckman212 It's being worked on.

-

@marcos-ng Good to know. I just updated to 22.05.a.20220426.1313 and was going to test a bit, but I'll keep waiting for some news on redmine.
