Editing loader.conf

-

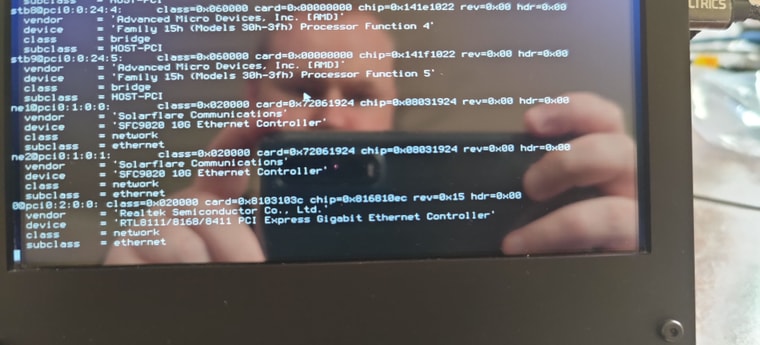

`pciconf -lv` will show you all the PCI devices with their IDs.

-

Thanks very much. So I finally had time to sit and look at this. I ran the command to look at the PCI devices and got the output below.

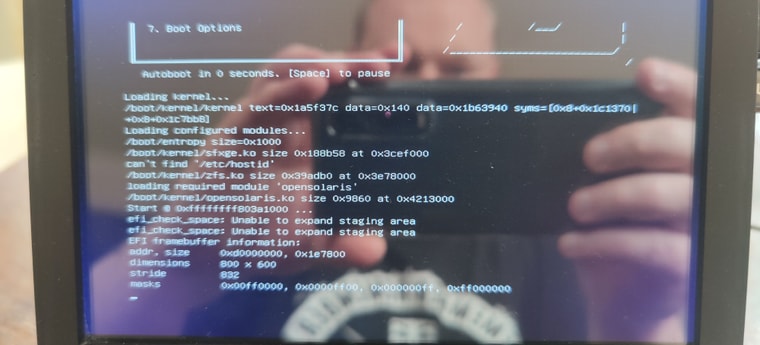

I added the driver to the kernel as per your command, which evidently worked, but now the system hangs at boot and is stuck at the following screen:

Any suggestions?

Thanks as always.

-

Hmm, interesting.

OK, so the monitor isn't centering the text correctly, so we can't see the leftmost characters. We can assume those NICs are shown as `none1` and `none2`, which is expected when no driver is attached. But then it looks like you are exhausting the UEFI loader space trying to load the sfxge driver and the required ZFS components.

So one option here would be to install with UFS, so you don't need the zfs and opensolaris modules loaded.

You could also try loading the driver after boot to test the NIC. There's no point going to a lot of trouble if it won't work anyway. Interrupt the boot at the loader menu to reach the OK prompt, and enter the following to prevent the driver from loading:

```
Exiting menu!
Type '?' for a list of commands, 'help' for more detailed help.
OK set sfxge_load="NO"
OK boot
booting...
```

It should then boot completely. Then, at the command line, run:

```
kldload sfxge
```

You can check that it is loaded with:

```
[22.05-DEVELOPMENT][root@5100.stevew.lan]/root: kldstat
Id Refs Address                Size Name
 1   36 0xffffffff80200000  3b033d8 kernel
 2    2 0xffffffff83d05000     9870 opensolaris.ko
 3    1 0xffffffff83d0f000   39bca0 zfs.ko
 4    1 0xffffffff840ab000     1cf0 sg5100.ko
 5    1 0xffffffff840ad000     3970 wbwd.ko
 6    2 0xffffffff840b1000     5f50 superio.ko
 7    1 0xffffffff84321000     1010 cpuctl.ko
 8    1 0xffffffff84323000     87a0 aesni.ko
 9    1 0xffffffff8432c000      bf8 coretemp.ko
10    1 0xffffffff8432d000    11da8 dummynet.ko
11    1 0xffffffff8433f000    7d3a0 sfxge.ko
```

You will probably also see the NICs load. If not, check the system logs for errors.
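If you'd rather check this from a script than by eye, here is a minimal sketch in Python (assuming Python is available wherever you run it, and assuming the five-column kldstat layout of Id, Refs, Address, Size, Name). The helper names `loaded_modules` and `is_loaded` are hypothetical, not part of any pfSense tool.

```python
from __future__ import annotations


def loaded_modules(kldstat_text: str) -> list[str]:
    """Return the Name column of every module row in kldstat output."""
    names = []
    for line in kldstat_text.splitlines():
        fields = line.split()
        # Module rows start with a numeric Id; this skips the shell prompt
        # and the "Id Refs Address Size Name" header line.
        if len(fields) >= 5 and fields[0].isdigit():
            names.append(fields[-1])
    return names


def is_loaded(kldstat_text: str, module: str) -> bool:
    """True if `module` is listed, with or without the .ko suffix."""
    names = loaded_modules(kldstat_text)
    return module in names or f"{module}.ko" in names
```

For example, pass it the text from `subprocess.run(["kldstat"], capture_output=True, text=True).stdout`, or a saved copy of the output, and call `is_loaded(text, "sfxge")`.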

Steve

-

Yes, sorry about that. I'm using my small diagnostic screen, which annoyingly cuts off the far left of anything on screen.

I will give your recommendations a go.

I had installed pfSense using the Guided UEFI method. To set it up using UFS, which install method should I be using? If I recall correctly, the other two methods are Guided ZFS and Guided BIOS. Or do I select Guided UEFI and then choose UFS afterwards as the filesystem? Just making sure I do the initial setup correctly before attempting this.

Many thanks!

-

Oh, yes, I completely forgot about installing in legacy BIOS mode, if you can. That should allow you to load both ZFS and sfxge. The other systems where we see this are UEFI-only.

Steve

-

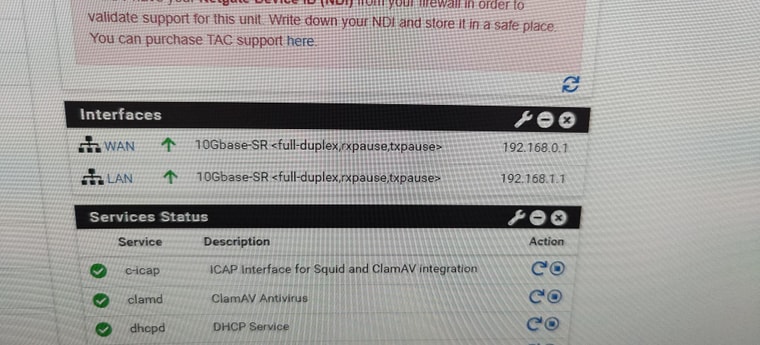

Good news: using the BIOS method solved my issue. I have restored my pfSense configuration (from a backup file from my other pfSense machine).

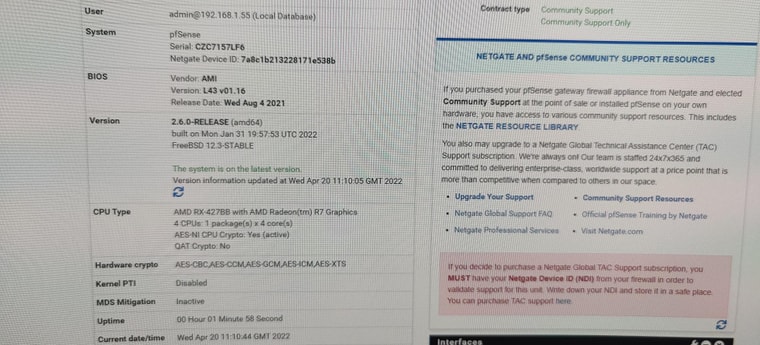

However, upon running some speed tests via fast.com, I am noticing that my new, smaller, more power-efficient machine is struggling with throughput on both download and upload. My first thought was that it had something to do with the weaker CPU in my new machine. The specs of my new setup are shown below:

I can get about 260 Mbps down and 13 Mbps up at most.

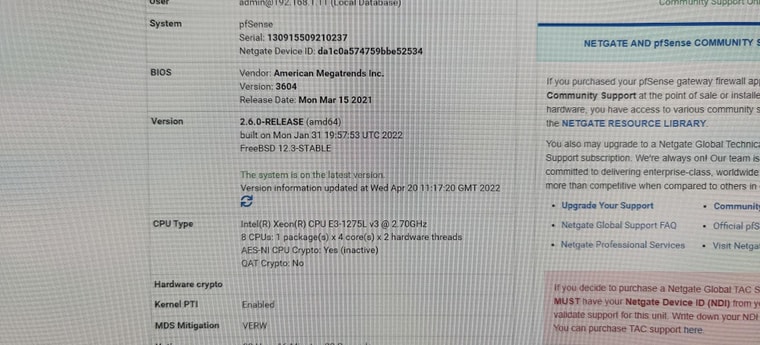

My existing machine, with the spec shown in the image, achieves the full expected speeds of my internet connection, which is currently 550 Mbps down and 70 Mbps up.

Something else curious I noticed is that the SFP adapters in the NIC in my new machine are detected as 10GBase-SR? Strange, as I am not running fibre modules.

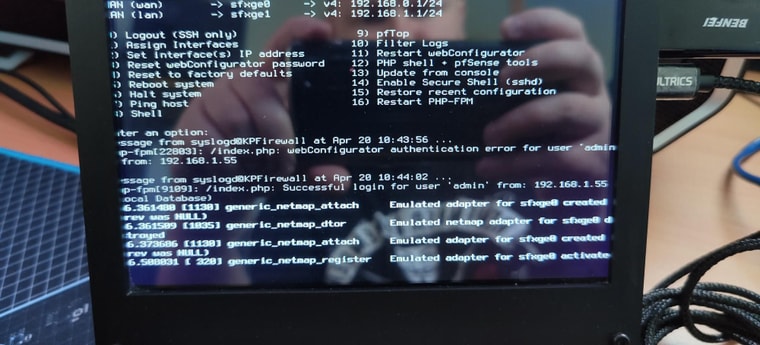

The other curious item is that after boot I am getting an "emulation" message regarding my NIC. This only really started appearing once I restored my configuration, and I never saw it on my other pfSense install. So I am wondering if there is some emulation going on that could be hampering speeds?

Any ideas come to mind? I would have thought the AMD CPU in my new build would be enough to handle up to gigabit speeds. It is worth noting that CPU usage is not at maximum when running speed tests, which leads me to think we have another issue.

-

@panzerscope said in Editing loader.conf:

> The other curious item is that after boot I am getting an "emulation" message regarding my NIC. This only really started appearing once I restored my configuration, and I never saw it on my other pfSense install. So I am wondering if there is some emulation going on that could be hampering speeds?

Do you, or did you in the configuration you restored, have Snort or Suricata installed and configured for Inline IPS Mode? Either of those two packages, when configured for Inline IPS Mode, will utilize the kernel netmap device. When the physical NIC driver/interface does not support native netmap operation, the kernel will create an emulated netmap adapter. That's what the logged message is indicating (an emulated netmap adapter is being created).

The emulated adapter is very slow compared to a native implementation.

-

Mmm, sfxge is odd in a whole number of ways. It would not surprise me at all if it doesn't support netmap natively. The kernel module isn't even named as an interface!

Check the output of:

```
ifconfig -vvvm sfxge0
```

and the same for sfxge1. Make sure they both show the correct link type as available.
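The part to look at is the `supported media:` list. As a rough sketch in Python (the helper name `parse_media` is hypothetical), assuming FreeBSD's `ifconfig -m` layout of one `media:` line for the active link followed, under `supported media:`, by one `media <type> [mediaopt ...]` line per supported type, this pulls out both so the two ports can be compared side by side:

```python
from __future__ import annotations


def parse_media(ifconfig_text: str) -> tuple[str | None, list[str]]:
    """Return (active media description, supported media types).

    Assumes the FreeBSD `ifconfig -m` layout: a 'media:' line for the
    current link and a 'supported media:' block of 'media <type> ...' lines.
    """
    active = None
    supported: list[str] = []
    in_supported = False
    for raw in ifconfig_text.splitlines():
        line = raw.strip()
        if line.startswith("media:"):
            active = line[len("media:"):].strip()
        elif line == "supported media:":
            in_supported = True
        elif in_supported and line.startswith("media "):
            # "media 10Gbase-SR mediaopt full-duplex" -> "10Gbase-SR"
            media_type = line.split()[1]
            if media_type not in supported:
                supported.append(media_type)
        elif in_supported and line:
            # Any other non-empty line ends the supported-media block.
            in_supported = False
    return active, supported
```

Running it on the output for sfxge0 and sfxge1 and comparing the lists against the modules actually fitted is the same check described above, just done mechanically.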

Since it's passing more than 100 Mbps, you at least know it's not linked at 100M. Are those not SFP+ ports, then? 10GBase-T?

Steve

-

Hey @bmeeks & @stephenw10

Thank you so much for your help; I can already confirm your suspicions were correct. I was using services such as Snort in Inline mode, and it must have been the software emulation of netmap that was causing the issue. Rather than testing further, I decided it was time to see if I could bypass this Solarflare NIC altogether.

Long story short, I remembered I had a spare Intel I350-T4 NIC in my hardware pile. It is not 10 Gig, but frankly the pfSense box doesn't need to be, as the incoming internet connection will only be a maximum of 900 Mbps. So no loss there.

After reinstalling pfSense and restoring my configuration, my speeds are back to normal, even with Snort set up. So, long story short, this further confirms what I see on the internet: stick with Intel NICs. The Solarflare NICs seem to be OK, but only under Windows, lol.

Hopefully moving forward, I will not have any issues :)

-

Yeah, that's a much better choice if you don't really need 10G.

That NIC is used by thousands of installs and is very well tested.

Steve

-

@stephenw10 said in Editing loader.conf:

> Yeah, that's a much better choice if you don't really need 10G.
>
> That NIC is used by thousands of installs and is very well tested.
>
> Steve

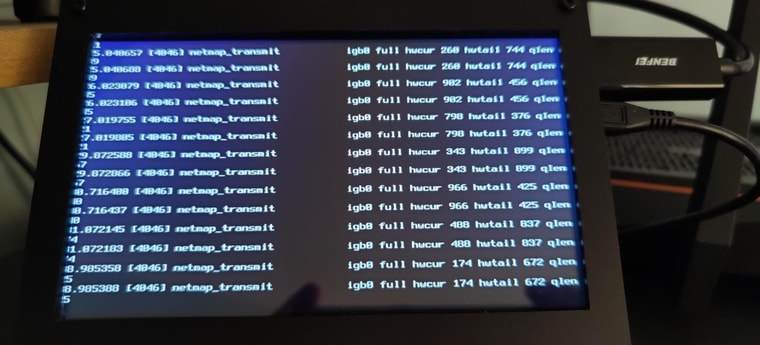

Well, that is certainly good to hear. This may be nothing, and again, I did not get this on my last pfSense install, which used an Intel X540-T2 NIC, but I notice that with my new setup I am now getting the netmap logging.

Any ideas? Just so you know, it is referring to my WAN in these logs.

Thanks once again!

-

Might need to wait for Bill to comment there.

It looks like a queue is filling somewhere. You might need to increase a buffer, though I'm not sure which one.

Steve

-

@panzerscope said in Editing loader.conf:

> @stephenw10 said in Editing loader.conf:
>
> > Yeah, that's a much better choice if you don't really need 10G.
> >
> > That NIC is used by thousands of installs and is very well tested.
> >
> > Steve
>
> Well, that is certainly good to hear. This may be nothing, and again, I did not get this on my last pfSense install, which used an Intel X540-T2 NIC, but I notice that with my new setup I am now getting the netmap logging.
>
> Any ideas? Just so you know, it is referring to my WAN in these logs.
>
> Thanks once again!

That error indicates the netmap queues are not being emptied fast enough. Snort is a single-threaded process. High network throughput can be stressing it, especially without a very high-end high-speed CPU. Since Snort is single-threaded, raw CPU clock speed matters much more than the number of cores.

You may have better luck running the newest Suricata version as Suricata is multithreaded, and the netmap code within that binary has been rewritten to allow use of multiple queues (when the NIC exposes more than one).
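To make "the queues are not being emptied fast enough" concrete, here is a toy discrete-time model in Python. It is not netmap's actual ring logic, and the rates and ring size below are hypothetical numbers chosen only for illustration: packets arrive at a fixed rate, traffic is split evenly across one or more queues, each queue has its own consumer thread that drains a fixed number of packets per tick, and anything that doesn't fit in a queue's ring is dropped.

```python
from __future__ import annotations


def simulate(arrivals_per_tick: int, drain_per_thread: int, threads: int,
             ring_size: int, ticks: int) -> tuple[int, int]:
    """Toy ring-buffer model; returns (packets dropped, final total backlog).

    threads=1 models a single-threaded consumer on one queue; threads>1
    models one consumer per queue on a NIC exposing several queues.
    """
    backlogs = [0] * threads
    dropped = 0
    for _ in range(ticks):
        for q in range(threads):
            # Spread arrivals as evenly as possible across the queues.
            incoming = arrivals_per_tick // threads
            if q < arrivals_per_tick % threads:
                incoming += 1
            # Packets that don't fit in this queue's ring are dropped.
            accepted = min(incoming, ring_size - backlogs[q])
            dropped += incoming - accepted
            backlogs[q] += accepted
            # This queue's consumer drains what it can during the tick.
            backlogs[q] -= min(backlogs[q], drain_per_thread)
    return dropped, sum(backlogs)


# One consumer that can't keep up drops packets every tick once the ring fills;
# the same load spread over four consumers never fills any ring.
print(simulate(1000, 400, threads=1, ring_size=1024, ticks=100))
print(simulate(1000, 400, threads=4, ring_size=1024, ticks=100))
```

In this toy, a faster core roughly corresponds to a larger `drain_per_thread`, which is why clock speed matters for single-threaded Snort, while Suricata's multi-queue netmap support corresponds to raising `threads` when the NIC exposes more than one queue.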

-

Thanks guys. So it was indeed Snort. Removed it, and the logging went away. I have since installed Suricata and so far so good. Looks like high volume traffic through the WAN is not producing any queuing issues which is awesome.

-

@panzerscope said in Editing loader.conf:

> Thanks guys. So it was indeed Snort. Removed it, and the logging went away. I have since installed Suricata and so far so good. Looks like high volume traffic through the WAN is not producing any queuing issues which is awesome.

Thank you for the feedback. Hopefully this thread may help someone else in the future with a similar issue.

I'm glad Suricata seems to be working better for you. I collaborated with the Suricata upstream team to add the multiple queue support for netmap back during the summer of 2021.

Just be aware that using Inline IPS Mode (which requires netmap) will cause some issues with certain other pfSense/FreeBSD features. First and foremost, limiters and shapers are not currently compatible with netmap. Secondly, VLANs do not always work well. It depends on the exact configuration. When using Inline IPS Mode, you must run the Snort or Suricata instance on the physical parent VLAN interface.
