Performance issue on virtualised pfSense

-

Any multiqueue NIC can spread the load across multiple CPU cores for most traffic types. That includes other types in KVM like vmxnet.

Other hardware offloading is not usually of much use in pfSense, or any router, where the router is not the end point for TCP connections. So 'TCP off-loading' is not supported.

Steve

-

@stephenw10, how can I know if my Ethernet card will work with the vmxnet driver in KVM with multiqueue capability? Actually, I was expecting performance to degrade at speeds above ~1Gb/s. Do you think that running pfSense on a bare-metal server can give me performance close to 10Gig firewalling capacity?

Let's say I keep the same hardware I'm hosting pfSense on now: will it help if I set it up natively, without KVM? Will I have multiqueue capability for my 10Gb NICs?

-

It doesn't matter what the hardware is as long as the hypervisor supports it. Unless you are using PCI pass-through, the hypervisor presents the NIC to the VM as whatever type you've configured. I'm using Proxmox here, which is KVM, and vmxnet is one of the NIC types it can present.

-

@stephenw10, is it possible to change the network driver from virtio to vmxnet in virsh and get a multiqueue NIC? Changing such settings is a big deal in our environment, that's why I'm asking. If it was in my lab I would simply test it, but if I do it now I may lose the virtual appliance and access to it. Is it worth even trying to change virtio to vmxnet ?

-

@shshs said in Performance issue on virtualised pfSense:

Is it worth even trying to change virtio to vmxnet ?

Only if you're not seeing the throughput you need IMO. High CPU use on one core is not a problem until it hits 100% and you need more.

Steve

-

@stephenw10, thanks a lot! How can I verify that a NIC is multiqueue, other than by checking that it uses the vmxnet driver?

-

Usually by checking the man page like: vmx(4)

Most drivers don't require anything, vmx has specific instructions. Most drivers support multiple hardware chips so the number of queues available will depend on that.

Drivers that do support multiple queues will be limited by the number of CPU cores or the number of queues the hardware supports, whichever is lower. So at boot you may see:

    ix3: <Intel(R) X553 L (1GbE)> mem 0x80800000-0x809fffff,0x80c00000-0x80c03fff at device 0.1 on pci9
    ix3: Using 2048 TX descriptors and 2048 RX descriptors
    ix3: Using 2 RX queues 2 TX queues
    ix3: Using MSI-X interrupts with 3 vectors
    ix3: allocated for 2 queues
    ix3: allocated for 2 rx queues

That's on a 4100 but on a 6100 the same NIC shows:

    ix3: <Intel(R) X553 L (1GbE)> mem 0x80800000-0x809fffff,0x80c00000-0x80c03fff at device 0.1 on pci10
    ix3: Using 2048 TX descriptors and 2048 RX descriptors
    ix3: Using 4 RX queues 4 TX queues
    ix3: Using MSI-X interrupts with 5 vectors
    ix3: allocated for 4 queues
    ix3: allocated for 4 rx queues

Steve
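If you want to check several interfaces at once, the queue counts in boot messages like the ones above can be pulled out with a few lines of Python. This is only a rough sketch: the function name `queue_counts` and the sample text are made up for illustration, and the pattern assumes the "Using N RX queues N TX queues" wording shown for the ix driver. Other drivers may word their messages differently.

```python
import re

# Matches lines such as "ix3: Using 2 RX queues 2 TX queues".
# Assumption: this wording is what the ix driver prints in this thread;
# other drivers may use a different message.
QUEUE_RE = re.compile(r"(\w+): Using (\d+) RX queues (\d+) TX queues")


def queue_counts(boot_log):
    """Return {interface: (rx_queues, tx_queues)} found in boot_log."""
    return {
        nic: (int(rx), int(tx))
        for nic, rx, tx in QUEUE_RE.findall(boot_log)
    }


# Sample text copied from the boot messages quoted in this thread.
SAMPLE_LOG = """\
ix3: Using 2048 TX descriptors and 2048 RX descriptors
ix3: Using 2 RX queues 2 TX queues
ix3: Using MSI-X interrupts with 3 vectors
"""

if __name__ == "__main__":
    for nic, (rx, tx) in queue_counts(SAMPLE_LOG).items():
        print(f"{nic}: {rx} RX / {tx} TX queues")
```

On a real system you would pass it the saved output of `dmesg` instead of the sample string. An interface reporting only one RX and one TX queue would be handling its traffic on a single queue.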

-

@stephenw10 we don't have a vmxnet driver in KVM. Where did you get it?

-

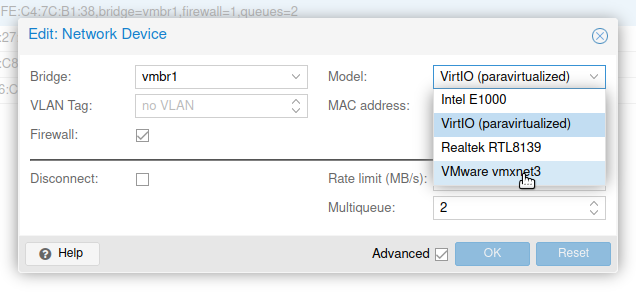

It's available by default in Proxmox:

And since that is built on KVM I would assume it can be used there also. I have no idea how to add it though.

Steve
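For anyone wanting to try this on plain KVM with libvirt, the NIC type lives in the `<model type='...'/>` element of each `<interface>` in the domain XML. Below is an untested sketch of making that change on a copy of the XML (for example one saved with `virsh dumpxml`). It assumes your QEMU build includes the `vmxnet3` device and that libvirt accepts it as a model type. The helper name `switch_nic_model` and the sample domain are hypothetical. Try it on a lab VM first, since the interface name inside pfSense will change from vtnetX to vmxX and the interfaces will need reassigning.

```python
import xml.etree.ElementTree as ET


def switch_nic_model(domain_xml, old="virtio", new="vmxnet3"):
    """Return domain_xml with every NIC of model `old` changed to `new`.

    Assumption: "vmxnet3" is available in the local QEMU build.
    """
    root = ET.fromstring(domain_xml)
    # NIC definitions sit under <devices><interface><model type="..."/>.
    for model in root.iterfind("./devices/interface/model"):
        if model.get("type") == old:
            model.set("type", new)
    return ET.tostring(root, encoding="unicode")


# Hypothetical minimal domain definition, for illustration only.
SAMPLE_DOMAIN = """\
<domain type='kvm'>
  <name>pfsense-test</name>
  <devices>
    <interface type='bridge'>
      <source bridge='br0'/>
      <model type='virtio'/>
    </interface>
  </devices>
</domain>
"""

if __name__ == "__main__":
    print(switch_nic_model(SAMPLE_DOMAIN))
```

The script only rewrites text. Applying the result to a VM would still go through `virsh define` (or `virsh edit`) with the VM shut down, and it is worth keeping the original XML so you can roll back.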

-

@stephenw10 yeah, I forgot Proxmox supports 'vmxnet'. I assume it's not as optimised as it is in ESXi, but I do wonder if a multi-queue 'vmxnet' on Proxmox is more capable than a single-queue 'vtnet'. It will be interesting to find out.