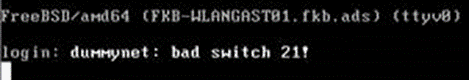

dummynet: bad switch 21!

-

I am seeing this dummynet error as well on the SG-1100 version 22.05. Enabling captive portal on OPT1 will continually generate this error. If I disable CP, everything is fine again.

I also have limiters, but they are all disabled since limiters + captive portal broke in the last (22.01) version. Very frustrating!

-

We have a similar problem with a customer. Running plus-22.05 inside a VM in VMware. Big VM, 6 cores, on a box at the upper end of 5GHz per core.

The customer is running the new Plus version because he didn't want to wait for the 2.7 release, and as this is to become a production system we went the Plus route.

A Captive Portal is set up for a bigger audience, with the portal limit at ~4Mbps per user. The system sits at 100% CPU and the connected users see high latency in the 300-400ms range plus packet loss. With the limit raised to around 10Mbps, the latency drops quite a bit and the loss stops. But after a few hours (24h at most) the pfSense VM freezes up completely. As the lockups didn't produce a kernel dump or anything, this wasn't really debuggable, but today he finally had a last message on the console:

dummynet: bad switch 21!

So it seems somehow related to the new portal and limiter system, which now works with pf instead of ipfw as before. The customer ran tests with the old 2.6 version, and that didn't lock up the machine (but it had other quirks, which is why we wanted to use 22.05 for the newer portal).

@bitrot AFAIK our customer is NOT running multiple WANs so multiWAN doesn't necessarily play into that particular problem.

As it isn't a live instance yet(!) we'd be happy to provide support & testing ground or test in any way possible to perhaps bag and fix that problem :)

Cheers

-

The bad switch error has been fixed, it has to do with IPv6 on the CP zone:

https://redmine.pfsense.org/issues/13290

The CPU usage may have to do with pfBlockerNG if it's installed - patch here:

https://redmine.pfsense.org/issues/13154#note-8

Unclear on the freezing up part. I suggest monitoring resource usage to see if there's a clue there.
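One possible way to follow that suggestion when the box freezes without leaving a dump: keep a small logger running that appends the load averages to a file and forces each line to disk, so the last readings before a lockup survive. This is only a sketch. It assumes a Python 3 interpreter is available on the firewall, and the function names and log path are made up for illustration.

```python
import os
import time
from datetime import datetime, timezone


def format_sample(now, load):
    """Return one log line: ISO timestamp plus 1/5/15-minute load averages."""
    one, five, fifteen = load
    return f"{now.isoformat()} load1={one:.2f} load5={five:.2f} load15={fifteen:.2f}"


def log_load(path, interval_s=10.0, samples=6):
    """Append `samples` load-average readings to `path`, one every `interval_s` seconds."""
    with open(path, "a", encoding="utf-8") as fh:
        for i in range(samples):
            line = format_sample(datetime.now(timezone.utc), os.getloadavg())
            fh.write(line + "\n")
            fh.flush()
            os.fsync(fh.fileno())  # force to disk so the last lines survive a hard freeze
            if i < samples - 1:
                time.sleep(interval_s)


if __name__ == "__main__":
    # Hypothetical log location; pick any path on persistent storage.
    log_load("/root/load-history.log")
```

Load average alone won't say what is eating the CPU, but a steady climb in the minutes before a freeze would at least narrow down when to look.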

-

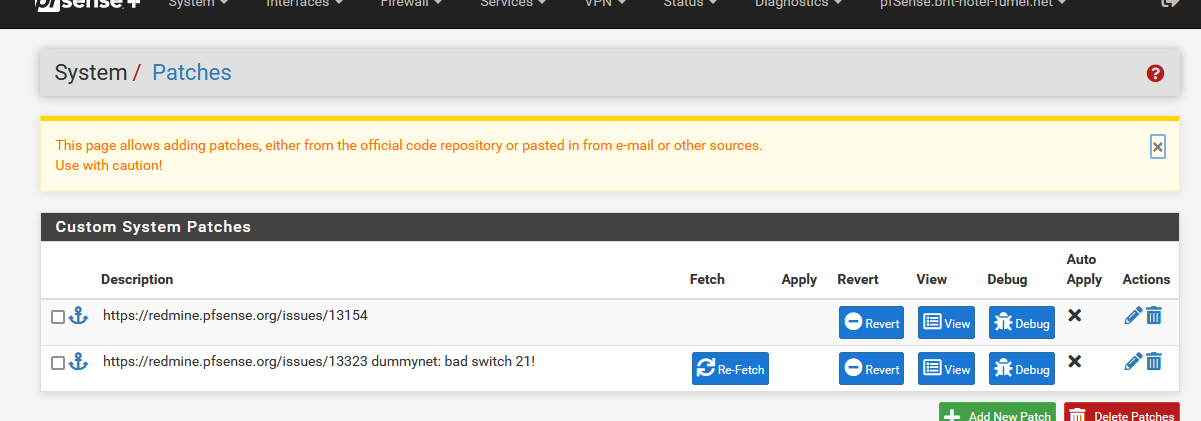

Install the 'Patches' pfSense package.

For 22.05 it will be empty. Now, add "13290":

@marcosm mentioned https://redmine.pfsense.org/issues/13290 and over there, in the last comment, you will find good patch-food that took care of the dummynet messages: https://redmine.pfsense.org/issues/13323

Btw : https://redmine.pfsense.org/issues/13154 is a pfblockerng-devel patch.

-

@gertjan I already know ;) Thanks though.

@marcosm said in dummynet: bad switch 21!:

The bad switch error has been fixed, it has to do with IPv6 on the CP zone:

https://redmine.pfsense.org/issues/13290

The CPU usage may have to do with pfBlockerNG if it's installed - patch here:

https://redmine.pfsense.org/issues/13154#note-8

Unclear on the freezing up part. I suggest monitoring resource usage to see if there's a clue there.

I have a session coming up with the customer and will check. He had multiple freezes yesterday, so he disabled everything until tomorrow so we can have a look again. The freeze/lockup seems quite annoying. As the setup is relatively simple, there's no pfBlockerNG right now, so the CPU load seems to come from something else. Will let you know as soon as I see more clearly :)

Cheers

-

So actually I've got feedback from the client. Indeed he installed pfBlocker unknown to us - so the CPU problem seems very much accounted for. But:

We took the patch from Redmine issue 13290, note 6:

https://redmine.pfsense.org/issues/13290#note-6

But with only that part applied, the dummynet message was gone (nice!) and a client was redirected to the portal, but after logging in the client gets the success / click-to-disconnect page and NO traffic passes. That was with a Windows test client that had IPv6 disabled, so as not to trigger the dummynet issue accidentally. So it seems the patch described in note 6 of the Redmine issue is only half the patch? Or is it missing something? If he reverts the patch, the client simply gets the "success" message on the portal page and has access as expected.

Did we miss some of the patch content?

-

@jegr

The fix for the dummynet error can't be applied via a patch - that will need to wait for 22.11. It's mainly cosmetic as long as IPv6 doesn't matter in their setup. The patch you applied is an incomplete patch for the policy routing issue here: https://redmine.pfsense.org/issues/13323

-

@jegr said in dummynet: bad switch 21!:

Or missing something?

Yep. Read my post above.

Go to https://redmine.pfsense.org/issues/13323

@Kristof Provost a while ago :

And that fix has landed: https://github.com/pfsense/pfsense/commit/add6447b9dc801144141bb24f8c264e03a0e7cae

I know, it isn't very clear.

@marcosm said in dummynet: bad switch 21!:

@jegr

The fix for the dummynet error can't be applied via a patch - that will need to wait for 22.11. It's mainly cosmetic as long as IPv6 doesn't matter in their setup. The patch you applied is an incomplete patch for the policy routing issue here: https://redmine.pfsense.org/issues/13323

Since I used the two patches I showed above, the "dummynet error" messages I saw after installing 22.05 were gone.

I use the captive portal on an interface.

The captive portal is "IPv4" only.

I thought IPv6 traffic entering the captive portal interface was provoking these dummy messages.

Since the patch(es), this message is gone.

@jegr said in dummynet: bad switch 21!:

feedback from the client. Indeed he installed pfBlocker unknown to us

Yeah, we love them, these "clients" ;)

Before, you would be fine with someone who thinks he knows DNS.

With pfB, you have to have someone who knows that he 'thinks' DNS.

Start billing the client more, and get your hands on that guy that 'thinks' ;) (could be you)

-

@marcosm Ah I see. Alright, as that's a prod system, I can't let them upgrade to 22.09-snaps/22.11 dev yet, but as long as it's cosmetic, that's fine, and IPv6 doesn't play any role in their CP usage scenario anyway.

Then I hope the other problems were only related to the cpu-maxout of pfBlocker as were the lockups.

The other thing mentioned was the latency and packet loss occurring when the limit per user is set to around 4Mbps, which normalized when it was increased to ~10Mbps. We'll test and see whether that was also related to the high CPU problem or whether it is portal-related in some way. It wouldn't be impossible that 4Mbps is simply too small a limit, with too many packets bottling up in general for the mobile devices on that subnet. But we'll see how the tests go.

Thanks!
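For a rough feel of how much latency a small per-user limit can add by itself, the worst-case delay of a full limiter queue can be estimated with simple arithmetic. The sketch below assumes a plain FIFO queue of 50 slots (the default mentioned in the ipfw/dummynet documentation) filled with 1500-byte packets. Both numbers are assumptions, not values taken from this customer's setup, and the function name is made up for illustration.

```python
def worst_case_queue_delay_ms(rate_mbps, queue_slots=50, packet_bytes=1500):
    """Time in ms to drain a full FIFO queue of full-size packets at the given rate."""
    queued_bits = queue_slots * packet_bytes * 8
    return queued_bits / (rate_mbps * 1_000_000) * 1000


if __name__ == "__main__":
    # Compare the two per-user limits discussed in the thread.
    for rate in (4, 10):
        print(f"{rate} Mbit/s: {worst_case_queue_delay_ms(rate):.0f} ms")
```

Under those assumptions a saturated 4Mbps pipe can add about 150 ms of queueing delay, against about 60 ms at 10Mbps. That would not explain the full 300-400ms on its own, but it fits the observation that latency dropped noticeably once the limit was raised.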

-

@gertjan said in dummynet: bad switch 21!:

Start billing the client more, and get your hands on that guy that 'thinks' ;) (could be you)

We're already billing them ;) That's not a problem. The problem was lack of communication. We talked about pfB in the design phase of the project, but afterwards we got no feedback, so I assumed we were still at the stage we were at before. As that is a closed system providing public WiFi in a big location, I can't just jump in and have a look myself: it is almost air-gapped from their normal systems, so that it can provide internet while waiting without compromising internal systems.

But after more than 20 years you get used to that

And after more than 16 years of pfSense and the Forum you get used to even more

At least I can fall back to the Guru-status and tell him it's not our (or the software's) fault that he didn't supply enough information

Now we just have to wait for the first user test cases, hoping that without the CPU lockups from the pfB problem the other things will resolve themselves.

Cheers

\jens