High CPU load on single CPU core

-

@stephenw10 said in High CPU load on single CPU core:

Mmm, that is where the load from pf itself appears. 100% of one CPU core on a C3758 is a lot for 200Mbps though. And pf loading would normally be spread across queues/cores unless the NICs are being deliberately limited to one queue.

Steve

Do you have any idea or hint where I might be able to see if there is any (accidental?) setting to use only one core per NIC?

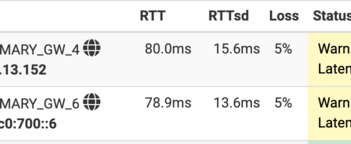

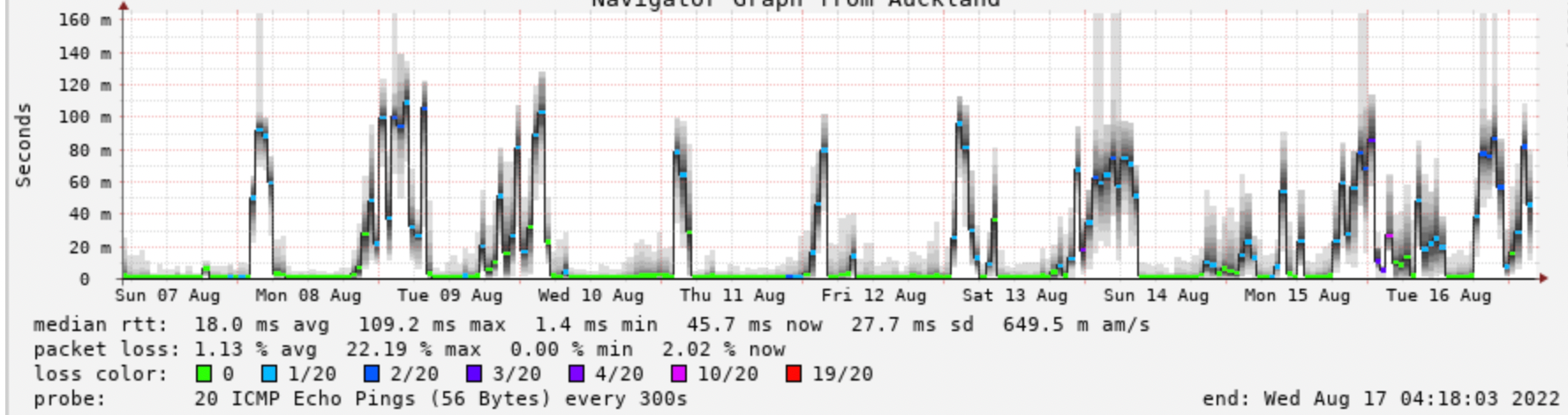

While this occurs (which has been happening more and more frequently over the past 2 weeks for us) we are seeing these spikes in latency and packet loss to our (directly connected) upstream.

When this issue isn't occurring we usually see under 1ms latency.

Is there a way to see what type of traffic is triggering this CPU use?

The reason why I think it's a "certain type of traffic" is because we sometimes see 200Mbps (or more if we run speedtests) without any issues at all.

Here is an example of (external) monitoring.

-

What pfSense version are you running?

Easiest way to check the NIC queues is usually the boot log. You should see something like:

    ix0: <Intel(R) X553 N (SFP+)> mem 0x80400000-0x805fffff,0x80604000-0x80607fff at device 0.0 on pci9
    ix0: Using 2048 TX descriptors and 2048 RX descriptors
    ix0: Using 4 RX queues 4 TX queues

Steve
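If it helps, here is a minimal sketch for pulling only the queue and interrupt lines for every ix interface out of the boot log in one go. The function name `show_nic_queues` is made up for illustration, and the match pattern is an assumption based on the messages quoted in this thread; other drivers or versions may word them differently.

```shell
#!/bin/sh
# Hypothetical helper: print boot-log lines that mention queues or MSI/MSI-X
# for any ixN interface. The log path defaults to the one used in this thread.
show_nic_queues() {
    log="${1:-/var/log/dmesg.boot}"
    # Match lines such as "ix3: allocated for 1 queues" or
    # "ix3: Using MSI-X interrupts with 9 vectors".
    grep -E '^ix[0-9]+: .*(queues|MSI)' "$log"
}
```

Run `show_nic_queues` with no arguments on the firewall, or pass a saved copy of the boot log as the first argument.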

-

@stephenw10 said in High CPU load on single CPU core:

Easiest way to check the NIC queues is usually the boot log. You should see something like:

Oh interesting, this is what I see:

    $ cat /var/log/dmesg.boot | grep ix3
    ix3: <Intel(R) X553 (1GbE)> mem 0xdd200000-0xdd3fffff,0xdd600000-0xdd603fff at device 0.1 on pci7
    ix3: Using 2048 TX descriptors and 2048 RX descriptors
    ix3: Using an MSI interrupt
    ix3: allocated for 1 queues
    ix3: allocated for 1 rx queues
    ix3: Ethernet address: ac:1f:6b:b1:d8:af
    ix3: eTrack 0x8000087c
    ix3: netmap queues/slots: TX 1/2048, RX 1/2048

ix3 being the WAN interface, but all 4 ixN devices are showing "allocated for 1 queues".

This is pfSense 2.6 CE, but this box is a few years old, from the v2.4 days, and has been incrementally updated (so it might have some settings it now should not).

-

Ah, maybe you have set queues to 1 in /boot/loader.conf.local?

That was a common tweak back in the FreeBSD 10 (or was it 8?) era when multiqueue drivers could prove unstable.

But those should be at least 4 queues.

I would still have expected it to pass far more though even with single queue NICs. But that does explain why you are seeing the load on one core.

Steve
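A rough sketch for spotting leftover queue or interrupt overrides in the loader files. The function name `find_queue_overrides` is hypothetical, and the search pattern is only a guess at what such tunables tend to contain ("queue", "nrxqs"/"ntxqs", "msix"); the exact tunable names depend on the driver and FreeBSD version, so treat any hit as something to look up rather than proof.

```shell
#!/bin/sh
# Hypothetical helper: list lines in the loader config files that look like
# NIC queue or MSI-X overrides. Prints file:line-number:content for each hit.
find_queue_overrides() {
    # With no arguments, check the two loader files mentioned in this thread.
    [ "$#" -gt 0 ] || set -- /boot/loader.conf /boot/loader.conf.local
    for f in "$@"; do
        # Skip files that do not exist (loader.conf.local is optional).
        [ -r "$f" ] || continue
        # Assumed pattern; adjust for your driver's tunable names.
        grep -HinE 'queue|n[rt]xqs|msix' "$f" || true
    done
    return 0
}
```

Calling `find_queue_overrides` with no arguments checks both files; no output means nothing matched the assumed pattern.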

-

So I just removed a bunch of older configs, and now this is what I see:

    ix3: <Intel(R) X553 (1GbE)> mem 0xdd200000-0xdd3fffff,0xdd600000-0xdd603fff at device 0.1 on pci7
    ix3: Using 2048 TX descriptors and 2048 RX descriptors
    ix3: Using 8 RX queues 8 TX queues
    ix3: Using MSI-X interrupts with 9 vectors
    ix3: allocated for 8 queues
    ix3: allocated for 8 rx queues

And holy wow, things are running beautifully (for now).

What's even more crazy is that when the network is under-utilised we WERE getting ~0.7ms to our transit provider, and now we're seeing a stable 0.3ms (and it stays at 0.3ms under our regular 200Mbit load).

I might be counting my chickens before they hatch, but this change alone seems to have made a dramatic improvement (better than anything we have recorded in our historic smokepings for the past 2 years, even).

Thanks for pointing this out!

-

Nice.

-

@stephenw10

Hi,

I was looking at my own loader.conf since I didn't have the loader.conf.local.

Is this normal?

    kern.cam.boot_delay=10000
    kern.ipc.nmbclusters="1000000"
    kern.ipc.nmbjumbop="524288"
    kern.ipc.nmbjumbo9="524288"
    opensolaris_load="YES"
    zfs_load="YES"
    kern.geom.label.gptid.enable="0"
    kern.geom.label.disk_ident.enable="0"
    kern.geom.label.disk_ident.enable="0"
    kern.geom.label.gptid.enable="0"
    opensolaris_load="YES"
    zfs_load="YES"
    net.link.ifqmaxlen="128"
    net.link.ifqmaxlen="128"
    net.link.ifqmaxlen="128"
    net.link.ifqmaxlen="128"
    net.link.ifqmaxlen="128"
    net.link.ifqmaxlen="128"
    net.link.ifqmaxlen="128"
    net.link.ifqmaxlen="128"
    net.link.ifqmaxlen="128"
    net.link.ifqmaxlen="128"
    net.link.ifqmaxlen="128"
    net.link.ifqmaxlen="128"
    net.link.ifqmaxlen="128"
    net.link.ifqmaxlen="128"
    net.link.ifqmaxlen="128"
    net.link.ifqmaxlen="128"
    net.link.ifqmaxlen="128"
    net.link.ifqmaxlen="128"
    autoboot_delay="3"
    hw.hn.vf_transparent="0"
    hw.hn.use_if_start="1"
    net.link.ifqmaxlen="128"

Why so many net.link.ifqmaxlen="128"?

And some other double lines too.

-

@moonknight I get those double lines as well. It’s really weird!

-

@moonknight said in High CPU load on single CPU core:

Why so many net.link.ifqmaxlen="128"?

Your machine is stuttering ...

(joke)

-

It's a known issue but it's only cosmetic. The duplicate entries don't hurt anything.

Steve
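For anyone curious how many repeats they have, a small sketch that counts the duplicated lines in loader.conf. The function name `count_duplicate_lines` is made up for this example; it only reads the file and changes nothing.

```shell
#!/bin/sh
# Hypothetical helper: show each line that appears more than once in a
# loader config file, prefixed by how many times it occurs.
count_duplicate_lines() {
    file="${1:-/boot/loader.conf}"
    # Drop blank lines, group identical lines, keep only counts above 1.
    grep -v '^[[:space:]]*$' "$file" | sort | uniq -c | awk '$1 > 1'
}
```

Run `count_duplicate_lines` on the firewall, or pass another file path as the first argument.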