FQ_CODEL only working on downstream?

-

I'm experiencing some problems with FQ_CODEL.

It appears FQ_CODEL is working on downstream but not on upstream. I applied rules limiting downstream to 30 Mbps, and speed tests showed the limit being applied correctly. Doing the same for upstream didn't work, though.

So I'm not really sure where to go from here. The correct rules are applied, the same ones that worked perfectly in 2.4. (I'm now on the 2.7 development build, since issues had been reported in 2.6.)

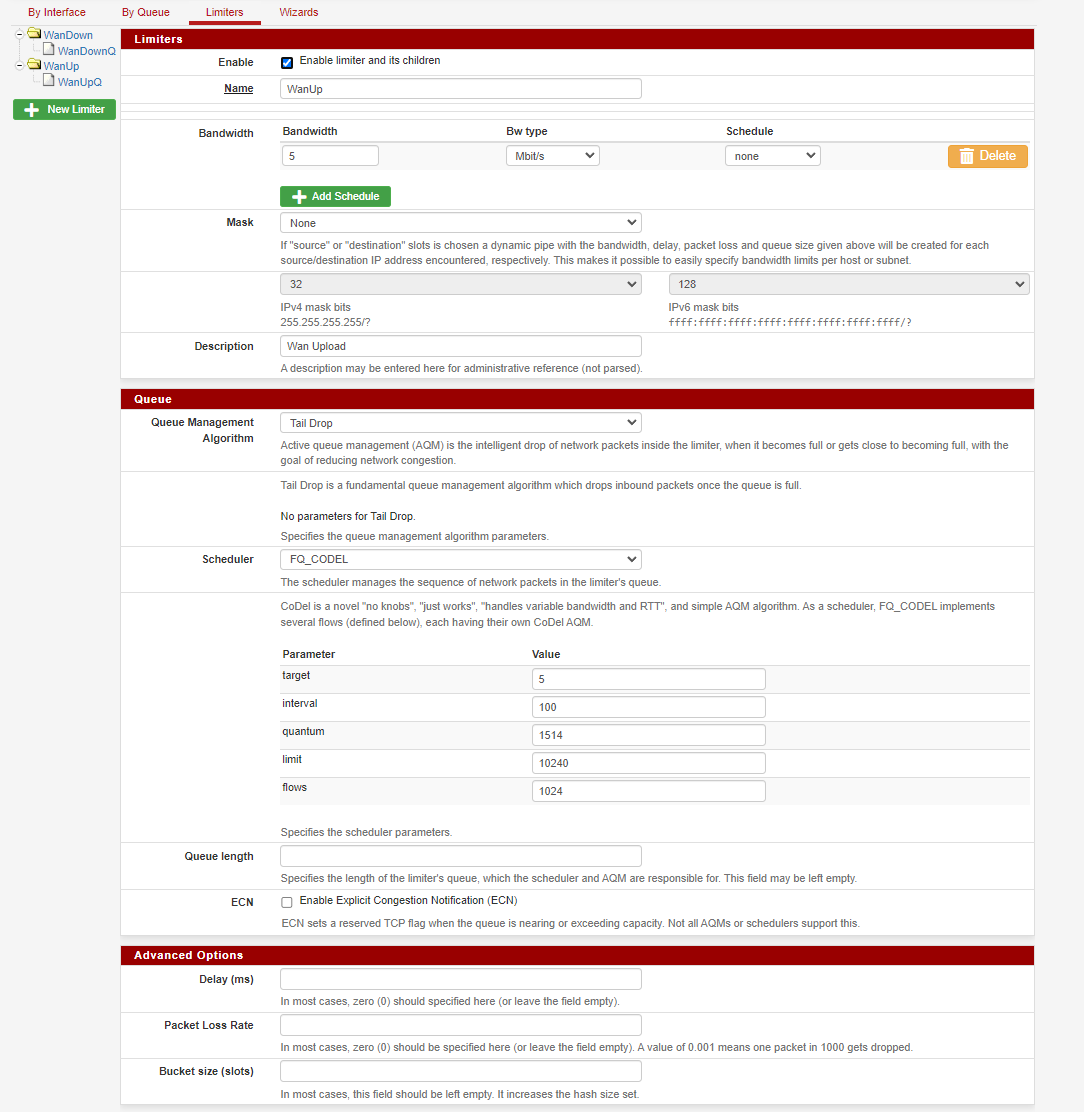

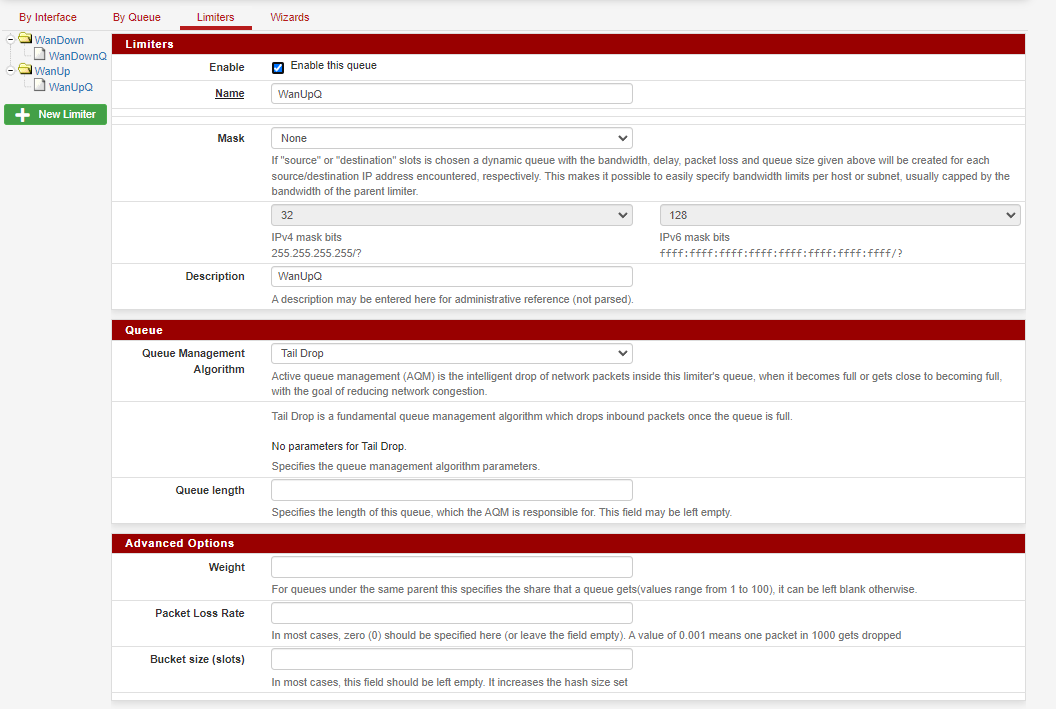

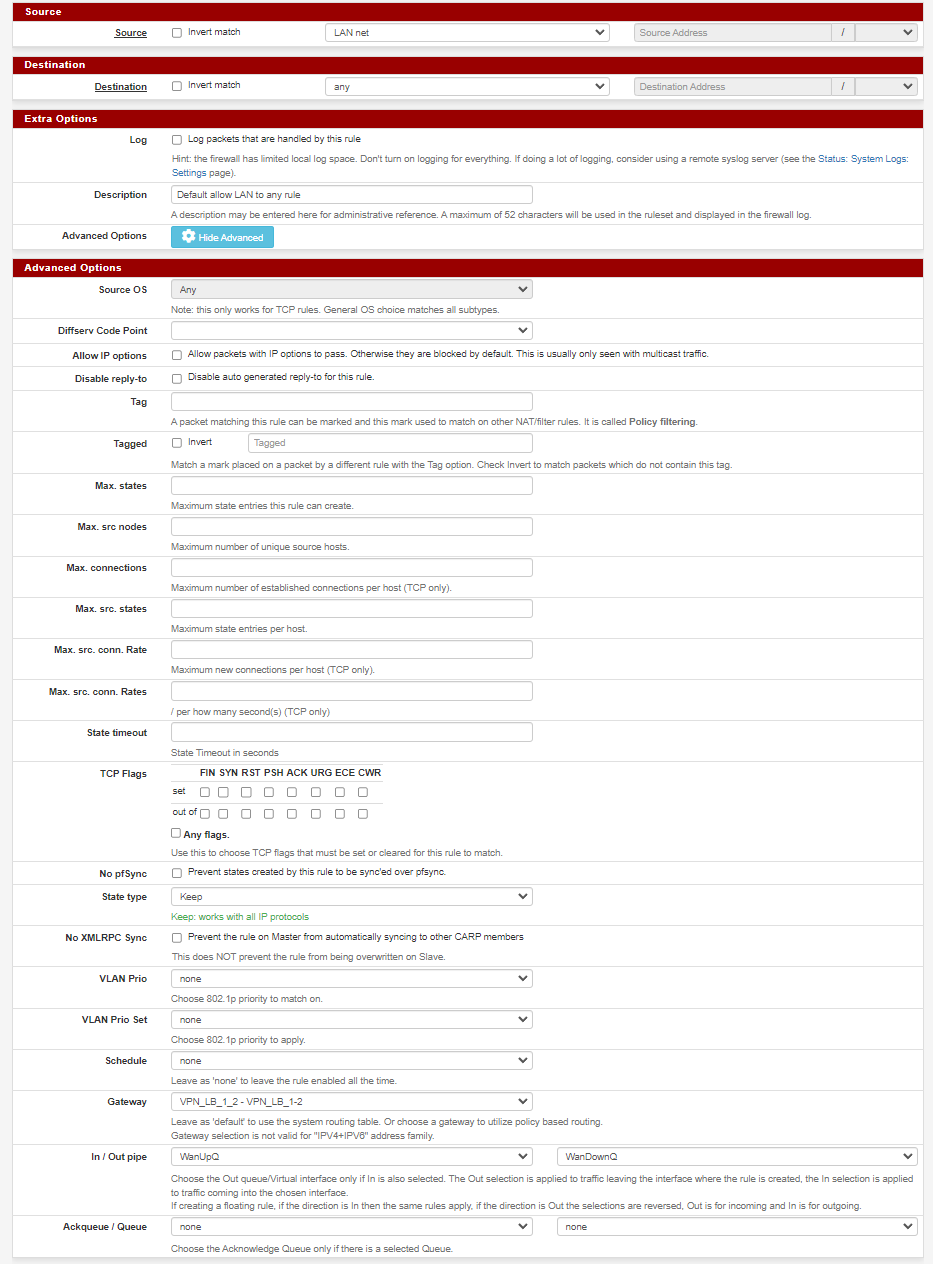

I've posted some screenshots of my config in case anybody notices any glaring issues. I'm certain traffic is flowing through the rules and the limiter, as you can see the counters go up once the transfer starts.

Any ideas on how to get this resolved? Is anybody else experiencing the same issue? Has anybody successfully tested and limited their upstream in 2.7?

-

Well... in the other thread, people reported that upgrading to pfSense Plus resolved the issue, so I tried that, only to find that it corrupted my entire config, forcing me to reinstall from scratch. (Fortunately I had backed up my settings, or that would have been another 5-hour job.)

So I reinstalled 2.6.0 and tried to upgrade to pfSense Plus, only to hit a certificate error (Googling suggests it's a Netgate backend issue, but who knows). So I restored my previous config on 2.6.0 instead (you know, the version with reported problems with FQ_CODEL)....

Oh, but wait, the reported problems were supposed to be with downstream, right?

I genuinely have no clue here. It's clear there are major bugs in pfSense that aren't getting resolved. How can regular usage end up 'breaking' pfSense so completely that major functionality doesn't work, yet still reports that it's working... requiring a complete reinstall to correct?

Oh well, everything is functional now and working as intended. For anybody else who stumbles on a similar problem, a reasonable course of action is to back up your config and reinstall. It may or may not resolve your issue.

-

Well, good news and bad news: FQ_CODEL seems to be working for all physical clients and for virtual machines, but not for Docker containers.

I run pfSense in a virtual machine on my ESXi host, along with unRAID in another virtual machine on the same physical host, and the unRAID VM runs Docker containers.

- ESXi host
  - pfSense VM
  - unRAID VM
    - Docker containers

I have 2 physical Ethernet ports on the ESXi host, so I dedicated one to WAN and one to LAN for easy segregation, with pfSense being the only VM with access to the WAN vSwitch.

For Docker to give containers their own addresses on the subnet (hosting them at addresses different from the host's), you need a macvlan or ipvlan network in Docker. I opted for macvlan, as some random internet article mentioned that CPU overhead was higher with ipvlan than with macvlan.

Macvlan works by giving each container its own (forged) MAC address. The security settings on my ESXi virtual switch didn't permit this, so I was forced to enable promiscuous mode and forged transmits, placing the Docker host on its own port group within the same vSwitch.
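To make the ESXi side of this concrete, here is a minimal Python sketch of why macvlan trips the vSwitch security policy while ipvlan does not. It is a simplified model under stated assumptions: only promiscuous mode and forged transmits are modelled, broadcast/multicast traffic is ignored, and the class, function names and MAC addresses are hypothetical, not part of any ESXi or Docker API.

```python
from dataclasses import dataclass


# Hypothetical, simplified model of an ESXi port group's security policy.
# Only the two settings mentioned in the post are modelled.
@dataclass(frozen=True)
class PortGroupSecurity:
    promiscuous_mode: bool = False
    forged_transmits: bool = False


def container_mac(driver: str, vnic_mac: str, own_mac: str) -> str:
    """MAC address a container's frames carry on the wire.

    macvlan gives each container its own MAC; ipvlan (L2 mode) reuses the
    parent interface's MAC, i.e. the Docker host VM's vNIC MAC.
    """
    if driver == "macvlan":
        return own_mac
    if driver == "ipvlan":
        return vnic_mac
    raise ValueError(f"unknown driver: {driver}")


def egress_allowed(policy: PortGroupSecurity, vnic_mac: str, src_mac: str) -> bool:
    # With forged transmits rejected, frames whose source MAC differs
    # from the vNIC's MAC are dropped by the vSwitch.
    return src_mac == vnic_mac or policy.forged_transmits


def ingress_delivered(policy: PortGroupSecurity, vnic_mac: str, dst_mac: str) -> bool:
    # Without promiscuous mode, unicast frames are only delivered to the
    # vNIC whose MAC they are addressed to.
    return dst_mac == vnic_mac or policy.promiscuous_mode


if __name__ == "__main__":
    vnic = "00:50:56:aa:bb:cc"  # hypothetical Docker host vNIC MAC
    own = "02:42:c0:a8:01:0a"   # hypothetical macvlan container MAC
    strict = PortGroupSecurity()  # both settings rejected
    for driver in ("macvlan", "ipvlan"):
        mac = container_mac(driver, vnic, own)
        print(f"{driver}: egress={egress_allowed(strict, vnic, mac)} "
              f"ingress={ingress_delivered(strict, vnic, mac)}")
```

Under this model, macvlan containers only get traffic in both directions once both settings are relaxed, while ipvlan containers work with the defaults because they share the host's MAC.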

This fixed the Docker addressing issue but created problems with FQ_CODEL: incoming traffic destined for Docker no longer appeared to pass through the pfSense VM first. I thought it was strange that a rule which usually has 2k+ states had only 7, but I didn't think much of it at the time.

I've now switched Docker to an ipvlan network, but this hasn't resolved the issue. I disabled promiscuous mode and forged transmits and moved the Docker host back onto the same port group within the same vSwitch. Traffic now seems to be flowing properly through the pfSense rules, but for some reason it is still bypassing FQ_CODEL.

At this point I'm not really sure where to go from here. It could be pfSense, it could be Docker, or it could be my own user error. One strange observation is that it sometimes works.

For example, when starting a large downstream file transfer (for now I have set quite low artificial limits of 50 Mbps down and 15 Mbps up, so I can easily observe whether it's clamping appropriately), it did clamp for a while, although at a lower rate than it should have, and then the rate progressively climbed. Without the rules it would have started off at 600 Mbps. While it was clamped at 25 Mbps (even though it should have been 50), the traffic was visible and updating in the firewall rules. Once it went beyond that, the left-hand counter stopped going up.

Okay, I returned to this after a couple of hours of head-scratching and found the culprit. The half speed was caused by the LAN-side rules plus the floating rules, which in effect double up and halve the bandwidth. Disabling one set of them resolved that part.
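The halving lines up with simple arithmetic if each packet is counted against the same limiter twice. Here is a minimal Python sketch, assuming (not confirmed here against pfSense documentation) that a packet matching both the LAN rule and the floating rule is charged against the same 50 Mbps pipe on each pass; the function name is hypothetical.

```python
def effective_rate_mbps(pipe_rate_mbps: float, passes_through_pipe: int) -> float:
    """Hypothetical model of a limiter pipe being applied more than once.

    Assumes every packet of the transfer is charged against the same pipe
    once per matching rule (e.g. a LAN interface rule and a floating rule),
    so the pipe's configured budget is split across those passes.
    """
    if pipe_rate_mbps <= 0:
        raise ValueError("pipe rate must be positive")
    if passes_through_pipe < 1:
        raise ValueError("traffic must pass through the pipe at least once")
    return pipe_rate_mbps / passes_through_pipe


if __name__ == "__main__":
    # 50 Mbps is the artificial downstream limit used in the post.
    for passes in (1, 2):
        print(f"{passes} pass(es): {effective_rate_mbps(50, passes)} Mbps")
```

With two passes the model gives 25 Mbps, which is the clamped rate observed during the transfer.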

Since this is a service that requires port forwarding, I had an additional rule. (I don't use floating rules; I prefer the old style of applying rules to the LAN interfaces, with a single LAN-to-LAN rule at the top that doesn't use FQ_CODEL.) It appears a race condition was happening, in that traffic meant for the VPN was split between two different rules: the LAN rule and the port-forwarding rule on the VPN interface. This is what caused the weird behaviour of it clamping for a while and then not. Once a significant amount of clamping had occurred, traffic started flowing through the VPN interface rule instead, which didn't have FQ_CODEL limiters on it.

Why this behaviour occurs I have no clue; it's a fun little race condition. When you add the two rules' counters together, they account for the correct amount of traffic flowing through the interface.
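A minimal Python sketch of that split, assuming the traffic simply divides between the two rules by some fraction; the function name and the example fractions are hypothetical, while the 600 Mbps and 50 Mbps figures come from the post. It shows why the aggregate escapes the limit as more traffic lands on the unlimited VPN-interface rule, and why the two rules' counters always add up to the interface total.

```python
def split_throughput(offered_mbps: float, share_limited: float, limit_mbps: float):
    """Hypothetical model of traffic split between two firewall rules.

    share_limited is the fraction of the offered traffic matched by the LAN
    rule carrying the FQ_CODEL limiter; the remainder is matched by the VPN
    port-forward rule, which has no limiter.
    Returns (limited_mbps, unlimited_mbps, interface_total_mbps).
    """
    if not 0.0 <= share_limited <= 1.0:
        raise ValueError("share_limited must be between 0 and 1")
    # Only the LAN-rule portion is capped by the limiter.
    limited = min(offered_mbps * share_limited, limit_mbps)
    # The VPN-rule portion passes through uncapped.
    unlimited = offered_mbps * (1.0 - share_limited)
    return limited, unlimited, limited + unlimited


if __name__ == "__main__":
    # 600 Mbps offered (the unlimited rate from the post), 50 Mbps limit.
    for share in (1.0, 0.5, 0.25):
        limited, unlimited, total = split_throughput(600, share, 50)
        print(f"share={share}: LAN rule {limited} + VPN rule {unlimited} = {total} Mbps")
```

When all traffic matches the LAN rule the interface is held at 50 Mbps; as the share shifts to the VPN rule, the total climbs well past the limit, matching the "clamps, then creeps up" pattern described above.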

LAN side

VPN side

This all occurred on pfSense CE 2.6, and at this point I think I can comfortably say everything is working as normal.

In my foolishness I sidegraded/upgraded to pfSense Plus 22.05, and FQ_CODEL behaved differently, and worse.

In CE 2.6, FQ_CODEL clamping happens instantly. In 22.05 it was delayed, which caused latency spikes until it brought the traffic under control.

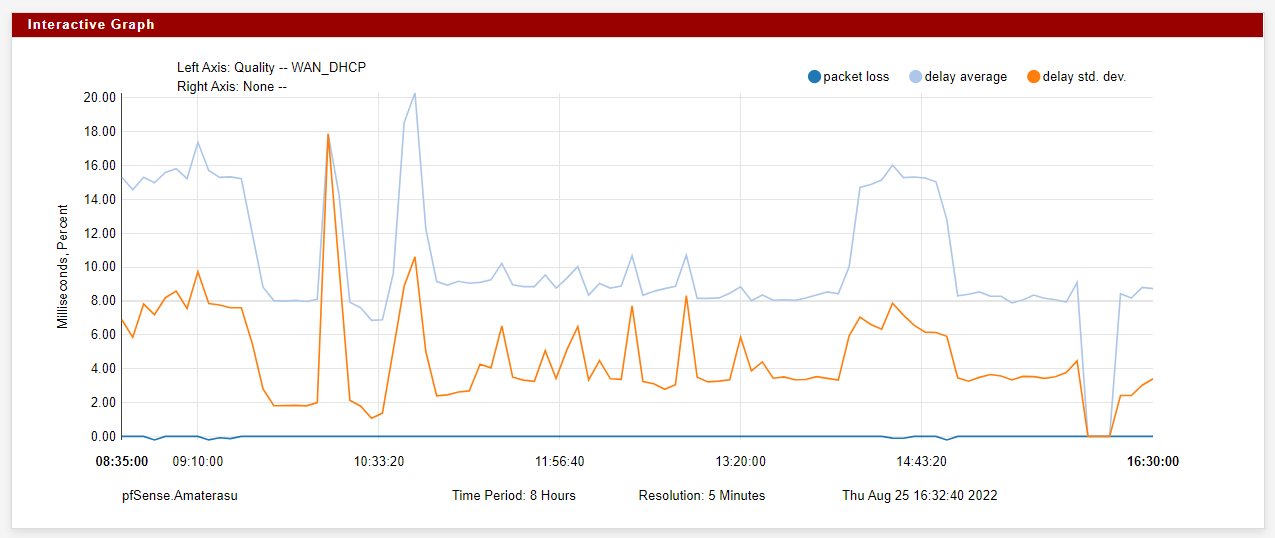

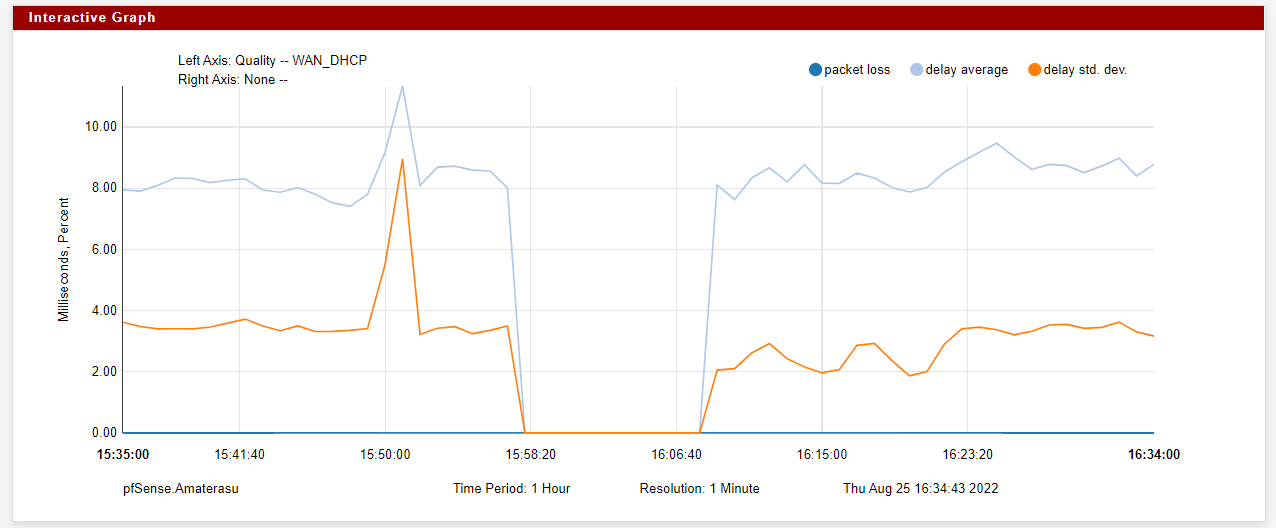

It might not be clearly visible, but you can kind of see the sawtoothing in 22.05.

It's more clearly visible at the 1h resolution, but that view only shows one instance of it.

So that's it! I've now got a working setup with pfSense 2.6.0, and I'll stick with it until something major comes down the pipeline or I run into bugs.

-

MindlessMavis referenced this topic.