PfSense on a Riverbed Steelhead

-

@foureight84 FWIW Proxmox is even worse when it comes to wearing out SSDs. :)

That and, for whatever reason, the 770 (with a Xeon E3-1125C v2 CPU, so 4 cores at 2.5 GHz) is almost embarrassingly slow at running VMs under Proxmox. I have one stacked with 32 GB of RAM running Proxmox, and I'm really disappointed in its VM performance. It is so bad that I think something must be misconfigured, but I can't find anything glaring.

-

@okijames haha I thought it would be. It's a pretty old CPU at this point.

-

@foureight84 Yup. On the plus side, it is a solid, reliable machine, so I use it for a few lightweight things that need to run 24/7 without a hiccup.

-

Hmm, it's not that old. Just how terrible is the performance?

-

@stephenw10 It reminds me of 486 performance. Then again, I'm basing that on my experience with a Windows VM, so maybe the lack of any sort of video card in the system is the real culprit?

-

Could be. Current Windows versions seem to have pretty significant hardware requirements.

-

Please help me.

```
root: smbmsg -p
Probing for devices on /dev/smb0:
Device @0x10: w
Device @0x32: rw
Device @0x46: rw
Device @0x4c: rw
Device @0x5a: w
Device @0x5c: rw
Device @0x62: rw
Device @0x7c: rw
Device @0x88: rw
Device @0xa2: rw
Device @0xac: rw
Device @0xd2: rw
Device @0xd8: rw
```

What is the "smbmsg" code?

Thanks.

-

What hardware is that?

-

@stephenw10

This is a Riverbed CXA-255.

-

The list we had from the file in this thread only identifies devices by motherboard part number, like '400-00300-01'. You'll probably need to find yours.

-

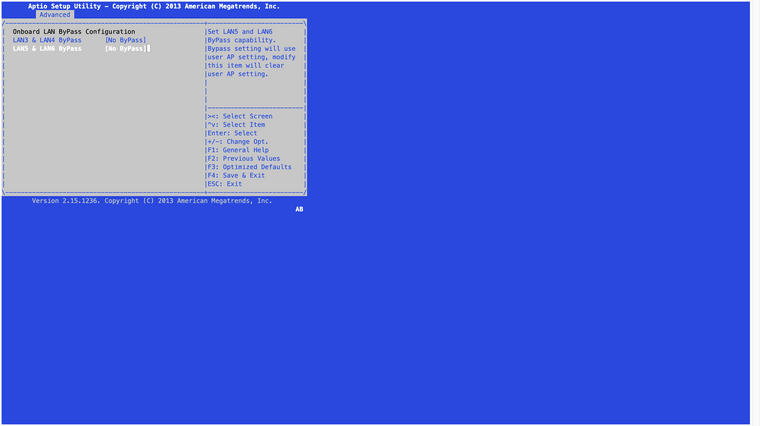

@pantigon I've not tried a CXA-255, but based on the chassis, it might have BIOS control of the bypass NICs like the 570/770 boxes, meaning you won't have to fiddle with SMBus settings. Take a look through the BIOS options for bypass NIC settings. I don't remember for sure, but I think they need to be set to "disable".

-

@pantigon I should have clarified. The LAN/WAN NICs should be enabled, but the "bypass" feature should be disabled.

As a reminder, the bypass feature causes the LAN/WAN ports to act like a wired crossover coupler when the box is powered off.

-

@pantigon I was poking around with my CX-770 today, and the BIOS setting for the bypass NICs should be set to "No Bypass". Yours might be the same.

-

@okijames Hi, on my CX-570 I have made the following settings in the BIOS:

but it doesn't seem to work in Proxmox 7.x.

Could you help me?

-

@anonsaber What's not working: Proxmox itself, or pfSense inside Proxmox? FWIW, Proxmox is a pain to install without a video card. What was your process?

-

@okijames I connected a GT 710 with a PCIe extension cable, booted from USB, and finished installing Proxmox.

The first two ports worked in Proxmox, but the last four ports were unavailable. (I connected my workstation directly to the CX-570 and ran ifup <interface name> for each NIC on the PVE host.)

Then, following this post, I changed the BIOS settings and booted back into Proxmox, but unfortunately these ports still didn't work.

PS: pfSense has not been installed or used.

-

Quick Q for moderators, if you'd prefer we move this conversation to Proxmox forums, I'd be happy to.

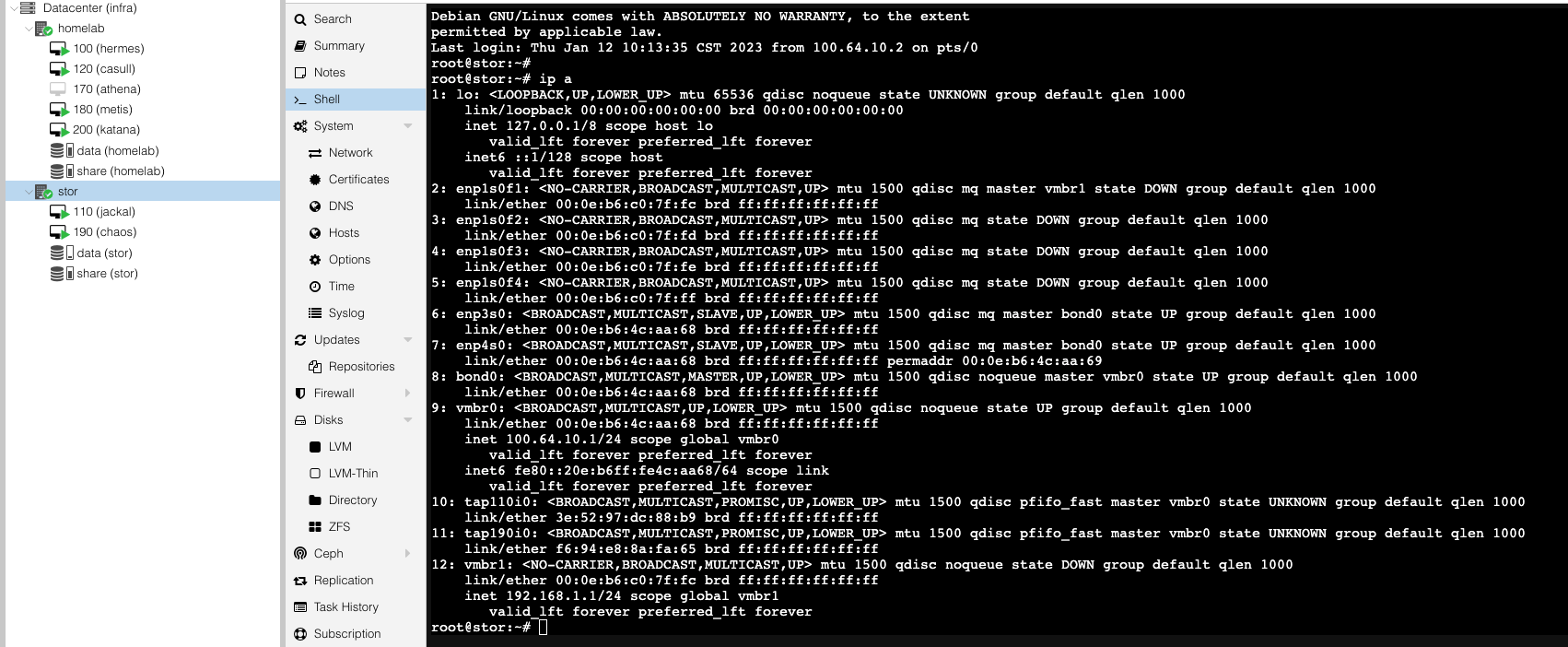

@anonsaber Under Proxmox, the NICs have interesting names of the form enpXs0. Going left to right, starting with the Pri port on the front of the 570/770, the NICs are...

enp2s0, enp3s0, enp1s0f1, enp1s0f2, enp1s0f3, enp1s0f4

These should all show up in dmesg. I enabled the NICs (by setting "No Bypass" like you did) before installing Proxmox. I have no idea how/if Proxmox will recognize them when you enable them post-install.
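To match these names to physical ports, it can help to list each interface alongside its MAC address, link state, and PCI address. The sketch below is a hypothetical helper (`list_nics` is not a Proxmox tool) and assumes a Linux host with sysfs mounted at /sys; it only reads files there and changes nothing. Under systemd's predictable naming, a name like enp1s0f2 typically corresponds to PCI bus 1, slot 0, function 2 (0000:01:00.2), so the last column should roughly line up with the names above.

```shell
#!/bin/sh
# Hypothetical helper: print name, MAC, link state and PCI address for each NIC.
# Reads only standard Linux sysfs files under /sys/class/net.
# Virtual interfaces (lo, vmbr*, bond*) have no backing PCI device, so "-" is shown.
list_nics() {
    for dev in /sys/class/net/*; do
        name=$(basename "$dev")
        mac=$(cat "$dev/address" 2>/dev/null)
        state=$(cat "$dev/operstate" 2>/dev/null)
        # Fall back to "-" so every line always has four columns
        [ -n "$mac" ] || mac="-"
        [ -n "$state" ] || state="-"
        if [ -e "$dev/device" ]; then
            # e.g. /sys/devices/pci0000:00/.../0000:01:00.1 -> 0000:01:00.1
            pci=$(basename "$(readlink -f "$dev/device")")
        else
            pci="-"
        fi
        printf '%-12s %-18s %-8s %s\n' "$name" "$mac" "$state" "$pci"
    done
}

list_nics
```

Plugging a cable into one port at a time and re-running it should show which name switches its state to `up`.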

I only use one NIC (enp1s0f1), and I don't think I had to run ifup; I just edited /etc/network/interfaces. Mine is pasted below. Note that I changed it from a static IP to DHCP because I prefer to handle static assignments through DHCP.

```
cat /etc/network/interfaces
# network interface settings; autogenerated
# Please do NOT modify this file directly, unless you know what
# you're doing.
#
# If you want to manage parts of the network configuration manually,
# please utilize the 'source' or 'source-directory' directives to do
# so.
# PVE will preserve these directives, but will NOT read its network
# configuration from sourced files, so do not attempt to move any of
# the PVE managed interfaces into external files!

auto lo
iface lo inet loopback

iface enp1s0f1 inet manual

iface enp1s0f2 inet manual

iface enp1s0f3 inet manual

iface enp1s0f4 inet manual

iface enp2s0 inet manual

iface enp3s0 inet manual

auto vmbr0
iface vmbr0 inet dhcp
        bridge-ports enp1s0f1
        bridge-stp off
        bridge-fd 0
```

Also note that Proxmox uses the bridge interfaces "vmbrX" rather than assigning IP addresses to the physical NICs. My "ip a" output looks like this...

```
ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: enp1s0f1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master vmbr0 state UP group default qlen 1000
    link/ether 00:0e:b6:b2:33:f0 brd ff:ff:ff:ff:ff:ff
3: enp1s0f2: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether 00:0e:b6:b2:33:f1 brd ff:ff:ff:ff:ff:ff
4: enp1s0f3: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether 00:0e:b6:b2:33:f2 brd ff:ff:ff:ff:ff:ff
5: enp1s0f4: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether 00:0e:b6:b2:33:f3 brd ff:ff:ff:ff:ff:ff
6: enp2s0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether 00:0e:b6:78:06:b0 brd ff:ff:ff:ff:ff:ff
7: enp3s0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether 00:0e:b6:78:06:b1 brd ff:ff:ff:ff:ff:ff
8: vmbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether 00:0e:b6:b2:33:f0 brd ff:ff:ff:ff:ff:ff
    inet 10.0.0.10/24 brd 10.0.0.255 scope global dynamic vmbr0
       valid_lft 7065sec preferred_lft 7065sec
    inet6 fe80::20e:b6ff:feb2:33f0/64 scope link
       valid_lft forever preferred_lft forever
```

-

@okijames said in PfSense on a Riverbed Steelhead:

Quick Q for moderators, if you'd prefer we move this conversation to Proxmox forums, I'd be happy to.

It's probably more relevant there. But this thread doesn't see much action, and it's not like everyone has to read it. Not really a problem, IMO.

-

@okijames At first I didn't run ifup either, but when I connected to the network, nothing happened.

This is my interface status:

And this is my network config file:

```
# network interface settings; autogenerated
# Please do NOT modify this file directly, unless you know what
# you're doing.
#
# If you want to manage parts of the network configuration manually,
# please utilize the 'source' or 'source-directory' directives to do
# so.
# PVE will preserve these directives, but will NOT read its network
# configuration from sourced files, so do not attempt to move any of
# the PVE managed interfaces into external files!

auto lo
iface lo inet loopback

auto enp3s0
iface enp3s0 inet manual

auto enp1s0f1
iface enp1s0f1 inet manual

auto enp1s0f2
iface enp1s0f2 inet manual

auto enp1s0f3
iface enp1s0f3 inet manual

auto enp1s0f4
iface enp1s0f4 inet manual

auto enp4s0
iface enp4s0 inet manual

auto bond0
iface bond0 inet manual
        bond-slaves enp3s0 enp4s0
        bond-miimon 100
        bond-mode balance-rr

auto vmbr0
iface vmbr0 inet static
        address 100.64.10.1/24
        bridge-ports bond0
        bridge-stp off
        bridge-fd 0

auto vmbr1
iface vmbr1 inet static
        address 192.168.1.1/24
        bridge-ports enp1s0f1
        bridge-stp off
        bridge-fd 0
```

And here is my dmesg log file:

dmesg.txt

-

@lemon-k I must be misinterpreting something. It appears to me that both your nodes are connected in a cluster (and therefore using a functional network), and you have VMs running on each node. This is significantly different from "nothing happens", so I don't understand the issue.

I suggest trying the Proxmox forums and detailing what you're trying to accomplish and what is and is not working.