slow pfsense IPSec performance

-

Mmm, 1ms between them is like being in the same data center, or at least geographically very close. What is the route between them?

When you ran the test outside the tunnel, how was that done? Still between the two ESXi hosts?

-

@cool_corona said in slow pfsense IPSec performance:

Nobody uses Atoms for Virtualization.....

Ha. Assume nothing!

-

@cool_corona my ISP is not throttling the VPNs; we already use several VPNs (host-to-LAN) without any problem (the iperf test bitrate is optimal). We are experiencing this low bitrate only with the IPsec LAN-to-LAN VPN.

-

@stephenw10 we have 2 data centres in the same city, they are interconnected with a dedicated 1Gb link on the GARR network.

The test outside the tunnel is between the WAN interfaces of the two pfSense instances, that is, between PF_A and PF_B.

-

@stephenw10 our hypervisors have "2 x Intel(R) Xeon(R) Gold 5218 CPU - 32 cores @ 2.30GHz"

-

Hmm, that should be plenty fast enough. What happens if you test across the tunnel between the two pfSense instances directly? So set the source IP on the client to be in the P2.

-

@mauro-tridici said in slow pfsense IPSec performance:

@stephenw10 our hypervisors have "2 x Intel(R) Xeon(R) Gold 5218 CPU - 32 cores @ 2.30GHz"

Then it’s definitely not hardware that is limiting the transfer speed. Those CPUs/platforms have loads of power for this use case.

-

@stephenw10 Sorry, I didn't understand which test I should do.

Should I do an iperf or a ping test between PF_A[opt1] and PF_B[opt2]?

PF_A has:

- WAN IP: xxxxxxxx
- LAN IP (for management only): 192.168.240.11
- OPT1 IP: 192.168.202.1

PF_B has:

- WAN IP: yyyyyyyy
- LAN IP (for management only): 192.168.220.123
- OPT1 IP: 192.168.201.1

-

Normally you run the iperf3 server on one pfSense box, then run the iperf3 client on the other one and give it the WAN address of the first one to connect to.

But to test over the VPN the traffic has to match the defined P2 policy so at the client end you need to set the Bind address to, say, the LAN IP and then point it at the LAN IP of the server end.

Then you are testing directly across the tunnel without going through any internal interfaces that might be throttling.
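Whether the test traffic actually enters the tunnel depends only on whether its source and destination fall inside the Phase 2 selectors. A minimal Python sketch of that check, assuming /24 Phase 2 networks built around the OPT1 addresses listed above (the masks are assumptions; the thread never states them, and the poster later notes that OPT1, not LAN, is what the P2 covers). `matches_p2` and `iperf3_client_args` are hypothetical helpers for illustration only.

```python
import ipaddress

# Hypothetical Phase 2 selectors as seen from PF_B (the iperf3 client side).
# Only the OPT1 host addresses come from the thread; the /24 masks are assumed.
P2_LOCAL = ipaddress.ip_network("192.168.201.0/24")   # PF_B OPT1 network
P2_REMOTE = ipaddress.ip_network("192.168.202.0/24")  # PF_A OPT1 network


def matches_p2(src: str, dst: str) -> bool:
    """True if traffic from src to dst falls inside the P2 selectors, i.e. enters the tunnel."""
    return (ipaddress.ip_address(src) in P2_LOCAL
            and ipaddress.ip_address(dst) in P2_REMOTE)


def iperf3_client_args(bind: str, server: str) -> list[str]:
    """Build an iperf3 client command that binds (-B) to a source address inside the P2."""
    if not matches_p2(bind, server):
        raise ValueError(f"{bind} -> {server} does not match the P2, so it would not use this tunnel")
    return ["iperf3", "-B", bind, "-c", server]


# Bound to PF_B's OPT1 address: matches the P2.
print(matches_p2("192.168.201.1", "192.168.202.1"))
# Bound to PF_B's management LAN address: outside this P2.
print(matches_p2("192.168.220.123", "192.168.202.1"))
print(" ".join(iperf3_client_args("192.168.201.1", "192.168.202.1")))
```

Without `-B`, the client would pick its source address from the routing table (typically the WAN), which is why the bind address matters for this test.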

So run `iperf3 -s` on PF_A as normal.

Then on PF_B run `iperf3 -B 192.168.220.123 -c 192.168.240.11`

-

@stephenw10 OK, thanks. This is the output of the iperf test:

```
iperf3 -B 192.168.201.1 -c 192.168.202.1
Connecting to host 192.168.202.1, port 5201
[ 5] local 192.168.201.1 port 2715 connected to 192.168.202.1 port 5201
[ ID] Interval Transfer Bitrate Retr Cwnd
[ 5] 0.00-1.04 sec 25.5 MBytes 205 Mbits/sec 0 639 KBytes
[ 5] 1.04-2.01 sec 27.0 MBytes 234 Mbits/sec 0 720 KBytes
[ 5] 2.01-3.03 sec 29.7 MBytes 246 Mbits/sec 0 736 KBytes
[ 5] 3.03-4.01 sec 28.2 MBytes 240 Mbits/sec 1 439 KBytes
[ 5] 4.01-5.05 sec 28.2 MBytes 229 Mbits/sec 0 521 KBytes
[ 5] 5.05-6.04 sec 25.2 MBytes 213 Mbits/sec 0 585 KBytes
[ 5] 6.04-7.01 sec 25.8 MBytes 222 Mbits/sec 0 642 KBytes
[ 5] 7.01-8.01 sec 28.4 MBytes 240 Mbits/sec 0 701 KBytes
[ 5] 8.01-9.00 sec 28.0 MBytes 235 Mbits/sec 0 735 KBytes
[ 5] 9.00-10.04 sec 29.1 MBytes 237 Mbits/sec 0 735 KBytes
[ ID] Interval Transfer Bitrate Retr
[ 5] 0.00-10.04 sec 275 MBytes 230 Mbits/sec 1 sender
[ 5] 0.00-10.13 sec 275 MBytes 228 Mbits/sec receiver
```

Please note that the OPT1 interfaces are the ones involved in the P2. The LAN interfaces are used only to reach and manage the pfSense instances.
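A quick bandwidth-delay-product check on the run above helps rule out the TCP window as the cap. This is a worked sketch, assuming the roughly 1 ms RTT mentioned at the start of the thread also holds inside the tunnel (a higher in-tunnel RTT would lower the computed ceiling) and that iperf3's KBytes are 1024-byte units. The helper names are hypothetical.

```python
# Per-interval bitrates (Mbit/s) and congestion windows (KBytes) copied from
# the iperf3 run above.
BITRATES_MBPS = [205, 234, 246, 240, 229, 213, 222, 240, 235, 237]
CWND_KBYTES = [639, 720, 736, 439, 521, 585, 642, 701, 735, 735]

LINK_MBPS = 1000   # dedicated 1 Gb link between the data centres
RTT_S = 0.001      # ~1 ms; assumed to hold inside the tunnel as well


def bdp_bytes(rate_mbps: float, rtt_s: float) -> float:
    """Bandwidth-delay product: bytes that must be in flight to fill the pipe."""
    return rate_mbps * 1e6 * rtt_s / 8


def window_limited_mbps(cwnd_kbytes: float, rtt_s: float) -> float:
    """Upper bound on single-stream TCP throughput for a given window and RTT."""
    return cwnd_kbytes * 1024 * 8 / rtt_s / 1e6


mean_rate = sum(BITRATES_MBPS) / len(BITRATES_MBPS)
print(f"mean bitrate: {mean_rate:.1f} Mbit/s ({mean_rate / LINK_MBPS:.0%} of the link)")
print(f"BDP at 1 Gbit/s, 1 ms: {bdp_bytes(LINK_MBPS, RTT_S) / 1024:.0f} KiB")
print(f"ceiling with smallest cwnd: {window_limited_mbps(min(CWND_KBYTES), RTT_S):.0f} Mbit/s")
```

Even the smallest window seen (439 KBytes) is well above the ~122 KiB needed to fill 1 Gbit/s at 1 ms, so under these assumptions the ~230 Mbit/s ceiling is unlikely to come from TCP windowing.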

-

Ok, so no difference. Do you see any improvement with more parallel streams?

`-P 4`

Edit: Or actually slightly slower, but testing from the firewall itself usually is.
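To compare a single-stream run against a `-P 4` run without reading the interval lines by hand, both tests can be run with iperf3's `-J` (JSON output) option and the end-of-test sums compared. A minimal sketch, assuming a TCP test whose JSON report carries `end.streams`, `end.sum_sent` and `end.sum_received`; the 5% tolerance and the helper names are arbitrary, hypothetical choices.

```python
import json
from dataclasses import dataclass


@dataclass
class Iperf3Summary:
    streams: int
    sent_mbps: float
    received_mbps: float


def summarize_iperf3(report: str) -> Iperf3Summary:
    """Extract aggregate throughput from `iperf3 -J` output (TCP test).

    With -P 4 the end-of-test sums already aggregate all parallel streams.
    """
    end = json.loads(report)["end"]
    return Iperf3Summary(
        streams=len(end["streams"]),
        sent_mbps=end["sum_sent"]["bits_per_second"] / 1e6,
        received_mbps=end["sum_received"]["bits_per_second"] / 1e6,
    )


def compare(single: Iperf3Summary, parallel: Iperf3Summary, tolerance: float = 0.05) -> str:
    """Classify whether extra streams raised the aggregate receiver throughput."""
    gain = parallel.received_mbps / single.received_mbps - 1
    if gain > tolerance:
        return f"parallel streams helped (+{gain:.0%})"
    return "no meaningful gain: aggregate throughput looks capped"
```

Usage: save the output of the single-stream test and of the same command with `-P 4` added (both with `-J`) to two files, pass each file's contents to `summarize_iperf3`, then call `compare`. An unchanged aggregate points to a per-tunnel limit rather than a per-flow one.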

-

@stephenw10 mmmh, no, no improvement, I'm sorry.

It is a big mystery :(

I don't know what I should check, or where my error (or the issue) is...

-

Like identical total throughput?

Really starts to look like some limiting somewhere if so.

You could try an OpenVPN or Wireguard tunnel instead.

-

What is the NIC type of these VMs (vmxnet3 or e1000)?

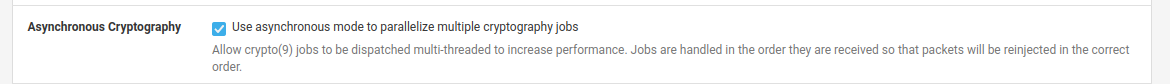

You may want to check your IPsec Advanced Settings:

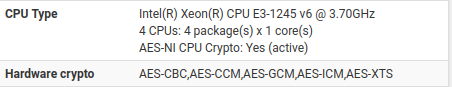

Ensure AES-NI is enabled and running:

The throughput is definitely too low on your VMs. Even unencrypted traffic isn't hitting line rate, assuming the Gigabit link is idle.

I measured around 600 Mbit/s of IPsec throughput on a 4-CPU VM running on the Xeon E3 box.

@stephenw10 said in slow pfsense IPSec performance:

You could try an OpenVPN or Wireguard tunnel instead.

I don't think OpenVPN can outperform IPsec, at least without DCO. Haven't tried that feature yet :)

-

It is surprising with DCO but I would still use IPSec in a situation like this. I only suggested that as a test in case there is something throttling IPSec specifically.

Definitely worth checking async-crypto. Usually that kills throughput almost completely on hardware that isn't compatible, though.

Steve

-

@averlon sorry for my late answer, I have been busy over the last few days.

AES-NI is available on only one of the hypervisors, so I can't enable it on both ends.

I would like to know how much the NIC type of the VMs matters.

One VM uses a vmxnet3 NIC, the other one uses e1000. What do you suggest doing with these NICs?

Thank you,

Mauro

-

Unless you're passing through hardware, vmxnet will be faster.

But you must add a tunable to enable multi-queue on them:

https://docs.netgate.com/pfsense/en/latest/hardware/tune.html#vmware-vmx-4-interfaces

Steve

-

@mauro-tridici said in slow pfsense IPSec performance:

AES-NI is available only on one of the hypervisor, so I can't enable it on both ends.

This is most likely the bottleneck here. You have to ensure that AES-NI is available on both ends. Otherwise you won't see any higher throughput with 4 vCPUs for your IPsec traffic.

@stephenw10 said in slow pfsense IPSec performance:

Unless you're passing through hardware vmxnet will be faster.

vmxnet will have less overhead, but won't necessarily deliver more throughput. I had horrible performance on pfSense 2.4.x / FreeBSD 11.x with vmxnet3: averaging somewhere between 600 and 700 Mbit/s, with high variance, for bulk downloads.

I'm still using e1000 on good old pfSense 2.5.1. On a Gigabit link it delivers almost full rate; I just re-tested it from a VM NAT'ed by pfSense on the same ESXi host. This is the same old Xeon E3-1245v6 box.

@mauro-tridici: You should test both NIC types with the current version and implement the tunable stephenw10 mentioned. It may improve unencrypted throughput, but won't solve your IPsec issue.

-

@stephenw10 no luck: vmxnet3 + tunable didn't help. Thank you again for your support.

I think I should switch from IPsec to a different LAN-to-LAN VPN solution.

Could you please tell me which solution you suggest?

Thank you in advance,

Mauro

-

@averlon thank you for your support. Unfortunately, vmxnet3 and the tunable didn't help, and I think I have to give up.

Unencrypted throughput between the WAN interfaces of the two pfSense instances is very good, but encrypted traffic on the IPsec tunnel is very poor...

Is there any other way to create a LAN-to-LAN VPN easily?

Thank you,

Mauro