NFS Server WAN - mount within opnsense(pfsense) LAN

-

@cool_corona The main Proxmox server itself has only one hardware NIC, and the NFS server also has only one hardware NIC. So pfSense is operating via virtual bridges only.

-

@leonidas-o Then you're running VLANs, I presume?

-

@cool_corona no VLAN's, really just one WAN (vmbr10) and one LAN (vmbr11). And for now I just put one VM into LAN (assigned vmbr11 to it).

Don't know if I will use VLAN's at all, maybe Proxmox EVPN (BGP) + VXLAN, will see but for now, it's just this simple setup.

-

@leonidas-o And both client and NFS is connected to LAN NIC?

-

The NFS server is a separate dedicated server with its own public IP. The NFS clients are just VMs in a Proxmox cluster. When I switch a VM's network device to vmbr11, the VM runs behind pfSense. The NFS server holds, for example, some configuration files, and the VM itself has to have access to them. For example, one VM runs a dockerized traefik proxy whose conf files are on the NFS server.

So each VM itself is going from pfsense LAN -> WAN -> enp0s31f6 -> INTERNET -> 136.XX.XX.XX (NFS Server)

@cool_corona No, as I already said, the NFS server is a separate dedicated server. It is from the same server provider, but it is even in another datacenter. So basically I'm going all the way out LAN -> WAN -> enp0s31f6 (the hardware NIC of the main server), over the provider's infrastructure (switches etc.), to the NFS server itself (the last entry in the traceroute results).

Traceroute:

1 10.10.10.1 0.258 ms 0.263 ms 0.254 ms
2 94.XX.XX.XX 0.542 ms 0.571 ms 0.531 ms
3 213.XX.XX.XX 3.984 ms 0.800 ms 0.865 ms
4 213.XX.XX.XX 0.563 ms 0.582 ms 0.566 ms
5 136.XX.XX.XX 0.785 ms !X 0.656 ms !X 0.821 ms !X

-

@leonidas-o Try an outbound TCP/UDP rule from specific client to NFS server public IP.

-

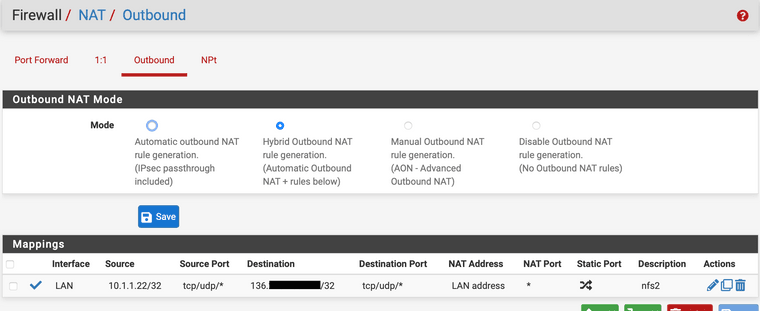

@cool_corona unfortunately nothing changed, same behaviour, same error when using the following outbound rule:

-

@leonidas-o Keep the source IP, set everything else to any, and change the interface from LAN to WAN.

-

@cool_corona No, still not working. I tried what you said, and I also tried every other combination that came to my mind:

WAN/LAN, keeping the source, removing it, setting the destination, removing it, changing the translation address from "Interface Address" to the public IP of the Proxmox server.

It's crazy that I can't find (or don't know how to view) any logs with proper messages. The firewall system logs are not showing anything being blocked.

-

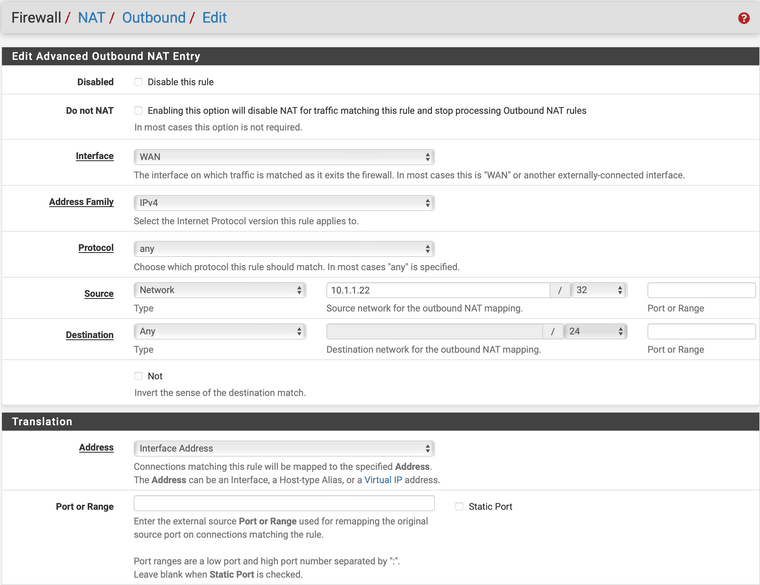

I've set the "Static Port" checkbox and immediately it worked. The mount was successful and I could see the files.

What does this checkbox really mean or better to say, what consequences do I have when it is checked?

-

Here is a long thread about NFS and pfSense. It mentions that both NAT ports and the pfSense IP figure into the equation: https://www.experts-exchange.com/questions/29118882/nfsv4-mount-fails-with-operation-not-permitted.html. I found this link by specifically searching for nfs getattr operation not permitted as that is the error you saw in your packet capture.

The "operation not permitted" error says to me it is a server-side permissions issue of some sort. Further evidence it may be a server-side issue can be found here: https://serverfault.com/questions/577342/nfsv4-through-portforward. I think the port translations that are happening with the intervening OPNsense or pfSense firewalls are the culprit.

-

@leonidas-o said in NFS Server WAN - mount within opnsense(pfsense) LAN:

I've set the "Static Port" checkbox and immediately it worked. The mount was successful and I could see the files.

What does this checkbox really mean or better to say, what consequences do I have when it is checked?

Read the last link I posted in a reply above. I think it answers your question. pfSense is altering the port number as part of NAT, and that trips up NFS on the server side. Clicking Static Port says "don't mess with the source port". You could also try unchecking "Static Port" and trying the `insecure` option on the export setting on the server side to see if that works as well.

-

@bmeeks Yes, I will read both links thanks for that.

Are there any downsides to using the "Static Port" option?

I read about the NFS insecure option. It doesn't sound as if it is related to my port issue, but I will try it anyway.

In the packet capture I always saw something like "136.XX.XX.XX.2049 > 10.10.10.2.18318", so a random high port. Now with the static port option it changed to "136.XX.XX.XX.2049 > 10.10.10.2.1009".

But I'm also seeing "10.10.10.2.812 > 136.XX.XX.XX.2049" and "136.XX.XX.XX.2049 > 10.10.10.2.812".

Maybe a stupid question, but can I run into performance issues when all my VMs are running with this static port option? Could the traffic from all VMs somehow jam up at the same port?

-

A quick follow-up regarding the "insecure" option: I have two mounts; one is owned by "root" on the NFS server, the other by a regular user.

After using the "insecure" flag and removing the "static port" option, in my VM (NFS client), as a regular Linux user, I can see the files from the mount owned by root but not those from the other mount. So yes, somehow the "insecure" option has an influence on that, although I don't know why I'm only seeing the "root"-owned files.

-

@leonidas-o said in NFS Server WAN - mount within opnsense(pfsense) LAN:

a quick followup regarding the "insecure" option, I have two mounts, one is owned by "root" on the nfs server the other by a regular user.

After using the "insecure" flag and removing the "static port" option, in my VM (nfs client), as a regular linux user, I can see the files from the one mount which was owned by root but not the other mount. So yes, somehow the "insecure" option has an influence on that. Although I don't know why I'm just seeing the "root" owned nfs mounted files.

Your solution is likely to require some changes on the NFS server side in its export options. As I understand it from quickly reviewing the links I provided, NFS by default expects certain authentication communications to occur on "secure" ports. NFS considers port numbers below 1024 as "secure" (don't really know why that is). The port translation associated with NAT across the FreeBSD firewalls (whether OPNsense or pfSense) causes the NFS server to see authentication communication happening on a port higher than 1024, thus it rejects it. That is the source of the "operation not permitted" error.
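To make the port check concrete, here is a minimal Python sketch of the behaviour described above. It is a toy model, not how pfSense or the NFS server are actually implemented: the function names are made up for illustration, and the 1024-65535 range for rewritten ports is an assumption about the firewall's default. For background, Unix-like systems traditionally only let root bind to ports below 1024, which is why such ports are treated as more trustworthy.

```python
import random

RESERVED_PORT_LIMIT = 1024  # ports below this are the ones NFS treats as "secure"


def nat_source_port(client_port: int, static_port: bool, rng: random.Random) -> int:
    """Source port the NFS server sees after outbound NAT (toy model)."""
    if static_port:
        # Static Port: the firewall leaves the client's source port alone.
        return client_port
    # Assumption: without Static Port the source port is rewritten to a
    # random unprivileged port.
    return rng.randint(RESERVED_PORT_LIMIT, 65535)


def server_accepts(source_port: int, insecure_export: bool) -> bool:
    """Mimic the export check: 'insecure' skips the reserved-port requirement."""
    return insecure_export or source_port < RESERVED_PORT_LIMIT


rng = random.Random(0)
client_port = 812  # one of the low client ports seen in the packet capture

for static_port, insecure in [(False, False), (True, False), (False, True)]:
    seen = nat_source_port(client_port, static_port, rng)
    verdict = "accepted" if server_accepts(seen, insecure) else "rejected"
    print(f"static_port={static_port} insecure={insecure} -> port {seen}: {verdict}")
```

The three cases correspond to the default NAT behaviour, the Static Port checkbox, and the `insecure` export option without Static Port.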

Another piece of the puzzle is who owns the mounted file system (root or someone else). That figures into the remote mounting. The first link I provided has some information about that as you read down the long thread. Something like `fsid=root` or something similar is required in the export configuration file. This `root` thing is probably why one mount works and another is giving trouble on the remote end.

The Static Port option tells the firewall to keep the client's original source port instead of rewriting it during NAT. So long as that port is less than 1024, you get around the "not secure" error from NFS. The workaround for not using the Static Port option is to use the `insecure` option in the export specification for the NFS share.

-

@bmeeks Hmm, I tried that "fsid=" option on the NFS server a week ago. I had "fsid=0" for the path owned by root and "fsid=1000" for the other path owned by the regular user. This option, however, broke all the clients. I don't know why, and I don't know if I have to adapt the clients as well when adding this "fsid" flag, but no NFS client was working anymore. So I quickly removed it and all clients worked again.

I'm currently reading through the longer thread.

Do you have a recommendation, or maybe just a feeling what might be better, "insecure" or "static port"?

-

@leonidas-o said in NFS Server WAN - mount within opnsense(pfsense) LAN:

Do you have a recommendation, or maybe just a feeling what might be better, "insecure" or "static port"?

I would gravitate towards the "insecure" option in the NFS setup. Here is a description of what the Static Port option is all about from the official Netgate documentation: https://docs.netgate.com/pfsense/en/latest/nat/outbound.html#static-port. Short answer is Static Port can ever so slightly reduce security by making spoofing of traffic easier.

What is tripping up your NFS mounting across the firewall is the source port randomization the firewall does when applying NAT to the NFS traffic.

If you follow the link in the docs to Working with Manual Outbound NAT Rules, and then scroll down a bit, you will find a good description of what Static Port does and why it is making your NFS mounts work. The NFS server is not happy when the source port is rewritten UNLESS the `insecure` option has been enabled in the NFS export options.

The trouble you have had with NFS (and the similar kinds of trouble that frequently happen trying to get VoIP and SIP working across firewalls) is why NAT is considered such an abomination among network purists ...

pfSense and OPNsense are trying to improve security by randomizing source ports when NAT'ing, but some applications just do not tolerate that. Figuring out what the problem is can sometimes be difficult.
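For reference, the `insecure` option goes in the per-client option list of the share's line in /etc/exports on the NFS server. The sketch below is only an illustration: the path /srv/nfs/config, the address 203.0.113.10 and the other options in the line are assumptions, not taken from this thread, and the small Python helper is hypothetical. It just shows where the option sits and how to check whether it is set.

```python
def export_options(line: str) -> dict[str, set[str]]:
    """Parse one /etc/exports line into {client: set of options}."""
    _path, *clients = line.split()
    parsed = {}
    for entry in clients:
        host, _, opts = entry.partition("(")
        parsed[host] = set(opts.rstrip(")").split(",")) if opts else set()
    return parsed


# Hypothetical export line; 203.0.113.10 stands in for the public address
# the NFS server sees after NAT.
line = "/srv/nfs/config 203.0.113.10(rw,sync,no_subtree_check,insecure)"

options = export_options(line)["203.0.113.10"]
print("insecure" in options)
```

After editing /etc/exports, the server has to re-read it, which is typically done with `exportfs -ra`.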

-

@bmeeks was about to read the static port section in the pfsense docs, had it already open.

So @bmeeks and @Cool_Corona, thank you very much, guys. You provided lots of insights, useful information, help and hints. I really appreciate that.

I think I can now figure out what the best option will be. I will go through all the threads/links, read up on fsid again and figure out a solution. At least I do have a "running" example of an NFS mount behind pfSense, so I can research all the options now. Thanks a lot!

The trouble you have had with NFS (and the similar kinds of trouble that frequently happen trying to get VoIP and SIP working across firewalls) is why NAT is considered such an abomination among network purists ...

I "heard" there is no NAT in IPv6, so maybe we should all just switch to IPv6: no more NAT'ing, problem solved.

Okay, quickly googled it, it seems there is NAT for ipv6, so forget what I said

-

@leonidas-o said in NFS Server WAN - mount within opnsense(pfsense) LAN:

Okay, quickly googled it, it seems there is NAT for ipv6, so forget what I said

There is NAT for IPv6, but no network admin in his right mind should want to use it. Hopefully it remains a solution in search of a problem. There are enough IPv6 addresses to last until the end of the universe.