Just got a Protectli FW4C!

-

@stephenw10 For good measure, I would test another protocol like WireGuard if you can. I'm curious whether the low performance follows.

-

@stephenw10 said in Just got a Protectli FW4C!:

You might try a much lower value just to check. I have seen IPSec tunnels that require MSS as low as 1100 to prevent fragmentation. Though not over a route as short as 10ms.

Ok, I'll try that tonight. Does the MSS have to be set on both sides of the tunnel? And does the tunnel have to be disconnected and reconnected in order for the new value to take effect?
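To show why an MSS well below the usual 1460 can be needed, here is a rough sketch of the header arithmetic. It assumes IPv4 on both the inner and outer packets, ESP with AES-GCM-128 (8-byte IV, 16-byte ICV), worst-case ESP padding, and an optional 8-byte NAT-T UDP header. `esp_mss` is a hypothetical helper, not a pfSense command, and real overhead can differ (PPPoE, IPv6, extra encapsulation on the path).

```shell
#!/usr/bin/env bash
# esp_mss: hypothetical helper estimating the largest TCP MSS that fits
# through an IPv4 ESP tunnel without fragmenting the outer packet.
# Assumed per-packet overhead for AES-GCM-128 (bytes):
#   outer IPv4 header            20
#   ESP header (SPI + sequence)   8
#   GCM IV                        8
#   worst-case ESP padding        3
#   pad length + next header      2
#   GCM ICV                      16
#   NAT-T UDP header (if used)    8
esp_mss() {
  local mtu=${1:-1500}   # MTU of the WAN path
  local natt=${2:-1}     # 1 if NAT-T (UDP 4500) encapsulation is in use
  local overhead=$((20 + 8 + 8 + 3 + 2 + 16))
  if [ "$natt" -eq 1 ]; then
    overhead=$((overhead + 8))
  fi
  # The inner IPv4 (20) and TCP (20) headers also come out of the MTU.
  echo $((mtu - overhead - 40))
}
```

Under these assumptions, `esp_mss 1500 1` prints 1395 and `esp_mss 1500 0` prints 1403, so a clamp of 1100 leaves a few hundred bytes of headroom; it is a diagnostic value rather than a hard requirement.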

-

@michmoor said in Just got a Protectli FW4C!:

@stephenw10 For good measure, I would test another protocol like WireGuard if you can. I'm curious whether the low performance follows.

The problem with WG is that I don't have a baseline, and Protectli doesn't, either. So if I get some performance number, I won't know if it's higher, lower, or exactly as expected.

I also was not successful in setting it up last time I tried.

Whereas for IPSec, we have a Netgate person letting us know that I'm way under expectations.

But WG testing would be useful down the road, once I have IPSec established and optimized.

-

It should only need to be set on one side, but it doesn't hurt to set it on both.

-

@thewaterbug Not sure if it was asked, but what Phase 2 parameters are you using?

-

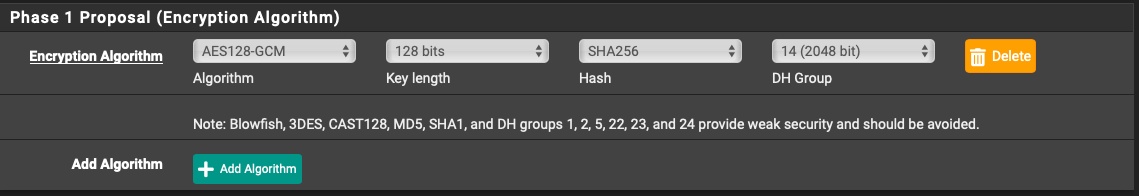

Both Phase 1 and Phase 2 are AES-GCM-128, SHA256, and DH14.

-

@thewaterbug Ahhh, there's one more setting that helped a lot for me: PowerD. Enable it and set it to either Maximum or HiAdaptive.

When I was running OPNsense on a Protectli a year ago, I had problems with poor WireGuard performance. The recommendation was to enable this, and once I did, things moved a lot better.

-

AES-GCM doesn't require a hash for authentication, that's one of the reasons it's faster. You can remove that. It should just ignore it already though.

-

Ah yes. It was selected before, when I was using AES-CBC to work around the SG-1100/SafeXcel problem, and once I deselected AES-CBC and selected AES-GCM, the hash just stayed selected.

-

I'm already set to HiAdaptive on both sides. It doesn't make a difference in my test results.

-

@stephenw10 said in Just got a Protectli FW4C!:

AES-GCM doesn't require a hash for authentication, that's one of the reasons it's faster. You can remove that. It should just ignore it already though.

Is this true for both Phase 1 and Phase 2? If so, I'm curious why the Phase 1 setup has a Hash selector when AES-GCM is chosen as the encryption.

-

It is true but it doesn't really matter at phase 1. The phase 2 config is what actually governs the traffic over the tunnel once it's established.

-

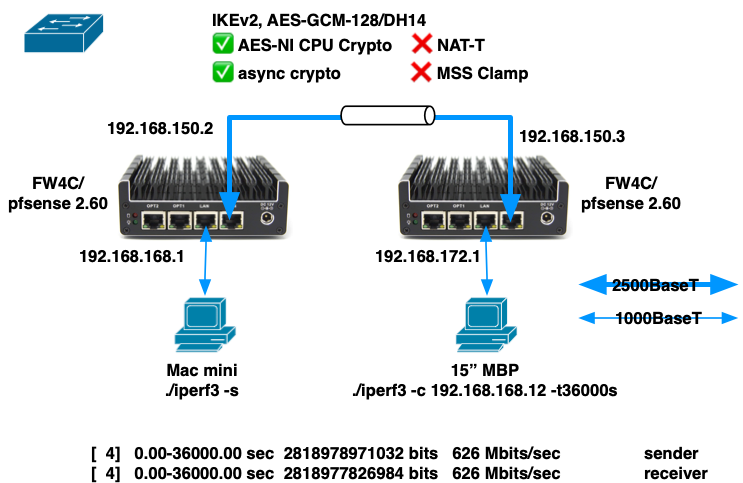

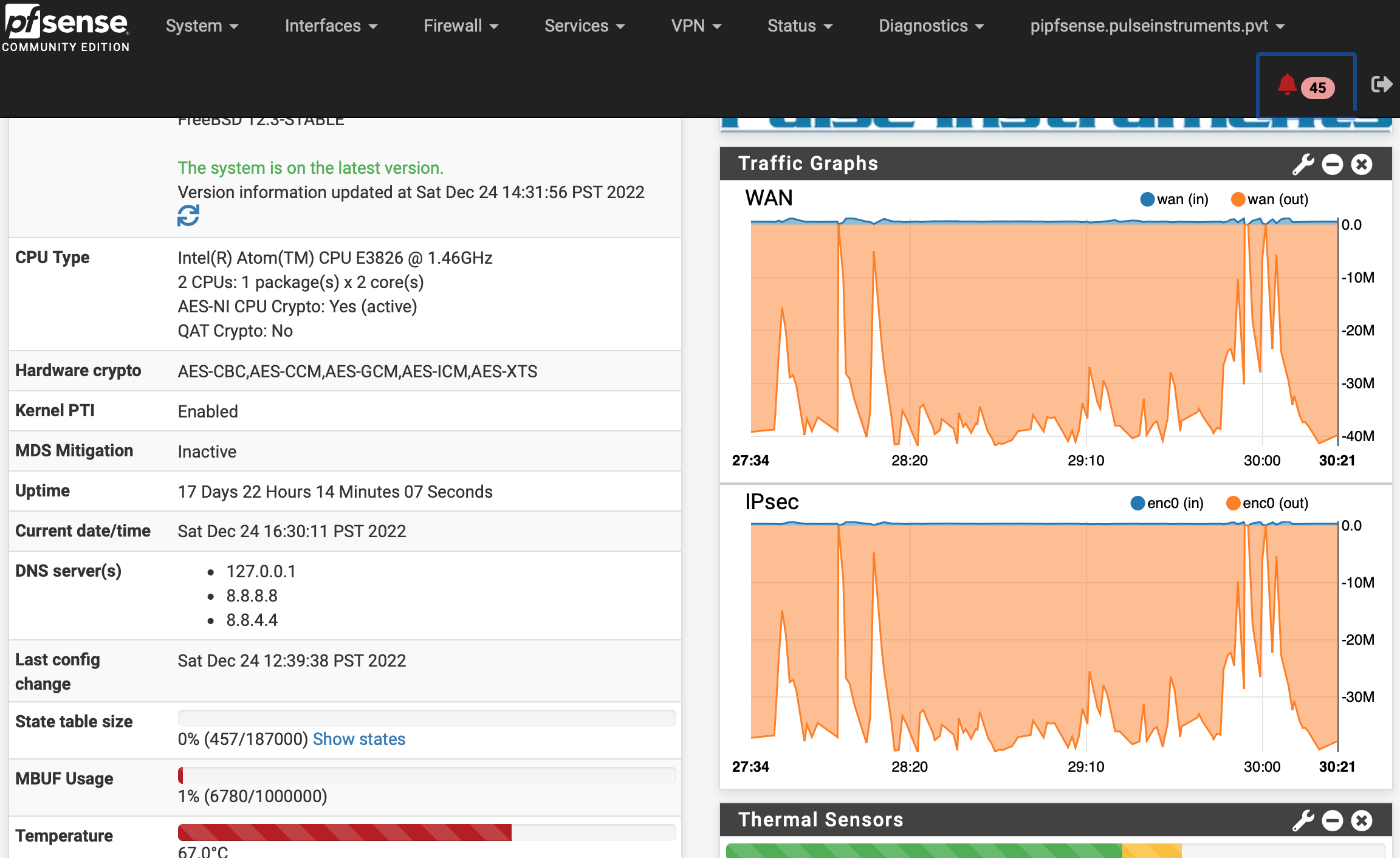

While I'm mulling over how to improve throughput on the MBT-2220 side, I thought I'd put the two FW4C units on the bench and try them out side by side, with only 6 feet of cabling between them, well under 1 ms of ping, and no other traffic.

The best I could achieve was 626 Mbps over a 10 hour period.

Things that puzzle me:

- Throughput seems to vary from run to run, despite there being very few variables in the setup.

- There is no internet traffic, no routing outside of the two units, and not even a switch (I have the two WAN ports connected with a cable at 2500BaseT).

- Sometimes a 10 second run will achieve ~720 Mbps

- Sometimes a 10 second run will achieve only ~300 Mbps

- CPU utilization on both sender and receiver gets no higher than 80%, and core temps no higher than 61°C, but I'm still getting significantly less than the ~980 Mbps reported by Protectli.
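One way to turn "sometimes ~720, sometimes ~300" into comparable numbers is to repeat the same short test many times and summarize the spread. This is a minimal sketch assuming `iperf3` and `jq` are installed on the client and an iperf3 server is already listening on the far side; `run_series` and `summarize_mbps` are hypothetical helper names, and it reads the receiver-side TCP total from iperf3's JSON (`-J`) output.

```shell
#!/usr/bin/env bash
# run_series HOST RUNS [extra iperf3 args...]
# Runs RUNS back-to-back 10-second iperf3 tests and prints one
# receiver-side throughput figure (Mbps) per run.
run_series() {
  local host=$1 runs=$2
  shift 2
  local i
  for ((i = 1; i <= runs; i++)); do
    # -J makes iperf3 emit JSON; end.sum_received is the TCP receiver summary.
    iperf3 -c "$host" -t 10 -J "$@" \
      | jq '.end.sum_received.bits_per_second / 1000000'
    sleep 2   # short pause so consecutive runs do not overlap
  done
}

# summarize_mbps: read one number per line on stdin, print min/mean/max.
summarize_mbps() {
  awk 'NR == 1 { min = $1; max = $1 }
       { sum += $1; if ($1 < min) min = $1; if ($1 > max) max = $1 }
       END { if (NR > 0) printf "runs=%d min=%.0f mean=%.0f max=%.0f\n", NR, min, sum / NR, max }'
}
```

For example, `run_series <server-ip> 10 | summarize_mbps` and then `run_series <server-ip> 10 -R | summarize_mbps` give one line per direction, which makes it easier to tell whether a tuning change moved the whole distribution or just landed on a lucky run.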

Things I fiddled with that made no improvement:

- NAT-T

- MSS Clamping

- Connecting the WAN ports through a 1000BaseT switch.

  - This reduced throughput by maybe 5 Mbps, but that might just be sampling error.

- Unchecked all the "Disable . . . " checkboxes in System > Advanced > Networking > Network Interface

- iperf simultaneous connections, e.g. "-P 2" or "-P 4". No improvement, and significant degradation at > 4.

- iperf TCP window size, e.g. "-w 2M" or "-w 4M". No improvement.

- iperf direction, e.g. "-R". Performance is the same, and just as variable, in both directions.

Are there any other tunables that might improve things in this type of lab scenario?

My real goal is to maximize application throughput in the real world, where I have 2 ISPs, 8 miles, and 10 msec of ping between my two locations, but first I want to optimize in the lab to see what's possible.
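For the real-world case, the TCP window has to cover the bandwidth-delay product (rate × round-trip time) for a single stream to fill the link. This sketch assumes a 1000 Mbps target and integer millisecond RTTs; `bdp_bytes` is a hypothetical helper. At 10 ms it comes to 1,250,000 bytes, so `-w 2M` or `-w 4M` should not be the limit, and with well under 1 ms on the bench the window is even less likely to be the bottleneck, which fits with `-w` making no difference there.

```shell
#!/usr/bin/env bash
# bdp_bytes RATE_MBPS RTT_MS
# Bandwidth-delay product: bytes that must be in flight to keep a path full.
#   bits  = rate (Mbit/s) * 1e6 * rtt (ms) / 1000
#   bytes = bits / 8, which simplifies to rate * rtt * 125
bdp_bytes() {
  local rate_mbps=$1 rtt_ms=$2
  echo $((rate_mbps * rtt_ms * 125))
}
```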


-

@thewaterbug If you do just an iPerf test without VPN, what do you get?

I gotta be honest with you: I've got a Protectli 2-port and 6-port. Inter-VLAN at one house I can get around 970 Mbps. Over an IPsec VLAN where the remote site is capped at 200/10, I can saturate that link with no issue. Local speed tests at each site max out the connection with no issue. Could it be possible that your FW4C is a dud?

-

I didn't test through a port forward, and I took one of the units out of the lab, but I can do that test on Monday. I might also be using iperf incorrectly.

Right now I've returned one of the FW4C units into service at my house, with the tunnel up between it and the MBT-2220 at the main office, and I'm watching a Veeam Backup Copy Job in progress.

This tunnel will iperf from FW4C-->MBT-2220 at ~200 Mbps, and the other way, MBT-2220-->FW4C, at ~135 Mbps. But this Veeam copy job is showing throughput in the "slow" direction (MBT-2220-->FW4C) of up to 250-300 Mbps, which is roughly twice the iperf speed and a lot closer to what Netgate has suggested the MBT-2220 is capable of.

So it's possible that I'm not using iperf optimally. When I iperf across the LAN, with no tunnel or port forwarding, I get 940 Mbps between two machines with just:

```
./iperf3 -s
```

on the server and

```
./iperf3 -c iperf.server.ip.address
```

or

```
./iperf3 -c iperf.server.ip.address -R
```

on the client, so I've been using that same command across the tunnel. I've experimented with -P and -w as noted above, but there might be other knobs I can turn to improve the throughput.

After all, I'm not building an iperf tunnel; I'm building a tunnel to do real work, like backup copy jobs, so I need to measure it with the right metrics.

-

@thewaterbug To take iperf out of the equation entirely, I use a speed test (OpenSpeedTest) running in a container. Just run it and point your browser at the server. The end.

https://hub.docker.com/r/openspeedtest/latest

-

Remember that iperf is deliberately single-threaded, even when you run multiple parallel streams. So if the client device you are running it on has a large number of relatively slow CPU cores, you may need to run multiple iperf instances to use multiple cores.

Though that would affect any traffic, so if you can get line rate between the sites outside the tunnel, it's not the problem.

Steve
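A sketch of the multiple-instance approach: one iperf3 process per port, so each can run on its own core. It assumes the far side has one server per port (for example `iperf3 -s -p 5201 -D`, `iperf3 -s -p 5202 -D`, and so on); `client_cmds` and `run_parallel` are hypothetical helper names, and starting at port 5201 is an arbitrary choice. iperf3 releases from 3.16 onward run parallel streams in separate threads, so this mainly matters for older builds.

```shell
#!/usr/bin/env bash
# client_cmds HOST COUNT [extra iperf3 args...]
# Print one iperf3 client command per server port, starting at 5201.
client_cmds() {
  local host=$1 count=$2
  shift 2
  local i
  for ((i = 0; i < count; i++)); do
    # ${*:+ $*} appends the extra arguments only when there are some.
    echo "iperf3 -c $host -p $((5201 + i))${*:+ $*}"
  done
}

# run_parallel HOST COUNT [extra iperf3 args...]
# Start every client in the background, then wait for all of them.
run_parallel() {
  local cmd
  while IFS= read -r cmd; do
    $cmd &   # unquoted on purpose: cmd holds a whole command line
  done < <(client_cmds "$@")
  wait
}
```

For example, `run_parallel <server-ip> 4 -t 30` starts four 30-second clients at once; their output interleaves, and the per-instance totals have to be added up by hand.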

-

I'm stumped. I put the second FW4C at the main office yesterday, so I now have these new units on both ends of the tunnel, configured as similarly as possible.

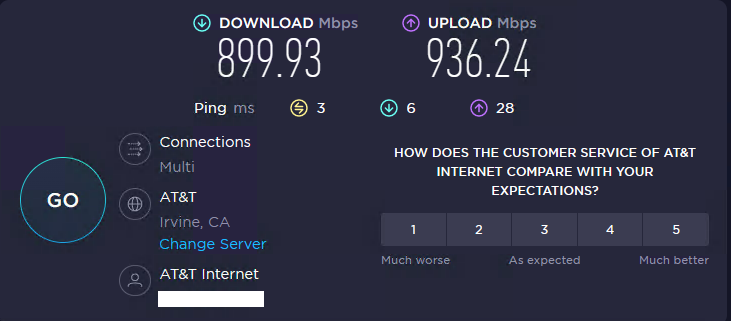

The main office (1000/1000 AT&T Business Fiber) will Speedtest at 900/900+:

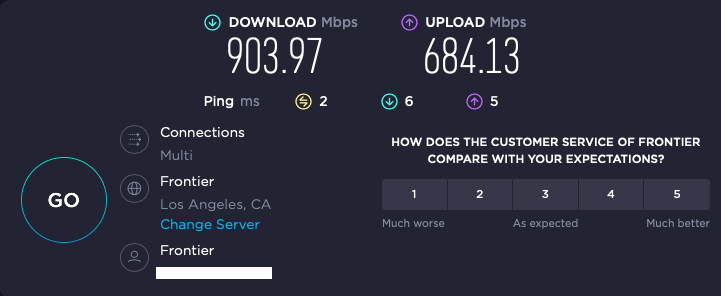

while the home office (1000/1000 Frontier Gig Fiber) will Speedtest at 900/700.

iperf through the tunnel from my home office to my main office is variable, but has achieved throughput as high as 560 Mbps:

```
./iperf3 -w 4M -c 192.168.0.4 -t120s
[ 4] 0.00-120.00 sec 7.83 GBytes 561 Mbits/sec sender
[ 4] 0.00-120.00 sec 7.83 GBytes 560 Mbits/sec receiver
```

but reversing the direction drops the throughput down to ~120 Mbps:

```
./iperf3 -w 4M -c 192.168.0.4 -t120s -R
[ 4] 0.00-120.00 sec 1.72 GBytes 123 Mbits/sec sender
[ 4] 0.00-120.00 sec 1.72 GBytes 123 Mbits/sec receiver
```

CPU utilization never got higher than 20% on either side, and core temps never went above 59°C, and that was on the sending side (main office). On the receiving side, core temps were around 45°C. So neither side is working very hard.
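As a sanity check on the summary lines, iperf3's Transfer column counts binary units (1 GByte = 1024³ bytes) while its Bitrate column is decimal (1 Mbit = 10⁶ bits); the figures above are consistent with that. `gbytes_to_mbps` is a hypothetical helper that redoes the conversion, confirming that both runs sustained their rate over the full 120 seconds and that the gap between directions is roughly 4.5×.

```shell
#!/usr/bin/env bash
# gbytes_to_mbps GBYTES SECONDS
# Convert an iperf3 Transfer figure (binary GBytes) over a test duration
# into the decimal Mbits/sec that iperf3 reports as Bitrate.
gbytes_to_mbps() {
  awk -v gb="$1" -v s="$2" 'BEGIN { printf "%.0f\n", gb * 1024^3 * 8 / s / 1e6 }'
}
```

`gbytes_to_mbps 7.83 120` gives 560 and `gbytes_to_mbps 1.72 120` gives 123, matching the receiver lines.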

If I iperf to the same host through a port forward, the throughput drops to far below 100 Mbps, which doesn't make any sense.

And in every case, pushing bits from the main office to my home office is always dramatically slower than it is in the other direction, which is unfortunate because that's the direction I really need the speed (e.g. for an off-site backup repo).

-

Actually scratch that part about the port forward. Now Home-->Main through a port forward is achieving good throughput:

```
[ 6] 0.00-10.00 sec 681 MBytes 571 Mbits/sec sender
[ 6] 0.00-10.00 sec 678 MBytes 568 Mbits/sec receiver
```

The reverse direction is better than it was 10 minutes ago, but still a fraction of the "good" direction:

```
[ 6] 0.00-10.00 sec 272 MBytes 228 Mbits/sec sender
[ 6] 0.00-10.00 sec 272 MBytes 228 Mbits/sec receiver
```

-

OK, that's outside the tunnel, though, so it shows that whatever the issue is, it probably has nothing to do with the IPsec encryption/decryption.

You still see ~10ms latency between the sites? Consistently?
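Since the question is about consistency, the spread of the round-trip times matters as much as the average. A minimal sketch for pulling that out of a long ping run: `rtt_summary` is a hypothetical helper that parses the final summary line, which FreeBSD/pfSense prints as `round-trip min/avg/max/stddev = …` and Linux as `rtt min/avg/max/mdev = …`.

```shell
#!/usr/bin/env bash
# rtt_summary: extract min/avg/max and the spread (stddev or mdev)
# from the summary line at the end of ping output.
rtt_summary() {
  awk -F' = ' '/min\/avg\/max/ {
    split($2, v, "/")
    # v[4] carries a trailing " ms"; adding 0 converts it to a plain number
    printf "min=%s avg=%s max=%s spread=%s\n", v[1], v[2], v[3], v[4] + 0
  }'
}
```

For example, `ping -c 100 <remote-lan-ip> | rtt_summary` run in each direction, ideally while a transfer is in progress, shows whether latency stays near 10 ms or swings under load.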