Packet error on physical interface

-

Hmm, well, it looks like they are all checksum errors on the ix NICs. However, that was updated in the driver for 2.6, and further changes were made more recently because some of those errors were shown to be spurious.

It looks like buffer exhaustion on the bce NIC, which normally means the NIC is not being serviced by the CPU fast enough for the incoming traffic.

I would check that the value matches the current input error count from netstat though.

Steve
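A rough Python sketch of that comparison, in case it is useful. It assumes the bce counter is exposed as `dev.bce.2.com_no_buffers` (the OID path is a guess; only the counter name `com_no_buffers` comes up in this thread) and that the `<Link#N>` row of `netstat -i` carries `Ierrs` in the sixth column. The sysctl sample value is made up for illustration; the netstat row is the bce2 row posted in this thread.

```python
def parse_sysctl(text):
    """Turn 'name: value' lines from sysctl into {name: int}; skip non-numeric values."""
    counters = {}
    for line in text.splitlines():
        name, sep, value = line.partition(":")
        value = value.strip()
        if sep and value.isdigit():
            counters[name.strip()] = int(value)
    return counters


def link_ierrs(netstat_text, ifname):
    """Return Ierrs from the <Link#N> row for ifname in `netstat -i` output, or None."""
    for line in netstat_text.splitlines():
        f = line.split()
        if len(f) >= 6 and f[0].rstrip("*") == ifname and f[2].startswith("<Link#"):
            return int(f[5])
    return None


# Hypothetical sysctl sample: OID path and value are assumptions.
SYSCTL_SAMPLE = "dev.bce.2.com_no_buffers: 2155425\n"

# netstat -i rows as posted in this thread.
NETSTAT_SAMPLE = """\
Name Mtu Network Address Ipkts Ierrs Idrop Opkts Oerrs Coll
bce2 1500 <Link#3> b8:ac:6f:97:06:ee 1868223693 2155425 0 906688134 0 0
bce2 - fe80::%bce2/6 fe80::baac:6fff:f 0 - - 1 - -
"""

driver_count = parse_sysctl(SYSCTL_SAMPLE)["dev.bce.2.com_no_buffers"]
netstat_count = link_ierrs(NETSTAT_SAMPLE, "bce2")
print("counters match" if driver_count == netstat_count else "counters differ")
```

If the two numbers track each other, the input errors are most likely all coming from that one driver counter.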

-

@stephenw10

here is full output from sysctl and netstat:

output.txt

-

Hmm, OK, so those errors are shown as `InputDiscards` for bge, as `com_no_buffers` for bce and as `checksum_errs` in ix.

Do you have flow control active on any of those links?

My first thought here is that the NICs are just dropping packets because the CPU queues are maxed out.

Steve

-

@stephenw10

Do you have flow control active on any of those links?

--> No, I don't.

My first thought here is that the NICs are just dropping packets because the CPU queues are maxed out.

--> So how can I check the CPU queues? And is there a solution for this?

-

Check the boot logs to make sure each NIC is coming up with the expected number of queues initially. Check the CPU usage per core on the firewall using `top -HaSP` at the CLI. Do you have cores that are maxed out?
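To make the "maxed out" check less of an eyeball job, here is a minimal Python sketch that scans the per-CPU lines of `top -HaSP` output and reports cores whose idle share falls below a threshold. The 10% threshold and the function name are arbitrary choices for illustration, not anything pfSense provides.

```python
import re

# Matches lines like "CPU 2:  0.0% user, ..., 83.3% idle" and captures core and idle %.
CPU_LINE = re.compile(r"^CPU\s*(\d+):.*?([\d.]+)%\s+idle", re.MULTILINE)


def busy_cores(top_text, idle_below=10.0):
    """Return {core: idle_percent} for cores whose idle share is under idle_below."""
    busy = {}
    for core, idle in CPU_LINE.findall(top_text):
        if float(idle) < idle_below:
            busy[int(core)] = float(idle)
    return busy


# Two lines in the format top prints; the second one is a made-up saturated core.
SAMPLE = """\
CPU 2:  0.0% user,  0.0% nice, 16.7% system,  0.0% interrupt, 83.3% idle
CPU 3:  1.0% user,  0.0% nice, 97.0% system,  0.0% interrupt,  2.0% idle
"""

print(busy_cores(SAMPLE))
```

A single snapshot can miss short bursts, so it is worth sampling while traffic is at its peak.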

Try enabling flow control. That should prevent packet loss in the event the NIC cannot be serviced fast enough, though it may introduce other issues.

-

@stephenw10 ,

    top -HaSP
    last pid: 81614; load averages: 0.49, 0.57, 0.52 up 42+17:32:16 08:22:31
    453 threads: 18 running, 384 sleeping, 51 waiting
    CPU 0: 0.0% user, 0.0% nice, 0.0% system, 0.0% interrupt, 100% idle
    CPU 1: 0.0% user, 0.0% nice, 0.0% system, 0.0% interrupt, 100% idle
    CPU 2: 0.0% user, 0.0% nice, 16.7% system, 0.0% interrupt, 83.3% idle
    CPU 3: 0.0% user, 0.0% nice, 0.0% system, 0.0% interrupt, 100% idle
    CPU 4: 0.0% user, 0.0% nice, 16.7% system, 0.0% interrupt, 83.3% idle
    CPU 5: 0.0% user, 0.0% nice, 0.0% system, 0.0% interrupt, 100% idle
    CPU 6: 0.0% user, 0.0% nice, 8.3% system, 0.0% interrupt, 91.7% idle
    CPU 7: 0.0% user, 0.0% nice, 0.0% system, 0.0% interrupt, 100% idle
    CPU 8: 0.0% user, 0.0% nice, 0.0% system, 0.0% interrupt, 100% idle
    CPU 9: 0.0% user, 0.0% nice, 0.0% system, 0.0% interrupt, 100% idle
    CPU 10: 0.0% user, 0.0% nice, 7.7% system, 0.0% interrupt, 92.3% idle
    CPU 11: 0.0% user, 0.0% nice, 0.0% system, 0.0% interrupt, 100% idle
    CPU 12: 0.0% user, 0.0% nice, 0.0% system, 0.0% interrupt, 100% idle
    CPU 13: 0.0% user, 0.0% nice, 0.0% system, 0.0% interrupt, 100% idle
    CPU 14: 0.0% user, 0.0% nice, 0.0% system, 0.0% interrupt, 100% idle
    CPU 15: 0.0% user, 0.0% nice, 0.0% system, 0.0% interrupt, 100% idle
    Mem: 106M Active, 6357M Inact, 1549M Wired, 763M Buf, 23G Free
    Swap: 4096M Total, 4096M Free

    PID USERNAME PRI NICE SIZE RES STATE C TIME WCPU COMMAND
    11 root 155 ki31 0B 256K CPU9 9 1022.9 100.00% [idle{idle: cpu9}]
    11 root 155 ki31 0B 256K CPU11 11 1022.8 100.00% [idle{idle: cpu11}]
    11 root 155 ki31 0B 256K CPU15 15 1022.6 100.00% [idle{idle: cpu15}]
    11 root 155 ki31 0B 256K CPU7 7 1022.4 100.00% [idle{idle: cpu7}]
    11 root 155 ki31 0B 256K CPU1 1 1021.9 100.00% [idle{idle: cpu1}]
    11 root 155 ki31 0B 256K CPU8 8 1016.7 96.53% [idle{idle: cpu8}]
    11 root 155 ki31 0B 256K CPU10 10 1016.9 96.35% [idle{idle: cpu10}]
    11 root 155 ki31 0B 256K CPU12 12 1016.5 96.32% [idle{idle: cpu12}]
    11 root 155 ki31 0B 256K CPU14 14 1016.7 95.39% [idle{idle: cpu14}]
    11 root 155 ki31 0B 256K CPU0 0 1000.3 94.90% [idle{idle: cpu0}]
    11 root 155 ki31 0B 256K CPU3 3 1021.4 91.30% [idle{idle: cpu3}]
    11 root 155 ki31 0B 256K CPU4 4 1004.4 91.23% [idle{idle: cpu4}]
    11 root 155 ki31 0B 256K RUN 6 958.7H 88.47% [idle{idle: cpu6}]
    11 root 155 ki31 0B 256K CPU2 2 1010.2 85.78% [idle{idle: cpu2}]
    11 root 155 ki31 0B 256K CPU13 13 1022.7 85.24% [idle{idle: cpu13}]
    11 root 155 ki31 0B 256K CPU5 5 1021.5 63.86% [idle{idle: cpu5}]
    0 root -92 - 0B 1616K - 2 420:57 8.32% [kernel{bge2 taskq}]
    0 root -92 - 0B 1616K - 1 61.3H 8.04% [kernel{bge0 taskq}]
    0 root -76 - 0B 1616K - 2 381:58 6.41% [kernel{if_io_tqg_2}]
    0 root -92 - 0B 1616K - 4 357:50 4.62% [kernel{bge3 taskq}]
    0 root -76 - 0B 1616K - 12 450:05 3.25% [kernel{if_io_tqg_12}]
    0 root -76 - 0B 1616K - 0 541:11 2.73% [kernel{if_io_tqg_0}]
    0 root -92 - 0B 1616K CPU0 0 906:30 2.41% [kernel{bge1 taskq}]
    12 root -92 - 0B 816K WAIT 4 502:59 2.20% [intr{irq258: bce2}]
    0 root -76 - 0B 1616K - 10 426:32 2.18% [kernel{if_io_tqg_10}]
    0 root -76 - 0B 1616K - 4 384:42 2.01% [kernel{if_io_tqg_4}]
    0 root -76 - 0B 1616K - 6 374:11 1.83% [kernel{if_io_tqg_6}]
    81614 root 20 0 14M 4512K CPU6 6 0:00 1.74% top -HaSP
    0 root -76 - 0B 1616K - 14 430:22 1.57% [kernel{if_io_tqg_14}]
    0 root -76 - 0B 1616K - 8 440:59 1.49% [kernel{if_io_tqg_8}]

    cat dmesg.boot

    ix0: <Intel(R) X520 82599ES (SFI/SFP+)> port 0xecc0-0xecdf mem 0xdf300000-0xdf37ffff,0xdf2f8000-0xdf2fbfff irq 35 at device 0.0 on pci5
    ix0: Using 2048 TX descriptors and 2048 RX descriptors
    ix0: Using 8 RX queues 8 TX queues
    ix0: Using MSI-X interrupts with 9 vectors
    ix0: allocated for 8 queues
    ix0: allocated for 8 rx queues
    ix0: Ethernet address: 00:1b:21:bc:77:c0
    ix0: PCI Express Bus: Speed 5.0GT/s Width x4
    ix0: eTrack 0x00012b2c PHY FW V65535
    ix0: netmap queues/slots: TX 8/2048, RX 8/2048
    ix1: <Intel(R) X520 82599ES (SFI/SFP+)> port 0xece0-0xecff mem 0xdf380000-0xdf3fffff,0xdf2fc000-0xdf2fffff irq 46 at device 0.1 on pci5
    ix1: Using 2048 TX descriptors and 2048 RX descriptors
    ix1: Using 8 RX queues 8 TX queues
    ix1: Using MSI-X interrupts with 9 vectors
    ix1: allocated for 8 queues
    ix1: allocated for 8 rx queues
    ix1: Ethernet address: 00:1b:21:bc:77:c1
    ix1: PCI Express Bus: Speed 5.0GT/s Width x4
    ix1: eTrack 0x00012b2c PHY FW V65535
    ix1: netmap queues/slots: TX 8/2048, RX 8/2048
    pcib6: <ACPI PCI-PCI bridge> at device 7.0 on pci0
    pci6: <ACPI PCI bus> on pcib6
    pcib7: <ACPI PCI-PCI bridge> at device 9.0 on pci0
    pci7: <ACPI PCI bus> on pcib7

I see that the ix0 and ix1 interfaces are each using 8 queues.

Additionally, I've re-checked and found that pfSense already has flow control enabled:

    sysctl hw.ix
    hw.ix.enable_rss: 1
    hw.ix.enable_fdir: 0
    hw.ix.unsupported_sfp: 0
    hw.ix.enable_msix: 1
    hw.ix.advertise_speed: 0
    hw.ix.flow_control: 3
    hw.ix.max_interrupt_rate: 31250

Thanks!!!

-

Flow control is negotiated though, both ends have to support it.

Nothing much is happening in that top output. Was there any traffic flowing at that point? Were the errors increasing?
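One way to answer the "were the errors increasing" question with numbers is to take two `netstat -i` snapshots a known interval apart and diff the link-level counters. The Python below is only a sketch under that assumption: the first snapshot uses the bce2 row posted in this thread, while the second snapshot is invented purely to show the arithmetic.

```python
def link_counters(netstat_text):
    """Map interface name -> (Ipkts, Ierrs) using only the <Link#N> rows."""
    counters = {}
    for line in netstat_text.splitlines():
        f = line.split()
        if (len(f) >= 6 and f[2].startswith("<Link#")
                and f[4].isdigit() and f[5].isdigit()):
            counters[f[0].rstrip("*")] = (int(f[4]), int(f[5]))
    return counters


def error_growth(before, after):
    """Per interface: (new input errors, new input packets) between two snapshots."""
    old, new = link_counters(before), link_counters(after)
    return {
        name: (new[name][1] - old[name][1], new[name][0] - old[name][0])
        for name in new if name in old
    }


HEADER = "Name Mtu Network Address Ipkts Ierrs Idrop Opkts Oerrs Coll\n"
# Row as posted in this thread.
BEFORE = HEADER + "bce2 1500 <Link#3> b8:ac:6f:97:06:ee 1868223693 2155425 0 906688134 0 0\n"
# Hypothetical later snapshot: 100000 more packets, 100 more errors.
AFTER = HEADER + "bce2 1500 <Link#3> b8:ac:6f:97:06:ee 1868323693 2155525 0 906700000 0 0\n"

for name, (errs, pkts) in error_growth(BEFORE, AFTER).items():
    print(f"{name}: +{errs} errors over +{pkts} packets")
```

Rows with an empty Address column would shift the fields, so treat the column positions as an assumption that holds for output shaped like the rows in this thread.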

What I'd expect to see, assuming that is the cause, is that when traffic through the NIC peaks, the CPU core(s) servicing it max out and it drops packets.

Are you able to see the number of queues on the bce/bge NICs? `vmstat -i` may show it.

-

@stephenw10

Yes, there was a lot of traffic at that point, and the errors were increasing as well.

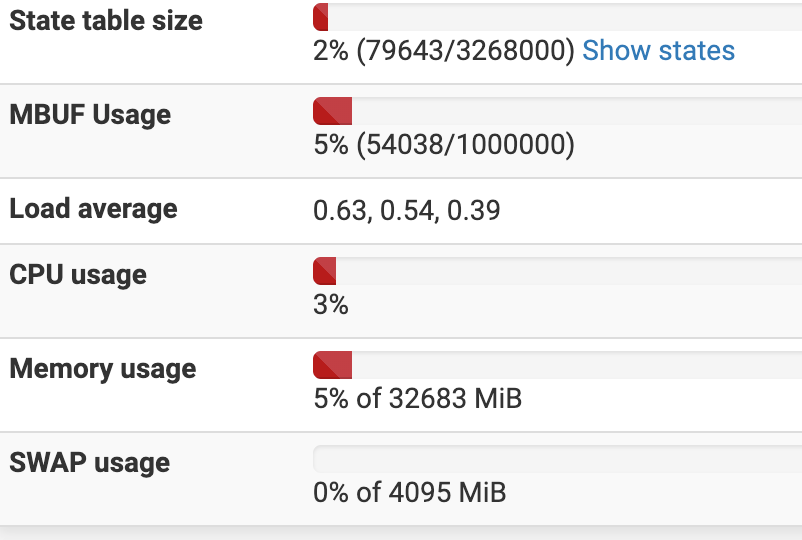

CPU usage is always less than 10%.

    [2.6.0-RELEASE][admin@pfs2]/root: vmstat -i
    interrupt total rate
    irq17: uhci0 24 0
    irq19: ehci0 2947641 1
    irq23: atapci0 2616920 1
    cpu0:timer 1228218976 311
    cpu1:timer 43088269 11
    cpu2:timer 515777307 131
    cpu3:timer 44479430 11
    cpu4:timer 665350682 168
    cpu5:timer 43841493 11
    cpu6:timer 1046412756 265
    cpu7:timer 41014489 10
    cpu8:timer 163311089 41
    cpu9:timer 42267453 11
    cpu10:timer 143573306 36
    cpu11:timer 43289875 11
    cpu12:timer 162167544 41
    cpu13:timer 44236981 11
    cpu14:timer 152160861 39
    cpu15:timer 45152052 11
    irq257: bce1 6913402 2
    irq258: bce2 2158281404 547
    irq259: bce3 1 0
    irq260: mfi0 7352305 2
    irq261: ix0:rxq0 7415677330 1878
    irq262: ix0:rxq1 709426817 180
    irq263: ix0:rxq2 756092990 191
    irq264: ix0:rxq3 701942814 178
    irq265: ix0:rxq4 771849533 195
    irq266: ix0:rxq5 709479026 180
    irq267: ix0:rxq6 813804607 206
    irq268: ix0:rxq7 701544714 178
    irq269: ix0:aq 6 0
    irq270: ix1:rxq0 7808112326 1977
    irq271: ix1:rxq1 1041604395 264
    irq272: ix1:rxq2 1028264841 260
    irq273: ix1:rxq3 1045890761 265
    irq274: ix1:rxq4 1094897663 277
    irq275: ix1:rxq5 1009812406 256
    irq276: ix1:rxq6 1165305943 295
    irq277: ix1:rxq7 1047942990 265
    irq278: ix1:aq 7 0
    irq279: bge0 6303565889 1596
    irq280: bge1 3249572863 823
    irq281: bge2 1628365893 412
    irq282: bge3 1149357028 291
    Total 46754965102 11839

    [2.6.0-RELEASE][admin@pfs2]/root: netstat -i
    Name Mtu Network Address Ipkts Ierrs Idrop Opkts Oerrs Coll
    bce0* 1500 <Link#1> b8:ac:6f:97:06:ea 0 0 0 0 0 0
    bce1 1500 <Link#2> b8:ac:6f:97:06:ec 7117685 0 0 70405 0 0
    bce1 - fe80::%bce1/6 fe80::baac:6fff:f 0 - - 1 - -
    bce1 - 192.168.103.0 pfs2 2343399 - - 515 - -
    bce2 1500 <Link#3> b8:ac:6f:97:06:ee 1868223693 2155425 0 906688134 0 0
    bce2 - fe80::%bce2/6 fe80::baac:6fff:f 0 - - 1 - -
    bce3 1500 <Link#4> b8:ac:6f:97:06:f0 0 0 0 0 0 0
    bce3 - fe80::%bce3/6 fe80::baac:6fff:f 0 - - 1 - -
    bce3 - xxx.xxx.xx.xx xxx.xxx.xx.xx 0 - - 0 - -
    ix0 1500 <Link#5> 00:1b:21:bc:77:c0 6590339737 7248161 0 16393394382 0 0
    ix1 1500 <Link#6> 00:1b:21:bc:77:c0 10209454971 22546676 0 16548545943 0 0
    bge0 1500 <Link#7> 00:0a:f7:90:e6:ec 21040184018 25001666 0 8758689519 0 0
    bge0 - fe80::%bge0/6 fe80::20a:f7ff:fe 0 - - 1 - -
    bge1 1500 <Link#8> 00:0a:f7:90:e6:ed 3801913176 6100037 0 2248351113 0 0
    bge1 - fe80::%bge1/6 fe80::20a:f7ff:fe 0 - - 0 - -
    bge2 1500 <Link#9> 00:0a:f7:90:e6:ee 1722390896 1717684 0 825525005 0 0
    bge2 - fe80::%bge2/6 fe80::20a:f7ff:fe 0 - - 1 - -
    bge3 1500 <Link#10> 00:0a:f7:90:e6:ef 1665701913 2291555 0 914404232 0 0
    bge3 - fe80::%bge3/6 fe80::20a:f7ff:fe 0 - - 2 - -

-

Hmm, looks like 1 irq per NIC for bce/bge. However I'd expect to see one CPU core at 100% if it was failing to service the NIC fast enough.
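For reference, the "1 irq per NIC" reading can be reproduced from the `vmstat -i` output with a few lines of Python. This is just a sketch: the driver list and the decision to skip the ix admin queue (`aq`) vector are my own assumptions, and the sample rows are copied from the output posted above.

```python
import re
from collections import Counter

# Matches "irq258: bce2 2158281404 547" and "irq261: ix0:rxq0 7415677330 1878".
IRQ_LINE = re.compile(r"^irq\d+:\s+([a-z]+\d+)(?::(\S+))?\s+\d+\s+\d+\s*$")


def vectors_per_nic(vmstat_text, drivers=("bce", "bge", "ix")):
    """Count interrupt vectors per NIC, ignoring admin-queue ('aq') vectors."""
    counts = Counter()
    for line in vmstat_text.splitlines():
        m = IRQ_LINE.match(line.strip())
        if not m or m.group(2) == "aq":
            continue
        device = m.group(1)
        if device.rstrip("0123456789") in drivers:
            counts[device] += 1
    return dict(counts)


SAMPLE = """\
cpu0:timer 1228218976 311
irq258: bce2 2158281404 547
irq260: mfi0 7352305 2
irq261: ix0:rxq0 7415677330 1878
irq262: ix0:rxq1 709426817 180
irq269: ix0:aq 6 0
irq279: bge0 6303565889 1596
"""

print(vectors_per_nic(SAMPLE))
```

Run against the full output, ix0 and ix1 should come out with 8 vectors each and every bce/bge port with 1, which matches the queue counts in the boot log.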

What are those NICs connected to? Are you seeing errors on the connected devices?

-

@stephenw10 ,

Here is the output of top:

    [2.6.0-RELEASE][admin@pfs2]/root: top -HaSP
    last pid: 11585; load averages: 0.58, 0.76, 0.67 up 45+17:40:30 08:30:45
    450 threads: 17 running, 382 sleeping, 51 waiting
    CPU 0: 0.0% user, 0.0% nice, 12.8% system, 0.0% interrupt, 87.2% idle
    CPU 1: 0.0% user, 0.0% nice, 0.0% system, 0.0% interrupt, 100% idle
    CPU 2: 0.0% user, 0.0% nice, 4.3% system, 0.0% interrupt, 95.7% idle
    CPU 3: 0.0% user, 0.0% nice, 0.0% system, 0.0% interrupt, 100% idle
    CPU 4: 0.0% user, 0.0% nice, 8.0% system, 3.7% interrupt, 88.2% idle
    CPU 5: 0.0% user, 0.0% nice, 0.0% system, 0.0% interrupt, 100% idle
    CPU 6: 0.0% user, 0.0% nice, 10.7% system, 0.0% interrupt, 89.3% idle
    CPU 7: 0.0% user, 0.0% nice, 0.0% system, 0.0% interrupt, 100% idle
    CPU 8: 0.0% user, 0.0% nice, 2.7% system, 0.0% interrupt, 97.3% idle
    CPU 9: 0.5% user, 0.0% nice, 0.0% system, 0.0% interrupt, 99.5% idle
    CPU 10: 0.5% user, 0.0% nice, 2.7% system, 0.0% interrupt, 96.8% idle
    CPU 11: 0.0% user, 0.0% nice, 0.5% system, 0.0% interrupt, 99.5% idle
    CPU 12: 0.0% user, 0.0% nice, 2.1% system, 0.0% interrupt, 97.9% idle
    CPU 13: 0.0% user, 0.0% nice, 0.0% system, 0.0% interrupt, 100% idle
    CPU 14: 0.0% user, 0.0% nice, 2.7% system, 0.0% interrupt, 97.3% idle
    CPU 15: 0.5% user, 0.0% nice, 0.5% system, 0.0% interrupt, 98.9% idle
    Mem: 104M Active, 6370M Inact, 1570M Wired, 782M Buf, 23G Free
    Swap: 4096M Total, 4096M Free

    PID USERNAME PRI NICE SIZE RES STATE C TIME WCPU COMMAND
    11 root 155 ki31 0B 256K CPU11 11 1094.8 100.00% [idle{idle: cpu11}]
    11 root 155 ki31 0B 256K CPU13 13 1094.7 100.00% [idle{idle: cpu13}]
    11 root 155 ki31 0B 256K CPU3 3 1093.3 99.84% [idle{idle: cpu3}]
    11 root 155 ki31 0B 256K CPU1 1 1093.8 99.77% [idle{idle: cpu1}]
    11 root 155 ki31 0B 256K CPU15 15 1094.6 99.46% [idle{idle: cpu15}]
    11 root 155 ki31 0B 256K CPU7 7 1094.3 98.81% [idle{idle: cpu7}]
    11 root 155 ki31 0B 256K CPU5 5 1093.5 98.73% [idle{idle: cpu5}]
    11 root 155 ki31 0B 256K RUN 8 1088.5 98.21% [idle{idle: cpu8}]
    11 root 155 ki31 0B 256K CPU14 14 1088.5 98.10% [idle{idle: cpu14}]
    11 root 155 ki31 0B 256K CPU10 10 1088.6 97.99% [idle{idle: cpu10}]
    11 root 155 ki31 0B 256K CPU12 12 1088.2 97.60% [idle{idle: cpu12}]
    11 root 155 ki31 0B 256K CPU9 9 1094.8 97.09% [idle{idle: cpu9}]
    11 root 155 ki31 0B 256K CPU2 2 1081.5 94.77% [idle{idle: cpu2}]
    11 root 155 ki31 0B 256K CPU4 4 1075.0 92.37% [idle{idle: cpu4}]
    11 root 155 ki31 0B 256K CPU0 0 1071.4 88.04% [idle{idle: cpu0}]
    11 root 155 ki31 0B 256K CPU6 6 1028.6 85.34% [idle{idle: cpu6}]
    0 root -92 - 0B 1616K - 6 63.1H 12.78% [kernel{bge0 taskq}]
    0 root -92 - 0B 1616K - 0 938:32 8.50% [kernel{bge1 taskq}]
    0 root -76 - 0B 1616K - 0 564:06 3.74% [kernel{if_io_tqg_0}]
    12 root -92 - 0B 816K WAIT 4 533:51 3.09% [intr{irq258: bce2}]
    0 root -92 - 0B 1616K - 2 446:02 2.95% [kernel{bge2 taskq}]
    0 root -92 - 0B 1616K - 4 395:52 2.95% [kernel{bge3 taskq}]
    0 root -76 - 0B 1616K - 12 467:41 2.23% [kernel{if_io_tqg_12}]
    0 root -76 - 0B 1616K - 6 389:13 2.03% [kernel{if_io_tqg_6}]
    0 root -76 - 0B 1616K - 2 398:09 2.03% [kernel{if_io_tqg_2}]
    0 root -76 - 0B 1616K - 10 442:43 1.90% [kernel{if_io_tqg_10}]
    0 root -76 - 0B 1616K - 14 447:08 1.84% [kernel{if_io_tqg_14}]
    0 root -76 - 0B 1616K - 8 457:22 1.69% [kernel{if_io_tqg_8}]
    0 root -76 - 0B 1616K - 4 403:09 1.64% [kernel{if_io_tqg_4}]
    54075 netdata 40 19 350M 213M nanslp 13 69:02 0.48% /usr/local/sbin/netdata -u netdata -P /var/db/netdata/netdata.pid{PLUGIN[freebsd]}

The bce/bge NICs are the internet lines, so they connect to converters (ISP devices). I can't check the ISP devices.

ix is the LAN interface. It is connected to a Cisco Meraki switch, but I checked that switch and I don't see any errors on the interfaces that connect to pfSense.

-

Hmm, hard to say.

You might try swapping the ix and bce/bge NICs to see if the errors follow them. That might not be possible with the media types you have.