Virtualized ESXI PFSense can't pass ~1gbit with iPerf3

-

@bob-dig

"just because" is not a very illuminating answer.

Can you please clarify why I can't test with the traffic "terminating" on pfSense?

Thank you!

-

@fmroeira86 I can't; I have only read that it is like that. But how often do you copy stuff onto your firewall? Just test between your hosts and see for yourself...
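A minimal sketch of the host-to-host test suggested here. It assumes iperf3 is installed on two hosts on different subnets, with `iperf3 -s` already running on the far host. The `-c`, `-P`, `-t` and `-J` options are standard iperf3 client flags, and `end.sum_sent` / `end.sum_received` are the summary objects in iperf3's JSON report for TCP tests. The helper names `summarize` and `run_client` are hypothetical.

```python
import json
import subprocess


def summarize(report: dict) -> tuple[float, float]:
    """Return (sender, receiver) throughput in Gbit/s from an iperf3 -J TCP report."""
    end = report["end"]
    sent = end["sum_sent"]["bits_per_second"] / 1e9
    received = end["sum_received"]["bits_per_second"] / 1e9
    return sent, received


def run_client(server: str, streams: int = 4, seconds: int = 10) -> tuple[float, float]:
    """Run an iperf3 client against `server` (a host beyond the firewall) and summarize it."""
    # -c: client mode, -P: parallel streams, -t: duration in seconds, -J: JSON output
    cmd = ["iperf3", "-c", server, "-P", str(streams), "-t", str(seconds), "-J"]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return summarize(json.loads(result.stdout))
```

For example, with the server on a host at the hypothetical address 192.0.2.10, calling `run_client("192.0.2.10")` from a host on the other subnet measures traffic routed through pfSense rather than traffic terminating on it. Four parallel streams is an arbitrary default.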

-

@fmroeira86 said in Virtualized ESXI PFSense can't pass ~1gbit with iPerf3:

@heper Why?

https://docs.netgate.com/pfsense/en/latest/packages/iperf.html?highlight=iperf#usage

iperf running on pfSense software is NOT a suitable way of testing firewall throughput, as there is a significant difference between performance of traffic initiated or terminated on the firewall and traffic traversing the firewall. There are many suitable uses for iperf running on pfSense software, but testing the throughput capabilities of the firewall is not one of them.

-

Thank you for your clarification!

-

@bob-dig Thank you!

-

Well, I just did a test as you described, with two machines on different subnets routed by pfSense, and I couldn't get more than this:

[SUM] 0.00-10.00 sec 1.46 GBytes 1.25 Gbits/sec 8097 sender

[SUM] 0.00-10.00 sec 1.45 GBytes 1.24 Gbits/sec receiver

-
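To sanity-check a [SUM] line, the bitrate can be recomputed from the transfer column. This sketch assumes, consistently with every figure in this thread, that iperf3 prints transfer in binary GBytes (2^30 bytes) and bitrate in decimal Gbits/sec (10^9 bit/s). The trailing number on the sender line (8097 here) is iperf3's retransmit count, not part of the rate. Because the transfer column is rounded, recomputed values agree only to about two decimals. `transfer_to_gbps` is a hypothetical helper.

```python
GIB = 1024 ** 3  # iperf3's "GBytes" column uses binary units (2**30 bytes)


def transfer_to_gbps(gbytes: float, seconds: float) -> float:
    """Convert an iperf3 transfer figure in GBytes over `seconds` into Gbit/s (10**9 bit/s)."""
    bits = gbytes * GIB * 8
    return bits / seconds / 1e9


# Sender figure from the routed test above: 1.46 GBytes in 10 seconds.
print(round(transfer_to_gbps(1.46, 10.0), 2))  # 1.25
```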

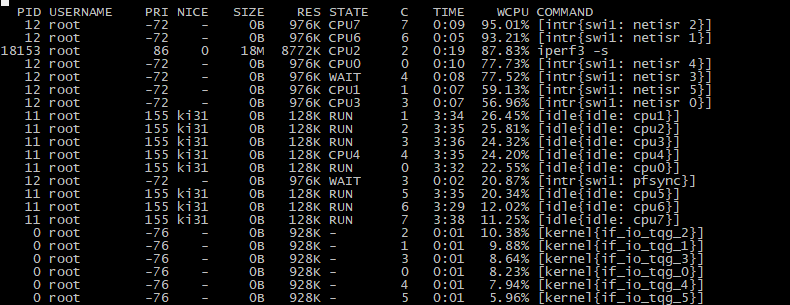

Check the per-core loading shown in top -HaSP when testing throughput.

-
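A rough sketch of what to look for in that output (in FreeBSD top, -H shows threads, -a full command lines, -S system processes, -P per-CPU usage): if one CPU sits near 0% idle while the others are mostly idle, the forwarding load is probably not being spread across cores. The per-CPU line format matched below is an assumption based on FreeBSD top's summary lines, and the 10% idle threshold is arbitrary.

```python
import re

# Assumed shape of a per-CPU line printed by FreeBSD `top -P`, e.g.
# "CPU 0:  0.0% user,  0.0% nice, 30.5% system, 20.1% interrupt, 49.4% idle"
CPU_LINE = re.compile(r"^\s*CPU\s+(\d+):.*?([\d.]+)% idle", re.MULTILINE)


def busy_cores(top_output: str, max_idle: float = 10.0) -> list[int]:
    """Return the CPU numbers whose idle percentage is at or below `max_idle`."""
    return [
        int(cpu)
        for cpu, idle in CPU_LINE.findall(top_output)
        if float(idle) <= max_idle
    ]
```

Pasting a captured screen of top output into `busy_cores` lists the saturated cores; a single entry while traffic is flowing points at a per-queue or per-thread bottleneck.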

@stephenw10 said in Virtualized ESXI PFSense can't pass ~1gbit with iPerf3:

top -HaSP

Ok.

After some reading, I added these entries to loader.conf.local:

hw.pci.honor_msi_blacklist="0"

dev.vmx.0.iflib.override_ntxds="0,4096"

dev.vmx.0.iflib.override_nrxds="0,2048,0"

dev.vmx.1.iflib.override_ntxds="0,4096"

dev.vmx.1.iflib.override_nrxds="0,2048,0"

kern.ipc.nmbclusters="1000000"

kern.ipc.nmbjumbop="524288"

I also added:

net.isr.dispatch=deferred

and I got:

[SUM] 0.00-10.00 sec 4.65 GBytes 4.00 Gbits/sec 2111 sender

[SUM] 0.00-10.01 sec 4.62 GBytes 3.96 Gbits/sec receiver

It's improving, but still far from 10 Gbit/s. I would be satisfied with 8 Gbit/s...
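The dev.vmx.N entries in loader.conf.local above are per-device tunables, so each vmx interface needs its own pair of lines. A small sketch that renders them for a given number of interfaces; the ring values are copied from the entries in this post, not recommended settings, and `vmx_ring_overrides` is a hypothetical helper.

```python
# Values copied from the loader.conf.local entries in this post;
# they are the poster's settings, not tuned recommendations.
NTXDS = "0,4096"
NRXDS = "0,2048,0"


def vmx_ring_overrides(interfaces: int) -> list[str]:
    """Render the per-interface iflib descriptor overrides for vmx0..vmx{N-1}."""
    lines = []
    for n in range(interfaces):
        lines.append(f'dev.vmx.{n}.iflib.override_ntxds="{NTXDS}"')
        lines.append(f'dev.vmx.{n}.iflib.override_nrxds="{NRXDS}"')
    return lines


print("\n".join(vmx_ring_overrides(2)))
```

With two interfaces this reproduces the four dev.vmx lines listed above.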

During my testing, this is the output of the top -HaSP command:

-

Update:

New results with:

Hardware Checksum Offloading

Hardware TCP Segmentation Offloading

Hardware Large Receive Offloading

all ENABLED (that is, the corresponding "Disable" checkboxes unchecked)

And I also removed:

net.isr.dispatch=deferred

[SUM] 0.00-60.00 sec 56.8 GBytes 8.14 Gbits/sec 12804 sender

[SUM] 0.00-60.00 sec 56.8 GBytes 8.13 Gbits/sec receiver

-

That's probably as good as you're going to get.

You might check the number of queues each vmx NIC is using. It should show in the boot log.
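A sketch for pulling the queue counts out of the boot log. It assumes iflib reports them in lines shaped like "vmx0: Using 4 RX queues 4 TX queues"; that message shape is an assumption, so the match is case-insensitive and anything else is ignored. `vmx_queues` is a hypothetical helper.

```python
import re

# Assumed iflib boot-message shape, e.g. "vmx0: Using 4 RX queues 4 TX queues".
QUEUE_LINE = re.compile(
    r"^(vmx\d+): Using (\d+) RX queues (\d+) TX queues",
    re.IGNORECASE | re.MULTILINE,
)


def vmx_queues(boot_log: str) -> dict[str, tuple[int, int]]:
    """Map each vmx interface to its (rx_queues, tx_queues) found in the boot log text."""
    return {
        nic: (int(rx), int(tx))
        for nic, rx, tx in QUEUE_LINE.findall(boot_log)
    }
```

On FreeBSD-based systems the boot messages are normally kept in /var/run/dmesg.boot, so `vmx_queues(open("/var/run/dmesg.boot").read())` would be one way to feed it. An interface that ends up with a single queue would fit the single-busy-core pattern in top.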

-

In the meantime, I tried running iperf3 between two servers (with pfSense in the middle) and only got:

[SUM] 0.00-20.00 sec 10.1 GBytes 4.33 Gbits/sec 12919 sender

[SUM] 0.00-20.01 sec 10.0 GBytes 4.31 Gbits/sec receiver

If I make the pfSense box the iperf3 server, I get the results I reported before:

[SUM] 0.00-60.00 sec 56.8 GBytes 8.14 Gbits/sec 12804 sender

[SUM] 0.00-60.00 sec 56.8 GBytes 8.13 Gbits/sec receiver
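Putting the two receiver figures side by side shows the gap the Netgate documentation warns about: traffic routed through pfSense reached roughly half the rate of traffic terminating on it. A minimal sketch, assuming the [SUM] line format shown in this thread; `gbps` is a hypothetical helper.

```python
import re

# Bitrate column of an iperf3 "[SUM]" summary line, e.g.
# "[SUM] 0.00-20.01 sec 10.0 GBytes 4.31 Gbits/sec receiver"
RATE = re.compile(r"([\d.]+) ([KMG])bits/sec")
TO_GBPS = {"K": 1e-6, "M": 1e-3, "G": 1.0}  # factor converting each prefix to Gbit/s


def gbps(sum_line: str) -> float:
    """Extract the bitrate from an iperf3 [SUM] line, in Gbit/s."""
    match = RATE.search(sum_line)
    if match is None:
        raise ValueError(f"no bitrate in: {sum_line!r}")
    value, prefix = match.groups()
    return float(value) * TO_GBPS[prefix]


routed = gbps("[SUM] 0.00-20.01 sec 10.0 GBytes 4.31 Gbits/sec receiver")
terminated = gbps("[SUM] 0.00-60.00 sec 56.8 GBytes 8.13 Gbits/sec receiver")
print(f"routed/terminated = {routed / terminated:.0%}")  # routed/terminated = 53%
```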