Major DNS Bug 23.01 with Quad9 on SSL

-

Unfortunately, this is still an issue for me despite my efforts to change ISP and to restore/rebuild my configuration.

Note that I am not using Quad9 but Cloudflare DNS over TLS (with DNSSEC disabled).

So I'm back to plain old port 53 forwarding to Cloudflare.

While I had some quiet time, I ran some dnsperf load testing at an average of 81 queries per second from an Ubuntu VM and managed to recreate what I intermittently see when this issue occurs: DNS over TLS breaks fairly quickly and DNS queries stop completely. I have to restart Unbound to fix it.

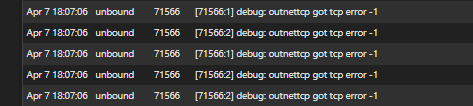

The errors in the syslog are the same as before, when it occurred randomly:

[54217:3] debug: tcp error for address 1.1.1.1 port 853

[54217:0] debug: outnettcp got tcp error -1

I did bump up some of the Unbound cache settings, buffers and queries per thread, but it still borks up quite quickly.

Running the same forwarding to Cloudflare without DNS over TLS, I can run around 165 queries per second. It eventually slows down, but out of 3 or 4 runs I only managed to break it once (DNS just stopped); otherwise it appeared to recover.
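For anyone who wants to repeat this kind of load test: dnsperf reads its queries from a plain-text data file, one `name type` pair per line. Below is a minimal Python sketch that prints such a list; the domain names are hypothetical placeholders, not the ones used in the test above.

```python
# Hypothetical sample names; replace with your own (larger, more varied) list.
DOMAINS = ["google.com", "cloudflare.com", "quad9.net", "netgate.com"]
RECORD_TYPES = ["A", "AAAA"]


def dnsperf_lines(domains, record_types):
    """Return dnsperf data-file lines of the form '<name> <type>'."""
    return [f"{name} {rtype}" for name in domains for rtype in record_types]


if __name__ == "__main__":
    # Print one query per line; redirect stdout to create the data file.
    for line in dnsperf_lines(DOMAINS, RECORD_TYPES):
        print(line)
```

Redirect the output to a file (for example `python make_queries.py > queries.txt`, the script name being a placeholder) and point dnsperf at the pfSense resolver, e.g. `dnsperf -s <pfSense LAN address> -d queries.txt -l 60 -Q 81`, where `-Q` caps the query rate; check `dnsperf -h` on your version for the exact options. A short list like this is mostly answered from Unbound's cache, so a larger, varied list is needed to really stress the upstream DoT connections.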

If there is anything I can adjust or any commands I can run, I am happy to give it a go, if this test can bring us closer to figuring out what’s going on.

-

Interesting.

The common factor is "1.1.1.1".

'Normal' DNS uses port 53, and most of that traffic is probably UDP. A request and its answer usually fit in a single Ethernet packet each.

When port 853 is used, it's DNS over TLS, so the traffic can only be TCP. I wonder whether a single Ethernet packet can handle the entire DNS exchange.
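To put the UDP/TCP difference in concrete terms: over UDP a DNS query travels as a single datagram, while over TCP, and therefore over DNS over TLS on port 853, every message is preceded by a two-byte length field (RFC 1035 section 4.2.2, reused by RFC 7858), and a TCP handshake plus a TLS handshake normally have to complete before the first query can be sent. A minimal Python sketch of the query message and its TCP framing, for illustration only:

```python
import struct


def build_query(qname, qtype=1, query_id=0x1234):
    """Build a minimal DNS query (RFC 1035): 12-byte header plus one question.

    qtype 1 is A, 28 is AAAA. The flags word 0x0100 sets Recursion Desired.
    """
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    labels = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in qname.rstrip(".").split(".")
    )
    # The name ends with the zero-length root label, then QTYPE and QCLASS (1 = IN).
    return header + labels + b"\x00" + struct.pack("!HH", qtype, 1)


def frame_for_tcp(message):
    """Prefix a DNS message with its length as a 16-bit big-endian integer."""
    return struct.pack("!H", len(message)) + message


if __name__ == "__main__":
    query = build_query("www.google.com")
    print("UDP payload:", len(query), "bytes")
    print("TCP/TLS payload:", len(frame_for_tcp(query)), "bytes, before TLS overhead")
```

The query itself is tiny either way; the extra cost of DoT is the connection setup and the need to keep that connection alive, not the size of the DNS messages.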

Still, either method would use only a fraction of your WAN throughput.

When you visit a web site with your PC, or your mail client drops a mail into your SMTP server or retrieves one, all that traffic uses TLS too.

Small difference: that traffic isn't generated by pfSense; pfSense just passes the 'packets' along, without knowing that it's 'TLS' stuff.

Has anyone done this test:

Instead of having pfSense act as the forwarder that contacts "1.1.1.1" on behalf of the entire LAN, what about setting up the DNS (statically) so they all get their DNS from "1.1.1.1" using TLS (port 853)? I understood that Windows 11 can do DNS over TLS (Windows 10 needs an extra program for that).

If the issue isn't local (pfSense) but somewhere on the link (ISP) or with "1.1.1.1", it should show up the same way.

If the issue goes away: that's a big finger pointing at pfSense::Unbound.

I tend to think: no way... as I'm using Unbound 1.17.1 (pfSense 23.01) as a forwarder to 1.1.1.1:853 and it works just fine. Maybe my LANs (a hotel, with many clients connected to the captive portal all day long) are too small, or don't have enough devices hammering out DNS requests, to make the issue show up?
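For a quick version of this test from a single LAN machine, without reconfiguring every client, a small script can talk DNS over TLS to 1.1.1.1 directly, bypassing pfSense as the resolver. A minimal Python sketch using only the standard library; the TLS name `cloudflare-dns.com` is an assumption based on Cloudflare's public documentation, and the query ID and timeout are arbitrary:

```python
import socket
import ssl
import struct


def build_query(qname, qtype=1, query_id=0x4242):
    """Minimal RFC 1035 query: 12-byte header (RD set) plus one IN question."""
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    labels = b"".join(
        bytes([len(part)]) + part.encode("ascii")
        for part in qname.rstrip(".").split(".")
    )
    return header + labels + b"\x00" + struct.pack("!HH", qtype, 1)


def recv_exact(sock, count):
    """Read exactly count bytes, raising if the peer closes the stream first."""
    data = b""
    while len(data) < count:
        chunk = sock.recv(count - len(data))
        if not chunk:
            raise ConnectionError("server closed the connection")
        data += chunk
    return data


def dot_query(server, tls_name, qname, timeout=5.0):
    """Send one query over DNS over TLS (TCP port 853) and return the raw reply.

    The server certificate is verified against tls_name, similar to the
    hostname used for TLS verification in the pfSense forwarding setup.
    """
    query = build_query(qname)
    context = ssl.create_default_context()
    with socket.create_connection((server, 853), timeout=timeout) as raw:
        with context.wrap_socket(raw, server_hostname=tls_name) as tls:
            # DoT uses the same 2-byte length prefix as DNS over TCP.
            tls.sendall(struct.pack("!H", len(query)) + query)
            (length,) = struct.unpack("!H", recv_exact(tls, 2))
            return recv_exact(tls, length)


def rcode(reply):
    """Response code: low 4 bits of the header flags (0 = NOERROR, 3 = NXDOMAIN)."""
    return struct.unpack("!H", reply[2:4])[0] & 0x000F
```

For example, `rcode(dot_query("1.1.1.1", "cloudflare-dns.com", "www.google.com"))` should return 0 when the path works. Note that this opens a new TLS connection per query, whereas Unbound reuses connections, so it tests the network path and 1.1.1.1 rather than Unbound's connection handling.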

@steveits said in Major DNS Bug 23.01 with Quad9 on SSL:

It was working in 22.05 and earlier for me but problematic in 23.01.

Humm, I didn't test that.

Years ago I followed a long and tedious seminar to learn about DNSSEC, as I wanted to make my own DNS server DNSSEC 'ready'. It was pure pain. I really had the impression that they had just made the world's most used Internet service (DNS) also the most complicated one.

But it worked out: https://dnsviz.net/d/brit-hotel-fumel.fr/dnssec/

I admit I don't know what Unbound does if it is asked to do DNSSEC while forwarding.

Does it first just take the DNS request, forward "what is the A of 'www.google.com'" to "1.1.1.1" and wait for an answer? And then, in parallel (?), does it also fetch the RRSIG DNSSEC records of the dot com TLD, and then the RRSIG records of the google.com name server, to rebuild the validity of the chain from top (the root key, preloaded and initialized at Unbound startup: it's the upper "DNSKEY alg=8, id=20326 - 2048 bits" key you can see in the DNSSEC trust chain, see my link) to bottom?

I don't know if 1.1.1.1 will honor these RRSIG DNSSEC requests.

No ... wait ....

These RRSIG requests would get sent over port 53 to the corresponding zone... they can't be sent to 1.1.1.1.

And if they were, 1.1.1.1 would surely ditch them.

What I do know: this means Unbound is still resolving... while forwarding, and that doesn't make sense.

Also: the root key "20326" is already known when Unbound starts, and it doesn't change often (a key rollover is a DNS admin's nightmare).
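The "id=20326" that DNSViz shows for the root key is its key tag, a 16-bit checksum over the DNSKEY record data (RFC 4034, Appendix B). It only identifies the key; what Unbound loads as the trust anchor is the key itself (or its DS digest). A short Python sketch of the calculation:

```python
def key_tag(rdata: bytes) -> int:
    """Key tag of a DNSKEY record, per RFC 4034 Appendix B.

    rdata is the DNSKEY RDATA in wire format: flags (2 bytes), protocol (1 byte),
    algorithm (1 byte), then the public key. The special rule for the obsolete
    algorithm 1 (RSA/MD5) is not implemented.
    """
    total = 0
    for index, byte in enumerate(rdata):
        # Bytes at even offsets count as the high half of a 16-bit word.
        total += (byte << 8) if index % 2 == 0 else byte
    total += (total >> 16) & 0xFFFF  # fold the carry back in
    return total & 0xFFFF
```

Given the wire-format RDATA of the current root KSK (flags 257, protocol 3, algorithm 8, then the 2048-bit key), this should yield 20326, the value shown in the DNSViz chain.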

But when Unbound contacts the TLD name server (like dot com), it will ask for the NS record of the google.com name server AND, at the same moment, the zone RRSIG that signs the dot com zone.

The same thing happens when the domain's name server is asked for the A record of google.com itself. The entire trust chain is validated from top to bottom and, if it checks out, the answer (the A record) is made available to the requesting client.

So, yeah, doing DNSSEC while forwarding is like putting gasoline in a Tesla.

You would still be able to drive the Tesla, but things would get very stinky in the long run.

I even wonder where you would put the gasoline.

The slightest spark would even blow up the car, as there is no gas tank to hold it.

It doesn't make sense to use DNSSEC when forwarding, as all these RRSIG requests will totally wipe out the time gained. All DNSSEC traffic is 'non-TLS' (DNSSEC isn't available over TLS).

When forwarding, you choose to trust the upstream DNS, "no matter what".

I will re-activate forwarding to 1.1.1.1 over TLS (853) WITH DNSSEC enabled.

All bets are open.

Will useless DNS traffic skyrocket? Will my pfSense catch fire?

Extra: will Unbound really do these RRSIG lookups directly against the TLD and domain name servers involved, completely bypassing 1.1.1.1? I have to check that.
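Whichever servers they end up going to, DNSSEC lookups are ordinary DNS queries: a validator asks for types such as DS (43) and DNSKEY (48) and sets the DO ("DNSSEC OK") bit in an EDNS0 OPT record so that the matching RRSIG records come back inside the answers (RFC 3225, RFC 6891); validators normally do not query for RRSIG separately. A minimal Python sketch of such a query's wire format; it says nothing about which server Unbound sends it to, and the 1232-byte UDP size is just a common choice, not a pfSense setting:

```python
import struct

TYPE_OPT = 41
TYPE_DS = 43
TYPE_DNSKEY = 48
DO_BIT = 0x8000  # "DNSSEC OK" flag in the OPT record's TTL field (RFC 3225)


def encode_name(name):
    """Encode a domain name as length-prefixed labels ending with the root label."""
    labels = [part for part in name.rstrip(".").split(".") if part]
    return b"".join(bytes([len(p)]) + p.encode("ascii") for p in labels) + b"\x00"


def dnssec_query(qname, qtype, query_id=0x2B2B, udp_size=1232):
    """Build a query carrying an EDNS0 OPT record with the DO bit set."""
    # Header: RD set; 1 question, 0 answer, 0 authority, 1 additional (the OPT RR).
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 1)
    question = encode_name(qname) + struct.pack("!HH", qtype, 1)  # class IN
    # OPT RR: root owner name, TYPE 41, CLASS = advertised UDP payload size,
    # TTL = extended RCODE (8 bits), version (8 bits), flags (16 bits); RDLENGTH 0.
    opt = b"\x00" + struct.pack("!HHBBHH", TYPE_OPT, udp_size, 0, 0, DO_BIT, 0)
    return header + question + opt
```

For example, `dnssec_query("google.com", TYPE_DS)` is the kind of question a validator needs answered (with its RRSIG) to link the dot com zone to google.com.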

....

Sorry for the complicated rant.

All I know is that I don't know everything

-

@gertjan Note I’m not saying DNSSEC is supposed to work, just that it didn’t fail for me in earlier versions and does now. Netgate says:

https://docs.netgate.com/pfsense/en/latest/services/dns/resolver-config.html

“DNSSEC works best when using the root servers directly, unless the forwarding servers support DNSSEC. Even if the forwarding DNS servers support DNSSEC, the response cannot be fully validated. If upstream DNS servers do not support DNSSEC in forwarding mode or with domain overrides, DNS queries are known to be intercepted upstream, or clients have issues with large DNS responses, DNSSEC may need to be disabled.”

Quad9’s setup doc says

https://support.quad9.net/hc/en-us/articles/4433380601229-Setup-pfSense-and-DNS-over-TLS

“DNSSEC is already enforced by Quad9, and enabling DNSSEC at the forwarder level can cause false DNSSEC failures.”

All I’m saying is that I’ve suggested to maybe 15-20 (a guess) people here with DNS problems after upgrading to 23.01 that they disable DNSSEC. I’d guess 2/3 said it fixed their issue and 1/3 had to disable TLS as well. (Note that the Quad9 doc is about how to set up TLS…)

And yes, it seems weird that any rate limiting on the remote server end would be related to upgrading pfSense, but it’s unlikely that multiple DNS providers set up rate limits all at the same time. So I’m guessing some change in Unbound is probably related. Which is of course not very scientific! But the tests above do seem to indicate some sort of issue.

-

@joedan said in Major DNS Bug 23.01 with Quad9 on SSL:

Errors in the syslog are as before when it occurred randomly..

[54217:3] debug: tcp error for address 1.1.1.1 port 853

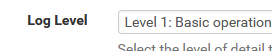

[54217:0] debug: outnettcp got tcp error -1

What logging level do you have set in Unbound when you see that?

I would expect to be able to see additional logging with those sorts of errors.

-

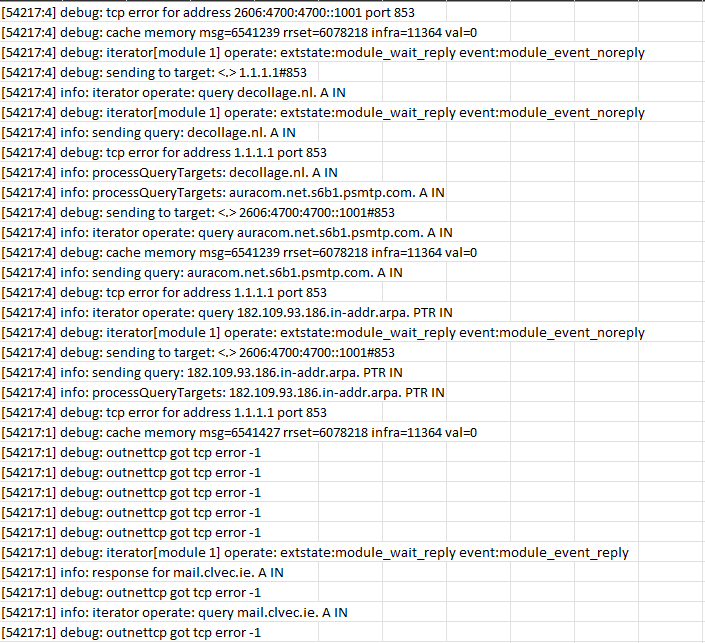

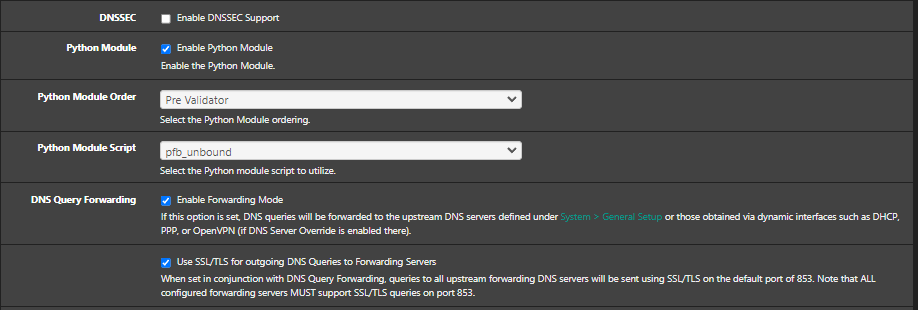

Unbound logging level 3 (I did manage to switch it to 4 very briefly, but had to stop due to the amount of data). A snippet of the level 3 logging downloaded from Graylog is attached.

Hopefully I am ingesting everything.

-

There is a closed bug report on the unbound GitHub site with this exact same TCP error here: https://github.com/NLnetLabs/unbound/issues/535. The unbound developer attributed the TCP error to the other end of the connection (meaning not the unbound side) closing the TCP socket. Maybe this is a clue???

-

@gertjan said in Major DNS Bug 23.01 with Quad9 on SSL:

what about setting up the DNS (statically) so they all get their DNS from "1.1.1.1" using TLS (port 853)

@joedan Can you run your test against 1.1.1.1 directly, not using pfSense as the DNS server?

Quoting from @bmeeks' referenced link, "This is normal if the other server restarts for example, or maybe because it wants to manage the TCP connections that it has; possibly with timeouts for how long they can be used."
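On the client side, a server closing an idle TCP/TLS connection is normally handled by noticing the end of the stream and retrying on a new connection. A minimal Python sketch of that pattern for length-prefixed DNS messages; `connect` is a hypothetical callable, and this is not Unbound's actual code:

```python
import socket
import struct


def _recv_exact(sock, count, allow_eof):
    """Read count bytes; return None on a clean EOF if allow_eof and nothing read."""
    data = b""
    while len(data) < count:
        chunk = sock.recv(count - len(data))
        if not chunk:
            if allow_eof and not data:
                return None
            raise ConnectionError("peer closed the connection mid-message")
        data += chunk
    return data


def read_message(sock):
    """Read one length-prefixed DNS message, or None if the peer has closed."""
    prefix = _recv_exact(sock, 2, allow_eof=True)
    if prefix is None:
        return None
    (length,) = struct.unpack("!H", prefix)
    return _recv_exact(sock, length, allow_eof=False)


def query_with_retry(connect, message, attempts=2):
    """Send a framed query, retrying on a fresh connection if the server closed it.

    connect is a hypothetical callable returning a connected (TCP or TLS)
    socket; each attempt uses a new connection.
    """
    for _ in range(attempts):
        sock = connect()
        try:
            sock.sendall(struct.pack("!H", len(message)) + message)
            reply = read_message(sock)
            if reply is not None:
                return reply
        except OSError:
            pass  # includes ConnectionError and BrokenPipeError: try again
        finally:
            sock.close()
    raise ConnectionError(f"no reply after {attempts} attempts")
```

In this model a closed connection is a routine event that costs one retry, which fits the developer's view that the error message itself is harmless; a resolver that stops answering altogether would point to something else going wrong after the close.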

-

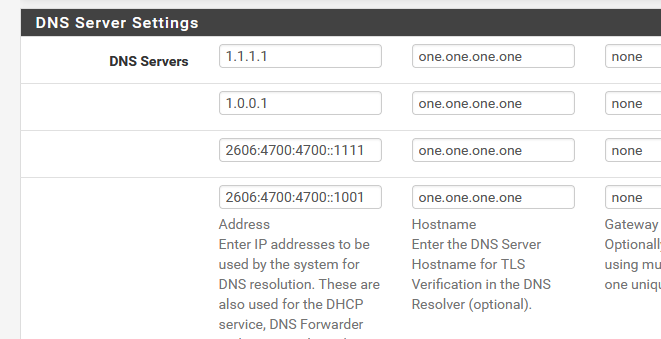

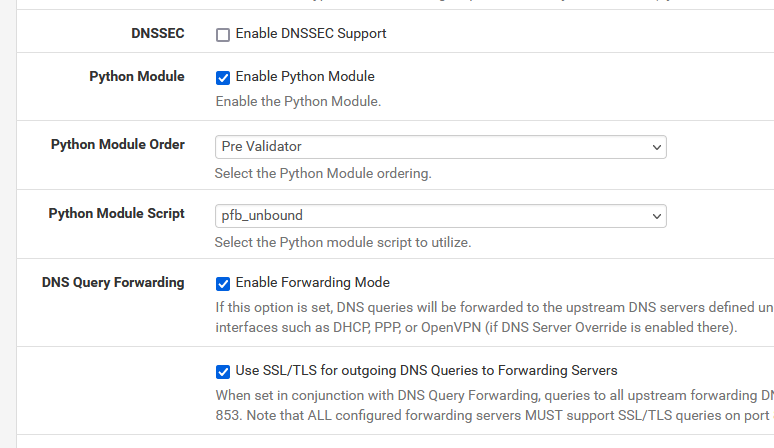

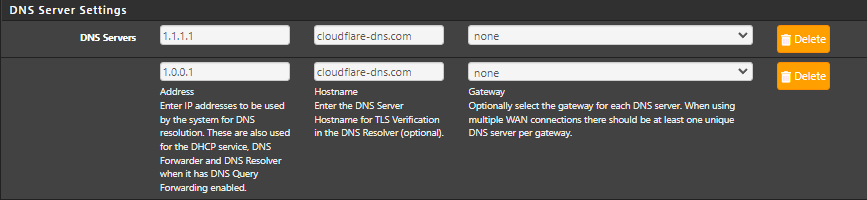

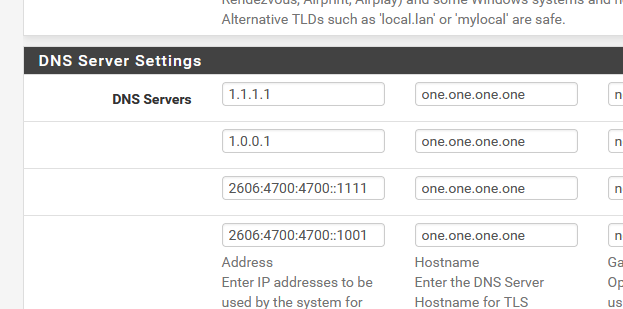

I've activated these again:

with:

When the log level was set to 3, I saw some

debug: tcp error for address 2606:4700:4700::1111 port 853

and

debug: tcp error for address 2606:4700:4700::1001 port 853

and

debug: tcp error for address 1.1.1.1 port 853

and

debug: tcp error for address 1.0.0.1 port 853

Also some repeating:

....

outnettcp got tcp error -1

outnettcp got tcp error -1

outnettcp got tcp error -1

outnettcp got tcp error -1

....

Recent reading makes me think these are rather harmless.
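To see whether these errors cluster on one upstream address or spread across all of them, a few lines of Python can tally them from a saved copy of the resolver log. A sketch; the regular expression only assumes the message text shown above, not any particular log-line prefix:

```python
import re
from collections import Counter

# Matches the message text seen above, e.g.
#   debug: tcp error for address 2606:4700:4700::1111 port 853
TCP_ERROR = re.compile(r"tcp error for address (\S+) port (\d+)")


def count_tcp_errors(lines):
    """Tally 'tcp error for address X port N' messages per (address, port)."""
    counts = Counter()
    for line in lines:
        match = TCP_ERROR.search(line)
        if match:
            counts[(match.group(1), int(match.group(2)))] += 1
    return counts


def count_outnettcp_errors(lines):
    """Count the generic 'outnettcp got tcp error -1' messages."""
    return sum("outnettcp got tcp error" in line for line in lines)
```

For example, `count_tcp_errors(open("resolver-export.log")).most_common()` (the file name is a placeholder) lists the upstream servers by number of errors; comparing that with the times DNS actually stopped would show whether the errors are just noise.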

I'll leave it for the weekend.

When DNSSEC was activated, I saw that a lot of DS and other DNSSEC-related records were requested. DNS resolution worked just fine, though.

-

Mmm, it looks like log level 4 might be needed to see any additional logging associated with those errors.

-

@stephenw10 said in Major DNS Bug 23.01 with Quad9 on SSL:

level 4

shows 'nothing' special.

And what you can't see doesn't exist ;)

I'm forwarding to 1.1.1.1 etc. over port 853 right now; see the setup above.

I even have DNSSEC activated plus "Harden DNSSEC Data" (on the Resolver Advanced Settings page)... because, as Clause Kellerman always says: "why not!!".

I'll leave it like this for the weekend and get back to this on Monday morning.

I did some banking, sent some mails, received a ton of mail, all my colleagues are doing their TikTok things too, and no one came complaining to me (they know where to look) to tell me to stop "messing with the connection".

-

@gertjan

Hi, I don't see those errors here. I activated log level 4.

I use the Cloudflare DNS servers, 1.1.1.1 and 1.0.0.1.

But I use another hostname for the TLS verification.

Sorry, I take it back. It was on log level 3.

-

As far as I’m concerned, whether you use Quad9, Google or anything else, 23.01 has an outstanding bug with DoT in forwarding mode (DNSSEC unchecked): whatever config you use, you will get « FAILED TO RESOLVE HOST » in DoT mode for every alias that has to resolve to a dynamic DNS name (xxxx.dyndns.org).

-

Here is log level 3.

From pfSense's own resolution of ALIASES with dynamic DNS (xxxx.dyndns.org):

Apr 7 21:33:30 unbound 88702 [88702:0] info: finishing processing for vrac-nicolas.dyndns.org.jimbohello.arpa. AAAA IN

Apr 7 21:33:30 unbound 88702 [88702:0] info: query response was NXDOMAIN ANSWER

Apr 7 21:33:30 unbound 88702 [88702:0] info: reply from <.> 1.1.1.1#853

Apr 7 21:33:30 unbound 88702 [88702:0] info: response for vrac-nicolas.dyndns.org.jimbohello.arpa. AAAA IN

Apr 7 21:33:30 unbound 88702 [88702:0] info: iterator operate: query vrac-nicolas.dyndns.org.jimbohello.arpa. AAAA IN

Apr 7 21:33:30 unbound 88702 [88702:0] debug: iterator[module 0] operate: extstate:module_wait_reply event:module_event_reply

From the client side (LAN):

Apr 7 21:38:42 unbound 88702 [88702:0] info: finishing processing for vrac-nicolas.dyndns.org. A IN

Apr 7 21:38:42 unbound 88702 [88702:0] info: query response was ANSWER

Apr 7 21:38:42 unbound 88702 [88702:0] info: reply from <.> 8.8.8.8#853

Apr 7 21:38:42 unbound 88702 [88702:0] info: response for vrac-nicolas.dyndns.org. A IN

Apr 7 21:38:42 unbound 88702 [88702:0] info: iterator operate: query vrac-nicolas.dyndns.org. A IN

JESUS, I FOUND THE ISSUE, I GUESS:

WHY IS PFSENSE ITSELF TRYING TO RESOLVE

vrac-nicolas.dyndns.org.jimbohello.arpa

when it's supposed to be vrac-nicolas.dyndns.org?

pfSense is adding its own domain part! No wonder it can't resolve.

-

@jimbohello said in Major DNS Bug 23.01 with Quad9 on SSL:

vrac-nicolas.dyndns.org.jimbohello.arpa

when it suppose to be vrac-nicolas.dyndns.org

pfsense is adding the domain part of itself ! no wonder why it can't resolve

Your Windows PC is doing the same thing...

Have a look at what 'nslookup' does:

C:\Users\Gauche>nslookup
Default Server: pfSense.mydomain.tld
Address: 2a01:cb19:beef:a6dc::1

> set debug
> google.com
Server: pfSense.mydomain.tld
Address: 2a01:cb19:beef:a6dc::1

------------

Got answer:
HEADER:
opcode = QUERY, id = 2, rcode = NXDOMAIN
header flags: response, want recursion, recursion avail.
questions = 1, answers = 0, authority records = 1, additional = 0
QUESTIONS:
google.com.mydomain.tld, type = A, class = IN
AUTHORITY RECORDS:
-> mydomain.tld
ttl = 446 (7 mins 26 secs)
primary name server = ns1.mydomain.tld
responsible mail addr = postmaster.mydomain.tld
serial = 2023020723
refresh = 14400 (4 hours)
retry = 3600 (1 hour)
expire = 1209600 (14 days)
default TTL = 10800 (3 hours)

------------

------------

Got answer:
HEADER:
opcode = QUERY, id = 3, rcode = NXDOMAIN
header flags: response, want recursion, recursion avail.
questions = 1, answers = 0, authority records = 1, additional = 0
QUESTIONS:
google.com.mydomain.tld.net, type = AAAA, class = IN
AUTHORITY RECORDS:
-> mydomain.tld
ttl = 446 (7 mins 26 secs)
primary name server = ns1.mydomain.tld
responsible mail addr = postmaster.mydomain.tld
serial = 2023020723
refresh = 14400 (4 hours)
retry = 3600 (1 hour)
expire = 1209600 (14 days)
default TTL = 10800 (3 hours)

------------

------------

Got answer:
HEADER:
opcode = QUERY, id = 4, rcode = NOERROR
header flags: response, want recursion, recursion avail.
questions = 1, answers = 1, authority records = 0, additional = 0
QUESTIONS:
google.com, type = A, class = IN
ANSWERS:
-> google.com
internet address = 142.250.74.238
ttl = 30 (30 secs)

------------

Non-authoritative answer:

------------

Got answer:
HEADER:
opcode = QUERY, id = 5, rcode = NOERROR
header flags: response, want recursion, recursion avail.
questions = 1, answers = 1, authority records = 0, additional = 0
QUESTIONS:
google.com, type = AAAA, class = IN
ANSWERS:
-> google.com
AAAA IPv6 address = 2a00:1450:4007:80c::200e
ttl = 30 (30 secs)

------------

Name: google.com
Addresses: 2a00:1450:4007:80c::200e
142.250.74.238
>

You saw what happened?

I wanted details (fact checking), so I used 'set debug' first. It then showed that when I look up a domain, it first appends the local PC domain, mydomain.tld.

Because... we (you and I) are doing it wrong.

When you want to do a DNS lookup, you have to ask:

google.com.

The final dot is important.

It's not really an issue.

If I want to look up the IP of my PC, called 'gauche2' (which is just the host name, not the FQDN!), nslookup again appends mydomain.tld. and this time asks pfSense for

gauche2.mydomain.tld

and that is a 'good' question: I got an IPv4 and an IPv6 address, as nslookup asks for both by default.

So, not really an error, and you could consider adding a final dot if the GUI accepts it (it does, I guess).
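The behaviour in the debug output can be modelled in a few lines: a name with a trailing dot is absolute and sent as-is, while a name without one gets the search domain(s) appended first. A simplified Python sketch that mirrors the order nslookup used above; other resolvers order the candidates differently (for example, the `ndots` option in resolv.conf lets names with enough dots be tried as-is first):

```python
def query_candidates(name, search_domains):
    """Return the absolute names a stub resolver would try, in order.

    Simplified model matching the nslookup debug output above:
    - a name ending in "." is already absolute and is tried as-is, alone;
    - otherwise every search domain is appended first, and the name itself
      (made absolute) is tried last.
    """
    if name.endswith("."):
        return [name]
    candidates = [f"{name}.{domain.strip('.')}." for domain in search_domains]
    candidates.append(f"{name}.")
    return candidates
```

With `search_domains=["jimbohello.arpa"]`, the first candidate for `vrac-nicolas.dyndns.org` is exactly the `vrac-nicolas.dyndns.org.jimbohello.arpa.` seen in the resolver log, while `vrac-nicolas.dyndns.org.` (with the final dot) skips the search domain entirely.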

Btw: when Unbound receives "google.com.mydomain.tld." as the request, it knows that it is authoritative for "mydomain.tld.", so it isn't going to ask upstream for details about "mydomain.tld.": after all, Unbound handles "mydomain.tld", and the upstream resolver (normally) doesn't know anything about local domains and resources.

I'm not going to ask 9.9.9.9 or 1.1.1.1 for FQDN info about the devices in my LAN; that wouldn't be logical.

Btw: I have the Resolver/Unbound "System Domain Local Zone Type" set to "Static", not to the (default?) "Transparent".
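Per the unbound.conf documentation, both zone types answer from local data when a name has a local entry; they differ when it does not: "static" answers NXDOMAIN or NODATA itself, while "transparent" resolves the query normally, which in forwarding mode means sending it upstream. A rough Python model of that decision; record types are ignored and only these two zone types are covered:

```python
def local_zone_action(qname, zone, zone_type, local_names):
    """Rough model of Unbound's handling of a query against one local-zone.

    local_names holds the names that have local-data (e.g. host overrides or
    registered DHCP leases). Only "static" and "transparent" are modelled.
    """
    def norm(name):
        return name.rstrip(".").lower() + "."

    qname, zone = norm(qname), norm(zone)
    local = {norm(name) for name in local_names}
    if qname != zone and not qname.endswith("." + zone):
        return "resolve normally"  # not under this local zone at all
    if qname in local:
        return "answer from local data"
    if zone_type == "static":
        return "answer NXDOMAIN/NODATA locally"
    if zone_type == "transparent":
        return "resolve normally"  # i.e. forwarded upstream in forwarding mode
    raise ValueError(f"zone type not modelled here: {zone_type}")
```

So a mistakenly suffixed name such as `vrac-nicolas.dyndns.org.jimbohello.arpa` is answered locally with "Static", but goes upstream with "Transparent"; either way it fails, just at a different place.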

When it is set to "Transparent", Unbound will ask 9.9.9.9 to resolve "vrac-nicolas.dyndns.org.jimbohello.arpa.", which, no surprise, will give no answer or an "NXDOMAIN", as this domain is unknown (or "new"?) to 9.9.9.9.

Edit: since yesterday, I'm doing the forwarding thing, DoT to:

No issues whatsoever.

-

Well, I am 95% sure I fixed my issue. I decided to switch DNS over TLS back on, and after a couple of hours I had the dreaded DNS failures. This time I removed the ntopng package completely. ntopng had been monitoring both LAN and WAN since Nov 22, under 22.05 and then under 23.01 since the release candidate.

Since I removed ntopng, DNS over TLS through Cloudflare has been running OK for 24 hours. Browsing websites is super quick. My machine and bandwidth were never under stress; however, since removing ntopng, websites and even the pfSense web interface itself load drastically faster. pfBlockerNG is showing around 20k DNS entries per hour, which is normal load.

-

Never mind, I thought I had fixed it, but after more than 24 hours it happened again. I will just stay in Unbound resolver mode for now and leave it be. That at least seems to be stable and working.

-

I’ve tried Static! None of the dyndns names in my aliases resolve.

Before, on 22.05, it was set to Transparent and I had no issues.

I found a workaround:

Instead of regular network/host aliases, I used « URL IP table aliases » with an update frequency of 1 day. Now it’s working as expected!

-

To be clear you created a file with the dyndns FQDNs in it hosted locally and added that as the URL Table location?

-

Exactly:

Alias type: URL IP table

Hosted on a web server

Http://server.com/mydnamicdns.txt

All my dynamic DNS names in that file

DoT activated

All good, ninja style
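A hypothetical helper for checking such a file before pointing the alias at it, for instance from a LAN machine that uses pfSense as its DNS server: it separates IP/CIDR lines from host names and resolves the names through the system resolver. How pfSense itself parses URL table files is not shown here, and the `#` comment syntax is an assumption:

```python
import ipaddress
import socket


def split_alias_entries(text):
    """Split URL-table text into IP/CIDR entries and host names.

    Assumes one entry per line, '#' starting a comment, blank lines ignored.
    """
    networks, hostnames = [], []
    for raw in text.splitlines():
        entry = raw.split("#", 1)[0].strip()
        if not entry:
            continue
        try:
            networks.append(ipaddress.ip_network(entry, strict=False))
        except ValueError:
            hostnames.append(entry.rstrip(".").lower())
    return networks, hostnames


def resolve_all(hostnames):
    """Resolve each name with the system resolver; unresolvable names map to []."""
    results = {}
    for name in hostnames:
        try:
            infos = socket.getaddrinfo(name, None, proto=socket.IPPROTO_TCP)
        except socket.gaierror:
            results[name] = []
            continue
        results[name] = sorted({info[4][0] for info in infos})
    return results
```

For example, `resolve_all(split_alias_entries(open("mydnamicdns.txt").read())[1])` lists the addresses each dynamic DNS name currently resolves to; an empty list flags a name that fails to resolve through the current DNS path.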

-

Ok, good. I thought for a minute it was handling URL aliases incorrectly.

Well, that seems like a clue, then. Why is it resolving those entries differently?