BGP + Metallb (K8s): Intermittent Long Load Times for HTTP Traffic

-

Hi,

I'll preface this by saying I am very new to pfSense and still learning a lot about it and about networking in general. I've been chasing this issue for a few weeks and have been unable to make progress on it. I am using pfSense with FRR BGP to set up internal IP address allocation for the Metallb speakers/controller in a K3s cluster for ingress-nginx pods. These pods (2+) sit behind a K8s service that receives its LoadBalancer IP from Metallb via BGP peering with pfSense.

I pretty much followed the doc here to get things set up:

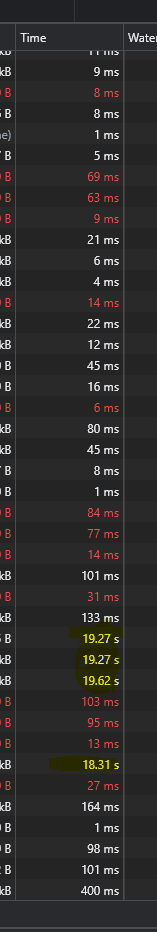

I noticed that roughly one in every ~20 requests would hang and take 15+ seconds to finally complete, as shown below.

This happens consistently and makes it rather painful to use BGP + Metallb + K3s for internal websites. I also stumbled upon another user experiencing the same thing here, and I tried various things discussed here as well, but I am still running into the same issue after some tinkering.

I was wondering if anyone else has had this same issue and was able to resolve it. Metallb also works with L2 advertisement, but the user experience is better with BGP and I would like to make it work. As stated, I am still very new to pfSense and have limited networking knowledge, but I can try my best to provide any additional info/logs for this issue.

-

How and what are you testing there to generate those results?

Do you see anything logged on either side?

Was the load-balancer working as expected before adding BGP?

Steve

-

Hey thanks for the response!

How and what are you testing there to generate those results?

- This is from the "Inspect -> Network" page from the Chrome browser. That page has the response/load times of the HTTP calls being made. Same issue happens with Edge browser, haven't tried any others yet.

- I am testing various internal web pages (Plex, Proxmox UI, custom apps, etc.) that run through reverse-proxy ingress-nginx pods (2+, but I also tried with just 1) deployed on a k3s cluster (on Proxmox VMs: a 3 leader/5 follower cluster, with app pods running only on the followers/workers).

- The Metallb pods (installed via Helm, version v0.13.9) deployed in the k3s cluster perform BGP peering with pfSense (FRR BGP) and request IP addresses in a specified subnet for any K8s service created with `spec.type: LoadBalancer`. In my case, I launch ingress-nginx (installed via Helm, version 1.6.4) with 2+ pods and a LoadBalancer service. These receive an IP address in the subnet range as expected, and I am able to use any browser to access the internal web pages behind the reverse proxy. I also have cert-manager handling certs through Let's Encrypt and Route 53 for these internal sites. I purchased a public domain for this purpose and also run Pi-hole internally, which re-points the IP address for my public domain: Pi-hole has a wildcard DNS entry pointing the public domain to the Metallb IP address.
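To reproduce the hang outside Chrome's Network tab, a small script can fetch a page repeatedly and flag the slow requests. This is only a sketch, assuming Python 3 on the client machine; the `probe` function, its parameters, and the 5-second threshold are hypothetical choices, not part of the setup described above. Proxies are disabled so the request goes straight to the internal site, and urllib sends `Connection: close`, so every attempt opens a new TCP connection (and therefore needs a new firewall state).

```python
import time
import urllib.request


def probe(url: str, count: int = 40, slow_after: float = 5.0,
          timeout: float = 60.0) -> list:
    """Fetch `url` `count` times and return (attempt, seconds) for slow requests.

    Hypothetical helper: the default thresholds are illustrative, not tuned values.
    """
    # An empty ProxyHandler ignores any proxy configured in the environment.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    slow = []
    for attempt in range(1, count + 1):
        start = time.monotonic()
        # urllib sends "Connection: close", so each attempt is a new TCP connection.
        with opener.open(url, timeout=timeout) as resp:
            resp.read()
        elapsed = time.monotonic() - start
        if elapsed > slow_after:
            slow.append((attempt, elapsed))
    return slow
```

Calling it with one of the internal site URLs returns the attempt numbers and durations of the requests that exceeded the threshold, which can then be matched against the ingress-nginx logs.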

Do you see anything logged on either side?

- I do not see much in pfSense, but as stated I am very new to pfSense and BGP, so I can try to gather something specific (a log location or wording) that you might find helpful from the window when the problem occurs. Since it occurs very often, I can get that info without too much work.

- From what I can tell though, no obvious errors in other applications in the stack. Metallb controller and speaker logs do not show anything at current/info level logging when the issue happens.

- The ingress-nginx logs are a bit more interesting, though: when HTTP calls start to hang, the request does not show up in the nginx logs at all. This gap suggests that the traffic is not even getting there (so it is not an issue with slow apps). During this hanging time, other browser tabs/sessions I have open to other internal sites work fine, but they also experience the same issue later. Does this point to a per-connection issue? That led me to experiment with the TCP timeout values in the firewall advanced settings, but the same issue still exists. When the hanging requests finally reach ingress-nginx, they all arrive at what appears to be exactly the same time.

Was the load-balancer working as expected before adding BGP?

- So I had this exact same setup working with a UniFi USG and did not run into this specific issue before. When the USG started randomly shutting down, I replaced it with a pfSense router (and attempted to recreate the BGP config via FRR), and the problem started occurring. So I came here hoping someone else had a similar issue and resolved it.

- As linked, this exact issue appears for other users, so at this point I do believe it's something pfSense-related. I just don't have enough knowledge in this area to identify the problem.

- I've also tried tuning ingress-nginx several times to see if it would help, for example changing client/read/proxy timeouts and tuning HTTP/2, but I was unable to resolve the issue.

- The problem only seems to happen for internal sites I run.

-

Additional info from FRR Status section in pfsense that may help?

My router ip address is set at 10.0.0.1 and the BGP router-id is set to something outside of any used subnet (10.100.100.69)

Zebra Routes

    Codes: K - kernel route, C - connected, S - static, R - RIP,
           O - OSPF, I - IS-IS, B - BGP, E - EIGRP, N - NHRP,
           T - Table, v - VNC, V - VNC-Direct, A - Babel, D - SHARP,
           F - PBR, f - OpenFabric,
           > - selected route, * - FIB route, q - queued, r - rejected, b - backup

    K>* 0.0.0.0/0 [0/0] via REDACTED_PUB_IP, 20:11:36
    C>* 10.0.0.0/24 [0/1] is directly connected, igb1, 20:11:36
    K * 10.0.20.0/24 [0/0] via 10.0.20.2, 20:11:36
    C>* 10.0.20.0/24 [0/1] is directly connected, ovpns1, 20:11:36
    B>* 10.0.30.250/32 [200/0] via 10.0.0.220, igb1, weight 1, 00:19:25
      *                        via 10.0.0.221, igb1, weight 1, 00:19:25
      *                        via 10.0.0.222, igb1, weight 1, 00:19:25
      *                        via 10.0.0.223, igb1, weight 1, 00:19:25
      *                        via 10.0.0.224, igb1, weight 1, 00:19:25
    C>* REDACTED_PUB_IP/22 [0/1] is directly connected, igb0, 20:11:36

Zebra IPv6 Routes

    Codes: K - kernel route, C - connected, S - static, R - RIPng,
           O - OSPFv3, I - IS-IS, B - BGP, N - NHRP, T - Table,
           v - VNC, V - VNC-Direct, A - Babel, D - SHARP, F - PBR,
           f - OpenFabric,
           > - selected route, * - FIB route, q - queued, r - rejected, b - backup

    K>* ::/0 [0/0] via REDACTED_PUB_IP, 20:11:36
    C>* REDACTED::/64 [0/1] is directly connected, igb0, 20:11:36
    C>* REDACTED::38/128 [0/1] is directly connected, igb0, 20:11:36
    C * fe80::/64 [0/1] is directly connected, ovpns1, 20:11:36
    C>* fe80::/64 [0/1] is directly connected, lo0, 20:11:36
    C * fe80::/64 [0/1] is directly connected, igb1, 20:11:36
    C * fe80::/64 [0/1] is directly connected, igb0, 20:11:36

BGP Routes

    BGP table version is 10, local router ID is 10.100.100.69, vrf id 0
    Default local pref 100, local AS 64512
    Status codes:  s suppressed, d damped, h history, * valid, > best, = multipath,
                   i internal, r RIB-failure, S Stale, R Removed
    Nexthop codes: @NNN nexthop's vrf id, < announce-nh-self
    Origin codes:  i - IGP, e - EGP, ? - incomplete

       Network          Next Hop       Metric LocPrf Weight Path
    *>i10.0.30.250/32   10.0.0.220     0 0 i
    *=i                 10.0.0.222     0 0 i
    *=i                 10.0.0.223     0 0 i
    *=i                 10.0.0.224     0 0 i
    *=i                 10.0.0.221     0 0 i

    Displayed 1 routes and 5 total paths

BGP IPv6 Routes

    No BGP prefixes displayed, 0 exist

BGP Summary

    IPv4 Unicast Summary:
    BGP router identifier 10.100.100.69, local AS number 64512 vrf-id 0
    BGP table version 10
    RIB entries 1, using 192 bytes of memory
    Peers 5, using 71 KiB of memory
    Peer groups 1, using 64 bytes of memory

    Neighbor     V     AS  MsgRcvd  MsgSent  TblVer  InQ  OutQ   Up/Down  State/PfxRcd  PfxSnt
    10.0.0.220   4  64512     2430     2419       0    0     0  00:19:25             1       0
    10.0.0.221   4  64512     2432     2420       0    0     0  00:19:26             1       0
    10.0.0.222   4  64512     2431     2420       0    0     0  00:19:26             1       0
    10.0.0.224   4  64512     2432     2421       0    0     0  00:19:26             1       0
    10.0.0.223   4  64512     2432     2421       0    0     0  00:19:26             1       0

    Total number of neighbors 5

BGP Neighbor

    BGP neighbor is 10.0.0.220, remote AS 64512, local AS 64512, internal link
    Hostname: k3s-worker-0
     Member of peer-group metallb-k8 for session parameters
      BGP version 4, remote router ID 10.0.0.220, local router ID 10.100.100.69
      BGP state = Established, up for 00:19:25
      Last read 00:00:25, Last write 00:00:24
      Hold time is 90, keepalive interval is 30 seconds
      Neighbor capabilities:
        4 Byte AS: advertised and received
        AddPath:
          IPv4 Unicast: RX advertised IPv4 Unicast
        Route refresh: advertised
        Address Family IPv4 Unicast: advertised and received
        Address Family IPv6 Unicast: received
        Hostname Capability: advertised (name: pfSense.localdomain,domain name: n/a) not received
        Graceful Restart Capability: advertised
      Graceful restart information:
        Local GR Mode: Helper*
        Remote GR Mode: Disable
        R bit: False
        Timers:
          Configured Restart Time(sec): 120
          Received Restart Time(sec): 0
      Message statistics:
        Inq depth is 0
        Outq depth is 0
                             Sent       Rcvd
        Opens:                  4          4
        Notifications:          0          0
        Updates:                1          6
        Keepalives:          2414       2418
        Route Refresh:          0          2
        Capability:             0          0
        Total:               2419       2430
      Minimum time between advertisement runs is 0 seconds

     For address family: IPv4 Unicast
      metallb-k8 peer-group member
      Update group 6, subgroup 12
      Packet Queue length 0
      Community attribute sent to this neighbor(large)
      1 accepted prefixes

      Connections established 4; dropped 3
      Last reset 00:22:04, No AFI/SAFI activated for peer
    Local host: 10.0.0.1, Local port: 179
    Foreign host: 10.0.0.220, Foreign port: 42481
    Nexthop: 10.0.0.1
    Nexthop global: fe80::6662:66ff:fe21:5b9d
    Nexthop local: fe80::6662:66ff:fe21:5b9d
    BGP connection: shared network
    BGP Connect Retry Timer in Seconds: 120
    Read thread: on  Write thread: on  FD used: 28

    BGP neighbor is 10.0.0.221, remote AS 64512, local AS 64512, internal link
    Hostname: k3s-worker-1
     Member of peer-group metallb-k8 for session parameters
      BGP version 4, remote router ID 10.0.0.221, local router ID 10.100.100.69
      BGP state = Established, up for 00:19:26
      Last read 00:00:26, Last write 00:00:24
      Hold time is 90, keepalive interval is 30 seconds
      Neighbor capabilities:
        4 Byte AS: advertised and received
        AddPath:
          IPv4 Unicast: RX advertised IPv4 Unicast
        Route refresh: advertised
        Address Family IPv4 Unicast: advertised and received
        Address Family IPv6 Unicast: received
        Hostname Capability: advertised (name: pfSense.localdomain,domain name: n/a) not received
        Graceful Restart Capability: advertised
      Graceful restart information:
        Local GR Mode: Helper*
        Remote GR Mode: Disable
        R bit: False
        Timers:
          Configured Restart Time(sec): 120
          Received Restart Time(sec): 0
      Message statistics:
        Inq depth is 0
        Outq depth is 0
                             Sent       Rcvd
        Opens:                  4          4
        Notifications:          0          0
        Updates:                1          6
        Keepalives:          2415       2420
        Route Refresh:          0          2
        Capability:             0          0
        Total:               2420       2432
      Minimum time between advertisement runs is 0 seconds

     For address family: IPv4 Unicast
      metallb-k8 peer-group member
      Update group 6, subgroup 12
      Packet Queue length 0
      Community attribute sent to this neighbor(large)
      1 accepted prefixes

      Connections established 4; dropped 3
      Last reset 00:22:03, No AFI/SAFI activated for peer
    Local host: 10.0.0.1, Local port: 179
    Foreign host: 10.0.0.221, Foreign port: 60415
    Nexthop: 10.0.0.1
    Nexthop global: fe80::6662:66ff:fe21:5b9d
    Nexthop local: fe80::6662:66ff:fe21:5b9d
    BGP connection: shared network
    BGP Connect Retry Timer in Seconds: 120
    Read thread: on  Write thread: on  FD used: 24

    BGP neighbor is 10.0.0.222, remote AS 64512, local AS 64512, internal link
    Hostname: k3s-worker-2
     Member of peer-group metallb-k8 for session parameters
      BGP version 4, remote router ID 10.0.0.222, local router ID 10.100.100.69
      BGP state = Established, up for 00:19:26
      Last read 00:00:26, Last write 00:00:24
      Hold time is 90, keepalive interval is 30 seconds
      Neighbor capabilities:
        4 Byte AS: advertised and received
        AddPath:
          IPv4 Unicast: RX advertised IPv4 Unicast
        Route refresh: advertised
        Address Family IPv4 Unicast: advertised and received
        Address Family IPv6 Unicast: received
        Hostname Capability: advertised (name: pfSense.localdomain,domain name: n/a) not received
        Graceful Restart Capability: advertised
      Graceful restart information:
        Local GR Mode: Helper*
        Remote GR Mode: Disable
        R bit: False
        Timers:
          Configured Restart Time(sec): 120
          Received Restart Time(sec): 0
      Message statistics:
        Inq depth is 0
        Outq depth is 0
                             Sent       Rcvd
        Opens:                  4          4
        Notifications:          0          0
        Updates:                1          6
        Keepalives:          2415       2419
        Route Refresh:          0          2
        Capability:             0          0
        Total:               2420       2431
      Minimum time between advertisement runs is 0 seconds

     For address family: IPv4 Unicast
      metallb-k8 peer-group member
      Update group 6, subgroup 12
      Packet Queue length 0
      Community attribute sent to this neighbor(large)
      1 accepted prefixes

      Connections established 4; dropped 3
      Last reset 00:22:03, No AFI/SAFI activated for peer
    Local host: 10.0.0.1, Local port: 179
    Foreign host: 10.0.0.222, Foreign port: 52305
    Nexthop: 10.0.0.1
    Nexthop global: fe80::6662:66ff:fe21:5b9d
    Nexthop local: fe80::6662:66ff:fe21:5b9d
    BGP connection: shared network
    BGP Connect Retry Timer in Seconds: 120
    Read thread: on  Write thread: on  FD used: 27

    BGP neighbor is 10.0.0.224, remote AS 64512, local AS 64512, internal link
    Hostname: k3s-worker-4
     Member of peer-group metallb-k8 for session parameters
      BGP version 4, remote router ID 10.0.0.224, local router ID 10.100.100.69
      BGP state = Established, up for 00:19:26
      Last read 00:00:26, Last write 00:00:24
      Hold time is 90, keepalive interval is 30 seconds
      Neighbor capabilities:
        4 Byte AS: advertised and received
        AddPath:
          IPv4 Unicast: RX advertised IPv4 Unicast
        Route refresh: advertised
        Address Family IPv4 Unicast: advertised and received
        Address Family IPv6 Unicast: received
        Hostname Capability: advertised (name: pfSense.localdomain,domain name: n/a) not received
        Graceful Restart Capability: advertised
      Graceful restart information:
        Local GR Mode: Helper*
        Remote GR Mode: Disable
        R bit: False
        Timers:
          Configured Restart Time(sec): 120
          Received Restart Time(sec): 0
      Message statistics:
        Inq depth is 0
        Outq depth is 0
                             Sent       Rcvd
        Opens:                  4          4
        Notifications:          0          0
        Updates:                1          6
        Keepalives:          2416       2420
        Route Refresh:          0          2
        Capability:             0          0
        Total:               2421       2432
      Minimum time between advertisement runs is 0 seconds

     For address family: IPv4 Unicast
      metallb-k8 peer-group member
      Update group 6, subgroup 12
      Packet Queue length 0
      Community attribute sent to this neighbor(large)
      1 accepted prefixes

      Connections established 4; dropped 3
      Last reset 00:22:03, No AFI/SAFI activated for peer
    Local host: 10.0.0.1, Local port: 179
    Foreign host: 10.0.0.224, Foreign port: 55361
    Nexthop: 10.0.0.1
    Nexthop global: fe80::6662:66ff:fe21:5b9d
    Nexthop local: fe80::6662:66ff:fe21:5b9d
    BGP connection: shared network
    BGP Connect Retry Timer in Seconds: 120
    Read thread: on  Write thread: on  FD used: 25

    BGP neighbor is 10.0.0.223, remote AS 64512, local AS 64512, internal link
    Hostname: k3s-worker-3
      BGP version 4, remote router ID 10.0.0.223, local router ID 10.100.100.69
      BGP state = Established, up for 00:19:26
      Last read 00:00:26, Last write 00:00:24
      Hold time is 90, keepalive interval is 30 seconds
      Neighbor capabilities:
        4 Byte AS: advertised and received
        AddPath:
          IPv4 Unicast: RX advertised IPv4 Unicast
        Route refresh: advertised
        Address Family IPv4 Unicast: advertised and received
        Address Family IPv6 Unicast: received
        Hostname Capability: advertised (name: pfSense.localdomain,domain name: n/a) not received
        Graceful Restart Capability: advertised
      Graceful restart information:
        Local GR Mode: Helper*
        Remote GR Mode: Disable
        R bit: False
        Timers:
          Configured Restart Time(sec): 120
          Received Restart Time(sec): 0
      Message statistics:
        Inq depth is 0
        Outq depth is 0
                             Sent       Rcvd
        Opens:                  4          4
        Notifications:          0          0
        Updates:                1          6
        Keepalives:          2416       2420
        Route Refresh:          0          2
        Capability:             0          0
        Total:               2421       2432
      Minimum time between advertisement runs is 0 seconds

     For address family: IPv4 Unicast
      Update group 7, subgroup 13
      Packet Queue length 0
      Community attribute sent to this neighbor(large)
      1 accepted prefixes

      Connections established 4; dropped 3
      Last reset 00:22:03, No AFI/SAFI activated for peer
    Local host: 10.0.0.1, Local port: 179
    Foreign host: 10.0.0.223, Foreign port: 41171
    Nexthop: 10.0.0.1
    Nexthop global: fe80::6662:66ff:fe21:5b9d
    Nexthop local: fe80::6662:66ff:fe21:5b9d
    BGP connection: shared network
    BGP Connect Retry Timer in Seconds: 120
    Read thread: on  Write thread: on  FD used: 26

-

Where exactly are you running the browser http test from?

The time periods you're seeing are about what I might expect for ICMP redirects in an asymmetric routing situation. And it would not surprise me to find that the Unifi just deals with that for you behind the scenes.

Otherwise I think I'd be trying to capture this in a pcap in the pfSense internal interface. You would be able to confirm that the traffic is not leaving pfSense and we can then look at why. Lack of a route is what I'd expect there but if it was I'd expect to see something in the routing logs.

Steve

-

Hey thanks for the follow up!

Where exactly are you running the browser http test from?

- I am running these from a Windows 11 desktop on the 10.0.0.0/24 subnet, and it gets its IP address from a pfSense DHCP lease.

- Metallb is set to use IP addresses from:

      spec:
        addresses:
        - 10.0.30.6-10.0.30.250

- In my case, the ingress-nginx pods use a service that is assigned the 10.0.30.250 IP address.
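Worth noting about this pool: it does not overlap the LAN (10.0.0.0/24), so a LAN client has to send traffic for 10.0.30.250 to its default gateway, pfSense. A quick way to sanity-check a pool against the LAN, sketched with Python's standard `ipaddress` module (the `pool_report` helper is hypothetical, not a Metallb tool), is to collapse the range into CIDR blocks and test each block against the LAN subnet:

```python
import ipaddress


def pool_report(first: str, last: str, lan: str) -> dict:
    """Summarise an address range (as written in a Metallb pool) against a LAN.

    Hypothetical helper; `lan` may be written as "10.0.0.1/24" or "10.0.0.0/24".
    """
    start = ipaddress.IPv4Address(first)
    end = ipaddress.IPv4Address(last)
    # strict=False accepts a host address with a prefix, e.g. the router's 10.0.0.1/24.
    lan_net = ipaddress.IPv4Network(lan, strict=False)
    # Collapse the range into the smallest list of CIDR blocks that covers it exactly.
    blocks = list(ipaddress.summarize_address_range(start, end))
    return {
        "size": int(end) - int(start) + 1,
        "blocks": [str(b) for b in blocks],
        "overlaps_lan": any(b.overlaps(lan_net) for b in blocks),
    }
```

For the range above, `pool_report("10.0.30.6", "10.0.30.250", "10.0.0.1/24")` reports 245 addresses, starting with the block 10.0.30.6/31 and ending with 10.0.30.250/32, and `overlaps_lan` is False.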

I apologize, I didn't have time today to look into more details, but I will do some more digging tomorrow. I should have some time to do a pcap capture through the GUI or tcpdump, but I am not very well versed in BGP yet, so I will need to do some reading on BGP routing. Thank you very much, I appreciate your time. I will post my pcap findings here when I get a chance.

-

Hey thanks again for working with me on this issue, I am very appreciative.

Attached is a pcap dump done through pfsense GUI (I can also run tcpdump locally if needed). I can definitely provide more information if needed.

10.0.30.250 is the IP address for the ingress-nginx service running in k3s; it receives that IP from Metallb + pfSense. 10.0.0.220 is the k3s worker node where ingress-nginx is deployed. It is in the default subnet (10.0.0.0/24) but outside the DHCP client range. I added a DHCP reservation for this node so it will use a specific IP address.

10.0.0.24 is the laptop I was accessing the webpages from and is on my default subnet. During the test/recreation of the issue, I encountered the problem twice and it should be in the pcap. I am not sure what to look for but I do see ICMP redirects. I am looking forward to seeing what you find and getting closer to resolving this issue!
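To pull the redirects out of a capture programmatically, something like the following sketch could list them. It assumes the file downloaded from pfSense's packet capture page is a classic libpcap file (not pcapng) of Ethernet frames; the `find_icmp_redirects` function is hypothetical and only decodes IPv4 ICMP type 5 (Redirect, RFC 792). For each redirect it reports who sent it, which host is being redirected, the destination the redirect is about, and the gateway that host is told to use instead.

```python
import ipaddress
import struct

ICMP_REDIRECT = 5  # ICMP type 5 = Redirect (RFC 792)


def find_icmp_redirects(data: bytes):
    """Yield (sender, redirected_host, destination, gateway) for every IPv4 ICMP
    redirect in a classic libpcap capture of Ethernet frames (hypothetical helper)."""
    magic = data[:4]
    if magic in (b"\xd4\xc3\xb2\xa1", b"\x4d\x3c\xb2\xa1"):
        endian = "<"  # little-endian file (microsecond or nanosecond timestamps)
    elif magic in (b"\xa1\xb2\xc3\xd4", b"\xa1\xb2\x3c\x4d"):
        endian = ">"
    else:
        raise ValueError("not a classic pcap file (pcapng is not handled)")
    (linktype,) = struct.unpack(endian + "I", data[20:24])
    if linktype != 1:  # 1 = Ethernet
        raise ValueError(f"unsupported link type {linktype}")

    offset = 24  # skip the 24-byte global header
    while offset + 16 <= len(data):
        _, _, incl_len, _ = struct.unpack(endian + "IIII", data[offset:offset + 16])
        frame = data[offset + 16:offset + 16 + incl_len]
        offset += 16 + incl_len
        if len(frame) < 14:
            continue
        (ethertype,) = struct.unpack("!H", frame[12:14])
        l3 = 14
        if ethertype == 0x8100 and len(frame) >= 18:  # skip one 802.1Q VLAN tag
            (ethertype,) = struct.unpack("!H", frame[16:18])
            l3 = 18
        ip = frame[l3:]
        if ethertype != 0x0800 or len(ip) < 20 or ip[9] != 1:  # want IPv4 carrying ICMP
            continue
        icmp = ip[(ip[0] & 0x0F) * 4:]
        # Redirect = 8-byte ICMP header (gateway in bytes 4-8) + the original IP header.
        if len(icmp) < 28 or icmp[0] != ICMP_REDIRECT:
            continue
        yield (
            str(ipaddress.IPv4Address(ip[12:16])),    # who sent the redirect
            str(ipaddress.IPv4Address(ip[16:20])),    # host being redirected
            str(ipaddress.IPv4Address(icmp[24:28])),  # destination in the quoted header
            str(ipaddress.IPv4Address(icmp[4:8])),    # gateway the host should use
        )
```

Reading a saved capture with `open(path, "rb").read()` and passing the bytes in gives one tuple per redirect; a redirect telling 10.0.0.24 to reach 10.0.30.250 via 10.0.0.220 would come out as `('10.0.0.1', '10.0.0.24', '10.0.30.250', '10.0.0.220')`.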

Thanks,

Peter

-

Ok, a diagram might be useful here just to remove confusion.

However I think that is your problem:

    22:35:53.091736 IP 10.0.0.1 > 10.0.0.24: ICMP redirect 10.0.30.250 to host 10.0.0.220, length 72

As I understand it, that is pfSense (10.0.0.1) redirecting your test host (10.0.0.24) to access nginx (10.0.30.250) via 10.0.0.220.

It does that because pfSense has a route via that and it's telling the host it can access it directly without having to go through pfSense.
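The condition described here can be sketched as a simplified version of the classic router rule for sending redirects: the packet would leave on the same interface it arrived on, and both the sender and the chosen next hop are in that interface's subnet, so the sender could have skipped the router. This is an illustration only, not pfSense's or FreeBSD's actual code; the `lookup` and `would_redirect` helpers, the WAN next hop, and all of the post-VLAN values (interface igb1.10, worker 10.0.10.220) are hypothetical, and only the first of the five ECMP next hops is modelled.

```python
import ipaddress

# Hypothetical route tables (prefix, next hop, interface); next hop None = connected.
# BEFORE follows the Zebra output in this thread, keeping only the first ECMP next hop;
# the WAN next hop and everything in AFTER (igb1.10, 10.0.10.220) are made up.
BEFORE = [("0.0.0.0/0", "192.0.2.1", "igb0"),
          ("10.0.0.0/24", None, "igb1"),
          ("10.0.30.250/32", "10.0.0.220", "igb1")]
AFTER = [("0.0.0.0/0", "192.0.2.1", "igb0"),
         ("10.0.0.0/24", None, "igb1"),
         ("10.0.10.0/24", None, "igb1.10"),
         ("10.0.30.250/32", "10.0.10.220", "igb1.10")]
SUBNETS = {"igb1": "10.0.0.0/24", "igb1.10": "10.0.10.0/24"}


def lookup(dst, routes):
    """Longest-prefix match: return the (prefix, next_hop, iface) entry for dst."""
    addr = ipaddress.ip_address(dst)
    matches = [r for r in routes if addr in ipaddress.ip_network(r[0])]
    if not matches:
        raise LookupError(f"no route to {dst}")
    return max(matches, key=lambda r: ipaddress.ip_network(r[0]).prefixlen)


def would_redirect(src, dst, in_iface, routes, subnets=SUBNETS):
    """Simplified redirect rule: the packet goes back out its arrival interface to a
    next hop in the sender's own subnet, so the sender could reach it directly."""
    _, next_hop, out_iface = lookup(dst, routes)
    next_hop = next_hop or dst  # connected route: deliver to the destination itself
    if out_iface != in_iface:
        return False
    subnet = ipaddress.ip_network(subnets[in_iface])
    return (ipaddress.ip_address(src) in subnet
            and ipaddress.ip_address(next_hop) in subnet)
```

With the original routes, a packet from 10.0.0.24 to 10.0.30.250 arriving on igb1 satisfies the rule; once the workers sit on their own VLAN subnet, the next hop is on a different interface and no redirect is expected.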

So the host will do that until the ICMP redirect expires, at which point it will start sending traffic to pfSense again, but pfSense will block it because it will be TCP traffic that is out of state. I would expect to see that blocked traffic in the firewall log.
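To see why a connection that worked at first gets blocked later, here is a deliberately simplified toy model of stateful TCP filtering. It is not how pf tracks state internally (pf also checks TCP sequence windows and uses per-phase timeouts); in the toy, only a bare SYN may create a state entry, similar in spirit to pf's default `flags S/SA`, and any other segment passes only if it matches a live entry. The class name, the single `state_ttl` idle timeout, and the timestamps used below are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ToyStatefulFirewall:
    """Toy stateful TCP filter: a SYN creates state; other segments need live state.

    Deliberately simplified (hypothetical): one idle timeout, no sequence checks.
    """
    state_ttl: float
    states: dict = field(default_factory=dict)

    def packet(self, now: float, src: str, dst: str, sport: int, dport: int,
               flags: str) -> str:
        fwd = (src, dst, sport, dport)
        rev = (dst, src, dport, sport)
        for key in (fwd, rev):
            expires = self.states.get(key)
            if expires is not None and expires > now:
                self.states[key] = now + self.state_ttl  # refresh the idle timer
                return "pass"
        if flags == "S":  # only a bare SYN may open a new state entry
            self.states[fwd] = now + self.state_ttl
            return "pass"
        return "block"  # mid-connection segment with no live state
```

For example, with `fw = ToyStatefulFirewall(state_ttl=30)`, a SYN from 10.0.0.24 to 10.0.30.250 at t=0 passes and an ACK at t=1 passes, but an ACK for the same connection at t=60 (after the client spent a minute talking to the worker directly because of the redirect) is blocked.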

This: https://docs.netgate.com/pfsense/en/latest/troubleshooting/asymmetric-routing.html#common-scenario

Basically, the test is invalid because the test host is in the same subnet as the target (the worker node), which creates an asymmetric route.

If you test from somewhere external, I would not expect to see this issue.

Steve

-

I've been busy lately, so I haven't had the chance to respond yet, but I read through your response and the linked documents and it all makes sense to me. As stated, I am very new to pfSense + BGP, so I tried my best to understand the concepts.

I have resolved the issue for now with your help and will try to post a longer read/explanation in a few days (or edit this existing post) when I can about what I learned/fixed since I always like to share/document what I've learned for myself and also to help future readers that may stumble on the same issue.

But essentially yes, it's due to asymmetric routing, as you explained in your post. I ended up using my UniFi 16-port switch and pfSense to create a VLAN for the Proxmox server where the k3s VMs run (setting the LACP-bonded ports in UniFi to use a VLAN ID that matches in both systems). Then I added firewall rules to allow traffic between the VLAN network and the LAN network, which is also briefly mentioned in the doc you linked. I also found this doc very helpful:

https://docs.netgate.com/pfsense/en/latest/routing/static.html#asymmetric-routing

From my new understanding, the issue was due to my traffic taking this path:

    Client (10.0.0.0/24 via DHCP) -> pfSense (10.0.0.1) -> Metallb (10.0.30.0/24) -> k3s workers (10.0.0.220-230)

As Stephen and the docs state, pfSense tells the client to access the service directly, and the client does so until the ICMP redirect expires. Since my return traffic goes from the k3s workers straight to the client, this creates an asymmetric route: traffic is eventually blocked when the ICMP redirect expires, and another redirect has to occur when you try to reconnect.
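The asymmetry comes from each host's own first-hop decision: a destination inside the host's subnet is delivered directly (on-link), and anything else goes to the default gateway. A minimal sketch of that decision with Python's `ipaddress` module, assuming /24 masks everywhere; the helper names and the post-VLAN worker address 10.0.10.220 are hypothetical:

```python
import ipaddress


def first_hop(host_if: str, gateway: str, dst: str) -> str:
    """On-link destinations are sent to directly; everything else goes to the gateway."""
    iface = ipaddress.ip_interface(host_if)  # e.g. "10.0.0.24/24": address + mask
    return dst if ipaddress.ip_address(dst) in iface.network else gateway


def symmetric_through_router(client_if, client_gw, server_if, server_gw, service_ip):
    """True when both the request and the reply take their first hop via the router."""
    client_ip = str(ipaddress.ip_interface(client_if).ip)
    forward = first_hop(client_if, client_gw, service_ip)  # client -> service VIP
    reverse = first_hop(server_if, server_gw, client_ip)   # worker -> client
    return forward == client_gw and reverse == server_gw
```

With the original layout, `symmetric_through_router("10.0.0.24/24", "10.0.0.1", "10.0.0.220/24", "10.0.0.1", "10.0.30.250")` is False because the worker replies to 10.0.0.24 directly; with the worker at 10.0.10.220/24 behind gateway 10.0.10.1 it becomes True, which is what moving the workers to their own VLAN achieves.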

With asymmetric routing such as in this example, any stateful firewall will drop legitimate traffic because it cannot properly keep state without seeing traffic in both directions. This generally only affects TCP, since other protocols do not have a formal connection handshake the firewall can recognize for use in state tracking.

From my new/limited understanding, one way to resolve this would be to add a static route so that traffic does not rely on the router's ICMP redirects/routing. But since in my previous (problem) setup the client (10.0.0.0/24 via DHCP) was going to k3s workers (10.0.0.220-230) on the same subnet, you can't add a static route there (it wouldn't make sense anyway).

So a more traditional/proper setup is to put the Proxmox server(s) behind a VLAN in pfSense and the managed UniFi switch. This creates a new subnet (10.0.10.0/24 in my case) for the k3s worker VMs with a different gateway (10.0.10.1), and makes it possible to create static routes and/or firewall rules that actually make sense. In my case, I only had to add firewall rules allowing traffic between the VLAN and LAN interfaces.

This change allows for a pathway that does not introduce asymmetric routing since there is a default gateway in both subnets and traffic would be able to flow forward and backward in the same manner.

Thanks again @stephenw10 - your help and explanations led me to the right direction and how to more properly set up my homelab network!

-

Nice! Yeah, moving the server to a different VLAN so the route is always through pfSense in both directions is the 'correct' solution here.