After upgrade to 23.01: unbound has a high CPU load resulting in DNS request timeouts

-

@getcom said in After upgrade to 23.01: unbound has a high CPU load resulting in DNS request timeouts:

What is a super large DHCP lease file

A long time ago, I vaguely recall, there was a post where the person had relatively few devices but a corrupted file, and it had thousands upon thousands of entries. Just trying to guess at what could be making unbound use CPU, and reading in files was one thought.

@getcom said in After upgrade to 23.01: unbound has a high CPU load resulting in DNS request timeouts:

Should I update this to the pfBlockerNG

They are supposed to be the same now, at least at release. (Edit: release of 23.01)

-

@steveits said in After upgrade to 23.01: unbound has a high CPU load resulting in DNS request timeouts:

What is a super large DHCP lease file

This one, for the IPv4 'pool' leases : /var/dhcpd/var/db/dhcpd.leases

There is a small glue-ware program called /usr/local/sbin/dhcpleases that is kick-started when the dhcpd IPv4 server assigns a new lease to some LAN device.

dhcpleases will parse the file for valid, active leases, and write them out to /etc/hosts

When it has finished, it will pick up a stone from the ground and slingshot unbound (that is, it tells unbound to restart).
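To get a feeling for whether the lease file is unusually large, a rough sketch like the following could help. It assumes the usual ISC dhcpd lease format, where every entry starts with a line like "lease 192.168.1.10 {" and the same address can show up many times because the file is written as a log. The function name lease_file_summary is made up for this example.

```shell
# lease_file_summary (hypothetical helper): reads a dhcpd.leases file on stdin
# and prints how many lease blocks it contains and how many distinct IPs they cover.
# Assumption: every lease entry starts with a line of the form "lease <ip> {".
lease_file_summary() {
    awk '$1 == "lease" && $3 == "{" {
             blocks++
             if (!($2 in seen)) { seen[$2] = 1; unique++ }
         }
         END { printf "blocks=%d unique_ips=%d\n", blocks, unique }'
}

# Usage on the firewall (path taken from the post above):
#   lease_file_summary < /var/dhcpd/var/db/dhcpd.leases
```

A block count that is far higher than the number of distinct addresses is not a problem by itself, but tens of thousands of blocks for a handful of devices would be a hint that the file is worth a closer look.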

To see if this happens a lot: you ... can't, because you can't be sure why unbound was instructed to restart.

You can see how often unbound restarts:

    grep 'start' /var/log/resolver.log

and again, this could also happen after a WAN event (your ISP wanted to give you a new IP) or when someone removes a LAN cable from the pfSense box.

Or the pfSense admin instructed pfBlockerNG to reload every hour.

Or some idiot admin (like me) likes to mess around with his pfSense.

So, keep in mind, some events are normal, and it's OK that unbound restarts once in a while.
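If the plain grep output is too long to judge, the starts can be grouped per day. This is only a sketch: it assumes the syslog-style lines that pfSense writes to /var/log/resolver.log, with the ISO timestamp in the second field and the text "start of service" on every unbound start. The function name count_unbound_starts is invented for this example.

```shell
# count_unbound_starts (hypothetical helper): reads resolver log lines on stdin
# and prints "<date> <number of unbound starts>" for each day, sorted by date.
# Assumption: field 2 is a timestamp like 2023-02-19T08:32:55.264556+01:00.
count_unbound_starts() {
    awk '/start of service/ {
             split($2, t, "T")      # t[1] is the date part of the timestamp
             n[t[1]]++
         }
         END { for (d in n) print d, n[d] }' | sort
}

# Usage on the firewall:
#   count_unbound_starts < /var/log/resolver.log
```

A few starts per day are what the list of normal events above would explain; dozens per hour would point towards the DHCP registration.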

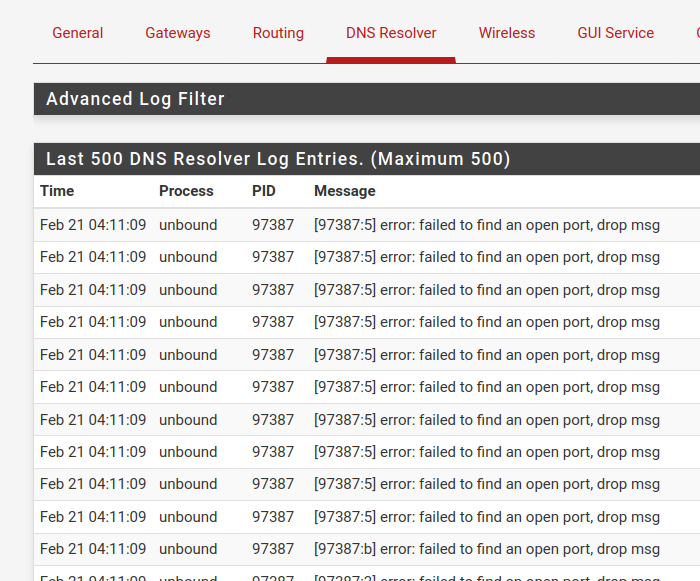

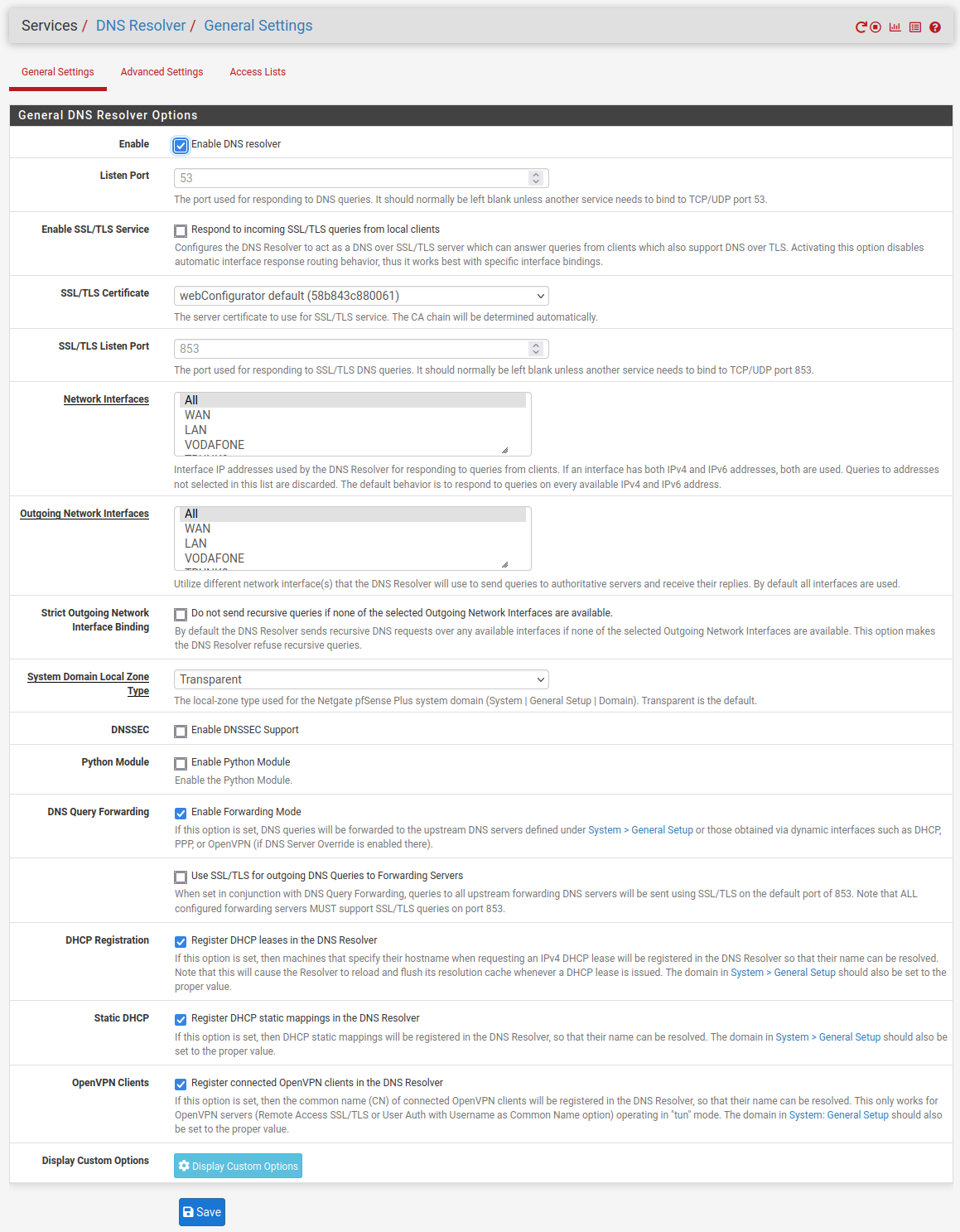

    ......
    <30>1 2023-02-19T08:32:55.264556+01:00 pfSense.mydomain.tld unbound 65402 - - [65402:0] info: start of service (unbound 1.17.1).
    <29>1 2023-02-19T14:35:35.966065+01:00 pfSense.mydomain.tld unbound 65402 - - [65402:0] notice: Restart of unbound 1.17.1.
    <30>1 2023-02-21T14:35:42.050332+01:00 pfSense.mydomain.tld unbound 65402 - - [65402:0] info: start of service (unbound 1.17.1).
    <29>1 2023-02-21T14:35:44.782969+01:00 pfSense.mydomain.tld unbound 65402 - - [65402:0] notice: Restart of unbound 1.17.1.

But one thing is sure: those who have a lot of LAN devices using DHCP, and have "Register DHCP leases in the DNS Resolver" enabled, will get a free membership of the 'DNS/unbound sucks' club.

At least they know why (now).

This club has of course tight relations with the 'I have to forward to someone' club.

Sorry for the rant.

edit: Yes, this will get resolved.

Even if that means 'KEA' joins the party (see other threads/redmine).

-

@SteveITS, @Gertjan

The /var/dhcpd/var/db/dhcpd.leases file looks normal. Nothing different to other setups.

There are ~4000 open connections to the forwarder DNS servers. I'm wondering if it asks every forwarding server for every client request?

It looks like the connections are not being closed.
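One way to check whether the open connections really pile up on the forwarders is to group the unbound sockets by remote address. The sketch below assumes the FreeBSD sockstat column layout (USER, COMMAND, PID, FD, PROTO, LOCAL ADDRESS, FOREIGN ADDRESS), so the remote end is the seventh field; the function name count_unbound_peers is made up for this example.

```shell
# count_unbound_peers (hypothetical helper): reads sockstat output on stdin and
# prints "<socket count> <remote address>" for unbound, busiest peer first.
# Assumption: column 2 is the command name and column 7 is the foreign address.
count_unbound_peers() {
    awk '$2 == "unbound" && $7 != "" && $7 != "*:*" {
             peer = $7
             sub(/:[^:]*$/, "", peer)   # strip the trailing :port
             n[peer]++
         }
         END { for (p in n) print n[p], p }' | sort -rn
}

# Usage on the firewall:
#   sockstat | count_unbound_peers
```

If most of the sockets belong to a single forwarder, that server (or the path to it) is the first thing to look at; an even spread would fit the theory that every forwarder is asked for every request.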

If every forwarder is queried and the connections stay open, every additional site2site VPN increases the load dramatically in the 23.01 release. In previous versions this setup was unobtrusive.

    lsof | grep unbound | wc -l
    4039
    sockstat | grep unbound | wc -l
    3750
    cat /var/unbound/host_entries.conf | wc -l
    291

If I restart unbound every hour by cron, it does not look so bad. But this is an ugly workaround.

    last pid: 1173; load averages: 1.19, 1.18, 1.39 up 1+17:00:36 21:34:05
    75 processes: 1 running, 74 sleeping
    CPU: 1.7% user, 0.1% nice, 4.0% system, 1.1% interrupt, 93.2% idle
    Mem: 743M Active, 4365M Inact, 3241M Wired, 54G Free
    ARC: 1431M Total, 218M MFU, 1095M MRU, 156K Anon, 17M Header, 96M Other
         1201M Compressed, 2544M Uncompressed, 2.12:1 Ratio
    Swap: 2048M Total, 2048M Free
      PID USERNAME THR PRI NICE SIZE RES STATE C TIME WCPU COMMAND
    67966 unbound 16 23 0 568M 471M kqread 12 34:05 69.36% unbound

Compared to a similar setup on a pfSense release 2.6 without unbound cron restart:

    last pid: 6402; load averages: 0.58, 0.73, 0.86 up 368+03:08:31 21:40:34
    69 processes: 1 running, 67 sleeping, 1 zombie
    CPU: 0.7% user, 0.0% nice, 3.8% system, 0.2% interrupt, 95.4% idle
    Mem: 448M Active, 319M Inact, 3283M Wired, 58G Free
    ARC: 1144M Total, 200M MFU, 907M MRU, 32K Anon, 6030K Header, 31M Other
         356M Compressed, 771M Uncompressed, 2.17:1 Ratio
    Swap: 24G Total, 24G Free
      PID USERNAME THR PRI NICE SIZE RES STATE C TIME WCPU COMMAND
    38497 unbound 16 22 0 253M 163M kqread 4 50:33 52.76% unbound

Is there any difference or disadvantage if I use bind instead of unbound?

My external DNS servers are all running bind 9 in LXC containers. I have never noticed any problem with it.

-

@getcom Hm, I have had 23.01 running at my home on a 2100, about 18 hours since I changed a setting last night. I don't have a billion devices at home though.

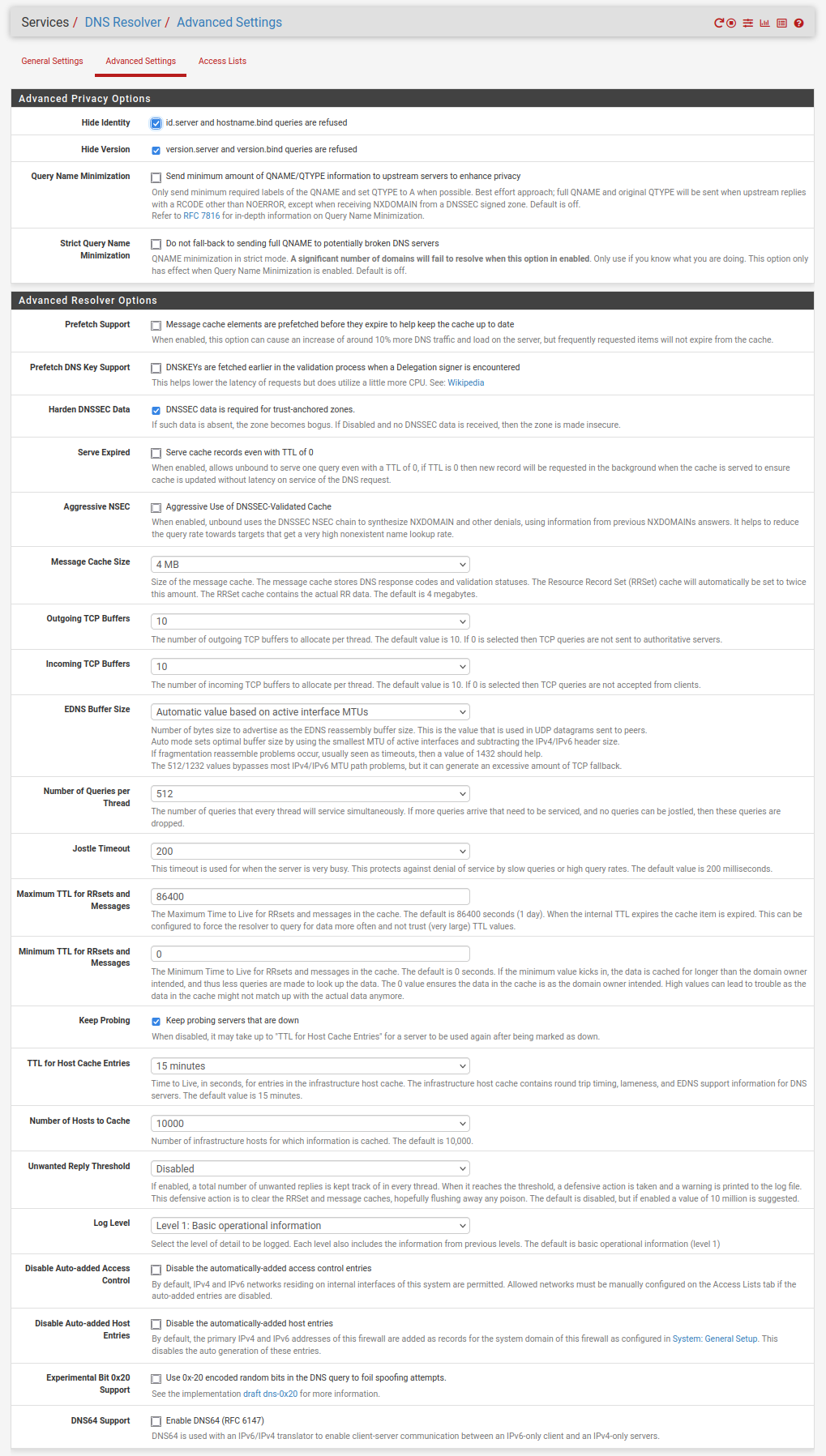

    sockstat | grep unbound | wc -l
    12
      PID USERNAME THR PRI NICE SIZE RES STATE C TIME WCPU COMMAND
    25498 unbound 2 20 0 158M 124M kqread 0 7:37 0.25% unbound

DNS Forwarder has a "Query DNS servers sequentially" option, Resolver does not. I found a 2015 post (https://serverfault.com/questions/732920/how-to-do-parallel-queries-to-the-upstream-dns-using-unbound) saying that unbound queries them all.

How many DNS servers do you have configured?

re: BIND, there is a package but as I vaguely recall it's not meant for resolving? Not too familiar with the package (am of course familiar with BIND).

-

@steveits said in After upgrade to 23.01: unbound has a high CPU load resulting in DNS request timeouts:

How many DNS servers do you have configured?

re: BIND, there is a package but as I vaguely recall it's not meant for resolving? Not too familiar with the package (am of course familiar with BIND).

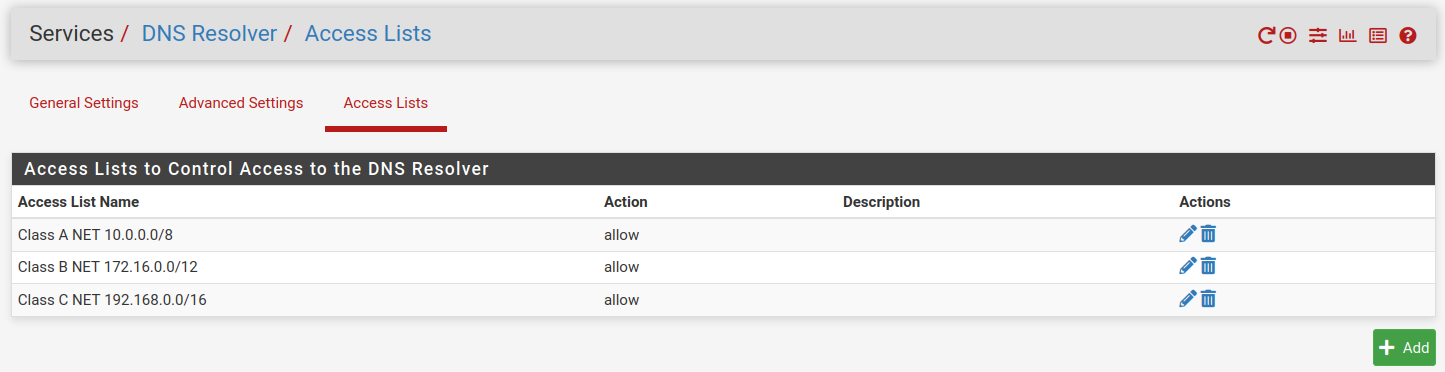

Two external DNS servers, one for each WAN connection (dual WAN setup), four for the four site2site VPNs, and one for an AD subdomain.

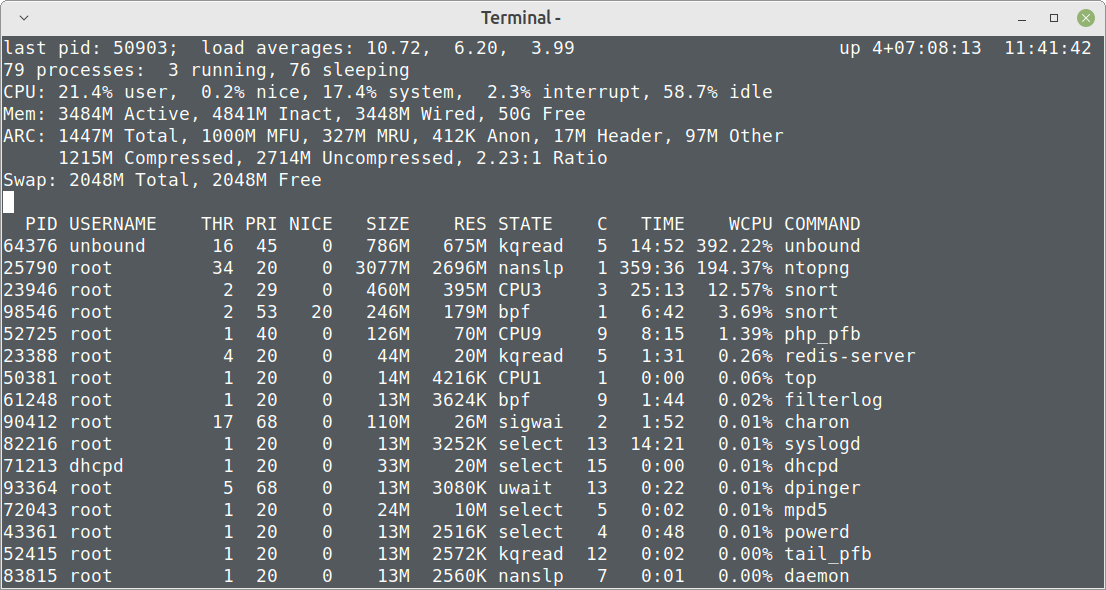

Regarding the 2015 post: unbound also did these parallel queries in the 22.05 release, so this is/was not the root cause.

What is strange is that after a few cron-triggered unbound restarts, the load is now in a normal range (~0.19). Also, sockstat shows only 400 to 600 connections.

Phew... this is something I don't like, because it is gone before I could find the root cause... this issue can happen again.

-

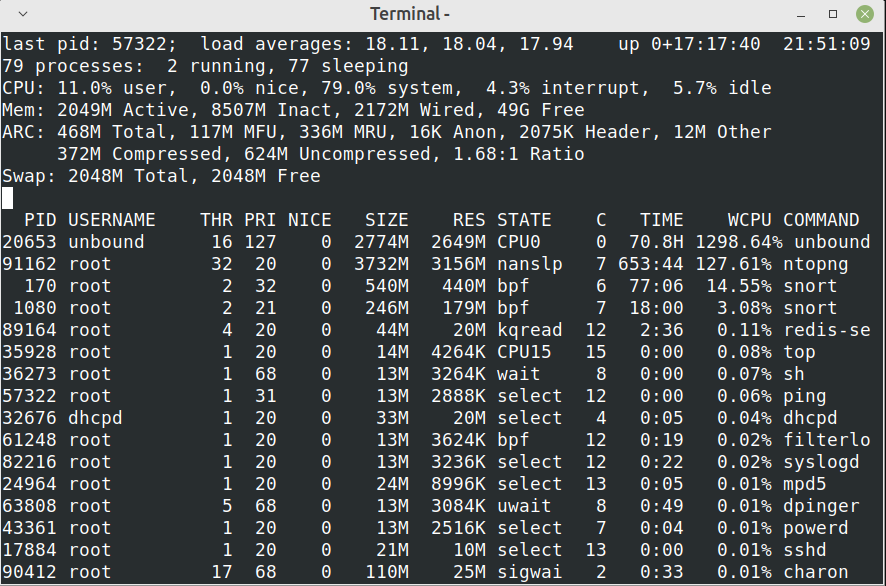

It is back again. The load is definitely too high. I noticed audio problems in Jitsi meetings.

The system load goes up and down all the time.

I think I will go back to the previous release until this is fixed.

-

After updating another system to 23.01 with the same behavior, I came to the conclusion that the parallel requests are so much more aggressive in 23.01 that the answers are killing the messenger. I saw ~4500+ requests/packets per second hitting unbound so hard that it was not able to answer anymore. I got lots of timeouts on VMs and clients for some seconds/minutes, and everything froze.
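For anyone who wants to put a number on the packet rate themselves, a capture can be summarised per second. This is a rough sketch that assumes tcpdump's default text output, where every line starts with a timestamp like 21:34:05.123456; the function name packets_per_second and the interface name em0 in the usage line are placeholders.

```shell
# packets_per_second (hypothetical helper): reads tcpdump text output on stdin
# and prints "<HH:MM:SS> <packet count>" for each second seen in the capture.
# Assumption: each packet line starts with a timestamp of the form HH:MM:SS.ffffff.
packets_per_second() {
    awk '$1 ~ /^[0-9][0-9]:[0-9][0-9]:[0-9][0-9]/ {
             sec = substr($1, 1, 8)     # keep HH:MM:SS, drop the fraction
             n[sec]++
         }
         END { for (s in n) print s, n[s] }' | sort
}

# Usage on the firewall (em0 is a placeholder for the real interface):
#   tcpdump -n -c 20000 -i em0 port 53 | packets_per_second
```

The -c limit makes tcpdump stop after a fixed number of packets, so the summary is printed without having to interrupt the capture.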

@Gertjan I think you are right... I'm a member of the 'DNS/unbound sucks' club now ...

My conclusion is that as long as unbound is not able to query the servers sequentially, I should give dnsmasq alias DNS Forwarder a try, as mentioned by @steveits.

-

@getcom One other idea that has come up in other threads e.g. https://forum.netgate.com/topic/178413/major-dns-bug-23-01-with-quad9-on-ssl/ is to disable DNS over TLS. It doesn't seem to be a problem for everyone but some people say it is necessary when using Unbound in forwarding mode.

I don't recall anyone reporting high CPU usage though.

-

rant:

there are quite a few problems with pfSense and DNS services, i've just given up trying to chase them down: either high cpu usage, flaky dns service, dns not working at all on localhost on the firewall ... it just times out, domain overrides not working and so on

now running DNS server on a couple of separate boxes, no issues at all

pfSense does show its limits even on netgate hardware (we have a couple of 7100 appliances), they cram a lot of things into this firewall appliance for whatever reason ... i'm guessing if we need a professional business level firewall that's what TNSR is for

-

@steveits said in After upgrade to 23.01: unbound has a high CPU load resulting in DNS request timeouts:

@getcom One other idea that has come up in other threads e.g. https://forum.netgate.com/topic/178413/major-dns-bug-23-01-with-quad9-on-ssl/ is to disable DNS over TLS. It doesn't seem to be a problem for everyone but some people say it is necessary when using Unbound in forwarding mode.

DNS over TLS is/was not activated...

-

@aduzsardi said in After upgrade to 23.01: unbound has a high CPU load resulting in DNS request timeouts:

rant:

there are quite a few problems with pfSense and DNS services, i've just given up trying to chase them down: either high cpu usage, flaky dns service, dns not working at all on localhost on the firewall ... it just times out, domain overrides not working and so on

now running DNS server on a couple of separate boxes, no issues at all

pfSense does show its limits even on netgate hardware (we have a couple of 7100 appliances), they cram a lot of things into this firewall appliance for whatever reason ... i'm guessing if we need a professional business level firewall that's what TNSR is for

Some tcpdumps later: all clear

At the moment we have lots of DoS and brute-force attacks here in Germany. We are delivering tanks and defense systems to Ukraine. Perhaps there is a connection? I don't know, but I have never seen anything like this before.

With a regular VDSL or cable contract you get an asymmetric connection, e.g. 100 Mbps downstream / 40 Mbps upstream. I saw up to 6000 DNS requests per second; each of them was only a few kB, but the answers were up to four times larger. With the smaller upstream bandwidth on top of that, the complete internet connection was unusable.
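A back-of-the-envelope calculation shows why the upstream gives up first. The sketch below simply multiplies request rate, request size and the answer-to-request ratio; the request sizes of 1000 and 200 bytes are assumptions chosen for illustration (not measured values), and it assumes the answers leave through the upstream. The function name answer_mbps is made up.

```shell
# answer_mbps (hypothetical helper): RATE REQ_BYTES FACTOR
# Prints the approximate answer traffic in Mbit/s (rounded down) for
# RATE requests per second, REQ_BYTES bytes per request and answers
# that are FACTOR times larger than the requests.
answer_mbps() {
    rate=$1
    req_bytes=$2
    factor=$3
    echo $(( rate * req_bytes * factor * 8 / 1000000 ))
}

# 6000 requests/s, assumed 1000-byte requests, answers 4x larger:
#   answer_mbps 6000 1000 4    -> 192
# Same rate with assumed 200-byte requests:
#   answer_mbps 6000 200 4     -> 38
```

Under these assumptions even the smaller request size already fills nearly all of a 40 Mbps upstream, and the larger one exceeds it several times over.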

The smaller Netgate SG boxes ran hot in this situation...

Since I activated GeoIP blocking for all countries except Germany and the USA and added ~150 badbot blacklists to pfBlockerNG, it has been quiet...

-

@getcom dang man! i feel for you. keep up the good work and keep those ruzzkies out !!!