10 GBit questions

-

@stephenw10 said in 10 GBit questions:

You should make sure the 10G NICs show the expected number of queues when they attach at boot. Especially since you're seeing traffic limited in one direction.

It looks like 2 queues are being allocated for both ix0/ix1 interfaces. Not sure if that's what it's supposed to be:

ix1: netmap queues/slots: TX 2/2048, RX 2/2048
ix1: eTrack 0x80000528 PHY FW V286
ix1: PCI Express Bus: Speed 5.0GT/s Width x8
ix1: Ethernet address: 80:61:5f:0e:8c:25
ix1: allocated for 2 rx queues
ix1: allocated for 2 queues
ix1: Using MSI-X interrupts with 3 vectors
ix1: Using 2 RX queues 2 TX queues
ix1: Using 2048 TX descriptors and 2048 RX descriptors
ix1: <Intel(R) X540-AT2> mem 0xf0000000-0xf01fffff,0xf0400000-0xf0403fff irq 18 at device 0.1 on pci2
ix0: netmap queues/slots: TX 2/2048, RX 2/2048
ix0: eTrack 0x80000528 PHY FW V286
ix0: PCI Express Bus: Speed 5.0GT/s Width x8
ix0: Ethernet address: 80:61:5f:0e:8c:24
ix0: allocated for 2 rx queues
ix0: allocated for 2 queues
ix0: Using MSI-X interrupts with 3 vectors
ix0: Using 2 RX queues 2 TX queues
ix0: Using 2048 TX descriptors and 2048 RX descriptors
ix0: <Intel(R) X540-AT2> mem 0xf0200000-0xf03fffff,0xf0404000-0xf0407fff irq 17 at device 0.0 on pci2

-

Yeah, that's probably correct. Though that CPU looks like it's 2 cores with 2 threads per core, so 4 virtual cores if hyper-threading is enabled. If it shows 4 CPUs I'd expect 4 queues.

It's the same number of queues for Tx and Rx though so that doesn't look like a problem.
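A quick way to make that comparison without reading the boot log by eye is sketched below. It assumes the log text has been saved somewhere and that the "Using N RX queues N TX queues" wording matches what the ix driver printed above; the function names and the `cpu_count` argument are made up for this illustration (the CPU count could come from `sysctl hw.ncpu` on the firewall).

```python
import re

# Matches boot-log lines such as "ix0: Using 2 RX queues 2 TX queues"
QUEUE_LINE = re.compile(
    r"^(?P<nic>\w+): Using (?P<rx>\d+) RX queues (?P<tx>\d+) TX queues$"
)


def parse_queue_counts(boot_log):
    """Return {interface: (rx_queues, tx_queues)} for every matching log line."""
    counts = {}
    for line in boot_log.splitlines():
        match = QUEUE_LINE.match(line.strip())
        if match:
            counts[match["nic"]] = (int(match["rx"]), int(match["tx"]))
    return counts


def fewer_queues_than_cpus(boot_log, cpu_count):
    """List interfaces whose RX or TX queue count is below cpu_count."""
    counts = parse_queue_counts(boot_log)
    return sorted(
        nic for nic, (rx, tx) in counts.items() if min(rx, tx) < cpu_count
    )
```

With the log above and `cpu_count=4`, both ix0 and ix1 would be flagged, which matches the "2 queues on 4 logical CPUs" observation in this thread.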

-

@stephenw10 said in 10 GBit questions:

Yeah, that's probably correct. Though that CPU looks like it's 2 cores with 2 threads per core, so 4 virtual cores if hyper-threading is enabled. If it shows 4 CPUs I'd expect 4 queues.

I was wondering about that myself -- I did turn Hyperthreading off to test to see if it got faster, but there was no appreciable difference. So I turned it back on. This is the log from the last boot and shows 2 queues, despite there being 4 cores (HT).

It's the same number of queues for Tx and Rx though so that doesn't look like a problem.

So it sounds like there aren't many more avenues to optimize this box. For whatever reason it's just not able to handle > 1GBit. My new box should be here in a week or so. Hopefully that one will run a lot faster...

(I did go through the optimization articles that were mentioned earlier, but none of the tricks made it any faster. Some actually made it slower -- like turning off HW offload options).

Thanks all for the tips and suggestions!

-

The Xeon D-2123IT

- 4 Cores
- 8 Threads
- max 3.0GHz
- TurboBoost
- HyperThreading
- AES-NI
- DPDK?

4 from 5!

If you are not using PPPoE it will saturate 1 GBit/s with ease. In theory it should then be able to feed or support 8 queues, but you can also "tune" the:

- queue size
- queue length
- number of queues, depending on the CPU "C/T"

Perhaps you will report back here once that box has arrived.

-

@dobby_

I ended up going with the Dell R210-II system with Xeon 1275v2 CPU. I'll be more than happy to report to this thread once I have some measurements with iperf3 / Speedtest!

-

@tman222 said in 10 GBit questions:

Dell R210-II system should be more than capable

In my testing, the R2x series cannot move more than about 1.8-2Gbps ... the CPUs simply max out on single thread routing

-

@rvdbijl said in 10 GBit questions:

Dell R210-II system with Xeon 1275v2 CPU

- 3.5 - 3.9 GHz
- CPU 4C/8T
- AES-NI
- TurboBoost
- Hyperthreading

May also be an interesting choice! If you are not forced to use PPPoE it can be significantly faster than you might imagine.

-

@spacebass said in 10 GBit questions:

@tman222 said in 10 GBit questions:

Dell R210-II system should be more than capable

In my testing, the R2x series cannot move more than about 1.8-2Gbps ... the CPUs simply max out on single thread routing

What CPU did you test with on the R210-ii?

-

@rvdbijl 1270 v5

-

@spacebass

The v5's work on the R210-ii? From what I read, it only supports up to the E3-12xx v2 series ...

-

To wrap up this post -- I have my R210-II in with the E3-1275v2 CPU and 16GB RAM. I loaded pfSense, restored my backup and replaced the old i7 box with this one. I haven't tested with a 10G to 10G connection yet, but the 10G to 2.5G connection on two of my PCs seems to be able to push 2Gbit up and down to my ISP with no issues. I'll do some more benchmarking and post the results in the next few days.

Very happy that this box also seems to use ~50W while running, and is quiet as a mouse (once the fans have done their test when the system boots).

-

And here are some results --

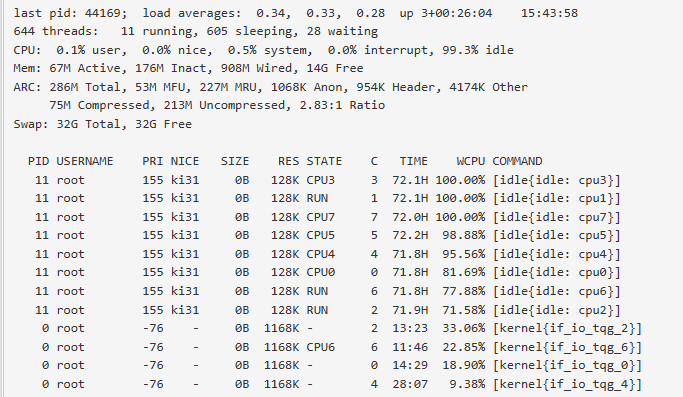

on a 2.5Gbit NIC and on a 10GBit NIC the performance is identical. I have no (easy) way to get more bandwidth on the WAN side of my connection, so I can't test beyond that. What I can see is how busy the box is while doing this Speedtest:

Not too shabby .. Looks like there is some more headroom.

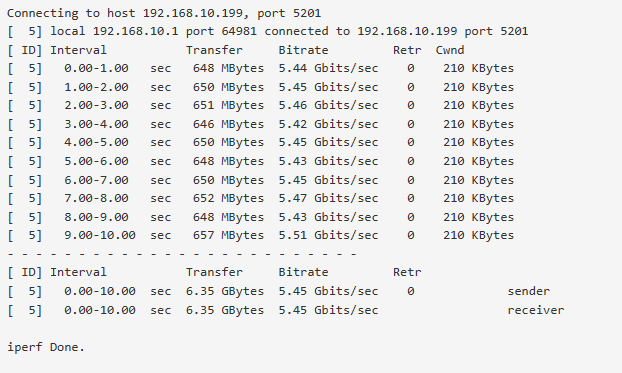

Doing iperf3 testing is a bit more .. interesting. On a 10Gbit NIC I see this with pfSense as the client and my 10Gbit NIC PC as the server (the server is a Core i7-10700T running Win10):

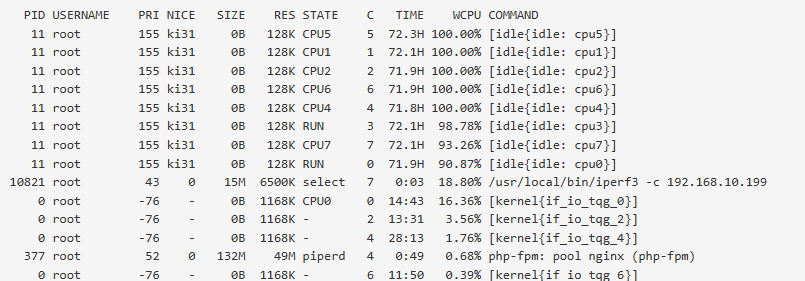

And the process running iperf3 is using ~18% CPU:

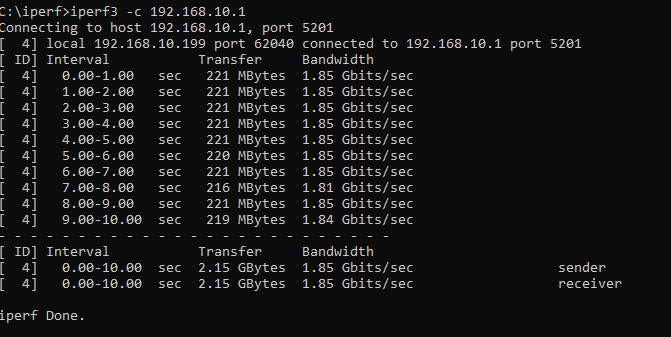

The reverse path is worse (pfSense as server, 10Gbit NIC as client):

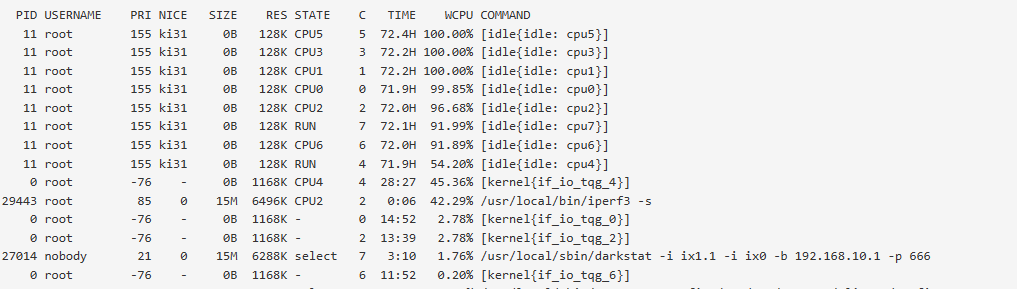

With the utilization here:

That is weird -- why does this path suck up 42-43% of CPU?

Multiple parallel threads don't seem to help here either. I'm guessing that this is some strange artifact of iperf3 running on the pfSense box?

In any case, I don't see this while running a speedtest in either direction (up or down) to my ISP. Solid 2Gbit as they promised. We'll see how this box does if/when my ISP raises speeds again. ;) I may try a VLAN-VLAN routing through this box and see how much data I can push, but that'll require another 10 Gbit NIC which I don't have .. yet .. ;)

Hope this benchmark data is at least helpful to some folks.

-

iperf is deliberately single threaded no matter how many parallel streams you set. To use multiple cores you need to run multiple instances of iperf.
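A rough sketch of the multiple-instance approach, assuming one `iperf3 -s -p PORT` server is already listening on each port used (a single iperf3 server only handles one test at a time). The `-c`, `-p`, `-t`, `-J` and `-R` flags are standard iperf3 options; the helper names, the port range and the address in the usage note are invented for this example.

```python
import json
import subprocess


def build_client_commands(server, base_port, instances, seconds=10, reverse=False):
    """Build one iperf3 client command per instance, each on its own port."""
    commands = []
    for i in range(instances):
        cmd = ["iperf3", "-c", server, "-p", str(base_port + i),
               "-t", str(seconds), "-J"]  # -J: report results as JSON
        if reverse:
            cmd.append("-R")  # reverse mode: the server sends, the client receives
        commands.append(cmd)
    return commands


def run_parallel(commands):
    """Start every client process first, then collect and parse their JSON output."""
    procs = [subprocess.Popen(c, stdout=subprocess.PIPE, text=True) for c in commands]
    return [json.loads(p.communicate()[0]) for p in procs]


def total_gbits(results):
    """Sum the receiver-side throughput of all instances, in Gbit/s."""
    bits = sum(r["end"]["sum_received"]["bits_per_second"] for r in results)
    return bits / 1e9
```

Something like `total_gbits(run_parallel(build_client_commands("192.0.2.10", 5201, 4)))` would then give the combined rate of four separate iperf3 processes, each free to land on a different core. Passing `reverse=True` tests the opposite direction without swapping the client and server roles.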

iperf sends traffic from the client to the server by default, so you're seeing worse performance when pfSense is receiving. Some of the hardware off-loading options may improve that.

-

@stephenw10 said in 10 GBit questions:

need to run multiple instances of iperf.

Or you could use the new beta that is out..

https://github.com/esnet/iperf/releases/tag/3.13-mt-beta2

iperf3 was originally designed as a single-threaded program. Unfortunately, as network speeds increased faster than CPU clock rates, this design choice meant that iperf3 became incapable of using the bandwidth of the links in its intended operating environment (high-performance R&E networks with Nx10Gbps or Nx100Gbps network links and paths). We have created a variant of iperf3 that uses a separate thread (pthread) for each test stream. As the streams run more-or-less independently, this should remove some of the performance bottlenecks, and allow iperf3 to perform higher-speed tests, particularly on 100+Gbps paths. This version has recorded transfers as high as 148Gbps in internal testing at ESnet.

-

Ooo, that's fun.