Terrible performance at 2.5G

-

Hi,

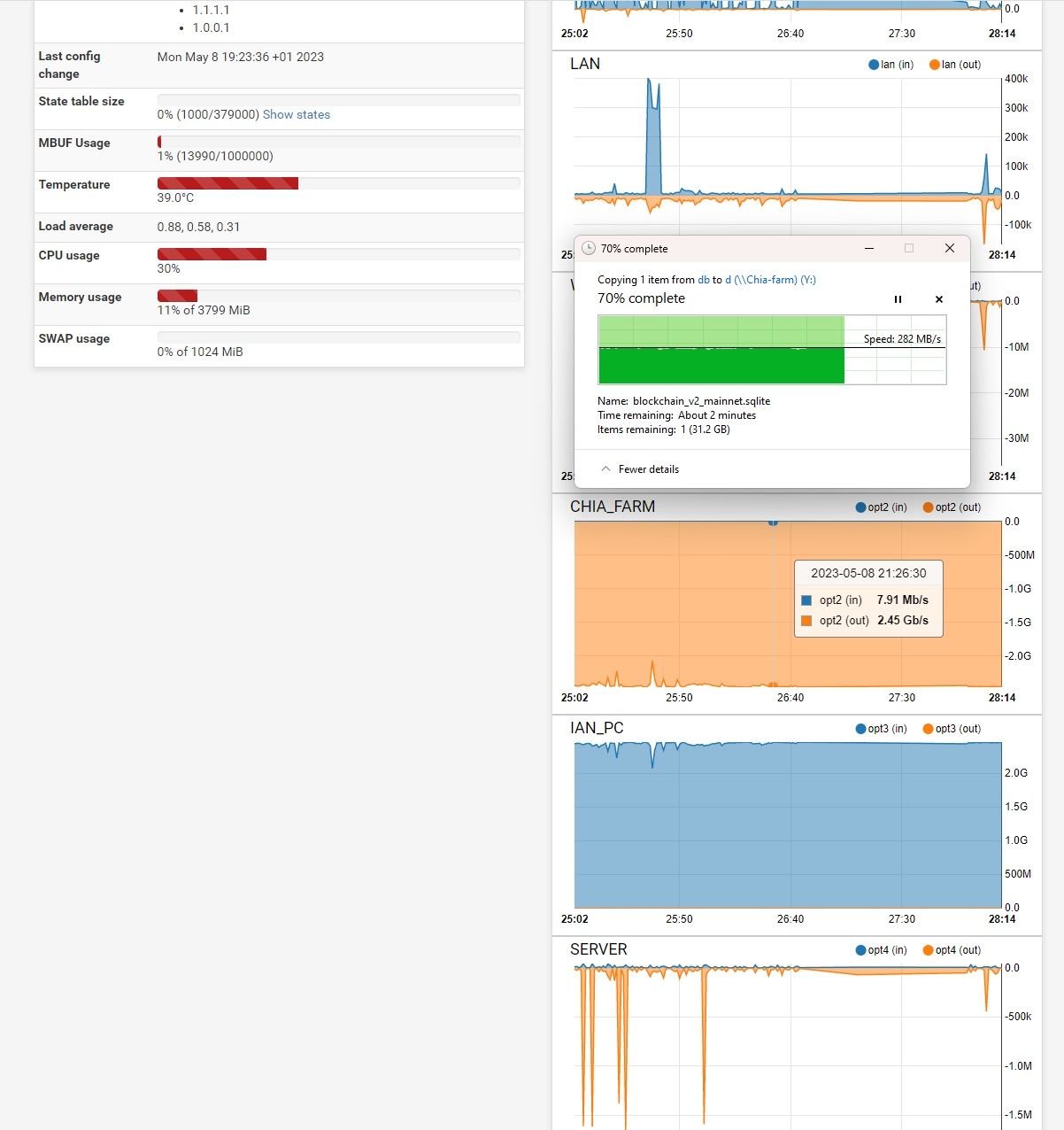

First post, so go easy. I have a 6-NIC 2.5G hardware box running pfSense and am getting inconsistent network transfers at 2.5G. I have 3 different 2.5G NICs and all behave in the following way.

Transferring from an SSD to an SSD, which should net me circa 250MB/s over a 2.5G link, starts off well for about 20 seconds and then fluctuates up and down, which makes the transfer slower than over a 1G connection.
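For reference, the ceiling for a single TCP transfer can be estimated from the link speed and the per-frame overhead. The sketch below is a back-of-the-envelope calculation, assuming a standard 1500-byte MTU, IPv4, TCP with the timestamps option, and the usual Ethernet preamble, FCS and inter-frame gap; it is not measured on this hardware, and file-copy protocols such as SMB add further overhead on top.

```python
# Rough upper bound on TCP payload throughput over Ethernet.
# Assumptions (standard framing, not measured on this box):
#   - 1500-byte MTU, IPv4 header 20 B, TCP header 20 B, TCP timestamps 12 B
#   - per-frame wire overhead: Ethernet header 14 B + FCS 4 B
#     + preamble/SFD 8 B + inter-frame gap 12 B

MTU = 1500
IP_HEADER = 20
TCP_HEADER = 20
TCP_TIMESTAMPS = 12
ETH_OVERHEAD = 14 + 4 + 8 + 12  # header + FCS + preamble/SFD + IFG


def tcp_goodput_bytes_per_s(link_bits_per_s: float, mtu: int = MTU) -> float:
    """Maximum TCP payload bytes per second for a given link speed."""
    payload = mtu - IP_HEADER - TCP_HEADER - TCP_TIMESTAMPS  # bytes of data per frame
    on_wire = mtu + ETH_OVERHEAD                             # bytes on the wire per frame
    return link_bits_per_s / 8 * payload / on_wire


if __name__ == "__main__":
    for name, speed in [("1G", 1e9), ("2.5G", 2.5e9)]:
        bps = tcp_goodput_bytes_per_s(speed)
        print(f"{name}: {bps / 1e6:.1f} MB/s ({bps / 2**20:.1f} MiB/s)")
```

Under these assumptions a 1G link tops out at roughly 118 MB/s (about 112 MiB/s) and a 2.5G link at roughly 294 MB/s, so "circa 250MB/s" is a conservative target.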

It seems like it fills a buffer and then drops but I have no idea how to fix it.

I've tried turning off hardware checksum offload and suppressing ARP messages to no avail.

Please help :-)

-

@masetime Are you sure the SSDs can sustain that kind of write rate? It sounds more like an OS or SSD cache filling up and then hitting the actual SSD write rate. If the drive has been filled and the OS or drive does not support TRIM, it might very well be garbage collection and forced block overwrites that cause the SSD's write rate to drop below 100MB/s.
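The cache explanation can be sketched as a simple fill-and-drain model: data arrives at link speed, the drive commits it at its sustained rate, and once the cache is full the transfer can only go as fast as the drive. This is a simplified illustration, not how any particular SSD firmware works; the cache size and rates in the usage note are hypothetical numbers chosen only to show the shape of the effect.

```python
# Simplified fill-and-drain model of a write cache in front of a slower drive.
# All example numbers are hypothetical, not measurements from this thread.


def seconds_until_cache_full(cache_mb: float, link_mb_s: float, sustained_mb_s: float) -> float:
    """Time until the cache fills while data arrives at link speed.

    The cache grows at (link - sustained) MB/s; if the drive keeps up,
    the cache never fills and infinity is returned.
    """
    net_fill = link_mb_s - sustained_mb_s
    if net_fill <= 0:
        return float("inf")
    return cache_mb / net_fill


def transfer_seconds(file_mb: float, cache_mb: float, link_mb_s: float, sustained_mb_s: float) -> float:
    """Total time for one file: link speed until the cache is full, then drive speed."""
    t_fill = seconds_until_cache_full(cache_mb, link_mb_s, sustained_mb_s)
    fast_mb = link_mb_s * t_fill  # data accepted before the "cliff"
    if file_mb <= fast_mb:
        return file_mb / link_mb_s  # the whole file fits before the cache fills
    return t_fill + (file_mb - fast_mb) / sustained_mb_s


if __name__ == "__main__":
    # Hypothetical: 4000 MB cache, ~294 MB/s link, 100 MB/s sustained drive rate.
    print(f"cache full after {seconds_until_cache_full(4000, 294, 100):.1f} s")
    print(f"20 GB file takes {transfer_seconds(20000, 4000, 294, 100):.1f} s")
```

With those made-up numbers the cache fills after about 20 seconds, after which throughput drops to the drive's sustained rate, which is why the drive itself is worth ruling out before blaming the network.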

-

@keyser Thanks for the reply. Yes, they definitely can sustain it. I tried it with Sabrent Rocket 4 NVMe drives, p981s and a 4-drive RAID 0.

As soon as I limit it to 1G, it transfers at a rock-solid 113MB/s. Enable 2.5G and it fluctuates up and down, dropping as low as 30MB/s.

It must be a setting in pfSense I need to enable.

-

@masetime 20 secs is quite a long time before it starts fluctuating… Which NIC (make) and which pfSense driver is it using?

20 seconds could suggest a heat-dissipation issue on the NIC controller itself. Does the chip have a heatspreader? Have you tried touching it while the issue is present? (Be careful, you might get serious skin burns.)

-

@keyser There is something called the write cliff on SSDs. To me it sounds like you have found it. The quality of the SSD may move the cliff, but it exists even on multi-million-dollar arrays.

To verify, take pfSense out of the path and see if you get the same results. Also, iperf is really good for testing bandwidth. You can adjust the number of streams and the direction.

-

@andyrh It's not the SSDs or the RAID array. Between them in the same PC/server I get sustained 600MB/s reads and writes, and over 1,000MB/s between NVMe SSDs. It's pfSense that's the bottleneck.

It's a quad-core N5105 with 4GB of 2400MHz RAM. CPU peaks at 28% during transfers and memory is at 13%.

-

@keyser 6 x i225-V NICs, and they're not hot. It's something in pfSense that's bottlenecking it. I'm a complete novice with it, so I would love a little guidance on what I could try.

-

@masetime That still leaves taking pfSense out of the path and repeating the test. You will find the problem by removing variables.

-

Well, this is very strange. I'd forced the NIC on one of the PCs to 1G to test and was taking some screenshots to post. After setting it back to auto-negotiate and running the same test I've done for days, it's now transferring as it should.

Must be a negotiation problem.

Thank you for taking the time to reply.

-

Be aware that the igc driver only supports autonegotiation. Setting it to 1G simply omits the other link speeds as choices in the negotiation. If something is not enabled for negotiation, it will fall back to a default speed or fail to link entirely.
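The behaviour described above can be illustrated with a simplified model: each end advertises a set of speeds, and the link comes up at the fastest speed both ends advertise. "Forcing" 1G on an igc port then just means advertising only 1000 Mb/s. This is a sketch for intuition, not the igc driver's code; it ignores duplex, flow control and parallel detection, and it models only the "fail to link" outcome, not a fallback to a default speed.

```python
# Simplified model of Ethernet speed autonegotiation: the link comes up at the
# highest speed advertised by BOTH ends. Duplex, flow control and parallel
# detection are ignored; this is not the igc driver's actual logic.

from typing import Optional

SPEEDS_MBPS = (10, 100, 1000, 2500)


def negotiated_speed(local: set[int], remote: set[int]) -> Optional[int]:
    """Return the highest commonly advertised speed in Mb/s, or None for no link."""
    common = local & remote
    return max(common) if common else None


if __name__ == "__main__":
    everything = set(SPEEDS_MBPS)
    print(negotiated_speed(everything, everything))      # both sides advertise all speeds
    print(negotiated_speed({1000}, everything))          # one side "forced" to 1G
    print(negotiated_speed({2500}, {10, 100, 1000}))     # nothing in common: no link
```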