Memory shortage

-

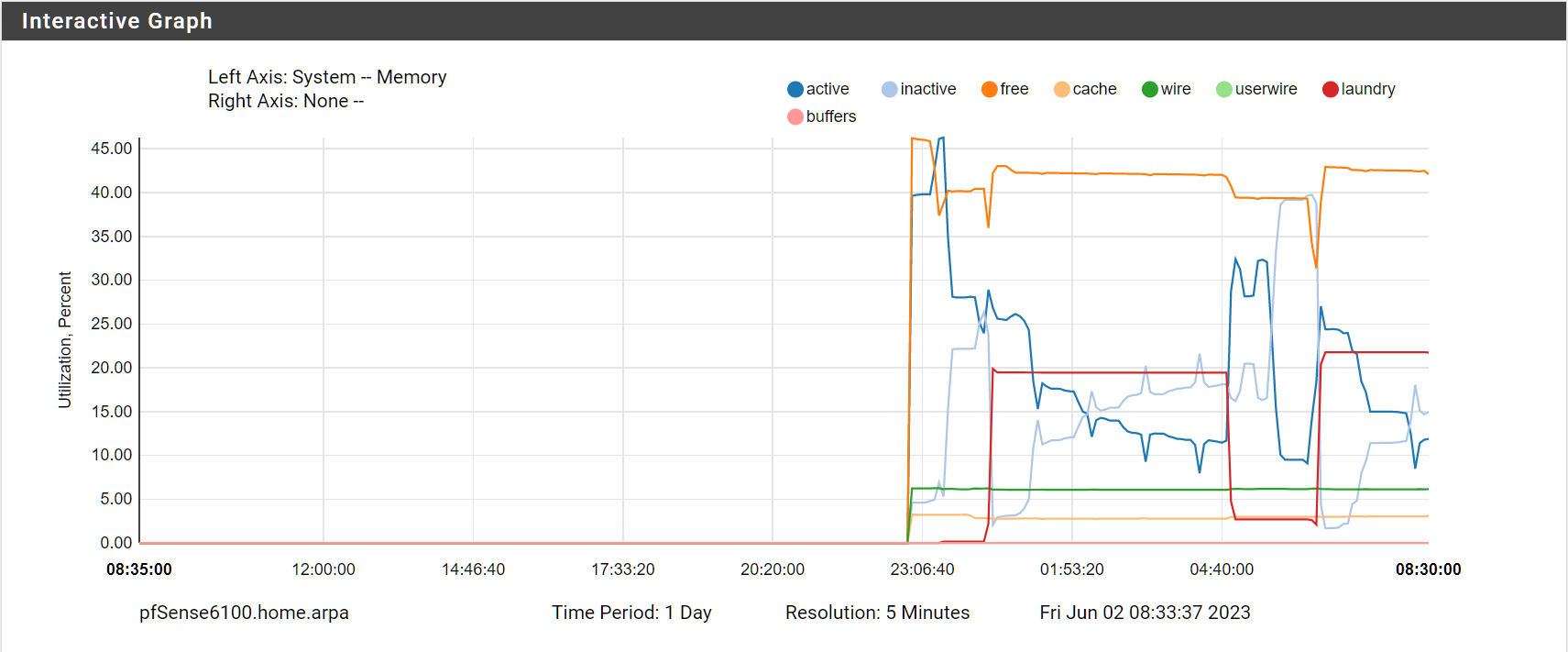

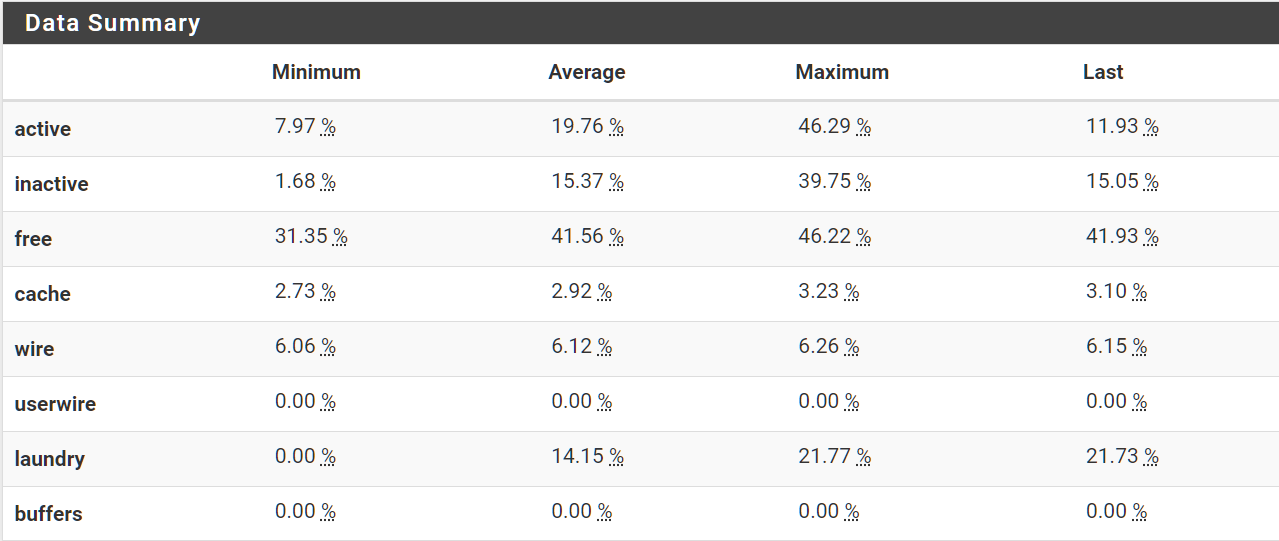

Is there a memory shortage on my Netgate 6100 MAX, based on this?

With about a third of the RAM still free, I would not call it a shortage, yet pfSense+ 23.05 is swapping. 23.01 rarely, if ever, swapped.

ZFS memory usage should not be a problem with these tunables, should it?

- vfs.zfs.prefetch.disable=1

- vfs.zfs.arc.max=200000000

- vfs.zfs.arc.free_target=1100000
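As a rough sanity check of what those tunables mean in absolute terms, here is a small Python sketch. It assumes vfs.zfs.arc.max is given in bytes and vfs.zfs.arc.free_target is a count of memory pages, with 4 KiB pages as on amd64. Both are assumptions worth confirming against the sysctl descriptions on the box (`sysctl -d vfs.zfs.arc`).

```python
# Assumptions (not read from the box): arc.max is in bytes,
# arc.free_target is a page count, and the page size is 4 KiB (amd64).
PAGE_SIZE = 4096
MIB = 1024 * 1024

tunables = {
    "vfs.zfs.arc.max": 200_000_000,        # bytes
    "vfs.zfs.arc.free_target": 1_100_000,  # pages
}

arc_max_mib = tunables["vfs.zfs.arc.max"] / MIB
free_target_mib = tunables["vfs.zfs.arc.free_target"] * PAGE_SIZE / MIB

print(f"vfs.zfs.arc.max         ~ {arc_max_mib:.1f} MiB")
print(f"vfs.zfs.arc.free_target ~ {free_target_mib:.1f} MiB ({free_target_mib / 1024:.2f} GiB)")
```

Under those assumptions the ARC cap works out to about 191 MiB, while the free-page target corresponds to roughly 4.2 GiB of memory that ARC would try to keep free.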

Packages:

- arpwatch 0.2.1

- Cron 0.3.8_3

- iperf 3.0.3

- Netgate_Firmware_Upgrade 23.05.00

- nmap 1.4.4_7

- nut 2.8.0_2

- pfBlockerNG-devel 3.2.0_5

- Shellcmd 1.0.5_3

- snort 4.1.6_7

- System_Patches 2.2.3

-

These:

@pfsjap said in Memory shortage:

arpwatch 0.2.1

Cron 0.3.8_3

Netgate_Firmware_Upgrade 23.05.00

nut 2.8.0_2

Shellcmd 1.0.5_3

System_Patches 2.2.3

These are GUI packages that don't do much; they use very few resources.

These:

nmap 1.4.4_7

iperf 3.0.3

Dunno, I've never used them.

These two:

pfBlockerNG-devel 3.2.0_5

snort 4.1.6_7

Let me put it this way: remove them, reboot.

I'll bet you'll say: Problem solved!

And, just for kicks, let me invent a potential problem.

I create a nice DNSBL or IP list, with all the domains and IPs everybody wants.

A couple of months later, many are using my list, 'as it is so efficient'.

Now I strike: I update my list and include huge numbers of DNSBL entries (or IPs). Millions of them.

Snort and pfBlockerNG will eventually update their local copy of the list.

Moments later I kill all those routers... it will be raining OOM, or worse.

(I just converted these two tools into some sort of Trojan, with me as the puppet master.)

My advice: if you use Snort or pfBlocker, keep an eye on it 24/7.

Btw: you have a Laundry 'red' line; it's a flat zero line on my 4100.

I use pfBlockerNG myself, with some known 'small' lists.

No memory issues...

I've never seen swap being used (in more than 10 years).

-

@Gertjan said in Memory shortage:

Let me put it this way: remove them, reboot.

I'll bet you'll say: Problem solved!

That may be the case, but I would rather have both and no swapping, as it still was on 23.01.

Of course, the feeds used might have grown since upgrading to 23.05, but it's more likely that the change is in 23.05.

-

Mmm, it's probably ZFS. But with those values I would not have expected it to start swapping as you say, especially with Free at 40%.

How quickly does it start swapping?

-

@stephenw10 said in Memory shortage:

How quickly does it start swapping?

Rebooted and then reset data/graphs, so quite soon.

-

Like, minutes?

-

@stephenw10 No, I don't think so, but I can't say when it started because I wasn't watching. Within those 9 hours in the graph, apparently.

-

The thing is - for me - that you have memory that needs to be ... laundered.

Dunno what this means, so time to learn something: https://wiki.freebsd.org/Memory

It's very clear:

Laundry Queue for managing dirty inactive pages, which must be cleaned ("laundered") before they can be reused

- Managed by a separate thread, the laundry thread, instead of the page daemon

- Laundry thread launders a small number of pages to balance the inactive and laundry queues

- Frequency of laundering depends on:

  - How many clean pages the page daemon is freeing; more frees contributes to a higher frequency of laundering

  - The size of the laundry queue relative to the inactive queue; if the laundry queue is growing, we will launder more frequently

- Pages are scanned by the laundry thread (starting from the head of the queue):

  - Pages which have been referenced are moved back to the active queue or the tail of the laundry queue

  - Dirty pages are laundered and then moved close to the head of the inactive queue

I'm joking, of course.

What is your system missing? Soap?

What I presume is that these 'pages' stay 'non clean' (not laundered) for too long, and that's why your system starts to use swap space.
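To make the quoted laundry-queue description a bit more concrete, here is a toy Python model of one laundry pass. It is not FreeBSD's pageout code: the Page class, the laundry_pass function and its budget parameter are hypothetical. A referenced page always goes back to the active queue here (the wiki also allows the tail of the laundry queue), and "close to the head" of the inactive queue is simplified to "at the head". The swap_log list stands in for writing the page to its backing store, which for anonymous memory is swap.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Page:
    """Hypothetical page: 'referenced' means touched since it was last scanned."""
    name: str
    referenced: bool = False
    dirty: bool = True


def laundry_pass(laundry: deque, inactive: deque, active: list,
                 budget: int, swap_log: list) -> None:
    """Scan up to `budget` pages from the head of the laundry queue (toy model)."""
    for _ in range(min(budget, len(laundry))):
        page = laundry.popleft()        # take the page at the head of the queue
        if page.referenced:
            page.referenced = False     # clear the reference bit and reactivate it
            active.append(page)
        else:
            swap_log.append(page.name)  # stand-in for writing the page to swap
            page.dirty = False          # the page is clean now
            inactive.appendleft(page)   # reusable soon: put it at the inactive head


laundry = deque([Page("a"), Page("b", referenced=True), Page("c")])
inactive, active, swapped = deque(), [], []
laundry_pass(laundry, inactive, active, budget=2, swap_log=swapped)
print("written to swap:", swapped)
print("active:", [p.name for p in active])
print("still in laundry:", [p.name for p in laundry])
```

In this toy, every page that gets laundered ends up in swap_log, which is one way to picture why a non-zero Laundry line and some swap usage can show up together.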

The real question: some deep-down kernel process, the one that cleans up, doesn't do so in time.

Find out why and you'll be close to 'solved'. You are using a very well-known hardware device.

What you can do is find out who (which process) is provoking this situation.

pfSense+ 23.05 'clean'? I don't think so.

pfBlockerNG ?

snort ?

Something else? Like ZFS?

-

@Gertjan said in Memory shortage:

What you can do is find out who (which process) is provoking this situation.

Well, it's obvious that Snort is consuming a lot of memory, but 23.01 was able to handle it.

Why are there 4 unbound instances when I have configured 3 interfaces for the DNS Resolver?

    last pid: 33114;  load averages: 0.17, 0.25, 0.27  up 0+18:59:58  17:46:31
    330 threads:   5 running, 286 sleeping, 39 waiting
    CPU:  0.1% user,  0.3% nice,  0.3% system,  0.0% interrupt, 99.3% idle
    Mem: 1618M Active, 1937M Inact, 136M Laundry, 704M Wired, 3394M Free
    ARC: 220M Total, 114M MFU, 102M MRU, 16K Anon, 1677K Header, 3742K Other
         194M Compressed, 513M Uncompressed, 2.64:1 Ratio
    Swap: 2048M Total, 222M Used, 1826M Free, 10% Inuse

      PID USERNAME PRI NICE  SIZE   RES STATE  C  TIME  WCPU COMMAND
    69586 root      52   20 1229M 1061M bpf    2  0:21 0.33% /usr/local/bin/snort -R _34675 -D -q --suppress-config-log --daq pcap --daq-mode p
    69586 root      52   20 1229M 1061M nanslp 1  0:00 0.00% /usr/local/bin/snort -R _34675 -D -q --suppress-config-log --daq pcap --daq-mode p
    99170 root      52   20 1229M 1054M bpf    1  0:10 0.21% /usr/local/bin/snort -R _8486 -D -q --suppress-config-log --daq pcap --daq-mode pa
    99170 root      52   20 1229M 1054M nanslp 2  0:00 0.00% /usr/local/bin/snort -R _8486 -D -q --suppress-config-log --daq pcap --daq-mode pa
    13338 unbound   20    0  851M  786M kqread 3  0:02 0.30% /usr/local/sbin/unbound -c /var/unbound/unbound.conf{unbound}
    13338 unbound   20    0  851M  786M kqread 2  0:02 0.15% /usr/local/sbin/unbound -c /var/unbound/unbound.conf{unbound}
    13338 unbound   20    0  851M  786M kqread 1  0:01 0.00% /usr/local/sbin/unbound -c /var/unbound/unbound.conf{unbound}
    13338 unbound   20    0  851M  786M kqread 0  0:11 0.00% /usr/local/sbin/unbound -c /var/unbound/unbound.conf{unbound}
    66173 root      20    0   47M   34M bpf    3  0:02 0.00% /usr/local/sbin/arpwatch -Z -f /usr/local/arpwatch/arp_igc0.dat -i igc0 -w digger9
    66825 root      20    0   47M   34M bpf    2  0:02 0.00% /usr/local/sbin/arpwatch -Z -f /usr/local/arpwatch/arp_igc2.dat -i igc2 -w digger9
    64463 root      20    0   72M   23M piperd 0  0:07 0.01% /usr/local/bin/php_pfb -f /usr/local/pkg/pfblockerng/pfblockerng.inc filterlog

-
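The Mem and Swap lines of the top output above can be turned into percentages with a short Python sketch. The two line strings are copied from that output rather than read from a live system, and the sum of the Mem categories is used as the total, which is an approximation of the RAM top accounts for, not the installed amount.

```python
import re

# Lines copied verbatim from the top output in this thread.
MEM_LINE = "Mem: 1618M Active, 1937M Inact, 136M Laundry, 704M Wired, 3394M Free"
SWAP_LINE = "Swap: 2048M Total, 222M Used, 1826M Free, 10% Inuse"


def parse_mib_fields(line: str) -> dict[str, int]:
    """Turn 'Label: 123M Name, 45M Other' into {'Name': 123, 'Other': 45} (values in MiB)."""
    _, _, body = line.partition(":")
    return {name: int(value) for value, name in re.findall(r"(\d+)M\s+(\w+)", body)}


mem = parse_mib_fields(MEM_LINE)
swap = parse_mib_fields(SWAP_LINE)

# Approximation: treat the sum of the listed categories as the RAM top accounts for.
mem_total = sum(mem.values())
free_pct = round(100 * mem["Free"] / mem_total, 1)
swap_pct = round(100 * swap["Used"] / swap["Total"], 1)

print(f"RAM accounted for: {mem_total} MiB, free: {free_pct}%")
print(f"Swap used: {swap['Used']} of {swap['Total']} MiB ({swap_pct}%)")
```

With these numbers, Free is about 43.6% of the accounted RAM and about 10.8% of swap is in use (top displays the latter as 10%).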

Also, Snort has been configured for two interfaces, yet there are four instances?

-

@pfsjap I think that's normal in that view; try

ps aux | grep snort

-

@SteveITS Yes, my bad. Two Snort instances and one unbound instance.

-

Yeah top can show all threads there. That's expected.
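The same point can be checked mechanically: top, as run here, lists one row per thread, so rows sharing a PID belong to one process. This Python sketch takes the snort and unbound rows from the top output above (commands shortened) and collapses them per PID; per_process is a hypothetical helper. It also shows why RES should be counted once per PID rather than once per row, since all threads of a process share the same resident memory.

```python
from collections import Counter

# snort/unbound rows from the top output above; command arguments shortened.
TOP_ROWS = """\
69586 root 52 20 1229M 1061M bpf 2 0:21 0.33% /usr/local/bin/snort -R _34675
69586 root 52 20 1229M 1061M nanslp 1 0:00 0.00% /usr/local/bin/snort -R _34675
99170 root 52 20 1229M 1054M bpf 1 0:10 0.21% /usr/local/bin/snort -R _8486
99170 root 52 20 1229M 1054M nanslp 2 0:00 0.00% /usr/local/bin/snort -R _8486
13338 unbound 20 0 851M 786M kqread 3 0:02 0.30% /usr/local/sbin/unbound -c /var/unbound/unbound.conf{unbound}
13338 unbound 20 0 851M 786M kqread 2 0:02 0.15% /usr/local/sbin/unbound -c /var/unbound/unbound.conf{unbound}
13338 unbound 20 0 851M 786M kqread 1 0:01 0.00% /usr/local/sbin/unbound -c /var/unbound/unbound.conf{unbound}
13338 unbound 20 0 851M 786M kqread 0 0:11 0.00% /usr/local/sbin/unbound -c /var/unbound/unbound.conf{unbound}
"""


def per_process(rows: str) -> dict:
    """Collapse top's per-thread rows into one entry per PID."""
    procs = {}
    for line in rows.strip().splitlines():
        cols = line.split()
        pid = int(cols[0])
        res_mib = int(cols[5].rstrip("M"))  # RES column, e.g. "1061M"
        name = cols[10].rsplit("/", 1)[-1]  # "/usr/local/bin/snort" -> "snort"
        entry = procs.setdefault(pid, {"name": name, "res_mib": res_mib, "threads": 0})
        entry["threads"] += 1
    return procs


procs = per_process(TOP_ROWS)
instances = Counter(p["name"] for p in procs.values())

# Summing RES per row counts shared memory once per thread, which overstates usage.
naive_res = sum(int(line.split()[5].rstrip("M")) for line in TOP_ROWS.strip().splitlines())
per_pid_res = sum(p["res_mib"] for p in procs.values())

print("instances:", dict(instances))
for pid, p in sorted(procs.items()):
    print(pid, p["name"], p["threads"], "threads,", p["res_mib"], "MiB RES")
print("RES summed per row:", naive_res, "MiB; per PID:", per_pid_res, "MiB")
```

Counted per PID, this gives two snort processes and one unbound process, matching the `ps aux` view.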

-

@pfsjap BTW for Snort you should read https://forum.netgate.com/topic/180501/snort-v3/6 and consider Suricata.

"At some point in the future I expect the upstream Snort team will cease development work on Snort 2.9.x (the version currently in pfSense). At that point, unless someone has stepped up and created a Snort3 package, Snort will die on pfSense."