Infamous /409 issue

-

@johnpoz potentially could... Could be a virus. What's funny is the Internet runs the same even when it blocks all of those. The only one that caused me confusion was a school website, but it was not listed as a PUP; it was listed as a Trojan virus.

-

@michmoor it does show a connection to an IPv6 address in that last photo you shared. I had that same issue until I set the DNS resolver to send only A records and ignore AAAA records. That's what fixed it in the end.

-

I was having 409s even with IPv4-only DNS records. One example in my environment was for self.events.data.microsoft.com (yeah, Microsoft Windows telemetry). This is hosted behind Azure Traffic Manager, a DNS load balancing solution. The record has a very short TTL, but there is absolutely no IPv6 (AAAA) record attached to this host. So there have to be other factors at play with this issue.

I started to read on Squid, and how it was handling DNS resolution... It is configured to cache DNS queries by default. See this: http://www.squid-cache.org/Doc/config/positive_dns_ttl/

Squid will cache a DNS query for up to 6 hours!!! For most traffic, it is not an issue, and it even makes sense. For anything hosted using elastic cloud services behind DNS-based load balancing, with hosts that will live for minutes or maybe one hour or two at a time (depending on demand), it doesn't work.

So here's what I tried:

1- I made sure to configure Squid to use 127.0.0.1 for name resolution (relying on Unbound, which respects RFCs and TTLs unless it is explicitly configured to serve stale data).

2- I added the following configuration options for Squid:

positive_dns_ttl 2 minutes

negative_dns_ttl 30 seconds

It made absolutely no difference whatsoever: the 409 was back on that same host within the next 15 minutes.

In conclusion, I am back to bypassing all Azure Cloud traffic from transparent Squid, again. Palo Alto Networks is hosting EDLs that pfBlockerNG is able to consume: https://saasedl.paloaltonetworks.com/feeds/azure/public/azurecloud/ipv4
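For illustration only, here is a minimal Python sketch of what such a bypass list amounts to: a set of networks, and a membership test on the destination IP. It assumes the feed is plain text with one CIDR entry per line; the function names and the sample ranges (documentation-only addresses) are hypothetical and are not taken from the Palo Alto feed or from pfBlockerNG.

```python
import ipaddress


def parse_edl(text):
    """Parse an EDL-style list: one network per line, '#' starts a comment.

    The one-entry-per-line format is an assumption made for this sketch.
    """
    networks = []
    for line in text.splitlines():
        entry = line.split("#", 1)[0].strip()
        if entry:
            networks.append(ipaddress.ip_network(entry, strict=False))
    return networks


def should_bypass(dst_ip, networks):
    """Return True if the destination IP falls inside any listed network."""
    addr = ipaddress.ip_address(dst_ip)
    return any(addr in net for net in networks)


# Hypothetical sample entries (documentation ranges, not real Azure ranges).
SAMPLE_FEED = """
# sample only
198.51.100.0/24
203.0.113.16/28
"""

if __name__ == "__main__":
    nets = parse_edl(SAMPLE_FEED)
    for ip in ("198.51.100.25", "192.0.2.10"):
        print(ip, "bypass" if should_bypass(ip, nets) else "proxy")
```

On pfSense the actual bypass is done with an alias and firewall/NAT rules rather than code like this; the sketch only shows the logic of the decision.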

This is the only solution that works reliably for now (bypass). Any Azure service behind Traffic Manager could potentially create the same issue, so it is advisable to bypass Azure Cloud. I would rather not, but I see no other way.

On a side note... I was running packet captures on both the Windows client computer and the pfSense WAN interface. Analyzing traces after a few 409s on my host, I could easily locate the TLS Client Hello with tls.handshake.extensions_server_name == "self.events.data.microsoft.com", but the same filter on the capture of the WAN interface would return 0 packets. Searching for the destination IPs on the WAN interface would also return 0 packets. It's as if Squid didn't even try to connect, gave up, and served a 409 error to the client. Or it tried a stale IP, couldn't complete a TCP handshake and gave up. That tells me there has to be a bug somewhere, because there is nothing special about the Client Hello that "fails" to be spliced. I should have seen a matching connection on the WAN side.

The next step would be to try a TCP replay of my packets and see if it consistently reproduces the problem (nothing captured on the WAN interface), because then I would have something to report to the developers. If I even knew how to make such a report.

-

I read some more, and not sending any connection to the WAN interface would indeed be normal behavior when a header forgery is detected (the dst IP doesn't match any IP of the domain).

You can try tweaking your DNS environment as much as you can to reduce the occurrence of this issue, but you can still expect this to fail at times: some apps will perform one DNS query and reuse the answer for a while, thus exceeding the TTL of the DNS record, which will simply expire at the resolver. This becomes even more likely when records have a TTL of 5 minutes or less. Then, when Squid sees the Client Hello, it will resolve the SNI, but the client dst IP will not match the IPs of the domain. And it will reject the connection with an HTTP status code 409. End of story.
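The failure mode described above can be sketched as a toy simulation in Python. This is a simplified model, not Squid's actual code: the `RotatingAuthority` class, the pool of documentation addresses, and the rotation rule (one different IP per TTL window) are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class DnsAnswer:
    ips: frozenset
    ttl: int  # seconds


class RotatingAuthority:
    """Hypothetical DNS load balancer: one IP per TTL window, rotating through a pool."""

    def __init__(self, pool, ttl):
        self.pool = pool
        self.ttl = ttl

    def resolve(self, now):
        index = (now // self.ttl) % len(self.pool)
        return DnsAnswer(frozenset({self.pool[index]}), self.ttl)


def host_check(dst_ip, sni_answer):
    """Simplified check: the client's dst IP must appear in a fresh answer for the SNI."""
    return dst_ip in sni_answer.ips


def simulate(authority, client_reuse_seconds, step=10):
    """The client resolves once at t=0 and keeps reusing that IP.

    The proxy resolves again for every new connection. Returns (time, passed) pairs.
    """
    client_ip = next(iter(authority.resolve(0).ips))
    results = []
    for now in range(0, client_reuse_seconds + 1, step):
        results.append((now, host_check(client_ip, authority.resolve(now))))
    return results


if __name__ == "__main__":
    authority = RotatingAuthority(["192.0.2.1", "192.0.2.2", "192.0.2.3"], ttl=10)
    for now, passed in simulate(authority, client_reuse_seconds=30):
        print(f"t={now:>3}s ->", "OK" if passed else "409")
```

In this model the client's connection is perfectly legitimate the whole time; it only fails the check because the proxy's fresh answer no longer contains the IP the client cached.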

This validation can only create a lot of "false positives" with native cloud applications leveraging DNS load balancing (short TTLs). But since this isn't new, I started looking for what others might have done about this. Cloud and DNS load balancing aren't new concepts.

And it looks like some other distros have simply decided to remove the host forgery check altogether: https://github.com/NethServer/dev/issues/5348

It's not a big change in terms of lines of code... Should be fairly straightforward to patch this on pfSense as well.

-

@vlurk there’s a redmine already for this with a request to remove/disable the forgery security feature.

Netgate is not taking action on it which unfortunately means Transparent Proxy is broken.

The resolution is either to not use Squid or to use it in explicit mode.

/409 has been an issue for years so devs are aware of it.

Edit: https://redmine.pfsense.org/issues/14390

-

@michmoor Good enough. I will start a discussion on the Squid-Users mailing list and see if it's worth moving it up to Squid-Dev. It's been a long time since I participated in a mailing list, but the ones for Squid still look to be active...

IMHO, the way the header validation is implemented, with a simple DNS check against the client dst IP, is flawed: it will create a lot of false positives for web services relying on DNS-based load balancing. This is inevitable, because application threads may run for longer than the TTL, creating connections without calling gethostbyname again, which leaves Squid completely unable to validate the request. Perfectly legitimate traffic gets dropped with a 409 in the process. At this point, I am bypassing a lot of IP addresses from Squid: Azure Cloud, Azure Front Door, AWS, Akamai... I don't get a lot of 409s anymore, but this is more of a workaround than a real solution.

The proper way to solve this would be to just compare the SNI received from the client with the subject and alt names from the web server certificate. If they match, and the certificate is completely valid, Squid should trust the connection as legitimate even though it can't validate that the dst IP matches the domain. But that would require a lot more development than simply disabling the check.
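A rough Python sketch of the name comparison part of that idea follows. It is not something Squid implements, and it deliberately leaves out the certificate chain validation, which would still be required. The function names are hypothetical, and the wildcard rule (a single left-most `*` label standing in for exactly one label) is a simplification of common TLS hostname matching.

```python
def dns_name_matches(pattern, hostname):
    """Match a hostname against one certificate DNS name.

    Only a single left-most wildcard label is supported, and it covers
    exactly one label of the hostname.
    """
    p_labels = pattern.lower().rstrip(".").split(".")
    h_labels = hostname.lower().rstrip(".").split(".")
    if len(p_labels) != len(h_labels):
        return False
    if p_labels[0] == "*":
        # Refuse overly broad wildcards such as "*.com".
        return len(p_labels) >= 3 and p_labels[1:] == h_labels[1:]
    return p_labels == h_labels


def sni_matches_certificate(sni, san_dns_names):
    """Return True if the SNI matches any DNS name presented by the certificate."""
    return any(dns_name_matches(name, sni) for name in san_dns_names)


if __name__ == "__main__":
    # Hypothetical certificate names, for illustration only.
    cert_names = ["example.net", "*.api.example.net"]
    for sni in ("edge.api.example.net", "a.b.api.example.net", "example.org"):
        print(sni, sni_matches_certificate(sni, cert_names))
```

The appeal of this approach is that it does not depend on DNS at all, so a short TTL or a client reusing an old answer would not matter.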

-

For bug reporting you can also use . . .

https://bugs.squid-cache.org/index.cgi

I have reported some bugs on this and they do respond very quickly.

I just opened one for host_verify_strict off not working. That should work right?

https://bugs.squid-cache.org/show_bug.cgi?id=5304

-

@JonathanLee said in Infamous /409 issue:

> For bug reporting you can also use . . .
>
> https://bugs.squid-cache.org/index.cgi
>
> I have reported some bugs on this and they do respond very quickly.
>
> I just opened one for host_verify_strict off not working. That should work right?
>
> https://bugs.squid-cache.org/show_bug.cgi?id=5304

Actually, I fear that this is the intended behavior. As per documentation:

"For now suspicious intercepted CONNECT requests are always responded to with an HTTP 409 (Conflict) error page."

My take is that if you run in transparent mode and the proxy has to fake a CONNECT (applies to HTTPS only), it will always drop the requests that fail the check, ignoring the host_verify_strict off directive.

Also interesting to note: "for now" makes me believe the DNS check was a band-aid to close a security gap, and the author always intended to develop a better solution someday.

I may be wrong about this though. It is worth validating whether it is indeed the intended behavior, as it seems we have different understandings of the documentation.

-

Side note:

I use SquidGuard, Squid and Squidlite. My ISP does not provide me with IPv6, so I have it disabled in the Unbound resolver custom config. I was getting the same errors you have; after I disabled IPv6, they stopped. Again, I use SSL intercept for some devices and have custom devices set to splice.

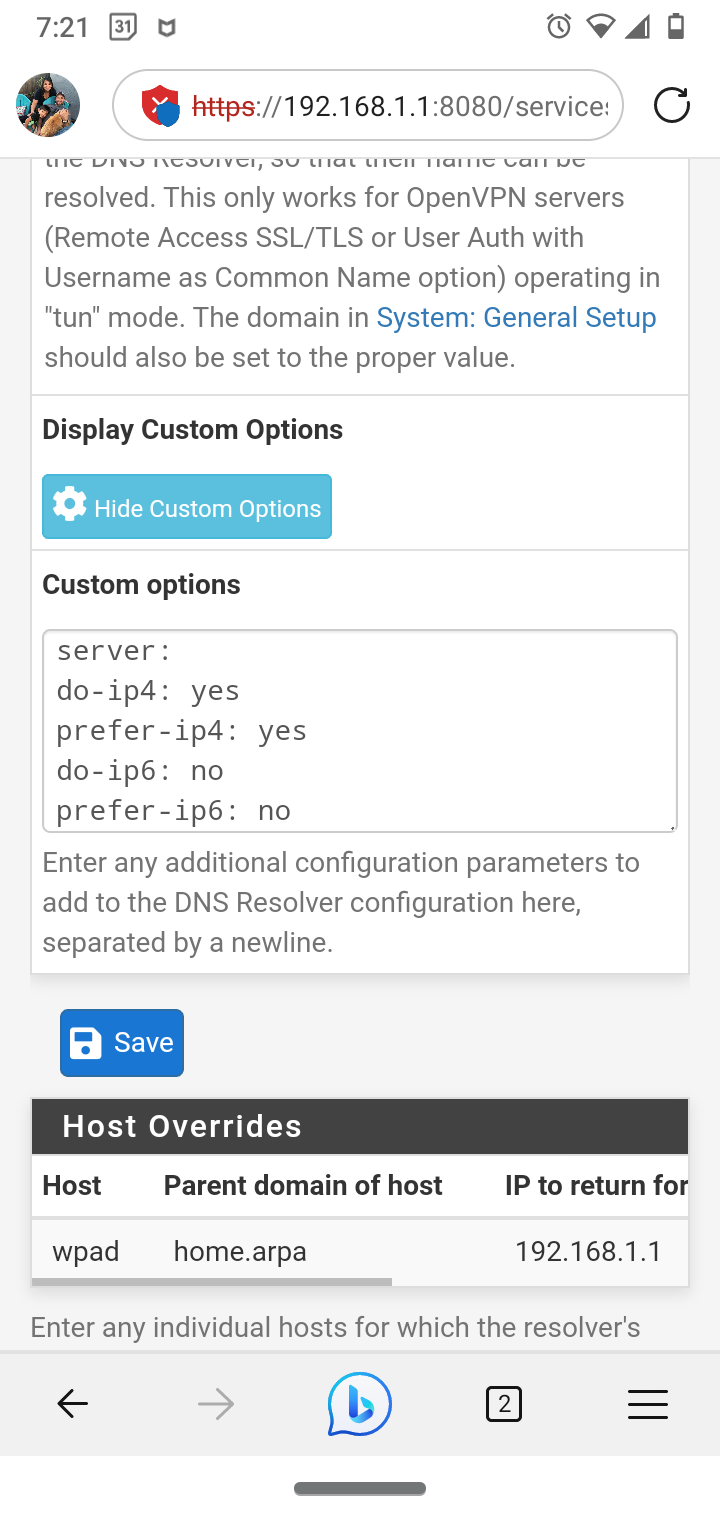

(Unbound custom options for no IPv6)

server:

do-ip4: yes

prefer-ip4: yes

do-ip6: no

prefer-ip6: no

private-address: ::/0

dns64-ignore-aaaa: .

do-not-query-address: ::

do-not-query-address: ::1

do-not-query-address: ::/0

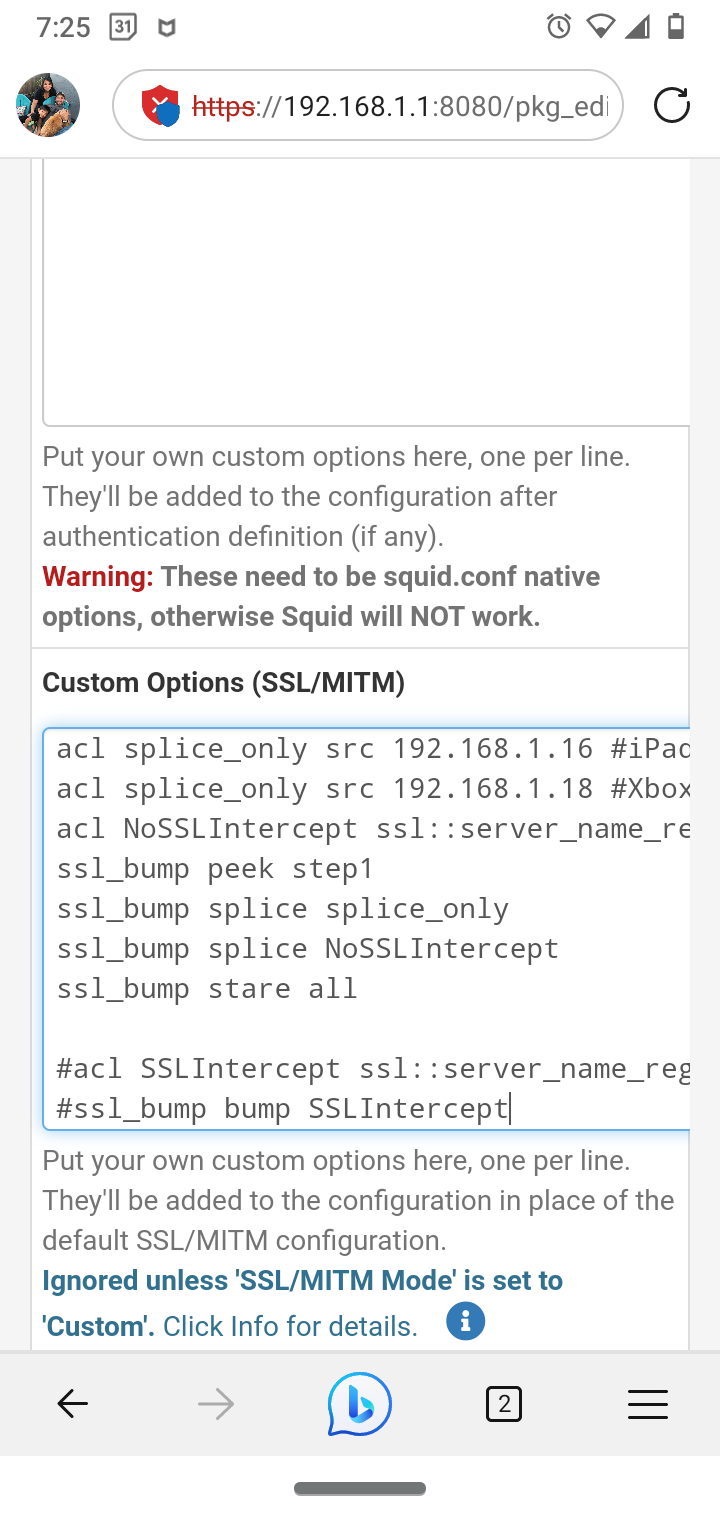

(Squid set to custom)

acl splice_only src 192.168.1.7 #Jon Android

acl splice_only src 192.168.1.8 #Tasha Apple

acl splice_only src 192.168.1.11 #Amazon Fire

acl splice_only src 192.168.1.15 #Tasha HP

acl splice_only src 192.168.1.16 #iPad

acl splice_only src 192.168.1.18 #Xbox

acl NoSSLIntercept ssl::server_name_regex -i "/usr/local/pkg/url.nobump" #custom Regex splice url list file

ssl_bump peek step1 #peek

ssl_bump splice splice_only #splice devices

ssl_bump splice NoSSLIntercept #splice only url

ssl_bump stare all # stare all defaults to bump on step 3

#Commented out lines

#acl SSLIntercept ssl::server_name_regex -i "/usr/local/pkg/url.bump" #always bump list

#ssl_bump bump SSLIntercept

-

@JonathanLee

I saw your other message about force disabling IPv6.

I gave it a shot. It didn't seem to have much of an impact here. Maybe it did for some DNS records that have IPv6 addresses attached to them. But I have identified tons of DNS records randomly failing with 409 that don't resolve to any IPv6 address whatsoever.

This is an issue with short TTLs.

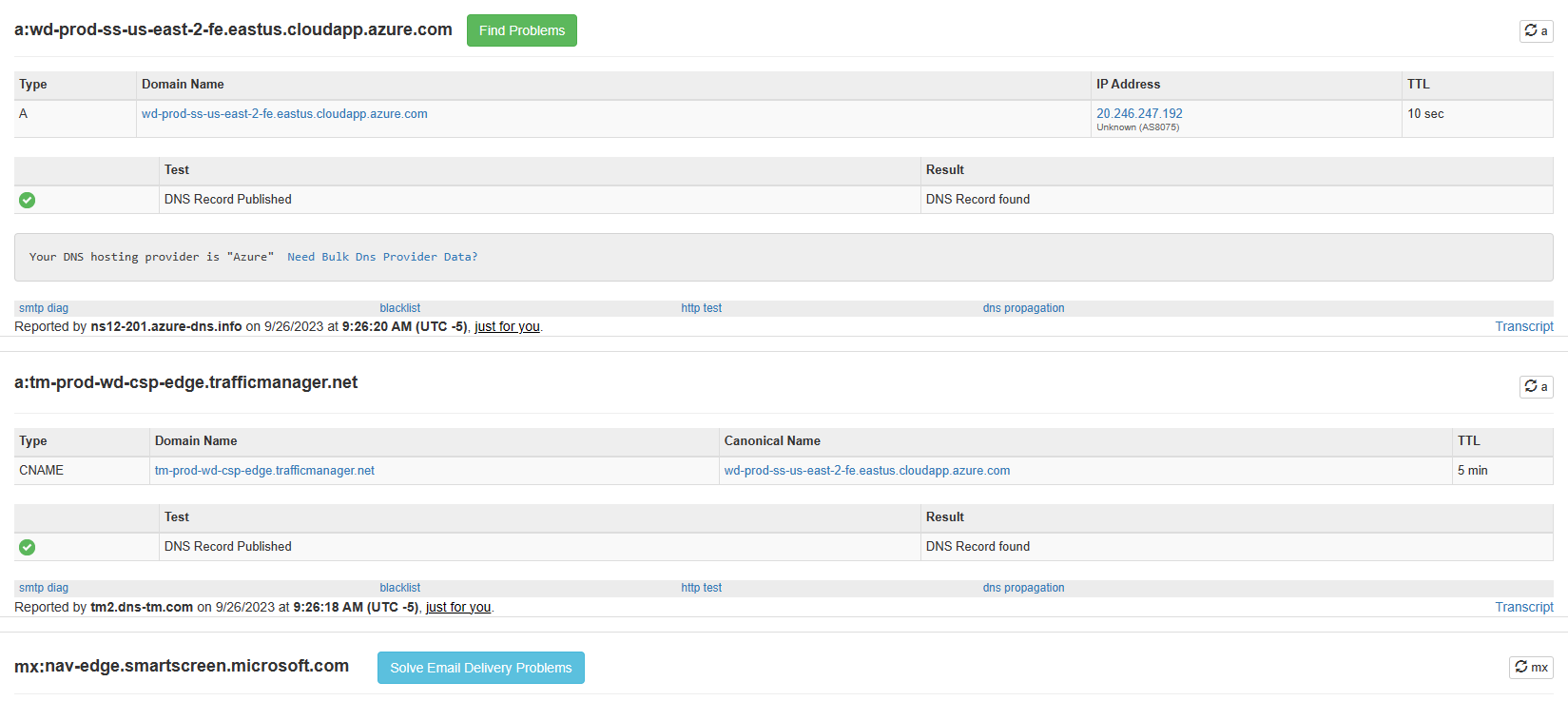

Consider this DNS record: nav-edge.smartscreen.microsoft.com. Edge can randomly query this for SmartScreen. The A record has a 10-second TTL.

No IPv6 address will be returned for this, ever. Yet I was logging a few 409s for this destination from time to time (until I made sure all traffic toward Azure Cloud would bypass Squid). All it takes is for the web browser to try to establish a connection to it with a given IP, and when Squid attempts the same resolution, it cannot confirm that this IP matches the destination domain.

Of course, I am making sure that no DNS query can "leak" to the Internet from my clients: NAT rule for UDP port 53, block on outbound 853, and blocklist for known DoH resolvers.

This is not an IPv6 related issue.

-

@vlurk I had some issues for Disney plus, I ended up making an alias for it so that the DNS entry was on hand already.

-

Has anyone attempted this?

http://www.squid-cache.org/Doc/config/dns_nameservers/

dns_nameservers "Pi-hole DNS and/or DNS address of whatever you require"

-

@JonathanLee I haven’t. Are you going to do it?

-

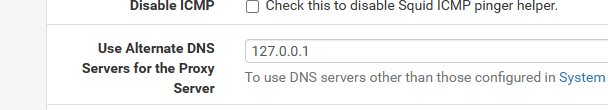

@JonathanLee Yes. You can configure this parameter from the GUI:

This field will set the dns_nameservers config directive in squid.conf.

I use 127.0.0.1 (Unbound), and all my clients point to the same DNS server.

-

@vlurk Oh yeah, that's already in the GUI.

What about...

client_persistent_connections on

client_persistent_connections off

"Squid uses persistent connections (when allowed). You can use this option to disable persistent connections with clients."

http://www.squid-cache.org/Doc/config/client_persistent_connections

Maybe this could help. I must have read every single Squid option to find something.

I also have certificate adaptation disabled. I leave the certificates as they are and have it set to not check. The other ways had issues for me for some reason.

I am just throwing ideas out now for testing. I had no idea squid has so many other configuration options.

http://www.squid-cache.org/Doc/config/sslproxy_cert_error/

I found that they have a conditional option for SSL certificate errors that works with ACLs, so it can be made domain specific.

http://www.squid-cache.org/Doc/config/happy_eyeballs_connect_gap/

http://www.squid-cache.org/Doc/config/happy_eyeballs_connect_timeout/

Maybe...

happy_eyeballs_connect_gap ??

Or

happy_eyeballs_connect_timeout

"Happy Eyeballs is an algorithm published by the IETF that makes dual-stack applications more responsive to users by attempting to connect using both IPv4 and IPv6 at the same time, thus minimizing common problems experienced by users with imperfect IPv6 connections or setups."

-

Updating this thread for everyone..

Uninstall Squid Proxy. As I long suspected from the lack of movement in any of the redmines, Netgate has decided to deprecate Squid Proxy.

I consider this a really good thing... But if there is a need to MITM something, I don't know of any open source alternatives, other than looking at other security vendors' firewalls, which have custom but supported proxy configurations.

https://www.netgate.com/blog/deprecation-of-squid-add-on-package-for-pfsense-software

-

@michmoor Like Lightbeam and many other tools that worked very well, it seems that soon after you can no longer use them.

I wonder what alternatives there are?

I for one will stay with 23.09 just to use Squid. Dang :( It's sad I spent years getting this to actually work.

-

What is the next official Netgate product that can be purchased and will continue to support a proxy with SSL intercept, now that this is being twilighted?

What version should I upgrade to for proxy caching abilities? I have an SG-2100 currently. Should users move to Palo Alto?