Web Configurator traffic is not being fragmented when it should be

-

Hmm, that's odd. I assume then that the pcap and commissioning setup is something you're quite familiar with. You would have noticed if, for example, it was reassembling packets?

How are you seeing that TCP packet at the switch if the link between it and pfSense is only 1500B?

Try sending some large pings. Do they fragment as expected?
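As a rough guide to what "fragment as expected" means for a large ping, here is a minimal sketch of the IPv4 arithmetic. It assumes a 20-byte IPv4 header with no options and an 8-byte ICMP echo header; the function names are made up for illustration.

```python
import math

IPV4_HEADER = 20  # bytes, assuming no IP options
ICMP_HEADER = 8   # bytes, ICMP echo request header


def max_unfragmented_ping_payload(mtu: int) -> int:
    """Largest ICMP data size that still fits in one IPv4 packet."""
    return mtu - IPV4_HEADER - ICMP_HEADER


def fragment_count(icmp_payload: int, mtu: int) -> int:
    """Number of IPv4 packets needed to carry one echo request."""
    ip_payload = ICMP_HEADER + icmp_payload
    if ip_payload <= mtu - IPV4_HEADER:
        return 1  # fits in a single packet, no fragmentation needed
    # Every fragment except the last must carry a multiple of 8 bytes.
    per_fragment = (mtu - IPV4_HEADER) // 8 * 8
    return math.ceil(ip_payload / per_fragment)


print(max_unfragmented_ping_payload(1500))  # 1472
print(fragment_count(1473, 1500))           # 2
print(fragment_count(8000, 1500))           # 6
```

So on a 1500-byte link, anything above 1472 bytes of ping data should show up as multiple fragments in a capture taken on the wire.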

Try running a pcap in pfSense on that VLAN at the same time. Packet fragments will show there if that's what's happening.

Steve

-

@stephenw10 in the past I have seen where you could see stuff like that depending on where you're sniffing, i.e. before it was handed off to the NIC and the NIC did the fragmentation.. But sniffing on a SPAN port, where it's already on the wire, is odd.. Unless something in Wireshark is reassembling stuff that has been fragmented to show it as the original..

Very odd indeed.

-

Exactly. I could imagine Wireshark just showing the size of the TCP segment there, except that it specifically shows bytes on the wire.....

-

Thanks both for your comments so far. We've actually managed to fix the issue, sort of. But I still don't fully understand what was going on, so I'm concerned it's going to bite me in the ass in production!

I've learned a bit since I last posted. Although the MTU is 1500 on all interfaces in pfSense, the MTU is actually 9000 (jumbo frames enabled) on the FS switch.

On the Palo Alto firewall however, jumbo frames are not enabled, and the MTU is 1500.

In this network it was configured such that some VLANs (networks that didn't require internet access) had their Layer 3 gateway in the FS switch.

The other VLANs (the ones that do require internet access) had their Layer 3 gateway all the way up in the Palo Alto firewall.

The VLAN we were trying to connect to the Web Configurator on had its Layer 3 gateway in the FS switch. We moved the gateway up to the Palo Alto, and hey presto! It 'fixed' the issue!

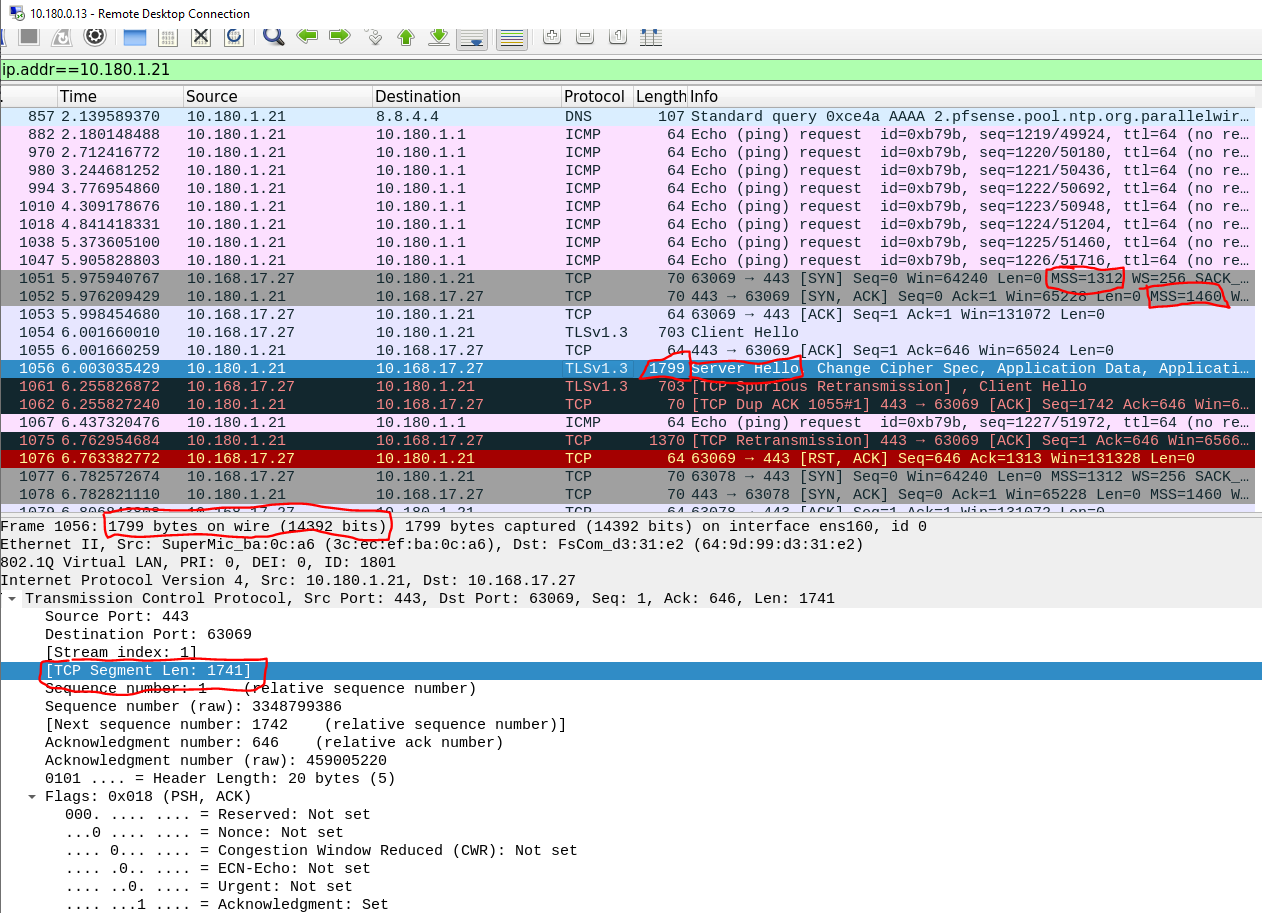

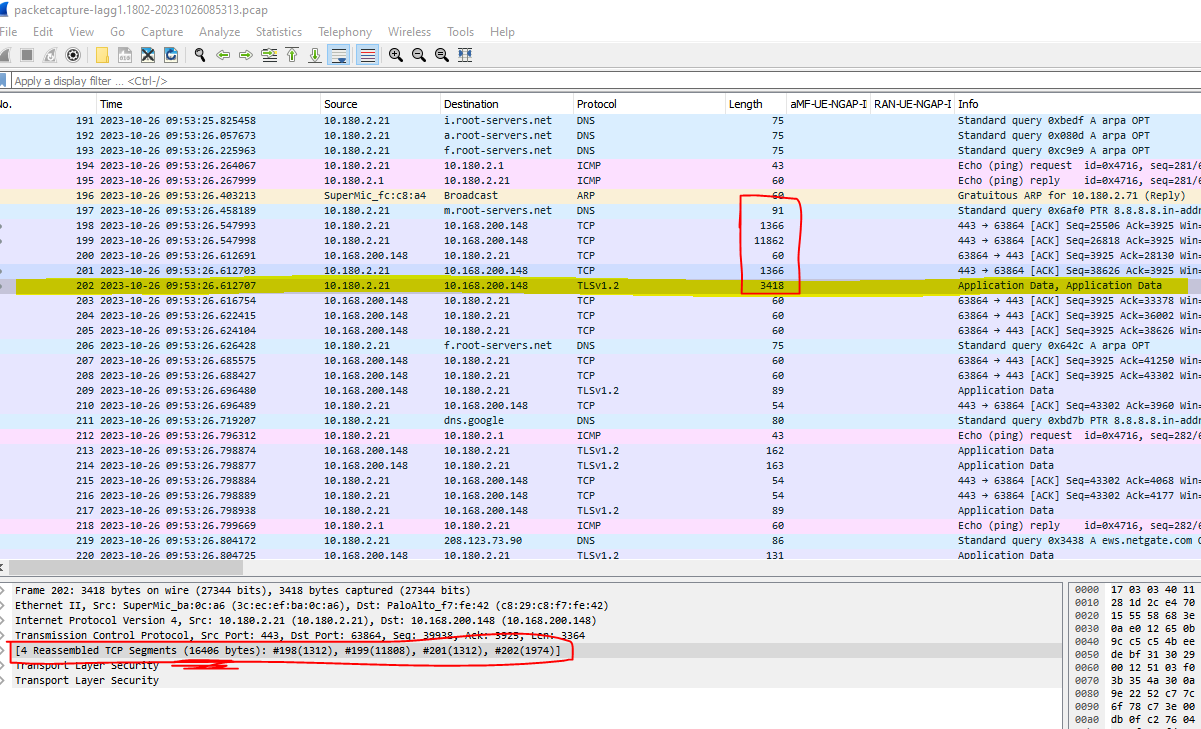

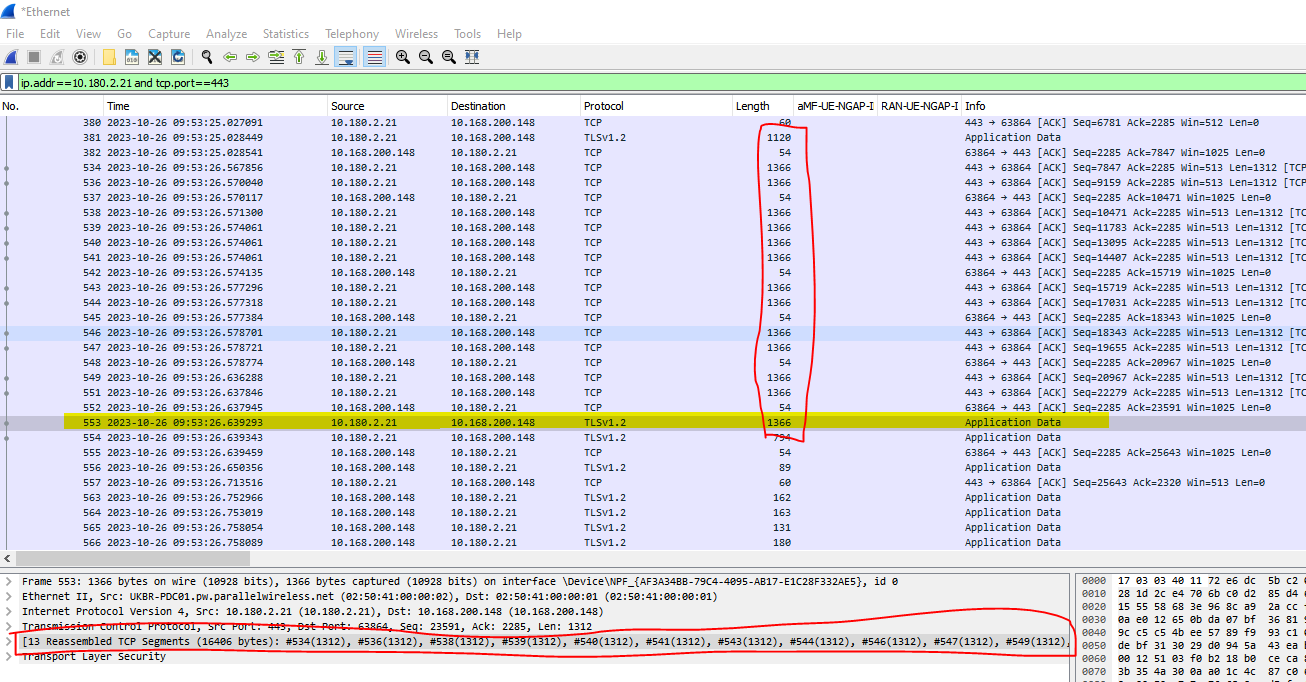

Now, if I look at our SPAN port on the FS switch, as before, and if I do a 'packet capture' from pfSense (using the diagnostics menu, which just uses tcpdump), then it shows the massive frames as shown above, BUT if I look at a packet capture on my laptop Ethernet adapter, it shows the packet has been segmented! Not fragmented at IP layer, but actually segmented into multiple packets:

This is the packet capture from the pfSense itself:

And this is the same packet, captured on my Ethernet adapter:

Conclusion - looks like the Palo Alto is segmenting the over-sized TCP segment before sending it on, rather than dropping it. When the FS was the gateway it had no such cleverness, and so the packet was dropped by the first layer 3 interface it got to.

So while I have found a work-around, it still seems there is a bug in pfSense which means it is disregarding the interface MTU when sending packets for its own web configurator.

If you guys agree with my analysis, then I'll raise a bug.

Looks like at least one other person has experienced the same issue:

https://www.reddit.com/r/PFSENSE/comments/kw6bz8/cant_access_pfsense_gui_configuator_page_from_a/

-

Still seems odd that it shows those frames as larger than 1500B when the connection is working. That one frame is over 9000, I wouldn't expect that to pass anywhere.

When you captured it in pfSense itself do they show as that large in the tcpdump output?

It sounds like you have devices with different MTU values in the same L2 segment which is always asking for trouble IMO.

Steve

-

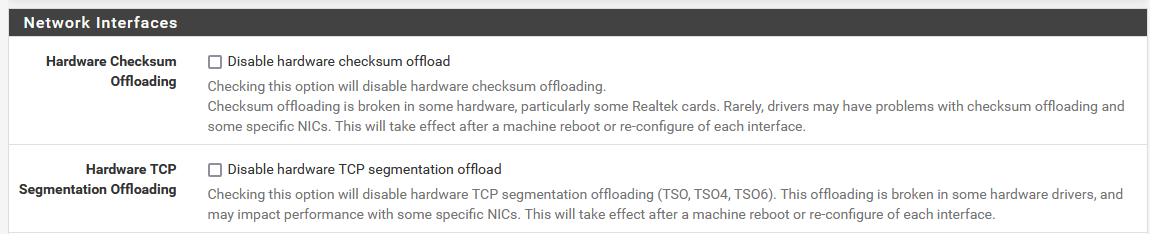

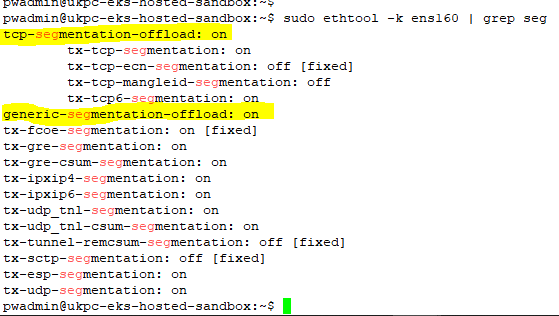

@stephenw10 OK, I really have definitely figured this out this time!! This is all to do with TCP segmentation offload in the NIC. It doesn't look like a bug in pfSense, although I still can't figure out why moving the L3 gateway to the firewall has fixed the issue for me. That may be more to do with the VLAN tag, but still an unknown, and one for later.

Basically, what's happening is that in Wireshark we are seeing the packet as it is when the kernel hands it off to the hardware. With tcp-segmentation-offload: on, this means we see the whole application layer PDU, in all its massiveness, unsegmented. Once the hardware has the packet, it performs the TCP segmentation of the application layer PDU (plus any other hardware functions that have been enabled, like checksum calculation) and sends the multiple segments out on the wire.
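To make that concrete, here is a toy sketch of the split that the NIC performs. It only models the payload slicing, assumes 20-byte IPv4 and TCP headers with no options (real connections with TCP timestamps would have a smaller MSS), and the function name is made up for illustration.

```python
IPV4_HEADER = 20  # bytes, assuming no IP options
TCP_HEADER = 20   # bytes, assuming no TCP options


def tso_segment(payload: bytes, mtu: int = 1500) -> list[bytes]:
    """Slice one oversized TCP payload into MSS-sized pieces for the wire."""
    mss = mtu - IPV4_HEADER - TCP_HEADER
    return [payload[i:i + mss] for i in range(0, len(payload), mss)]


# What the kernel (and a capture taken on the sending host) sees: one 9000-byte blob.
big = bytes(9000)

# What actually leaves the NIC with a 1500-byte MTU.
segments = tso_segment(big)
print(len(segments), [len(s) for s in segments])
```

With these assumptions the 9000-byte payload leaves as six 1460-byte segments plus one 240-byte segment, none of which exceeds the interface MTU once headers are added.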

Generally, higher-end NICs have lots of functions that can be offloaded to the hardware, but lower-end NICs, like the one you would get in a laptop, don't.

Both the pfSense FW, and the Sandbox server, have higher-end NICs. The pfSense has an Intel X710 2x10G NIC, and the Sandbox server on which I was doing the snooping, has an Intel X520.

I checked and, sure enough, both have offloading enabled!

Here's the setting in pfSense Advanced settings:

And here's the ethtool output from our Sandbox server:

So, in summary - The packets on the wire are compliant with the interface MTU for the network (i.e. 1500 bytes) - I just can't see them, as I'm snooping what the kernel sees, rather than what the hardware is outputting.
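For anyone wanting to script the same check on a Linux capture host, here is a minimal sketch that parses `ethtool -k` style text and reports which segmentation or receive offloads are on. The sample text is illustrative only, not the actual output from the Sandbox server, and the helper names are made up.

```python
# Offloads that can make host-side captures show packets larger than the MTU.
SIZE_AFFECTING = (
    "tcp-segmentation-offload",
    "generic-segmentation-offload",
    "generic-receive-offload",
    "large-receive-offload",
)


def parse_ethtool_features(text: str) -> dict[str, bool]:
    """Map feature name -> enabled, from `ethtool -k <iface>` style output."""
    features = {}
    for line in text.splitlines():
        name, sep, value = line.strip().partition(":")
        if not sep or not value.strip():
            continue  # skips the "Features for ...:" header and blank lines
        state = value.split()[0]  # drops trailing markers such as "[fixed]"
        if state in ("on", "off"):
            features[name.strip()] = state == "on"
    return features


def enabled_size_offloads(features: dict[str, bool]) -> list[str]:
    """Names of size-affecting offloads that are currently on."""
    return [name for name in SIZE_AFFECTING if features.get(name)]


# Illustrative sample, not taken from the thread.
SAMPLE = """\
Features for eth0:
rx-checksumming: on
tx-checksumming: on
tcp-segmentation-offload: on
generic-segmentation-offload: on
generic-receive-offload: on
large-receive-offload: off [fixed]
"""

print(enabled_size_offloads(parse_ethtool_features(SAMPLE)))
```

If that list is non-empty, a capture taken on that host may not reflect the frame sizes actually on the wire.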

Sorry to have wasted time with a non-issue, but I certainly learned something along the way anyhow!

-

@othomas said in Web Configurator traffic is not being fragmented when it should be:

rather than what the hardware is outputting.

@johnpoz said in Web Configurator traffic is not being fragmented when it should be:

where you're sniffing before it was handed off to the nic and the nic did the fragmentation

Just saying ;) heheheh

BTW - IMHO, jumbo frames have very little use in a normal network - they can make sense in a specific SAN network, etc.. where you're moving large files between a specific device and its storage..

@stephenw10 said in Web Configurator traffic is not being fragmented when it should be:

you have devices with different MTU values in the same L2 segment which is always asking for trouble IMO.

Completely in agreement with Steve's statement here..

-

Ah, nice catch! Yeah that's disabled by default in pfSense. We hardly ever see it.

-

@stephenw10 said in Web Configurator traffic is not being fragmented when it should be:

Sounds like you have devices with different MTU values in the same L2 segment which is always asking for trouble IMO.

Slightly surprised at this as down at L2 is really the only place I would expect to accommodate jumbo frames in a mixed-traffic environment.

With the rise of 10 GbE, clients running 9k frames are more normalised and, on some systems, specifically called for. I have both servers and clients using 10 GbE and 9k frames on a subnet that also hosts devices at 1 GbE and below with regular 1500 MTU packets. Never expected an issue, never experienced an issue to date, including with pfSense routing them across subnets or VLANs.

(I have seen hiccups with 'L3' switches though but that's kinda out-of-scope here.)

-

Well it could be that I only ever see problems.

But I have worked many tickets with users blaming pfSense for blocking traffic that never even went through pfSense and turned out to be an MTU mismatch between the clients. Maybe just cynical but I would avoid mixing MTUs if possible.

-

@stephenw10 said in Web Configurator traffic is not being fragmented when it should be:

Well it could be that I only ever see problems

It's not just you.. Devices on the same L2 with different MTUs are going to be problematic, that is for sure.. If you have devices that never need to talk to each other, then you probably won't see a problem.

If they are on different networks and go through a router, then the L3 should fix it up for you..

-

@stephenw10 said in Web Configurator traffic is not being fragmented when it should be:

Well it could be that I only ever see problems.

Yeah and medics only meet the sick & wounded. Who knew?

My home network above is not massive, almost fits on a page, even with working-from-home stuff. Typically there are fewer than 50 devices and often far fewer than that; but there is a mix of old and new, IoT to servers, UPS to NTP, Windows (ugh) to 'nix, protocols to proprietary, NASes to thermostats and all working perfectly at their preferred MTUs.

Thankfully my Netgate device joined this melee without a murmur or a dropped packet. No complaints from this direction, even at L2.

-

Just saying ;) heheheh

Yeh fair comment, need to read replies better!

On MTU, my experience is that any change from the default 1500 causes major headaches, especially when you work for a service provider network (i.e. a telco network) and you're carrying user data, which always originated on a device with a 1500 MTU.

The fun really starts when you start wrapping that data in lots of tunnels!

In one case in my last company we had user data TCP/IP over IPSEC, over IPSEC (again), then over GTP-U. We had to do MSS clamping down to 1280 bytes.

-

@othomas said in Web Configurator traffic is not being fragmented when it should be:

User data TCP/IP over IPSEC, over IPSEC (again), then over GTP-U. We had to do MSS clamping down to 1280 bytes.

ugghh - yeah that isn't fun..
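For the stacked-tunnel case quoted above, the clamping arithmetic can be sketched as below. The overhead figures are placeholders: real ESP overhead depends on cipher, padding and NAT-T, so the 60-byte values are assumptions, while 36 bytes for GTP-U assumes an outer IPv4 header (20), UDP (8) and the minimum GTP-U header (8). This is not a reconstruction of how the 1280 figure was chosen.

```python
IPV4_HEADER = 20  # bytes, inner packet, assuming no IP options
TCP_HEADER = 20   # bytes, inner packet, assuming no TCP options


def clamped_mss(path_mtu: int, tunnel_overheads: list[int]) -> int:
    """Largest TCP MSS that still fits after every encapsulation layer."""
    return path_mtu - sum(tunnel_overheads) - IPV4_HEADER - TCP_HEADER


# Hypothetical per-layer overheads in bytes (see the assumptions above).
overheads = [
    60,  # IPsec ESP tunnel, inner (placeholder value)
    60,  # IPsec ESP tunnel, outer (placeholder value)
    36,  # GTP-U over UDP over IPv4
]

print(clamped_mss(1500, overheads))  # 1304
print(clamped_mss(1500, []))         # 1460
```

Clamping a little below the computed value leaves headroom for overhead that varies per packet.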