10GB link but only 1GB speeds

-

No I wouldn't expect to need those.

If you're hitting the rate limit issue it should be pretty obvious once you apply that first loader tunable.

-

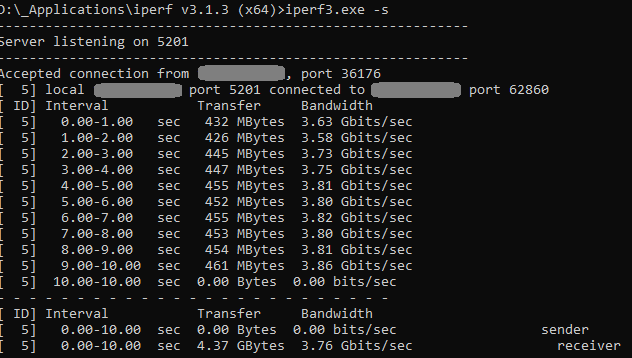

It looks much better... it went from 1Gb to 3Gb, but that is still far from ~7.6Gb.

Is there any other optimisation that I can do?

-

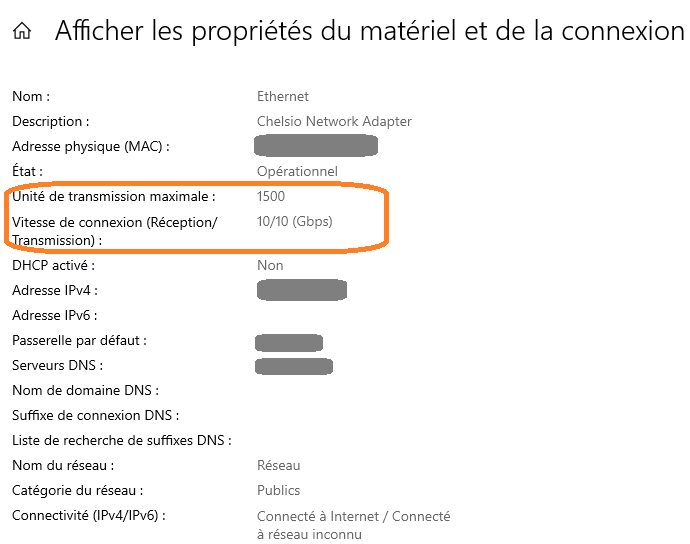

How are you testing that exactly? That looks like a single stream, so one CPU core. What is running the iperf client?

-

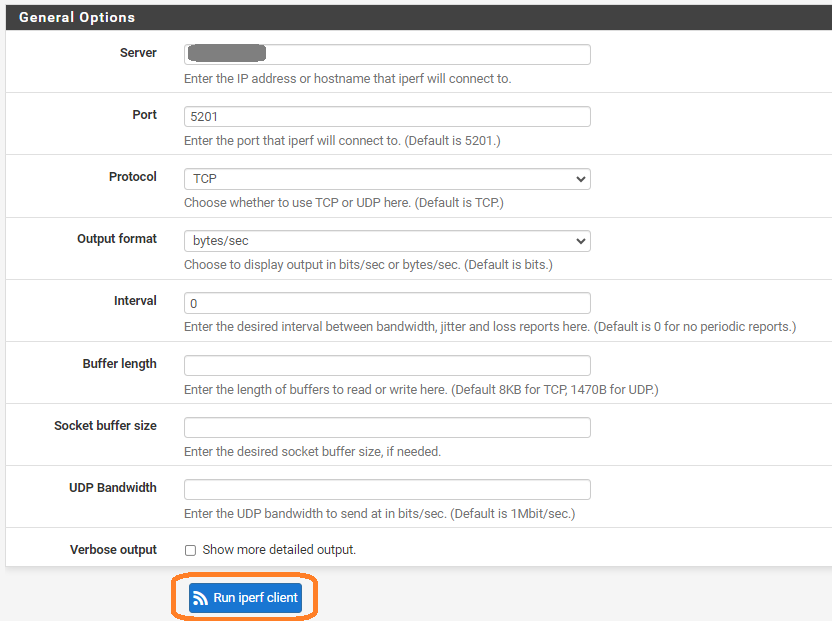

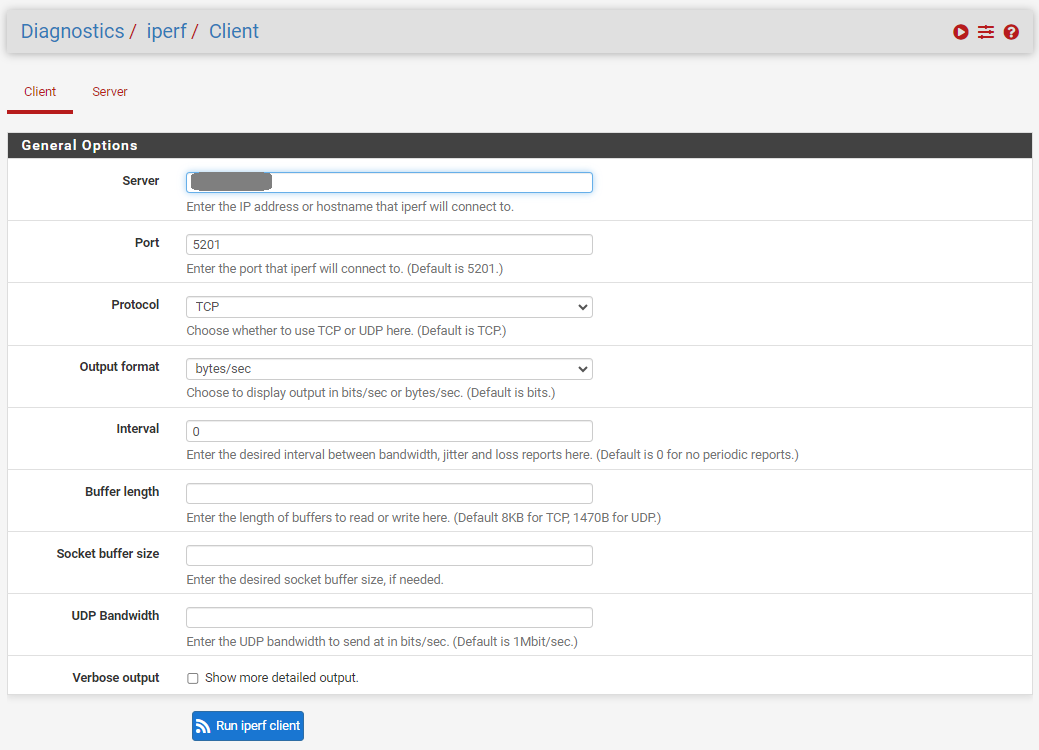

@stephenw10 Exactly the same as before.

pfSense as client, a workstation as server.

-

Ok try testing between two interfaces not to/from pfSense directly. pfSense is a bad server!

-

@stephenw10 said in 10GB link but only 1GB speeds:

Ok try testing between two interfaces not to/from pfSense directly. pfSense is a bad server!

The reason I was doing the test was to get the best bandwidth with my ISP, which offers a 10Gb connection (~8Gb max in reality).

So I need to understand and improve the speed between pfSense and my local network if I'm going to be able to match the speed of my ISP... because with only 3Gb, I'm way off the mark. What could be the problem in pfSense that I can still improve? Unless it's the card and its driver that aren't optimized enough to reach this target!

The change that we made also changed my bandwidth with my ISP... I now have a 3Gb downstream rate.

-

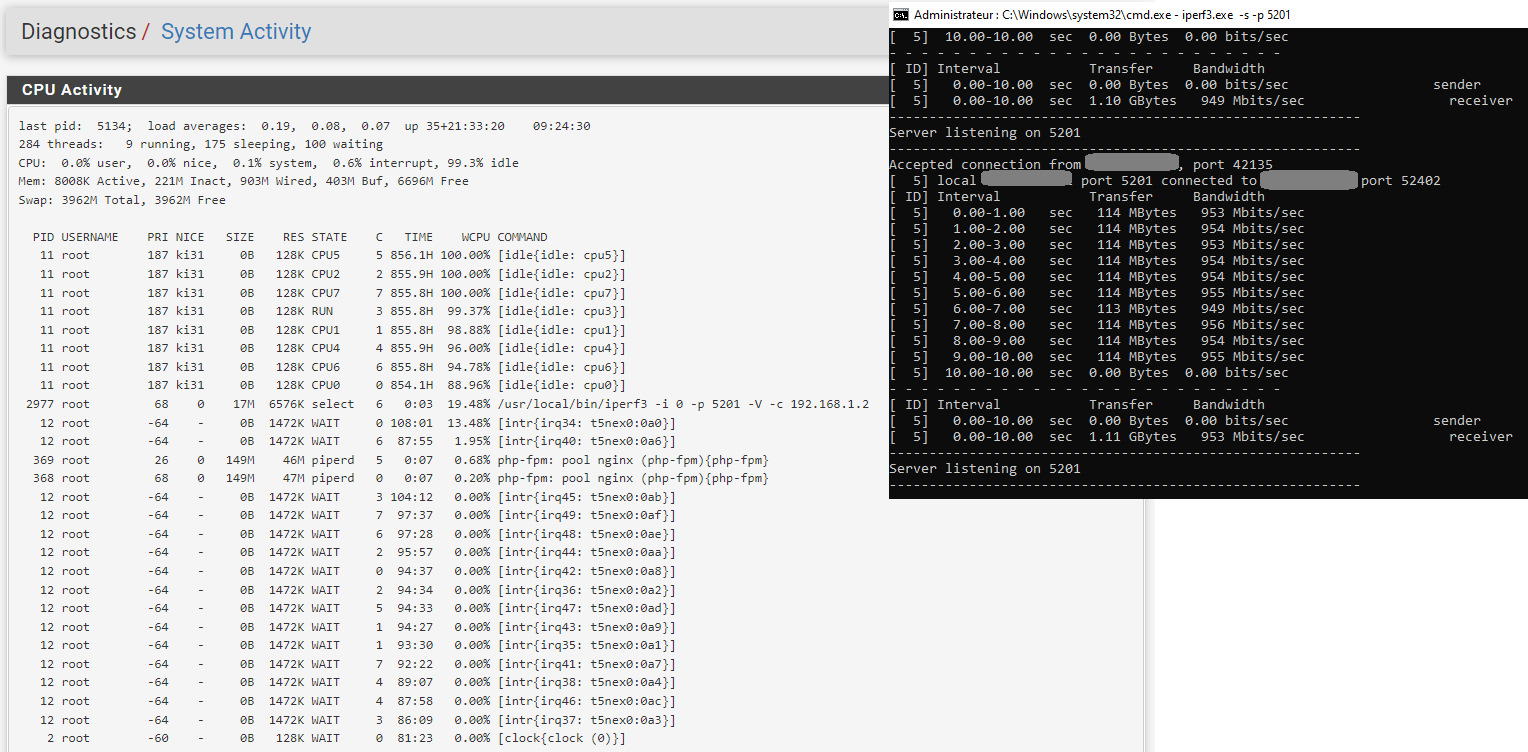

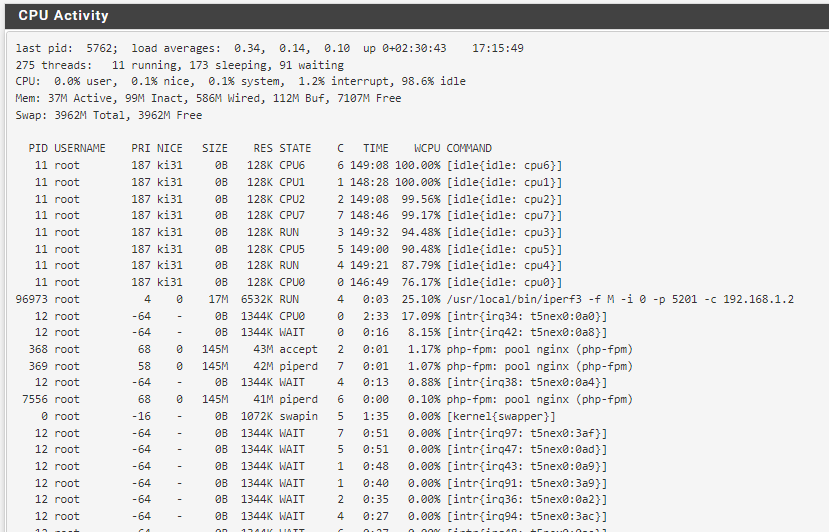

First check the per-core CPU usage whilst testing, either in Diag > System Activity or at the CLI using:

top -HaSP

-

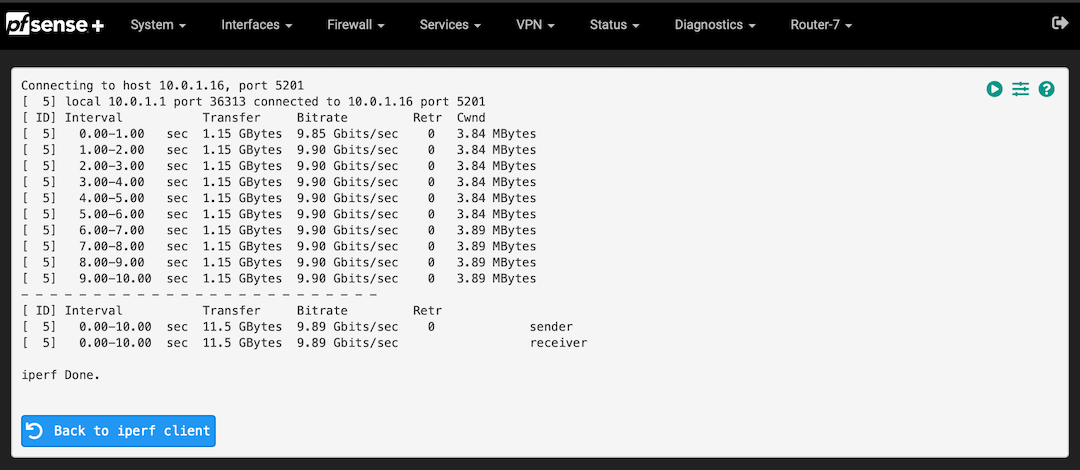

Hmm, nothing filling a core there. But you can see iperf itself is the largest consumer.

What about at the other end?

What can you pass if you run multiple streams in iperf?

Or with multiple iperf instances?
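To try both of those, something along these lines should work. This is only a sketch: 192.0.2.10 stands in for the machine running the iperf3 server, the function names are made up for illustration, and it assumes iperf3 on both ends with ports 5201 upward free on the server.

```shell
# Assumptions: iperf3 is installed on both ends and the server address
# passed in (e.g. the placeholder 192.0.2.10) is reachable.

# One client process, several parallel TCP streams (-P), for a fixed time (-t).
# Usage: iperf_streams SERVER [STREAMS] [SECONDS]
iperf_streams() {
    server=$1
    streams=${2:-4}
    secs=${3:-30}
    iperf3 -c "$server" -P "$streams" -t "$secs"
}

# Several separate client processes, one per port starting at 5201.
# The server needs a matching listener per port, e.g. `iperf3 -s -p 5201`.
# Usage: iperf_instances SERVER [COUNT] [SECONDS]
iperf_instances() {
    server=$1
    count=${2:-4}
    secs=${3:-30}
    i=0
    while [ "$i" -lt "$count" ]; do
        iperf3 -c "$server" -p $((5201 + i)) -t "$secs" &
        i=$((i + 1))
    done
    wait    # block until every background client has finished
}
```

For example, `iperf_streams 192.0.2.10 8` runs eight streams from one process, while `iperf_instances 192.0.2.10 4` runs four processes and needs four listeners on the server (`iperf3 -s -p 5201` through `-p 5204`). The second form is worth trying because, as far as I know, iperf3 releases before 3.16 service all -P streams from a single thread, so they can still be limited by one core.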

-

@stephenw10 said in 10GB link but only 1GB speeds:

Ok try testing between two interfaces not to/from pfSense directly. pfSense is a bad server!

Hi Steve,

May I ask about this and why pfSense is a bad server?

I can understand hardware limitations holding a system back, and these are pretty common with the low-power CPUs in router / firewall devices, but I'm unsure why pfSense or BSD itself would be a barrier.

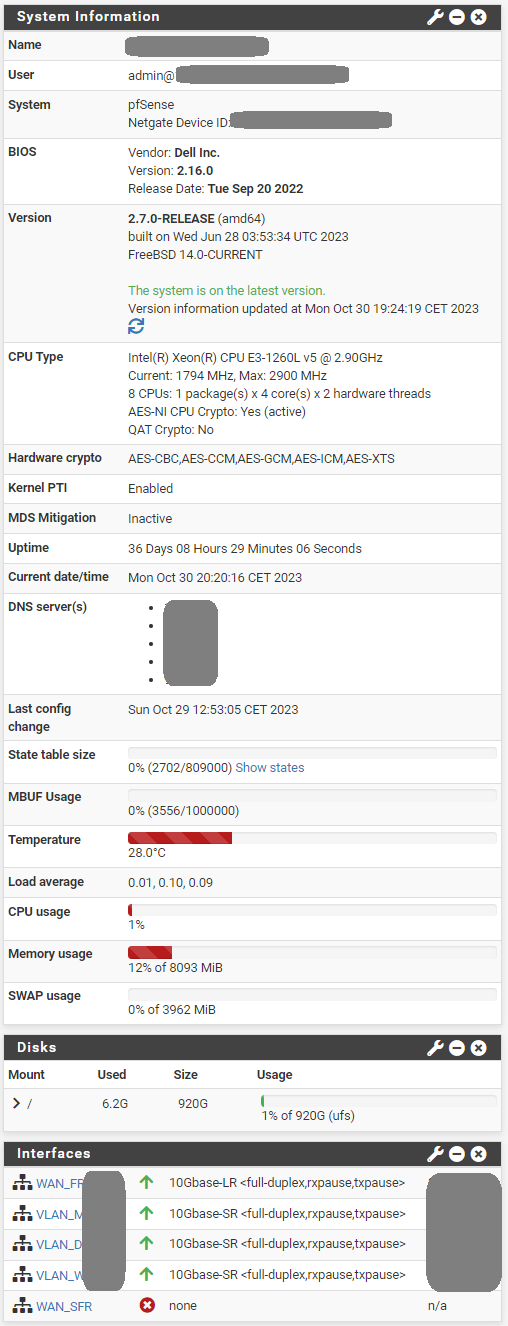

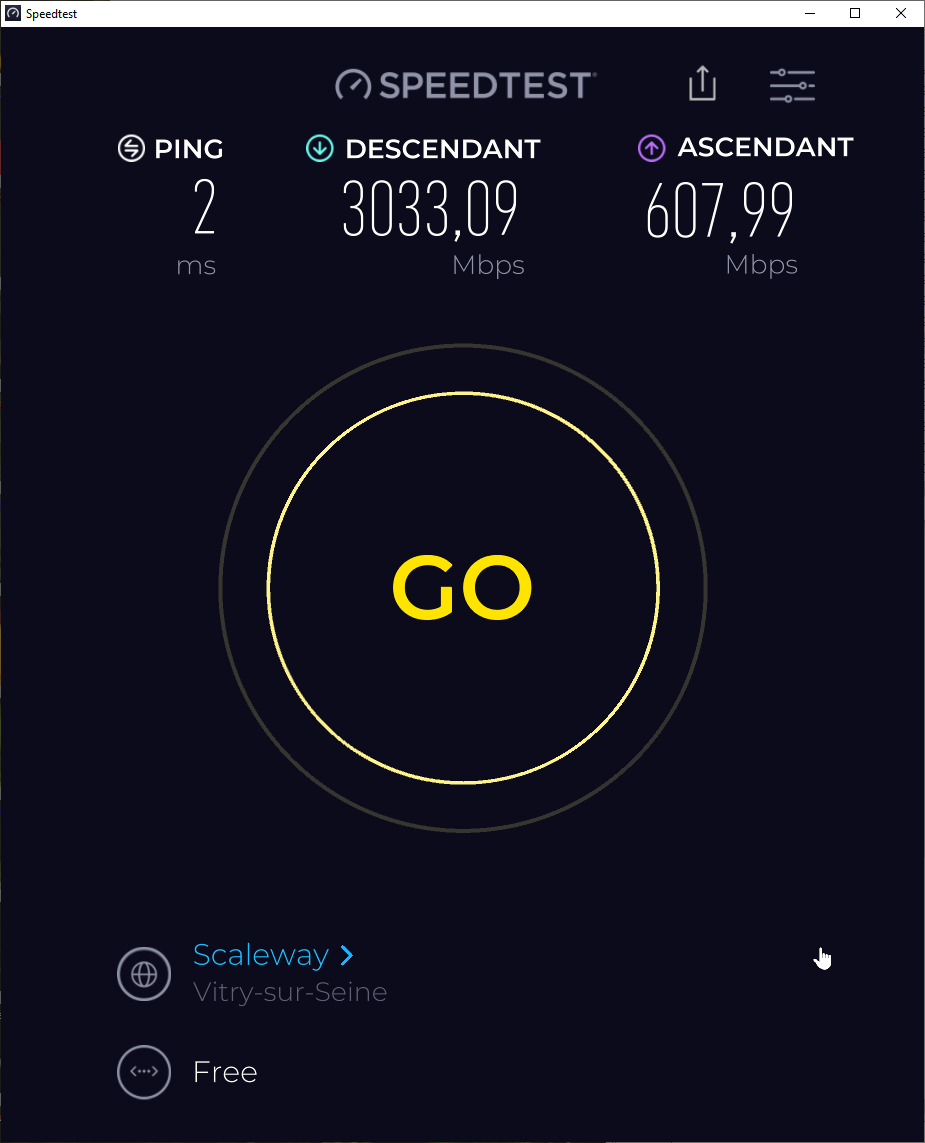

I don't run anything above 10 GbE (i.e. SFP+ being the limiting factor) but I don't appear to have any issues with iPerf traffic beyond the physical interface limits, even when using the GUI version and with the link handling other concurrent traffic:

The actual limits for production routing sit elsewhere (traffic mix, encryption, ACLs, firewall, states, VLANs or (in my case) PPPoE). Simple iPerf testing seems trivial, if both ends have the guts to process the packets.

-

That's on the Ice Lake Xeon box you have?

Mostly it's bad because a bunch of TCP tuning stuff that you would want on a server only hurts on a router where TCP connections are not terminated. The TCP hardware offloading options make quite a big difference.
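To see which offloads are currently active on a NIC, the options line from ifconfig is enough. A small sketch, assuming a FreeBSD/pfSense shell; `show_offload` is a made-up helper name and `ix0` in the usage below is only a placeholder interface name.

```shell
# Print the capability flags currently enabled on an interface.
# Offloads such as TSO4, TSO6 and LRO show up in this list when active.
# Usage: show_offload INTERFACE
show_offload() {
    ifconfig "$1" | grep -o 'options=[^ ]*'
}
```

Running `show_offload ix0` should print the `options=...` line for that interface. In pfSense the offloads themselves are normally controlled by the checkboxes under System > Advanced > Networking rather than set by hand.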

For many devices the iperf process itself uses significant CPU cycles that could otherwise be routing packets. You often see much higher throughput values testing from a client behind it on low core count appliances.

Steve

-

@stephenw10 said in 10GB link but only 1GB speeds:

That's on the Ice Lake Xeon box you have?

It is the Ice Lake Xeon-D. Thanks for the explanation; the usual disabling of NIC offload functions hadn't occurred to me. Otherwise it is a hardware issue rather than a pf/BSD limitation, if I understand you correctly?

Having come from MIPS routers I understand that running iPerf from a device, rather than through it, can be practically impossible!

-

There are some other tunables in the network stack that are set for better routing at the expense of terminated connections. I've occasionally spent time tweaking them but the pfSense defaults are pretty good for most firewall type scenarios. If you are using pfSense as, say, a platform for HAProxy or a VPN concentrator there may be some improvement possible.

-