Intermittent Drop on LAN

-

@stephenw10 I wasn't able to see that from the pfSense dashboard when we lose packets. But I'm using PRTG and LibreNMS, and the CPU (4 cores) never shows above 50%. How about I configure traffic shaping on that LAN interface? I also notice on my NetFlow analyzer that the packet loss happens when the volume goes above 1Gb.

Any help there will be really appreciated

-

Without those tuning options vmxnet will only use one queue (one CPU core) so you might be hitting 100% on one core and see 50% total.

To know for sure you need to run top-HaSP at the CLI whilst testing and seeing packet loss.

Check Status > Monitoring to see what pfSense logs at that point.

Traffic shaping could help prevent hitting a CPU limit. But it would still drop packets for some protocols.
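To illustrate how one saturated core can hide behind a modest total, here is a minimal Python sketch. The per-core figures and the 95% threshold are invented for illustration and are not measurements from this system.

```python
def summarize_cpu(per_core_percent, saturation_threshold=95.0):
    """Return the average load and the indexes of cores at or above the threshold."""
    average = sum(per_core_percent) / len(per_core_percent)
    saturated = [
        index
        for index, load in enumerate(per_core_percent)
        if load >= saturation_threshold
    ]
    return average, saturated


# Hypothetical sample: one core pegged by NIC interrupts, the rest lightly loaded.
sample = [100.0, 40.0, 30.0, 30.0]
average, saturated = summarize_cpu(sample)
print(f"average={average:.1f}% saturated_cores={saturated}")
```

A graph that only plots the average would show 50% here, even though core 0 has no headroom left. That is why a per-CPU view is the useful one while the loss is happening.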

-

@stephenw10 Thanks for the reply. My CPU graphs from LibreNMS and PRTG show the 4 CPUs as below when I see packet loss:

cpu0 max 36%

cpu1 max 36%

cpu2 max 59%

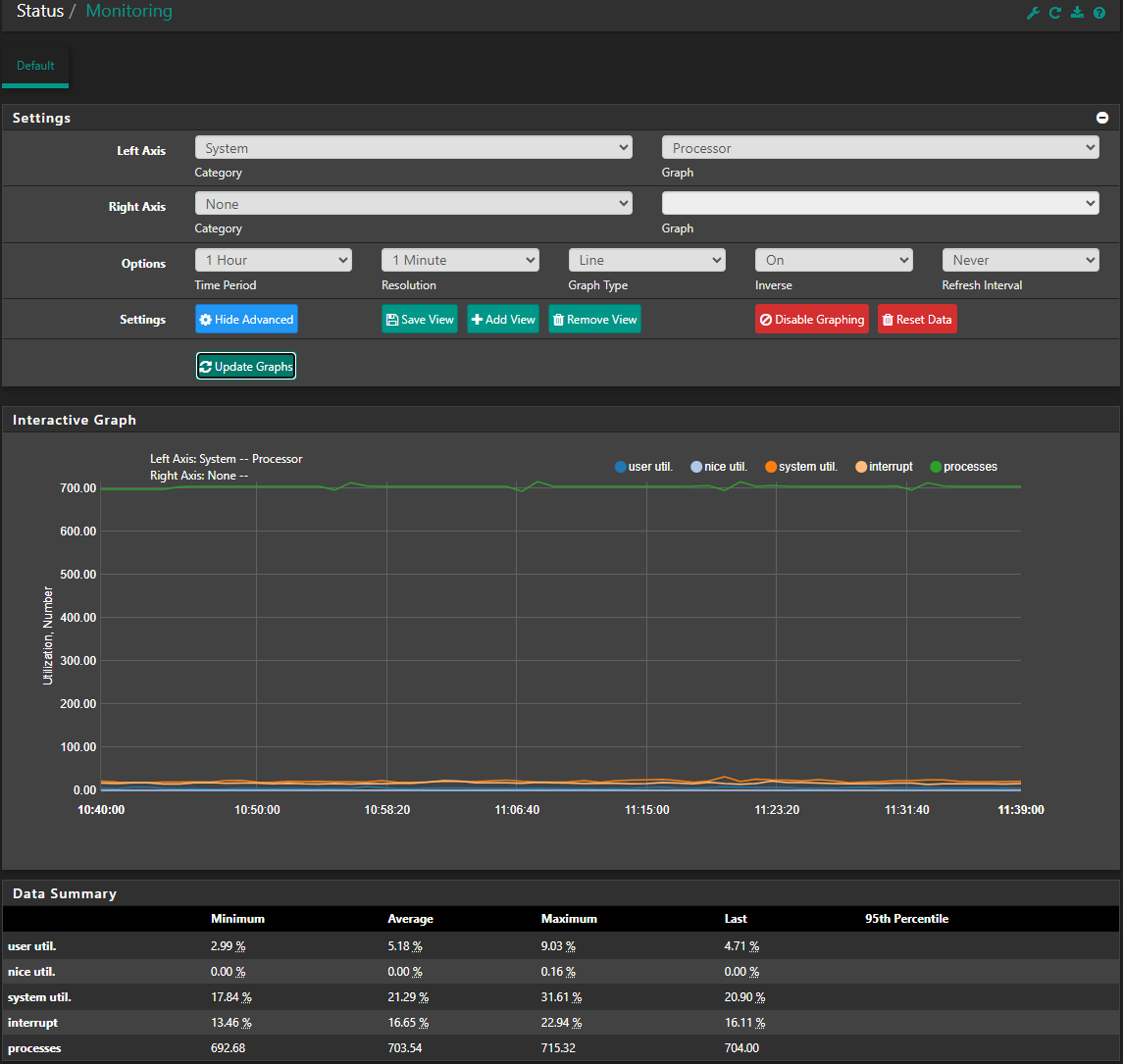

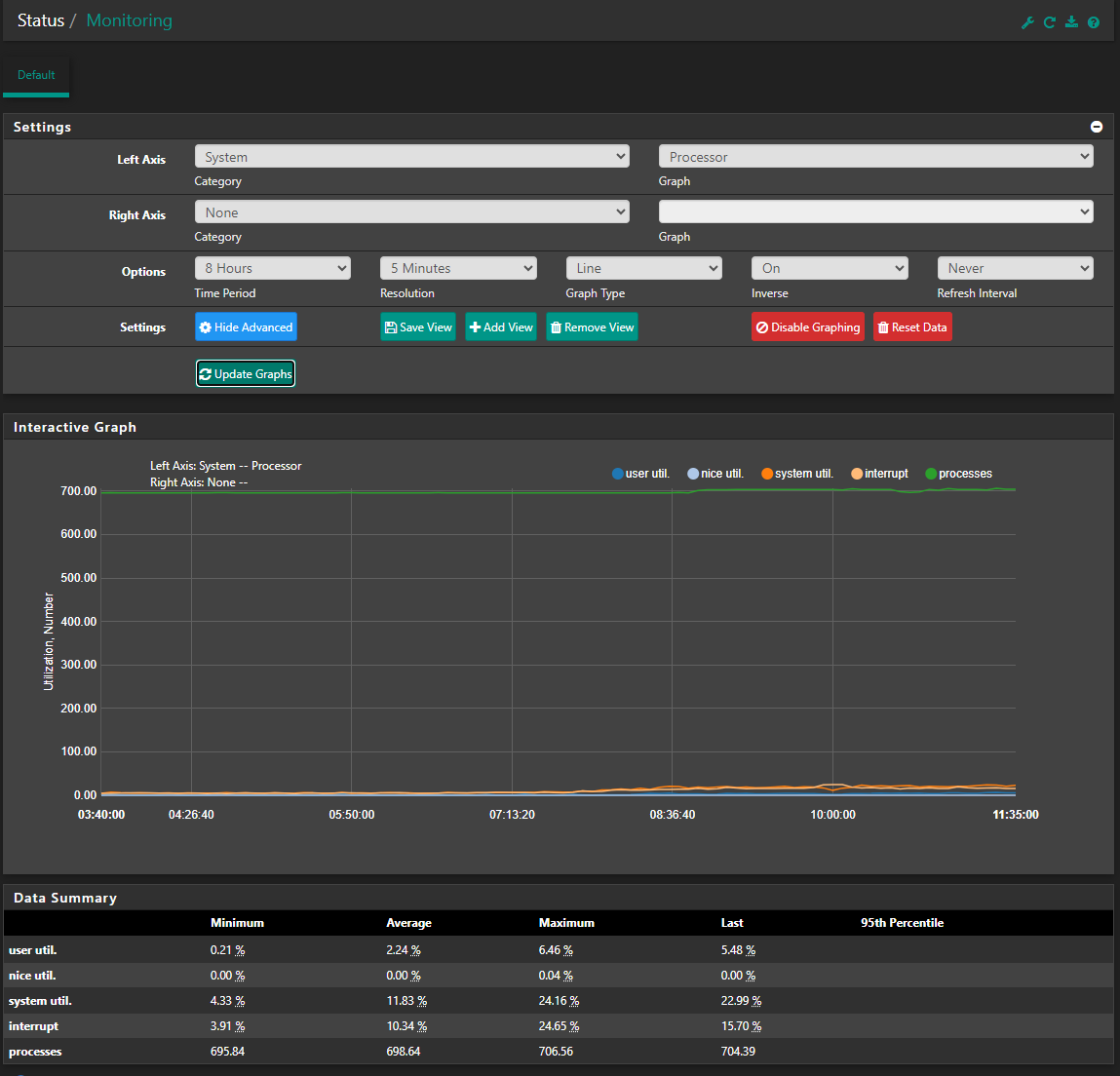

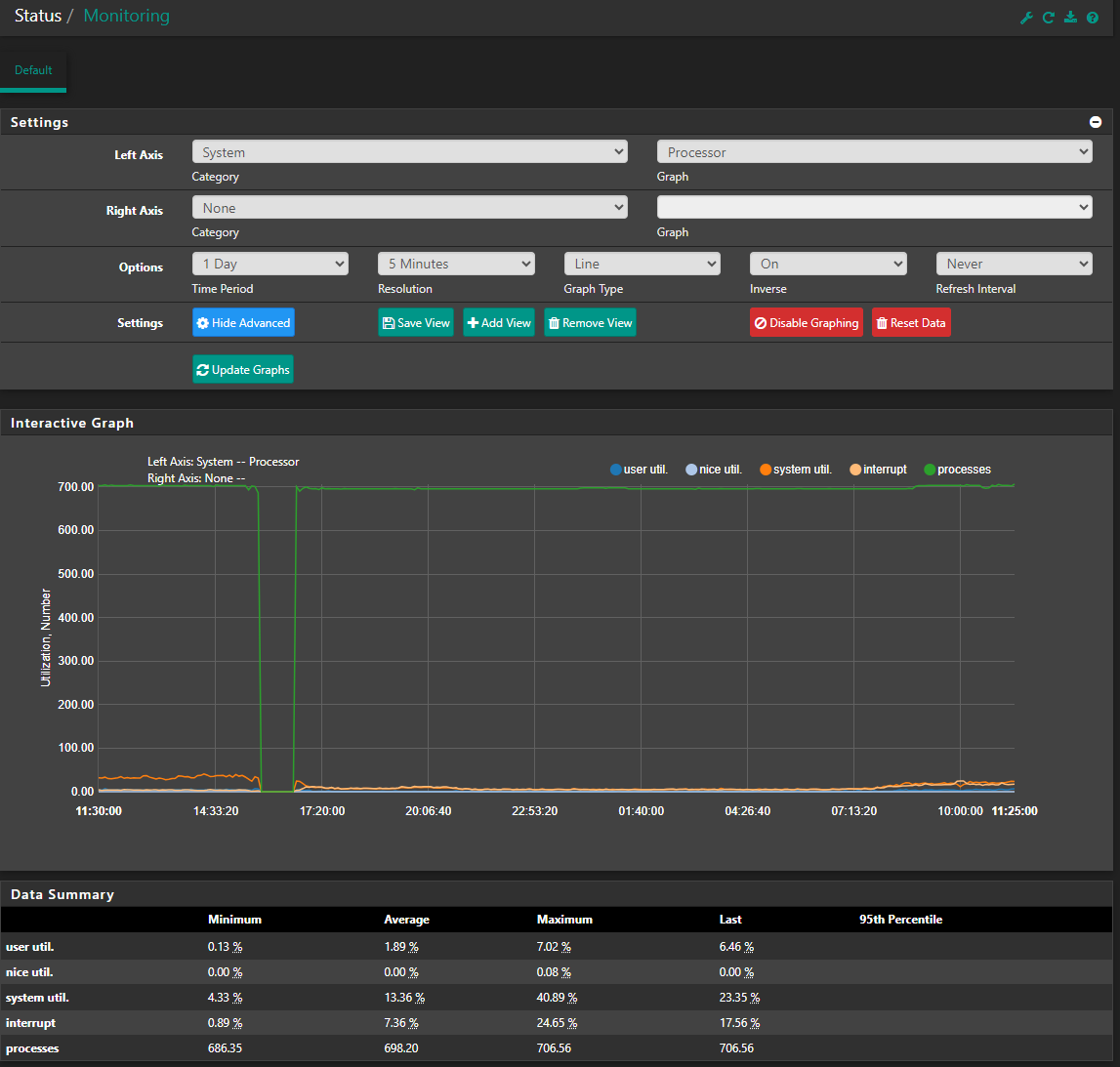

cpu3 max 29%Had the same pattern today with the first packet loss at 9am. Status/Monitoring. The following Maximum value I can see.

user util 8.95%

nice util 0.17%

system util. 22.65%

interrupt 21.90%

processes 709.01

Unfortunately, that top-HaSP command doesn't seem to be available in the shell. If you can, I would really appreciate it if you could point me to a list of common CLI commands for troubleshooting.

-

700 processes is a lot. Is there something spawning hundreds of things it shouldn't?

Sorry I typo'd:

top -HaSP

-

@stephenw10 Hhmm. It's always around 700 even during non-peak hours when we don't see packet loss.

Not sure if you can see these graphs: 1 day, 8 hours and 1 hour.

-

Yup I see. It's a high number of processes so I'd certainly check it. It doesn't seem to be peaking though so probably not an issue.

Try running

top -HaSP

-

@stephenw10 On further investigation we realized that our vswitch and the VM network that the LAN interface is connected to are set to an MTU of 9000 bytes. The pfSense LAN MTU is set to autodetect, and Status shows it is at 1500.

Could this be the issue, considering the number of processes?

-

@stephenw10 Could the MTU mismatch be the issue? Is it okay to force the LAN interface to MTU 9000? Could packet fragmentation at the LAN interface be causing the latency and packet loss?

-

Yes, an MTU mismatch would cause it to drop anything that arrives as jumbo frames. The vswitch itself wouldn't do that, but if any of the clients are set to MTU 9000 that's a problem. Generally you should have everything in the same layer 2 segment set to the same MTU. The MTU value is not autodetected or negotiated; it must be set.
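As a rough illustration of the "same MTU everywhere in the segment" rule, the following Python sketch groups interfaces by their configured MTU and flags a mismatch. The interface names and values are hypothetical placeholders, not an inventory of this network.

```python
from collections import defaultdict


def group_by_mtu(interface_mtus):
    """Map each configured MTU to the sorted list of interfaces that use it."""
    groups = defaultdict(list)
    for name, mtu in interface_mtus.items():
        groups[mtu].append(name)
    return {mtu: sorted(names) for mtu, names in groups.items()}


def may_send_oversized(sender_mtu, receiver_mtu):
    """True if the sender can emit packets larger than the receiver accepts."""
    return sender_mtu > receiver_mtu


# Hypothetical layer 2 segment: the firewall at 1500 and one client at 9000.
segment = {"pfsense-lan": 1500, "client-a": 9000, "client-b": 1500}
groups = group_by_mtu(segment)
mismatch = len(groups) > 1
print(groups, "mismatch" if mismatch else "consistent")
```

In this made-up segment, `client-a` could send frames of up to 9000 bytes that the 1500-byte firewall interface would drop, while traffic in the other direction would be unaffected.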

-

@stephenw10 Thanks for the reply. Jumbo frames are on the vPC inter switch link between our core L3 switch and the L2 switch hosting our VMware ESXi hyperconvergence infrastructure and on the vPC interface connected to the ESXi host and the vswitch.

-

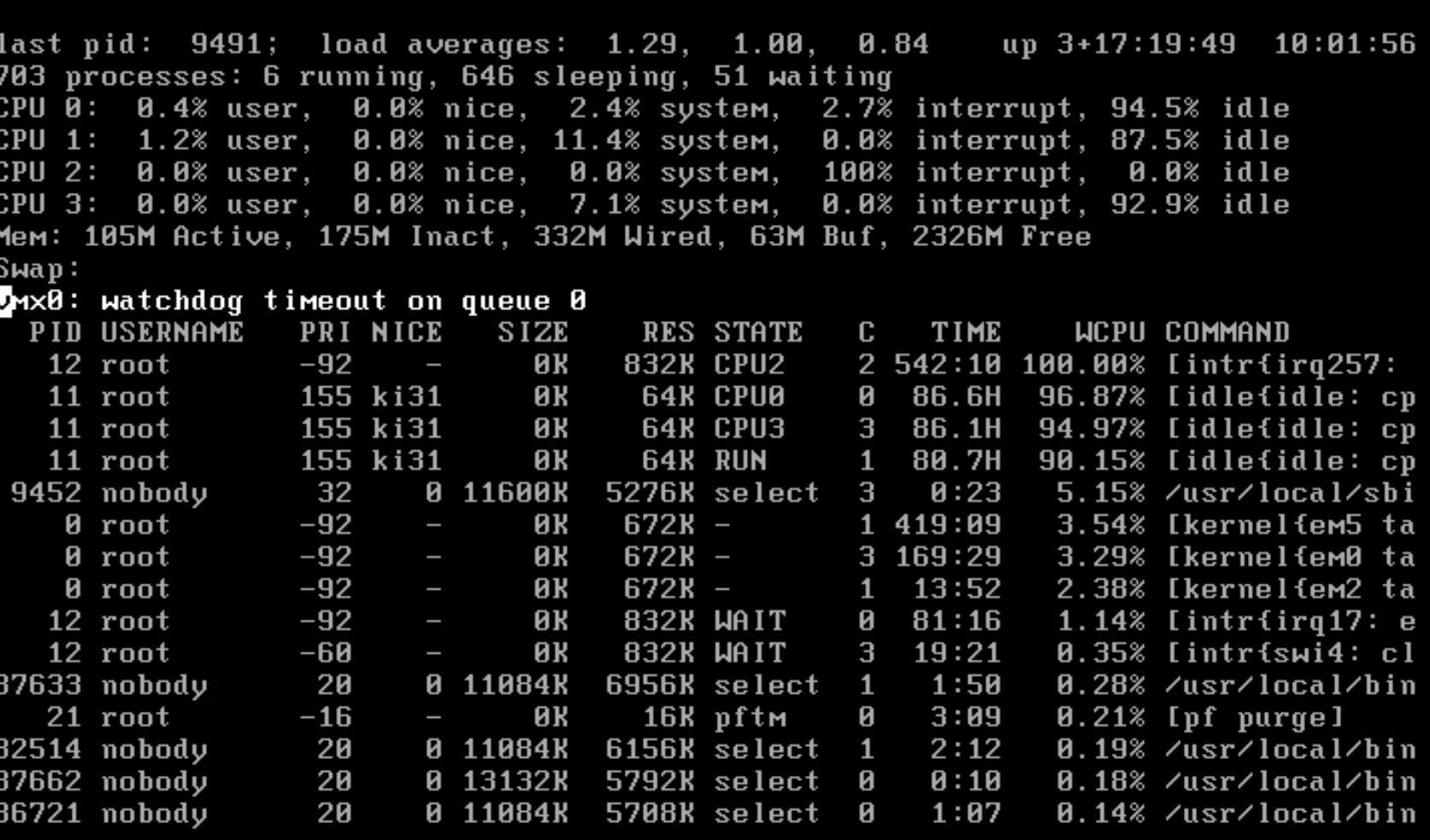

Manage to capture the top -HaSP result. Here it is.

-

If it's only on the switch that shouldn't be an issue. But if any other hosts or the layer3 switch are set to MTU 9000 that's an issue.

However, that top output looks to show the issue. One core is pegged at 100% and it's all interrupt load from irq257, which I'd guess is vmx0.

Did you set the NIC tuning I linked to above? That is required to have vmx use more than one queue and hence more than one CPU core.

Additionally you can see that vmx0 threw a watchdog timeout in that screenshot. I expect that to be logged?

-

@stephenw10 hi, this is @usaiat 's colleague.

I have created /boot/loader.conf.local and put this in it:

hw.pci.honor_msi_blacklist="0"

dev.vmx.0.iflib.override_ntxds="0,4096"

dev.vmx.0.iflib.override_nrxds="0,2048,0"

After the reboot I did a

dmesg | grep -Eiw 'descriptors|queues'

and I got the following output

em0: netmap queues/slots: TX 1/256, RX 1/256

em1: netmap queues/slots: TX 1/256, RX 1/256

em2: netmap queues/slots: TX 1/256, RX 1/256

em3: netmap queues/slots: TX 1/256, RX 1/256

em4: netmap queues/slots: TX 1/256, RX 1/256

em5: netmap queues/slots: TX 1/256, RX 1/256

em6: netmap queues/slots: TX 1/256, RX 1/256

em0: netmap queues/slots: TX 1/256, RX 1/256

em1: netmap queues/slots: TX 1/256, RX 1/256

em2: netmap queues/slots: TX 1/256, RX 1/256

em3: netmap queues/slots: TX 1/256, RX 1/256

em4: netmap queues/slots: TX 1/256, RX 1/256

em5: netmap queues/slots: TX 1/256, RX 1/256

em6: netmap queues/slots: TX 1/256, RX 1/256

I note that vmx0 isn't listed there - did we do the right thing?

-

Yes those look like the correct options. What is shown at boot for vmx0?

-

@stephenw10 OK this VM is old and has been floating about since we were on vsphere 6 so we thought we might check out what would happen if we upgrade from VM Version 13 to VM Version 20, along with a switch to 2.7.2

Now when we do a

dmesg | grep -Eiw 'descriptors|queues'

we get a bit more out of it:

em0: Using 1024 TX descriptors and 1024 RX descriptors

em0: netmap queues/slots: TX 1/1024, RX 1/1024

em1: Using 1024 TX descriptors and 1024 RX descriptors

em1: netmap queues/slots: TX 1/1024, RX 1/1024

em2: Using 1024 TX descriptors and 1024 RX descriptors

em2: netmap queues/slots: TX 1/1024, RX 1/1024

em3: Using 1024 TX descriptors and 1024 RX descriptors

em3: netmap queues/slots: TX 1/1024, RX 1/1024

em4: Using 1024 TX descriptors and 1024 RX descriptors

em4: netmap queues/slots: TX 1/1024, RX 1/1024

em5: Using 1024 TX descriptors and 1024 RX descriptors

em5: netmap queues/slots: TX 1/1024, RX 1/1024

em6: Using 1024 TX descriptors and 1024 RX descriptors

em6: netmap queues/slots: TX 1/1024, RX 1/1024

vmx0: Using 512 TX descriptors and 512 RX descriptors

vmx0: Using 4 RX queues 4 TX queues

vmx0: netmap queues/slots: TX 4/512, RX 4/512

em0: Using 1024 TX descriptors and 1024 RX descriptors

em0: netmap queues/slots: TX 1/1024, RX 1/1024

em1: Using 1024 TX descriptors and 1024 RX descriptors

em1: netmap queues/slots: TX 1/1024, RX 1/1024

em2: Using 1024 TX descriptors and 1024 RX descriptors

em2: netmap queues/slots: TX 1/1024, RX 1/1024

em3: Using 1024 TX descriptors and 1024 RX descriptors

em3: netmap queues/slots: TX 1/1024, RX 1/1024

em4: Using 1024 TX descriptors and 1024 RX descriptors

em4: netmap queues/slots: TX 1/1024, RX 1/1024

em5: Using 1024 TX descriptors and 1024 RX descriptors

em5: netmap queues/slots: TX 1/1024, RX 1/1024

em6: Using 1024 TX descriptors and 1024 RX descriptors

em6: netmap queues/slots: TX 1/1024, RX 1/1024

vmx0: Using 512 TX descriptors and 512 RX descriptors

vmx0: Using 4 RX queues 4 TX queues

vmx0: netmap queues/slots: TX 4/512, RX 4/512

em0: Using 1024 TX descriptors and 1024 RX descriptors

em0: netmap queues/slots: TX 1/1024, RX 1/1024

em1: Using 1024 TX descriptors and 1024 RX descriptors

em1: netmap queues/slots: TX 1/1024, RX 1/1024

em2: Using 1024 TX descriptors and 1024 RX descriptors

em2: netmap queues/slots: TX 1/1024, RX 1/1024

em3: Using 1024 TX descriptors and 1024 RX descriptors

em3: netmap queues/slots: TX 1/1024, RX 1/1024

em4: Using 1024 TX descriptors and 1024 RX descriptors

em4: netmap queues/slots: TX 1/1024, RX 1/1024

em5: Using 1024 TX descriptors and 1024 RX descriptors

em5: netmap queues/slots: TX 1/1024, RX 1/1024

em6: Using 1024 TX descriptors and 1024 RX descriptors

em6: netmap queues/slots: TX 1/1024, RX 1/1024

vmx0: Using 4096 TX descriptors and 2048 RX descriptors

vmx0: Using 4 RX queues 4 TX queues

vmx0: netmap queues/slots: TX 4/4096, RX 4/2048

Since then the LAN interface does seem to be holding up a bit better; we will continue to monitor for the rest of the day before we declare victory.
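Because the dmesg buffer here holds output from several boots, it is easy to misread which values are current. The Python sketch below is one way to pull out the most recent queue and descriptor counts per NIC. It assumes the exact line formats quoted in this post; the function name and the shortened sample text are illustrative only.

```python
import re

# Patterns match the two "Using ..." line formats quoted in the post above.
QUEUES_RE = re.compile(r"^(\w+): Using (\d+) RX queues (\d+) TX queues$")
DESC_RE = re.compile(r"^(\w+): Using (\d+) TX descriptors and (\d+) RX descriptors$")


def latest_nic_settings(dmesg_text):
    """Return per-NIC queue and descriptor counts, later lines overriding earlier ones."""
    settings = {}
    for raw_line in dmesg_text.splitlines():
        line = raw_line.strip()
        queues = QUEUES_RE.match(line)
        if queues:
            nic = settings.setdefault(queues.group(1), {})
            nic["rx_queues"] = int(queues.group(2))
            nic["tx_queues"] = int(queues.group(3))
            continue
        descriptors = DESC_RE.match(line)
        if descriptors:
            nic = settings.setdefault(descriptors.group(1), {})
            nic["tx_descriptors"] = int(descriptors.group(2))
            nic["rx_descriptors"] = int(descriptors.group(3))
    return settings


# Shortened sample: an earlier boot followed by the boot with the tunables applied.
SAMPLE = """\
vmx0: Using 512 TX descriptors and 512 RX descriptors
vmx0: Using 4 RX queues 4 TX queues
em0: Using 1024 TX descriptors and 1024 RX descriptors
vmx0: Using 4096 TX descriptors and 2048 RX descriptors
vmx0: Using 4 RX queues 4 TX queues
"""

settings = latest_nic_settings(SAMPLE)
print(settings["vmx0"])
```

Read this way, the last boot is the one that matters: vmx0 ends up with 4 RX and 4 TX queues and the 4096/2048 descriptor counts requested in loader.conf.local.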

Perhaps we did a few too many changes at once because now we don't know what has made it clean up (2.7.2, VM Version, or multi-queueing) but this is what happens when you have customers yelling at you for a fortnight.

I do note that now a bunch of our GRE tunnels no longer work - we've seen this before when we've attempted to upgrade to 2.7.2. I'm fairly certain this is an unrelated issue so we will start a new thread on this presently.

-

Interesting. There have been a few special case issues affecting GRE. I wonder if you had a workaround in place that's no longer needed or similar.

Do they just not connect at all?

-

@stephenw10 OK I just deleted them all and created new ones - now 7 of them are green and 1 is red (100% packet loss) - while this is less than ideal we can live with that in the short term. What I'll do is open a support call with the provider that these tunnels go to and see what they have to say. If we identify issues with pfSense I will likely open up a new thread on this forum and link to it from here.

As for the LAN drops, I think that problem is solved. We've had 2 whole working days now and it's been clean. As mentioned, we made rather too many changes at once to isolate which one of them might have been the solution, but them's the breaks.

Of course your help has been invaluable so thank you very much for that.