X11SDV-8C-TP8F (Xeon D-2146NT) compatibility?

-

Not sure I've seen anyone try it. I would say it's massively overpowered for most pfSense installs.

You can check the list of supported hardware in the ix driver, which would be required for the 10GbE NICs:

https://github.com/pfsense/FreeBSD-src/blob/e6b497fa0a30a3ae23e1cb0825bc2d7b7cc3635c/sys/dev/ixgbe/if_ix.c#L59

I would expect the i350 to work with no problems, though.

There may be other components that require support.

Steve

-

Just following up for others: all interfaces came up with no fuss on 2.4.4.
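If you want to pull a per-interface summary (driver, description, queue counts) out of boot messages like the ones that follow, a short Python sketch can do it. It assumes the FreeBSD attach-line format shown in this post (`ifname: <description> ...` and `ifname: netmap queues/slots: ...`); `summarize_nics` and `SAMPLE` are illustrative names, not anything shipped with pfSense, and the sample lines are copied from this thread's log.

```python
import re

# FreeBSD attach line, e.g. "igb0: <Intel(R) PRO/1000 Network Connection, ...> port ..."
ATTACH_RE = re.compile(r"^(?P<dev>[a-z]+\d+): <(?P<desc>[^>]*)>")
# netmap summary line, e.g. "ixl0: netmap queues/slots: TX 8/1024, RX 8/1024"
NETMAP_RE = re.compile(
    r"^(?P<dev>[a-z]+\d+): netmap queues/slots: TX (?P<tx>\d+)/\d+, RX (?P<rx>\d+)/\d+"
)

# A few lines copied from the dmesg output posted in this thread.
SAMPLE = """\
igb0: <Intel(R) PRO/1000 Network Connection, Version - 2.5.3-k> port 0x9060-0x907f mem 0xe0c60000-0xe0c7ffff,0xe0c8c000-0xe0c8ffff at device 0.0 numa-domain 0 on pci8
igb0: Using MSIX interrupts with 9 vectors
igb0: netmap queues/slots: TX 8/1024, RX 8/1024
ixl0: <Intel(R) Ethernet Connection 700 Series PF Driver, Version - 1.9.9-k> mem 0xfa000000-0xfaffffff,0xfb018000-0xfb01ffff at device 0.0 numa-domain 0 on pci14
ixl0: netmap queues/slots: TX 8/1024, RX 8/1024
CPU: Intel(R) Xeon(R) D-2146NT CPU @ 2.30GHz (2294.68-MHz K8-class CPU)
"""


def summarize_nics(dmesg_text):
    """Map each attached interface to its driver, description and netmap queue counts."""
    nics = {}
    for raw in dmesg_text.splitlines():
        line = raw.strip()
        m = ATTACH_RE.match(line)
        if m:
            dev = m.group("dev")
            # Driver name is the interface name without its unit number (igb0 -> igb).
            nics[dev] = {"driver": re.sub(r"\d+$", "", dev), "description": m.group("desc")}
            continue
        m = NETMAP_RE.match(line)
        if m and m.group("dev") in nics:
            nics[m.group("dev")]["tx_queues"] = int(m.group("tx"))
            nics[m.group("dev")]["rx_queues"] = int(m.group("rx"))
    return nics


if __name__ == "__main__":
    for dev, info in summarize_nics(SAMPLE).items():
        print(dev, info["driver"], info.get("tx_queues"), info.get("rx_queues"))
```

On a live FreeBSD or pfSense box you could pass it the contents of /var/run/dmesg.boot instead of the sample text.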

igb0: <Intel(R) PRO/1000 Network Connection, Version - 2.5.3-k> port 0x9060-0x907f mem 0xe0c60000-0xe0c7ffff,0xe0c8c000-0xe0c8ffff at device 0.0 numa-domain 0 on pci8
igb0: Using MSIX interrupts with 9 vectors
igb0: Ethernet address: ac:1f:6b:73:87:e0
igb0: Bound queue 0 to cpu 0
igb0: Bound queue 1 to cpu 1
igb0: Bound queue 2 to cpu 2
igb0: Bound queue 3 to cpu 3
igb0: Bound queue 4 to cpu 4
igb0: Bound queue 5 to cpu 5
igb0: Bound queue 6 to cpu 6
igb0: Bound queue 7 to cpu 7
igb0: netmap queues/slots: TX 8/1024, RX 8/1024
igb1: <Intel(R) PRO/1000 Network Connection, Version - 2.5.3-k> port 0x9040-0x905f mem 0xe0c40000-0xe0c5ffff,0xe0c88000-0xe0c8bfff at device 0.1 numa-domain 0 on pci8
igb1: Using MSIX interrupts with 9 vectors
igb1: Ethernet address: ac:1f:6b:73:87:e1
igb1: Bound queue 0 to cpu 8
igb1: Bound queue 1 to cpu 9
igb1: Bound queue 2 to cpu 10
igb1: Bound queue 3 to cpu 11
igb1: Bound queue 4 to cpu 12
igb1: Bound queue 5 to cpu 13
igb1: Bound queue 6 to cpu 14
igb1: Bound queue 7 to cpu 15
igb1: netmap queues/slots: TX 8/1024, RX 8/1024
igb2: <Intel(R) PRO/1000 Network Connection, Version - 2.5.3-k> port 0x9020-0x903f mem 0xe0c20000-0xe0c3ffff,0xe0c84000-0xe0c87fff at device 0.2 numa-domain 0 on pci8
igb2: Using MSIX interrupts with 9 vectors
igb2: Ethernet address: ac:1f:6b:73:87:e2
igb2: Bound queue 0 to cpu 0
igb2: Bound queue 1 to cpu 1
igb2: Bound queue 2 to cpu 2
igb2: Bound queue 3 to cpu 3
igb2: Bound queue 4 to cpu 4
igb2: Bound queue 5 to cpu 5
igb2: Bound queue 6 to cpu 6
igb2: Bound queue 7 to cpu 7
igb2: netmap queues/slots: TX 8/1024, RX 8/1024
igb3: <Intel(R) PRO/1000 Network Connection, Version - 2.5.3-k> port 0x9000-0x901f mem 0xe0c00000-0xe0c1ffff,0xe0c80000-0xe0c83fff at device 0.3 numa-domain 0 on pci8
igb3: Using MSIX interrupts with 9 vectors
igb3: Ethernet address: ac:1f:6b:73:87:e3
igb3: Bound queue 0 to cpu 8
igb3: Bound queue 1 to cpu 9
igb3: Bound queue 2 to cpu 10
igb3: Bound queue 3 to cpu 11
igb3: Bound queue 4 to cpu 12
igb3: Bound queue 5 to cpu 13
igb3: Bound queue 6 to cpu 14
igb3: Bound queue 7 to cpu 15
igb3: netmap queues/slots: TX 8/1024, RX 8/1024
ixl0: <Intel(R) Ethernet Connection 700 Series PF Driver, Version - 1.9.9-k> mem 0xfa000000-0xfaffffff,0xfb018000-0xfb01ffff at device 0.0 numa-domain 0 on pci14
ixl0: using 1024 tx descriptors and 1024 rx descriptors
ixl0: fw 3.1.57069 api 1.5 nvm 3.33 etid 80001006 oem 1.262.0
ixl0: PF-ID[0]: VFs 32, MSIX 129, VF MSIX 5, QPs 384, MDIO shared
ixl0: Using MSIX interrupts with 9 vectors
ixl0: Allocating 8 queues for PF LAN VSI; 8 queues active
ixl0: Ethernet address: ac:1f:6b:73:89:70
ixl0: SR-IOV ready
queues is 0xfffffe00017f4000
ixl0: netmap queues/slots: TX 8/1024, RX 8/1024
ixl1: <Intel(R) Ethernet Connection 700 Series PF Driver, Version - 1.9.9-k> mem 0xf9000000-0xf9ffffff,0xfb010000-0xfb017fff at device 0.1 numa-domain 0 on pci14
ixl1: using 1024 tx descriptors and 1024 rx descriptors
ixl1: fw 3.1.57069 api 1.5 nvm 3.33 etid 80001006 oem 1.262.0
ixl1: PF-ID[1]: VFs 32, MSIX 129, VF MSIX 5, QPs 384, MDIO shared
ixl1: Using MSIX interrupts with 9 vectors
ixl1: Allocating 8 queues for PF LAN VSI; 8 queues active
ixl1: Ethernet address: ac:1f:6b:73:89:71
ixl1: SR-IOV ready
queues is 0xfffffe000192c000
ixl1: netmap queues/slots: TX 8/1024, RX 8/1024
ixl2: <Intel(R) Ethernet Connection 700 Series PF Driver, Version - 1.9.9-k> mem 0xf8000000-0xf8ffffff,0xfb008000-0xfb00ffff at device 0.2 numa-domain 0 on pci14
ixl2: using 1024 tx descriptors and 1024 rx descriptors
ixl2: fw 3.1.57069 api 1.5 nvm 3.33 etid 80001006 oem 1.262.0
ixl2: PF-ID[2]: VFs 32, MSIX 129, VF MSIX 5, QPs 384, I2C
ixl2: Using MSIX interrupts with 9 vectors
ixl2: Allocating 8 queues for PF LAN VSI; 8 queues active
ixl2: Ethernet address: ac:1f:6b:73:89:72
ixl2: SR-IOV ready
queues is 0xfffffe0001a82000
ixl2: netmap queues/slots: TX 8/1024, RX 8/1024
ixl3: <Intel(R) Ethernet Connection 700 Series PF Driver, Version - 1.9.9-k> mem 0xf7000000-0xf7ffffff,0xfb000000-0xfb007fff at device 0.3 numa-domain 0 on pci14
ixl3: using 1024 tx descriptors and 1024 rx descriptors
ixl3: fw 3.1.57069 api 1.5 nvm 3.33 etid 80001006 oem 1.262.0
ixl3: PF-ID[3]: VFs 32, MSIX 129, VF MSIX 5, QPs 384, I2C
ixl3: Using MSIX interrupts with 9 vectors
ixl3: Allocating 8 queues for PF LAN VSI; 8 queues active
ixl3: Ethernet address: ac:1f:6b:73:89:73
ixl3: SR-IOV ready
queues is 0xfffffe0001bc6000
ixl3: netmap queues/slots: TX 8/1024, RX 8/1024
CPU: Intel(R) Xeon(R) D-2146NT CPU @ 2.30GHz (2294.68-MHz K8-class CPU)
Origin="GenuineIntel" Id=0x50654 Family=0x6 Model=0x55 Stepping=4
Features=0xbfebfbff<FPU,VME,DE,PSE,TSC,MSR,PAE,MCE,CX8,APIC,SEP,MTRR,PGE,MCA,CMOV,PAT,PSE36,CLFLUSH,DTS,ACPI,MMX,FXSR,SSE,SSE2,SS,HTT,TM,PBE>
Features2=0x7ffefbff<SSE3,PCLMULQDQ,DTES64,MON,DS_CPL,VMX,SMX,EST,TM2,SSSE3,SDBG,FMA,CX16,xTPR,PDCM,PCID,DCA,SSE4.1,SSE4.2,x2APIC,MOVBE,POPCNT,TSCDLT,AESNI,XSAVE,OSXSAVE,AVX,F16C,RDRAND>
AMD Features=0x2c100800<SYSCALL,NX,Page1GB,RDTSCP,LM>
AMD Features2=0x121<LAHF,ABM,Prefetch>
Structured Extended Features=0xd39ffffb<FSGSBASE,TSCADJ,BMI1,HLE,AVX2,FDPEXC,SMEP,BMI2,ERMS,INVPCID,RTM,PQM,NFPUSG,MPX,PQE,AVX512F,AVX512DQ,RDSEED,ADX,SMAP,CLFLUSHOPT,CLWB,PROCTRACE,AVX512CD,AVX512BW,AVX512VL>
Structured Extended Features2=0x8<PKU>
Structured Extended Features3=0x9c000000<IBPB,STIBP,SSBD>
XSAVE Features=0xf<XSAVEOPT,XSAVEC,XINUSE,XSAVES>
VT-x: PAT,HLT,MTF,PAUSE,EPT,UG,VPID,VID,PostIntr
TSC: P-state invariant, performance statistics

-

I know this is quite an old thread, but instead of starting a new one, I figured I'd ask a few things here first.

@q54e3w, did you end up getting everything working on the 2146NT? I need a firewall that can handle real-world 10 gigabit WAN/NAT throughput, and I'm considering this CPU platform for it since it's newer than the 1541's hardware and has QAT built in. Did you manage to get QAT working as well?

I'm also debating whether to just keep using my Netgate 6100 for now and wait to see if something higher end than the 8200 (and newer than the 1541) comes out.

-

@planedrop Appears to work fine.

-

@q54e3w OK, this is fantastic news, thanks a ton! I'm likely going with the same platform for my next firewall, unless Netgate releases something more up to date than the 1541 before I pull the trigger on this, lol.

-

Thanks from me too for sharing the information!

It would also be my next platform for pfSense (pfSense+), since it offers everything for an internal build. It comes with 3 M.2/mini-PCIe slots for a modem, SSD, and Wi-Fi, and supports:

- Intel Turbo Boost
- Intel Hyper-Threading
- Intel QuickAssist
- AES-NI

But one thing puzzles me a little: your post shows only 8 cores and a maximum of 2.3 GHz, while Intel's site lists 8 cores, 16 threads, and a maximum of 3.0 GHz. So my question is:

Have you enabled Intel Hyper-Threading and Turbo Boost in the BIOS?

-

@Dobby_ They may have SMT disabled; pfSense does support it, though.

-

Yes, if it were enabled, it would show on the dashboard there.

-

Yes, Hyper-Threading is disabled on this box, hence 8/8 rather than the 8/16 you would expect.

It's also running an XL710L dual-port card for multi-gig 2.5/5 Gbit/s support. It was originally serving a 2.5G connection from a cable modem for 1.2 Gbit/s service.

The system's been 100% reliable running 4-5 load-balanced OpenVPN connections and several WireGuard tunnels too.

I do think it's massively overpowered, though.

-

@q54e3w Thanks for all the info here; glad to hear it's working well and has been stable. I'm only considering it because I now have an 8 gigabit WAN connection.

-

@planedrop Replying extremely late to an extremely old thread, but hoping some of you are still around. I'm planning to deploy this similarly overpowered little machine as a primary firewall shortly. Did I understand correctly that you had no issues enabling QAT support out of the box? That has really been my only concern.

Cheers

-

@latez I haven't done this build yet, so I can't really say, but as far as I can tell it should work.

-

@latez Keep in mind that (as of right now) pfSense CE does not support QAT; only pfSense+ does.

-

@InstanceExtension Yeah, but you can get a pfSense+ license for any hardware you want, so building a custom firewall that supports QAT is still a viable option.

-

QAT is in base and hence in CE now.

-

@stephenw10 Oh yeah, forgot about that, lol. Thanks for the reminder.

-

@stephenw10 Sorry, maybe I'm mistaken, but I thought QAT was a Plus-exclusive feature? I just installed 2.8.1, and none of my QAT hardware appears to have been detected by default. Are there additional steps to enable the modules?

-

@latez said in x11sdv-8c-tp8f (xeon-d 2146NT) compatibility?:

Are there additional steps to enable the modules?

What QAT hardware do you have, and is it supported by FreeBSD (see FreeBSD Manual Pages: qat)?

If it's supported but not yet active, the Dashboard would show something like this:

Intel(R) Atom(TM) CPU C3758R @ 2.40GHz
8 CPUs: 1 package(s) x 8 core(s)
AES-NI CPU Crypto: Yes (active)
QAT Crypto: Yes (inactive)

If it is supported, you have to enable it; see the pfSense docs: Cryptographic Hardware.
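The "supported but not yet active" distinction can be expressed as a tiny check over those dashboard lines. This is a minimal Python sketch that assumes the exact wording quoted above (`<name> Crypto: Yes|No (active|inactive)`); other pfSense versions may word it differently, and `crypto_status` and `needs_enabling` are hypothetical helper names.

```python
import re

# Dashboard lines as quoted in this thread, e.g. "QAT Crypto: Yes (inactive)".
# Assumption: this wording matches what your pfSense version prints.
CRYPTO_RE = re.compile(
    r"^(?P<name>.+?) Crypto: (?P<supported>Yes|No)(?: \((?P<state>active|inactive)\))?\s*$"
)

SAMPLE_DASHBOARD = """\
Intel(R) Atom(TM) CPU C3758R @ 2.40GHz
8 CPUs: 1 package(s) x 8 core(s)
AES-NI CPU Crypto: Yes (active)
QAT Crypto: Yes (inactive)
"""


def crypto_status(dashboard_text):
    """Return {name: (supported, active)} for every '... Crypto: ...' line."""
    status = {}
    for raw in dashboard_text.splitlines():
        m = CRYPTO_RE.match(raw.strip())
        if m:
            status[m.group("name")] = (m.group("supported") == "Yes", m.group("state") == "active")
    return status


def needs_enabling(status, name="QAT"):
    """True when the hardware is detected but its driver is not active yet."""
    supported, active = status.get(name, (False, False))
    return supported and not active


if __name__ == "__main__":
    status = crypto_status(SAMPLE_DASHBOARD)
    print(status)
    print("QAT needs enabling:", needs_enabling(status))
```

A `True` result corresponds to the case described above: the hardware is recognized, and the remaining step is to turn it on in the Cryptographic Hardware setting.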

-

Yeah, you have to enable it in System > Advanced > Miscellaneous.

-

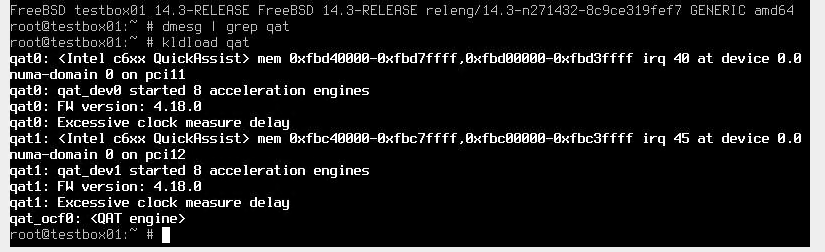

@stephenw10 I apologize for not being clearer. The screenshot above is from FreeBSD 14.3, whereas on the latest pfSense CE release I cannot kldload qat, so the module is never loaded. You mentioned it should be in base now; am I doing something wrong?
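One way to confirm whether any QAT module actually got loaded is to look at the kldstat(8) listing. Below is a minimal Python sketch, assuming the default `Id Refs Address Size Name` column layout and assuming the QAT driver files are named `qat*.ko` (for example `qat.ko` for `kldload qat`); the helper names are hypothetical, and the subprocess call only runs on a FreeBSD-based system.

```python
import platform
import subprocess


def loaded_kld_files(kldstat_output):
    """Return the file names (last column) of the modules listed by kldstat."""
    names = []
    for line in kldstat_output.splitlines():
        fields = line.split()
        # Data rows start with a numeric Id; the "Id Refs Address Size Name" header does not.
        if len(fields) >= 5 and fields[0].isdigit():
            names.append(fields[-1])
    return names


def qat_files_loaded(kldstat_output):
    # Assumption: QAT driver files are named qat*.ko (e.g. qat.ko for "kldload qat").
    return [name for name in loaded_kld_files(kldstat_output) if name.startswith("qat")]


def run_kldstat():
    # kldstat(8) only exists on FreeBSD-based systems such as pfSense.
    return subprocess.run(["kldstat"], capture_output=True, text=True, check=True).stdout


if __name__ == "__main__":
    if platform.system() == "FreeBSD":
        loaded = qat_files_loaded(run_kldstat())
        print(loaded if loaded else "no qat*.ko module loaded")
```

Running it on both the FreeBSD 14.3 box and the pfSense CE box would show side by side whether the module is present after boot.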