Recommend NAS raid 1

-

@johnpoz I know this is a can of worm to open up, but:

Yes, Raid1 (or any other Raid for that matter) is NOT - I repeat NOT - a backup. It’s an availability and drive failure convenience.

BUT: Raid 1 is definitively faster than a single drive if your Raid software/controller is worth anything. Write speed will be like a single drive, but read speeds should be anything between a single drive (sequential read) and two drives worth of performance (heavy random read IO).
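A toy model of the best case described above (heavy random read IO), offered as a rough sketch only. It assumes every read is independent, costs the same, and can be served by either mirror; the 8 ms service time and the function name are made up for illustration and do not describe any real controller.

```python
def total_read_time_ms(service_times_ms, mirrors):
    """Time to finish a batch of independent reads when each read is
    handed to whichever mirror becomes free first (idealized)."""
    busy_until = [0.0] * mirrors
    for t in service_times_ms:
        # Pick the mirror that frees up first and queue the read there.
        i = min(range(mirrors), key=lambda k: busy_until[k])
        busy_until[i] += t
    return max(busy_until)


# Hypothetical workload: 1000 random reads at 8 ms each.
random_reads = [8.0] * 1000
single = total_read_time_ms(random_reads, mirrors=1)
mirror = total_read_time_ms(random_reads, mirrors=2)
print(f"single drive: {single:.0f} ms, raid 1: {mirror:.0f} ms")
```

Writes are not modeled: in a mirror every write has to land on both drives, which is why write speed stays at single-drive level. A sequential read from one client also gains far less than this best case suggests.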

Your controller should split reads between the drives, and with queuing of commands which all controllers do these days, it should also parallelize the reads across the drives.

-

@keyser Not a can of worms - not saying you "couldn't" see some performance enhancements, if the controller/raid setup took advantage of doing what you talked about. But it's not going to be all that, and more than likely wouldn't justify the cost.. Especially if you're only connecting at gig..

Gig max speed is going to be like 113MBps --- any single drive is more than capable of handling that.. Shoot even at 2.5ge a decent single rust drive is capable of that speed..

So over the wire with 2.5ge I see 2.37Gbps, /8 puts you at like 290ish something MBps.. My single drive can do 280ish.. Even if you always had the best scenario, would adding the 2nd drive for a few extra MBps be worth it?

If the drive was locally connected ok - you might see some performance bump.. But once you are talking to the drive(s) over a gig wire, who cares if your drive could do 500MBps read/write, be it single or raid 0 with 6 drives.. Your connection is going to be limited to the speed of 1gig..
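The bottleneck argument can be put into a few lines of arithmetic. This is a back-of-the-envelope sketch, not a benchmark: the 0.9 efficiency factor is an assumption picked so that gig lands near the 113MBps figure quoted above, and the function names are made up for illustration.

```python
def wire_mbytes_per_s(link_gbps, efficiency=0.9):
    """Approximate usable throughput of a link in MB/s.
    efficiency is an assumed protocol-overhead factor, not a measured one."""
    return link_gbps * 1000 / 8 * efficiency


def effective_mbytes_per_s(link_gbps, disk_mbytes_per_s):
    """What the client sees is capped by the slower of wire and disk."""
    return min(wire_mbytes_per_s(link_gbps), disk_mbytes_per_s)


# Disk speeds taken from the post: a ~280 MB/s single drive,
# and a ~500 MB/s array.
for link in (1.0, 2.5):
    for disk in (280, 500):
        speed = effective_mbytes_per_s(link, disk)
        print(f"{link} Gbps link, {disk} MB/s disk -> ~{speed:.0f} MB/s")
```

Over gig both disks come out the same, because the wire is the limit. Only at 2.5ge does the faster disk start to show, and then only up to what the link can carry.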

Raid1, while it can save some headache from the loss of a disk, again is not a backup.. And it's not a very cost effective means of saving you some time copying some files back to your new disk if one fails..

If you had a way to just add the disk, ok - sure why not.. But you're also now talking about getting a new system that is capable of doing it - so your cost is not just the cost of the drive..

Hey have fun setting up a new system and sure setup raid 1, but if your point is setting it up so that when some drive fails you don't have to just copy your files back - doesn't seem like a cost effective project to me..

I don’t want to break the bank.

There is going to be a cost.. Unless you go with something like a Pi, which is never going to give you high speed xfer - nor take advantage of any sort of raid performance via reading from both, etc.. And again - the wire is now the bottleneck keeping you from seeing higher read/write speeds from the disk(s)..

Also if you're accessing this via a vpn connection from some remote location - you sure as hell are not going to see any advantage in read/write from your array be it raid 0 or 1, etc.

You could prob go with say a synology ds223j - two bay nas, can be found on amazon for under 200.. With no disks.. So even if you leverage the current disk you're using, you still have the cost of another disk so you can do raid 1.. So you're prob at 300 bucks low end.. So you want to spend $300 so that when your 1 disk fails, you don't have to copy your files back over?

Or maybe the Asustor Drivestor 2 AS1102T, 2 bay, under 200 bucks and comes with 2.5ge interface..

edit:

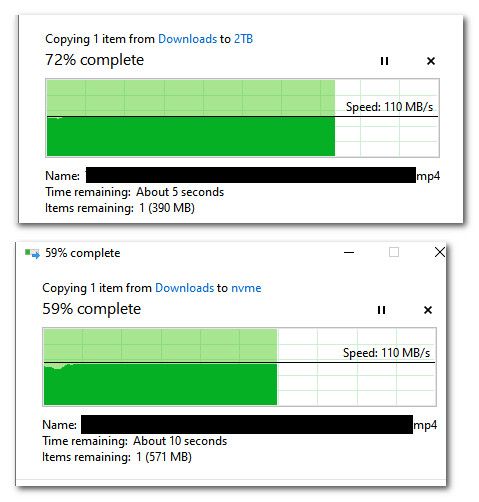

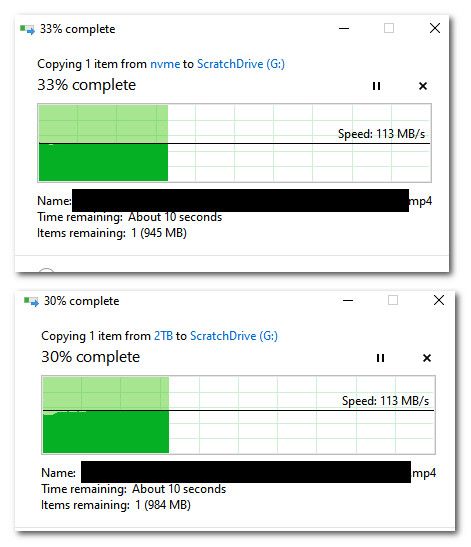

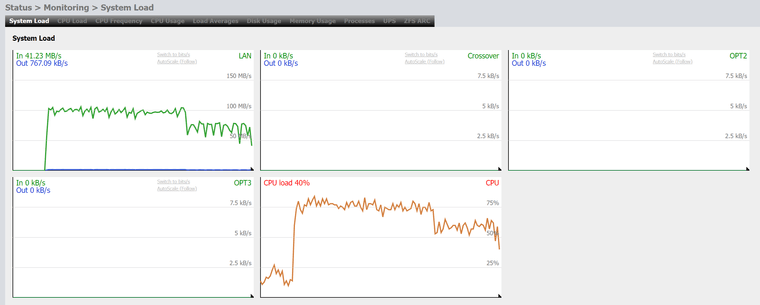

So here is copy over gig to single rust drive, and then copy to 2 nvme disks in a raid 1.. Do you see a speed increase?

Here is reading from them and copy back to my pc...

Do you see a difference between the single rust disk, and the 2 nvme disks in a raid 1?

I have the nvme drives in raid 1, because on the off chance of a failure of 1 of those disks - I didn't want to have to redo my dockers and vms that I run on there.. I don't normally back them up.. And I wasn't after space, or prob would have put them in a 0.. And the guide I was following on how to setup nvme as storage was in raid 1, and after I had them all setup and working.. I was like oh, could have put them in 0 for more space, and maybe some performance increase for the local io for the dockers and vms.. But was like the difference is not going to be all that.. And like I said, I have more than enough space for my needs, it wasn't worth the complete redo of the storage setup.

-

@johnpoz thanks great idea to reuse the old drive. I don’t know when it will fail but with it mirrored to a new drive I don’t care it will have redundancy fail over capabilities.

-

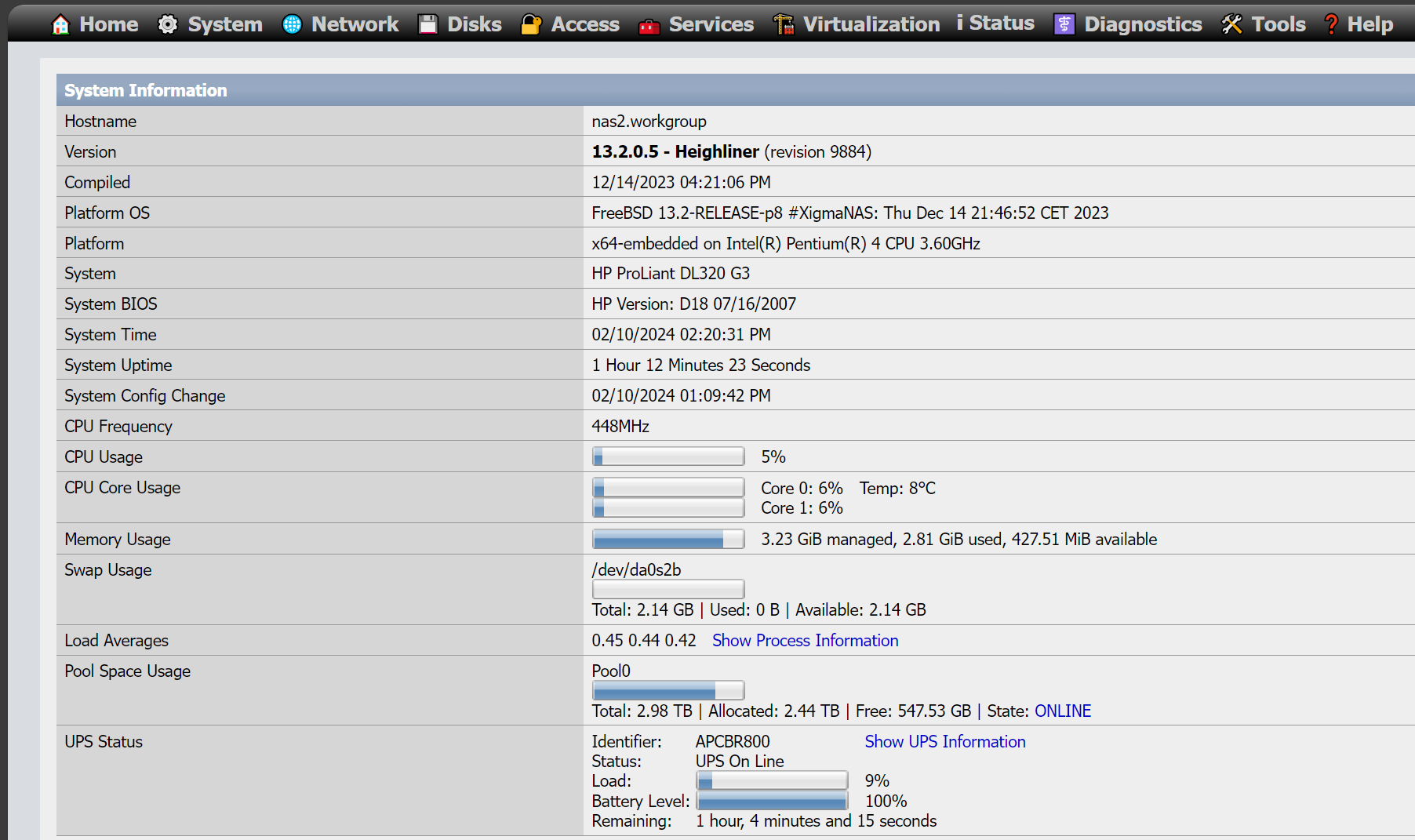

I use XigmaNAS, free, USB boot, FreeBSD based like pfSense. It's actually running on a 20 year old HP 1U rack server - P4, 4GB, 3TB ZFS mirror, GB LAN. No doubt you could hack together something more efficient with desktop parts.

-

@stephenw10 Works a treat, as the Brits say (at least until everyone's buffers fill).

-

I mean if it ain't broke....

But I'd have to be concerned about any hardware that age. As long as you can recover it and afford the downtime I guess.

-

@JonathanLee said in Recommend NAS raid 1:

I don’t know when it will fail but with it mirrored to a new drive I don’t care it will have redundancy fail over capabilities.

That is actually prob not a bad idea, if drives didn't get cheaper and bigger every year.. I normally replace drives in 3-5 years.. But I still have some drives in use that are much older.. It's more of a hey, need more space anyway.. Upgrade before they die sort of thing.

Problem with raid 1 is it's not very useful if you had say a 2TB drive and a 4TB drive.. They don't mirror up evenly, the mirror is only as big as the smaller drive, unless what you're using will also let you leverage the other 2TB on the bigger drive.. And it's even hard to buy such small drives these days to be honest. But you could add say another 2TB drive a couple of years later, then 2 years after that replace the original drive with another newer 2TB drive, and just every couple of years replace the older drive in your mirror.
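To make the mismatched-drive point concrete, here is a minimal sketch of the capacity arithmetic for a plain two-way mirror. The function names are made up for illustration, and it assumes a classic mirror that cannot use the leftover space on the bigger drive.

```python
def mirror_usable_tb(sizes_tb):
    """A plain mirror can only be as large as its smallest member."""
    return min(sizes_tb)


def mirror_leftover_tb(sizes_tb):
    """Space on the larger drive(s) that the mirror itself cannot use."""
    smallest = min(sizes_tb)
    return sum(size - smallest for size in sizes_tb)


drives = [2, 4]  # the 2TB + 4TB example from the post
print(f"usable: {mirror_usable_tb(drives)}TB, "
      f"left over: {mirror_leftover_tb(drives)}TB")
```

Some NAS software can put the leftover space to use as a separate unprotected volume, but that part would not survive a failure of the bigger drive.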

It's all going to come down to your use and needs.. Every few years I need more space anyway - so I just move to bigger drives before the life of the drive is coming to an end.. I have had drives that have worked 7 some years.. Shoot you could prob get 10 years out of some before they fail. But all drives fail, replacing them with bigger faster drives before their life expectancy nears is good practice..

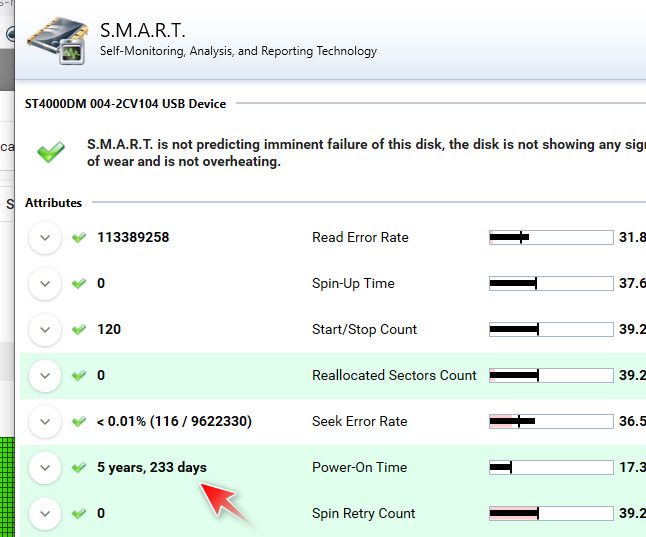

For example the drives in my DAS that I store backups on.. It's going on 6 years..

You can for sure get use out of your drives.. They are in a pool, my "backup" data is on more than one of the drives in the pool - and it's my backup, etc... If they fail, I don't lose anything.. You can for sure squeeze a lot of years out of most disks.. But your day to day active drives, just from space use and speed, 3 some years you're prob going to upgrade them anyway.. And then cycle them to your backup/scratch storage, etc.

Now you do you, and I'm not trying to discourage you or anything. But me personally, I would not drop cash to just be able to do raid 1, it's not going to get you any extra speed over the wire that is for sure.. Now if locally as mentioned you might see some performance increases.. But are they worth the extra money??? If what you're after is performance - and you're talking about an older drive, prob better to go ssd or nvme and get some real performance increase at the drive - but you're not going to see that over the wire that is for sure.

Now if I already had the hardware, and the disks - sure prob throw them in raid 1 vs 0 or jbod if wasn't looking for space.. I agree raid 1 is better than single disk, even if not a backup.. You can survive a disk failure, which is always good.. But if me I wouldn't go throwing cash just so I could do raid 1.. If not really going to see any real world performance difference..

But happy to throw you advice on whatever you want to do, be it a shoe string budget nas, or some storage/nas/server thing you're willing to throw a few grand at to do nothing ;) I have been eyeing moving to an all SSD nas with 10Ge interfaces - just because it would be fun.. if I could get it past the budget committee (wife).. I would be ordering this https://www.asustor.com/en/product?p_id=80

Do I have an actual use - No ;) do I need to spend the money, again NO.. But it would be slick.. Maybe when I get my bonus at the end of the year I could sneak it by the committee - hehehe

-

@stephenw10 It was a 10 year old NOS boxed unit when I got it (lost freight). Doubt it has more than a thousand hours on it under my ownership. I only use it for cold storage and sometimes media. And don't get me started on my REALLY old stuff! The machine I'm typing this on with a Core2 Quad has been running overclocked to 3 GHz for 15 years. Yeah, I did change out the drives a couple times.

I'm just saying I could spend plenty on the new hotness, but one can get a lot of use from cast-offs and open-source programs others have devoted plenty of their spare CPU cycles to, like pfSense.

-

Synology is pretty stable and really well suited to professional usage, QNAP lets you pimp and tune it or plainly add more hardware on top, as I see it, but a small old used HP server is also a budget solution.

I have used an old HP Proliant mini cube with an Intel Xeon-E 1231 and 16 GB RAM / 16 TB (4 SSDs) together with Openmediavault. Base model for ~200 € plus 30 € CPU and 50 € RAM, and it was running for a really long time together with dual 10 GbE cards for ~40 €! So if money is the point, that could also be a nice, long-running option.

But now I am looking at a MacBook Pro, and QNAP offers some NAS boxes with Thunderbolt 4 and two PCIe slots, plus so many things to tune on top, so that will more or less be my next option.

- 2 x M.2 NVMe's for caching

- 8 x HDD/SSD's (2 x RAID5 or one RAID50)

- Dual 10GbE network adapter with 2 x NVMe's (for caching)

- USB port for Google Coral (QuMagie app)

- USB port for an external RDX drive for backups

- Up to 16/32 GB RAM

- 4/8 core CPU for running many app services (mail server, S/FTP, web server, media server, LDAP/RADIUS server, backup for Mac)
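For the 8-bay layout in the list above, the usable space of "2 x RAID5" versus "one RAID50" can be sketched as follows. The 4 TB drive size is a hypothetical value for illustration only, and the function names are made up; the formulas are the standard one-parity-drive-per-RAID5-group rule.

```python
def raid5_usable_tb(drives, size_tb):
    """RAID5 gives up one drive's worth of space to parity."""
    if drives < 3:
        raise ValueError("RAID5 needs at least 3 drives")
    return (drives - 1) * size_tb


def raid50_usable_tb(groups, drives_per_group, size_tb):
    """RAID50 stripes across several RAID5 groups."""
    return groups * raid5_usable_tb(drives_per_group, size_tb)


SIZE_TB = 4  # hypothetical drive size
two_raid5 = 2 * raid5_usable_tb(4, SIZE_TB)   # two separate volumes
one_raid50 = raid50_usable_tb(2, 4, SIZE_TB)  # one striped volume
one_raid5 = raid5_usable_tb(8, SIZE_TB)       # for comparison
print(two_raid5, one_raid50, one_raid5)
```

Two 4-drive RAID5 sets and one RAID50 built from the same two sets end up with the same usable space; the difference is whether you get two volumes or one striped volume.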

At Speicher.de you can find out how much RAM you can really add to your NAS. Often the spec says 4 or 8 GB RAM only, when in reality you could add 16 to 32 GB RAM. Perhaps good to know if you run many services for the entire LAN on the NAS.