Pfsense box not reaching 2.5gbps

-

Mmm, in the tests shown above you're testing between pfSense and a Windows box on LAN in each direction.

In that setup you're only testing one NIC so I doubt it's hitting a PCIe limit. More likely it's hitting a limit from iperf itself. Try running at the console

top -HaSPwhilst testing. See how the loading is spread across the cores.You might also try setting

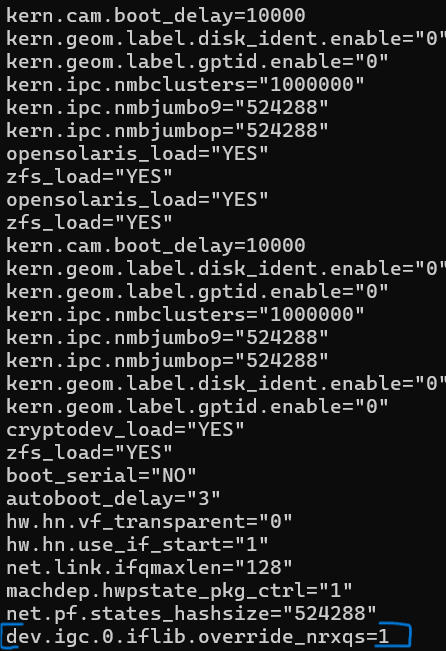

dev.igc.0.iflib.override_nrxqs=1. In some situations switching cores/queues can limit throughput.Steve

-

@python_ip said in Pfsense box not reaching 2.5gbps:

@keyser I think you are right. I was actually thinking along those lines, that’s why I posted the info about the mobo and PCIe. I knew about the theoretical limit of 500MB/s but what I didn’t think of and that you clarified was the IN and OUT. I was only considering one way and that’s why I had some doubts about it really saturating the PCI lane. Do you think that if I use the same NIC on a 3.0 or 4.0 PCIe port I could get the full bandwidth?

https://www.asrock.com/mb/Intel/N100DC-ITX/index.asp#Specification

I am not running VLANs. Only WAN in one port and a simple LAN in the other port of the same NIC.

I have a USB3.0 2.5Gb Ethernet adapter. Is it worth trying it just to test and see if I can get the full 2.5? I am not sure if FreeBSD will have the drivers for it: https://a.co/d/1hFrULJ

A dualport Intel 2.5Gbit NIC in a PCIe 3.0 x1 should have absolutely no issues reaching linkspeed - I have tried that setup several times without issues.
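For reference, here is a rough sketch of where the 500MB/s figure for PCIe 2.0 x1 comes from and how a 3.0 lane compares. It only covers the raw per-direction data rate after line encoding; PCIe packet headers and descriptor traffic are ignored, so real usable throughput is lower. The function name `link_gbps` is just something made up for this example.

```python
# Per-lane PCIe signalling rate (GT/s) and line-encoding efficiency.
# Gen 1/2 use 8b/10b encoding, Gen 3/4 use 128b/130b.
PCIE_GENERATIONS = {
    "1.0": (2.5, 8 / 10),
    "2.0": (5.0, 8 / 10),
    "3.0": (8.0, 128 / 130),
    "4.0": (16.0, 128 / 130),
}


def link_gbps(generation, lanes=1):
    """Raw data rate in Gbit/s, per direction, before PCIe packet overhead."""
    rate_gt, efficiency = PCIE_GENERATIONS[generation]
    return rate_gt * efficiency * lanes


if __name__ == "__main__":
    for generation in PCIE_GENERATIONS:
        gbps = link_gbps(generation)
        print(f"PCIe {generation} x1: {gbps:.2f} Gbit/s "
              f"({gbps * 1000 / 8:.0f} MB/s) per direction")
```

A PCIe link is full duplex, so those numbers apply to each direction separately.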

Please take note of @stephenw10 and @Gertjan 's comments.

They have a real point. Running iPerf server or client on pfSense rarely reaches linkspeed on faster adapters (2.5Gbit+) because of iPerf process pinning.

Make sure to test from a machine on LAN to a WAN side device. You might be lucky that the PCIe lane will just cope with your 2Gbit ISP plan :-)

I don't think the USB adapter will do any good, but you could give it a shot if it turns out to seem PCIe related.

Don't use that in production though; it's only for testing.

-

First of all, thanks for all your replies. They are helping me a ton!

@keyser I have been researching and found this doc: Intel Performance Optimization Guide

They have this note in there:

Some PCIe x8 slots are actually configured as x4 slots. These slots have insufficient bandwidth for full line rate with some dual port devices. The driver can detect this situation and will write the following message in the system log: “PCI-Express bandwidth available for this card is not sufficient for optimal performance. For optimal performance a x8 PCI-Express slot is required.” If this error occurs, moving your adapter to a true x8 slot will resolve the issue.

How can I access those logs in pfsense?

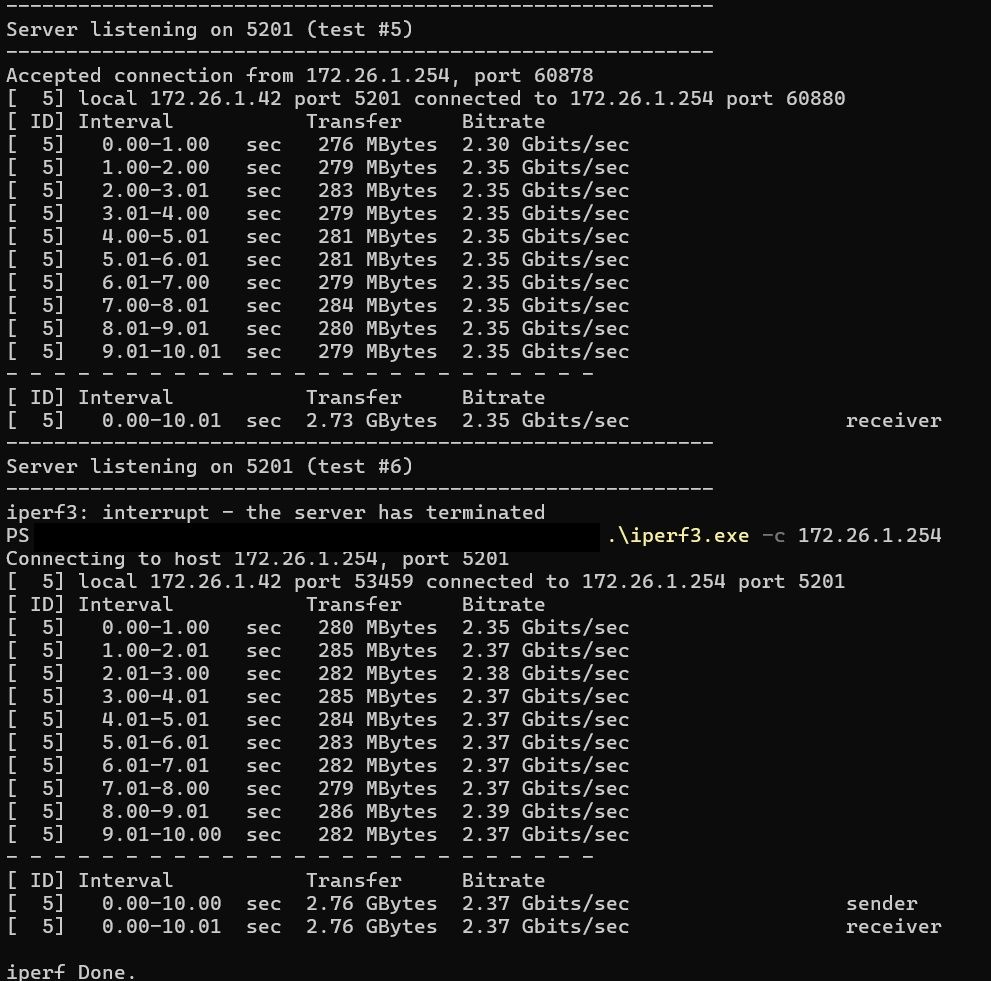

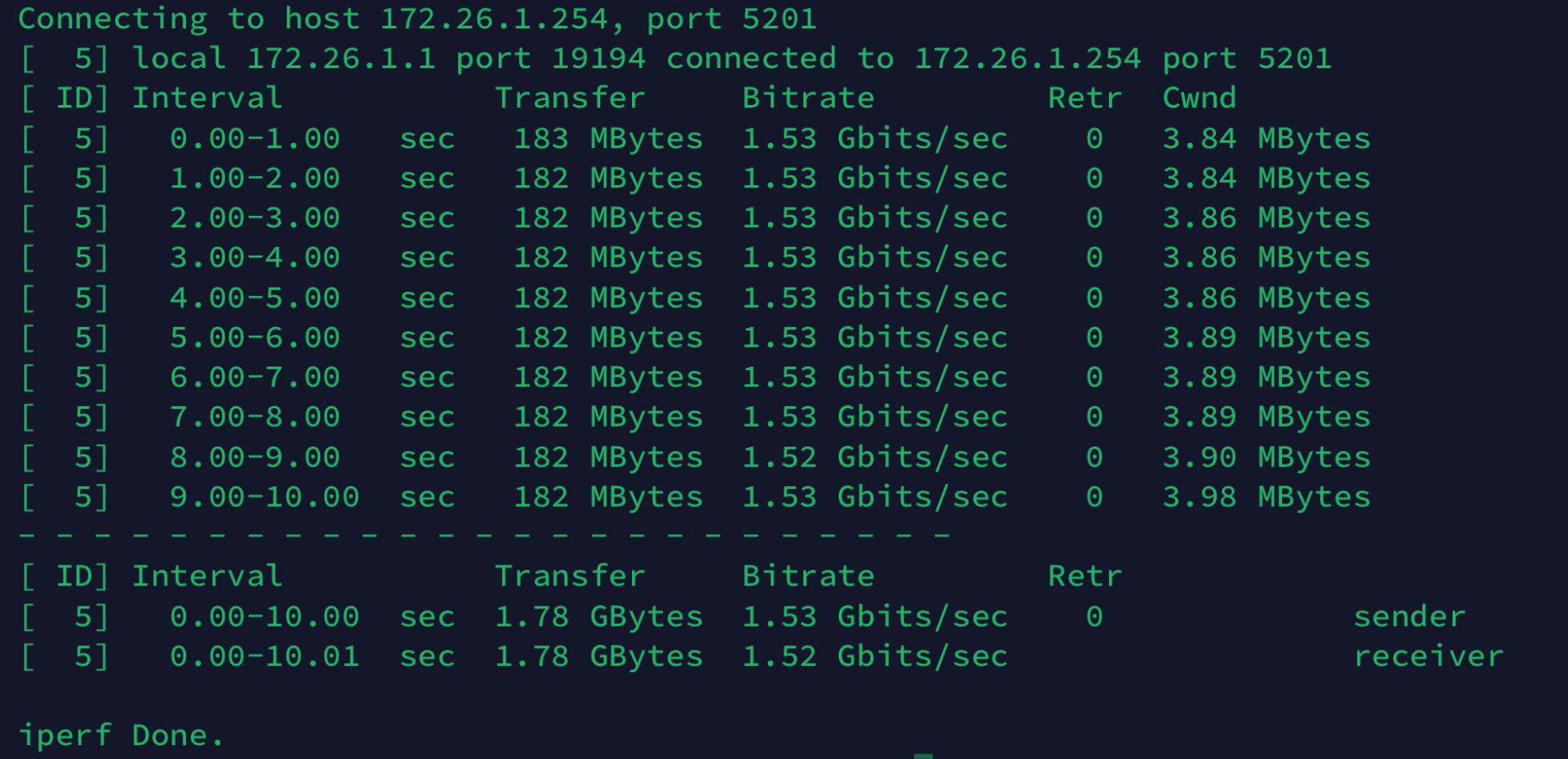

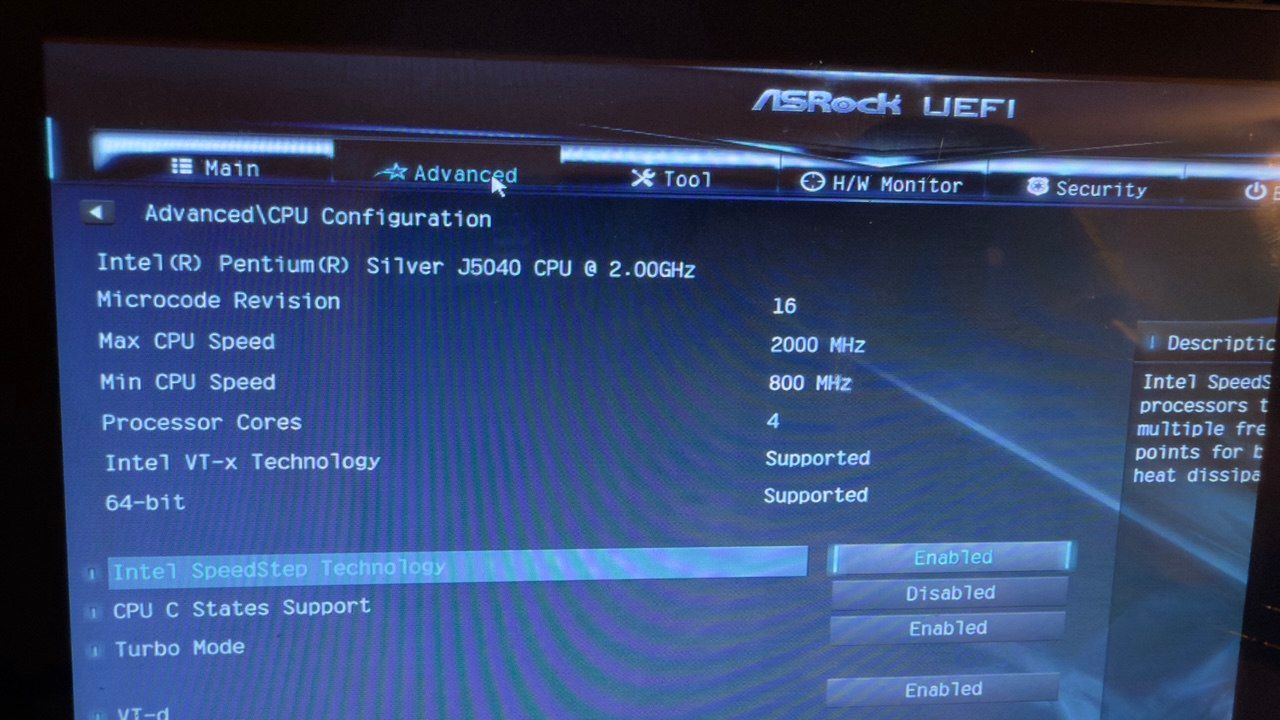

I did the following test, which is making me believe more and more that the PCIe lane is being saturated on the J5040 board that's running pfSense:

- Took the NIC and plugged it into a Gigabyte Z390 board using the secondary x16 PCIe slot that runs at x8. (172.26.1.42)

- Installed the latest drivers on Windows 11

- Took the USB3.0 Ethernet adapter and plugged it into a MacBook Pro (172.26.1.254)

- Ran iperf3 server on the Windows machine and the client on the MacBook Pro = 2.35gbps

- Ran iperf3 server on the MacBook Pro and the client on the Windows machine = 2.37gbps

The top part shows the results of the Windows machine as an iperf server and the bottom as a client.
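If it helps to repeat runs like these and compare them, here is a minimal sketch that drives the iperf3 client with JSON output (`-J`) and pulls out the end-of-test throughput. It assumes a TCP test and that iperf3 is on the PATH; `run_iperf3` and `throughput_gbps` are names invented for this example, not part of iperf3.

```python
import json
import subprocess


def run_iperf3(server, reverse=False, seconds=10):
    """Run an iperf3 client and return its JSON report as a dict."""
    cmd = ["iperf3", "-c", server, "-t", str(seconds), "-J"]
    if reverse:
        cmd.append("-R")  # reverse mode: server sends, client receives
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return json.loads(result.stdout)


def throughput_gbps(report):
    """Return (sent, received) throughput in Gbit/s from a TCP iperf3 report."""
    end = report["end"]
    sent = end["sum_sent"]["bits_per_second"] / 1e9
    received = end["sum_received"]["bits_per_second"] / 1e9
    return sent, received
```

Something like `throughput_gbps(run_iperf3("172.26.1.42"))` would then give the two figures for one direction, and passing `reverse=True` gives the other direction.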

What other tests do you suggest?

Thanks again!

-

@python_ip Those logs are generated by the Windows driver, I assume. Regardless, that's not really relevant, as you are in an x1 slot anyway.

The card certainly works as intended, as shown by your test, but what you really need is to test from LAN towards an iPerf server on WAN. If your ISP has an iPerf server, you might be able to use that to test towards the 2Gbit.

Then see if you can pull that across pfSense without running the iperf server/client on pfSense itself.

-

Install pfSense on that Gigabyte board and retest. If it's a PCIe issue you will still see full throughput there.

But I'd be surprised if it's actually the PCIe slot restricting this.

Definitely try setting one receive queue. We set that on the 4200.

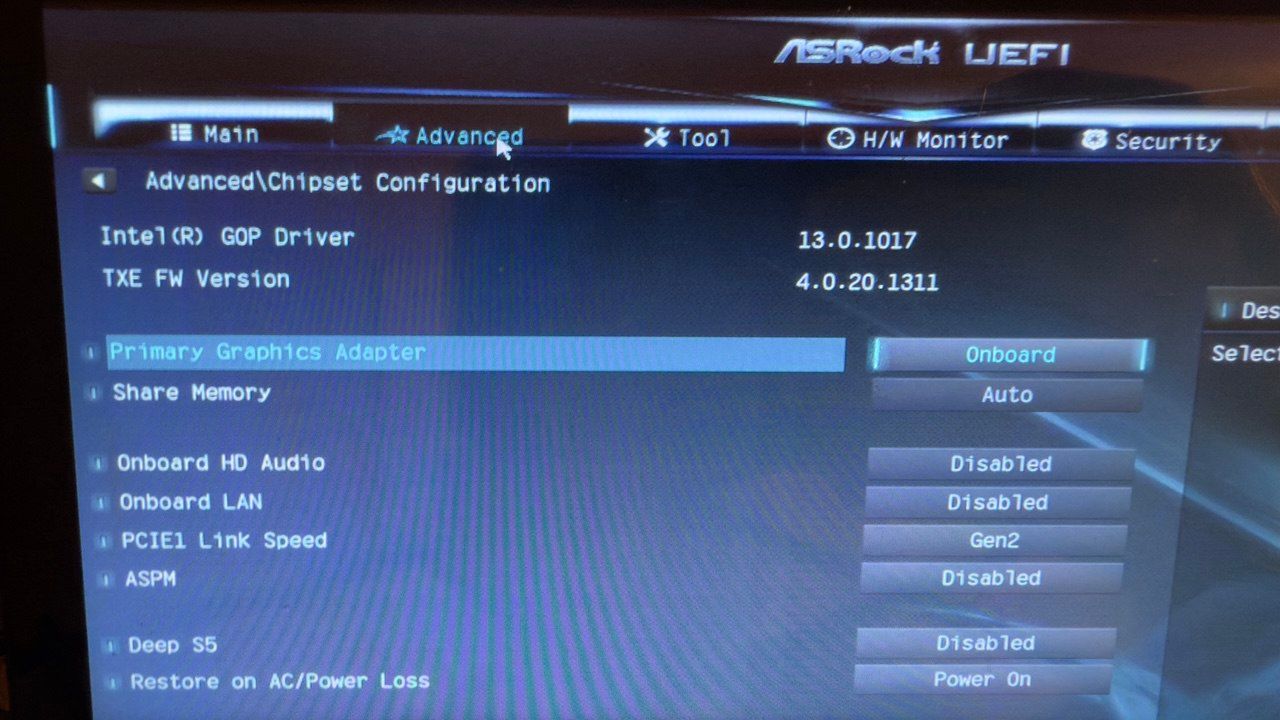

Be sure to disable any unnecessary PCIe devices (sound cards, firewire etc) that may be on the same bus.

-

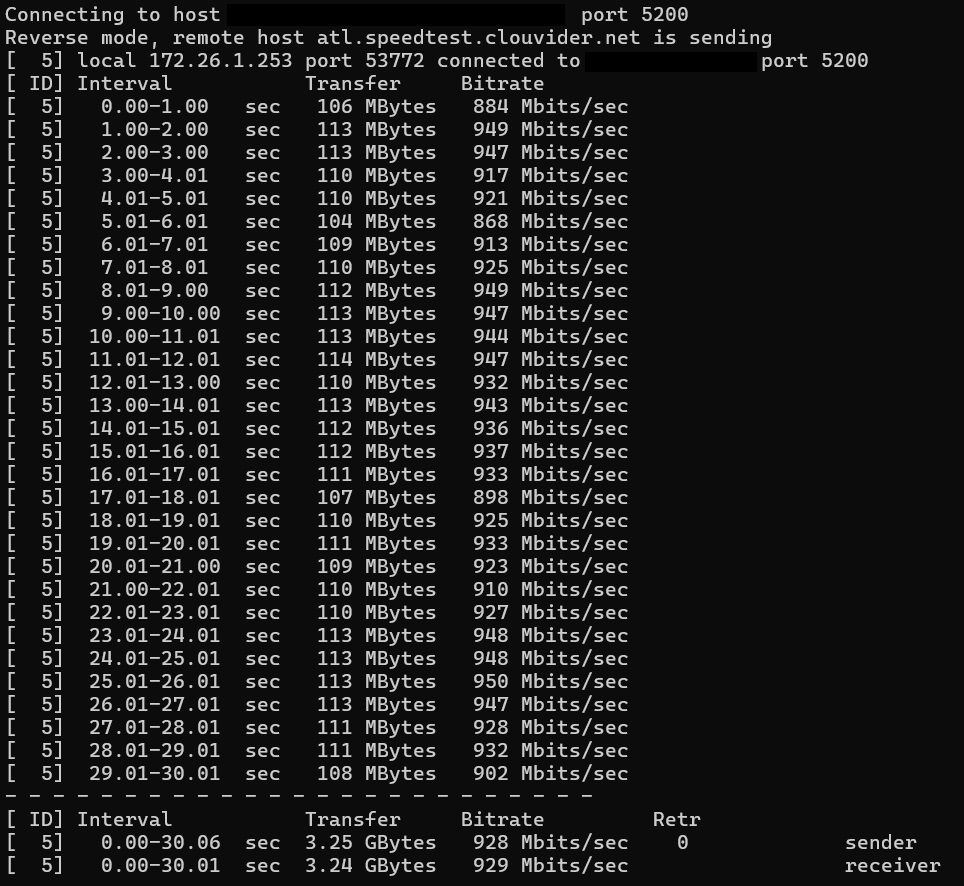

@keyser I did the following tests:

iperf reverse mode from the fastest server I could find online = almost 1gbps

Speed test from the Comcast Business site = 1.6gbps

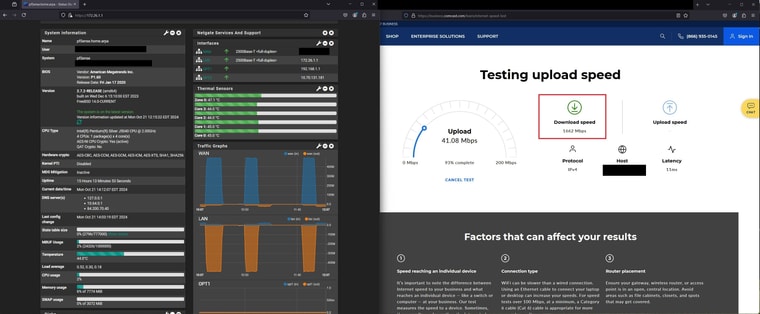

@stephenw10 I set override_nrxqs = 1 in the /boot/loader.cfg and did a reboot:

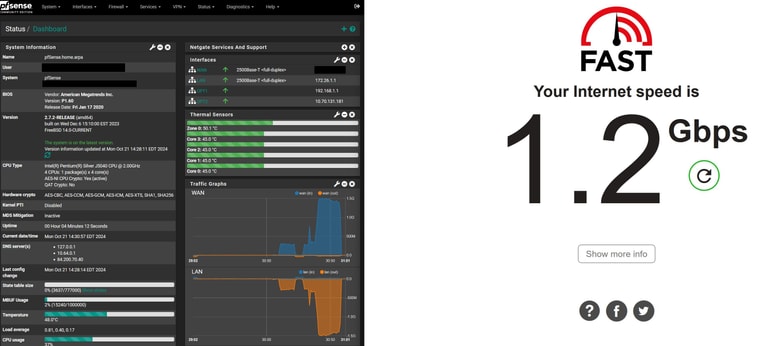

The value persisted across the reboot, and here are the results of a speed test afterwards:

Should I set the flag for igc1 too? That's the interface running WAN.

Should I try advanced config flags like that one?

Installing pfSense on the Z390 board will be a test that's going to take some time. Not an easy one for me right now.

-

@stephenw10 @keyser for more detail about the config of the interfaces, I have attached the output of sysctl dev.igc for each interface.

Let me know if you have any recommended settings for other parameters. Thanks!

-

@python_ip Hmm, I’m out of ideas. Intel NICs “always” play nice with pfSense, so the only other logical test is on that other motherboard. But really - I would expect the J5040 to be able to handle 2.5Gbit, and I would also expect PCIe 2.0 x1 to actually handle your 2Gbit ISP service (up and down), albeit at the very limit. So something's off.
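To put a rough number on "at the very limit": with WAN and LAN on the same dual-port card, every routed packet crosses the PCIe link twice, once from the NIC to the host when received and once from the host to the NIC when transmitted. The sketch below is only a first-order model under that assumption. It ignores PCIe packet headers and descriptor traffic, so the real headroom is smaller than it suggests, and the function names are made up for illustration.

```python
# Raw PCIe 2.0 x1 data rate per direction: 5 GT/s with 8b/10b encoding.
PCIE2_X1_GBPS = 5.0 * 8 / 10


def pcie_load_gbps(download_gbps, upload_gbps):
    """First-order PCIe load when WAN and LAN ports share one card.

    Received packets travel NIC -> host and transmitted packets travel
    host -> NIC, so every routed packet crosses the link once each way.
    """
    to_host = download_gbps + upload_gbps    # WAN rx + LAN rx
    from_host = download_gbps + upload_gbps  # LAN tx + WAN tx
    return to_host, from_host


def fits(download_gbps, upload_gbps, capacity_gbps=PCIE2_X1_GBPS):
    """True if the busier direction stays within the raw link capacity."""
    to_host, from_host = pcie_load_gbps(download_gbps, upload_gbps)
    return max(to_host, from_host) <= capacity_gbps
```

With a hypothetical 2Gbit down and 2Gbit up at the same time, each direction carries 4 Gbit/s, which is exactly the raw PCIe 2.0 x1 figure before any overhead is taken off.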

-

Here is yet another test… running the iperf server on the MacBook and the client on the pfsense box = 1.53gbps

I have also disabled the onboard audio and the onboard lan:

-

@python_ip said in Pfsense box not reaching 2.5gbps:

Should I set the flag for igc1 too? That's the interface running WAN.

Yes, if both igc NICs are in the path, try that. The WAN would be most affected in a download test by that receive value.
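As a small illustration, this sketch builds the tunable lines for both ports in loader `name="value"` syntax. The tunable name is the one given earlier in the thread; the helper `nrxqs_tunables` is hypothetical, and the assumption that the lines go in /boot/loader.conf.local (the usual place for custom loader tunables on pfSense, as far as I know) is mine rather than something confirmed in this thread.

```python
def nrxqs_tunables(driver, units, queues=1):
    """Build loader tunable lines that fix the RX queue count per NIC."""
    return [
        f'dev.{driver}.{unit}.iflib.override_nrxqs="{queues}"'
        for unit in units
    ]


if __name__ == "__main__":
    # igc1 is WAN per the thread; igc0 is presumably LAN.
    print("\n".join(nrxqs_tunables("igc", [0, 1])))
```

After a reboot, the sysctl dev.igc output for each interface should show whether the values were picked up.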

-

@stephenw10 no luck…

I found this guide: https://calomel.org/network_performance.html

There is a chart where they recommend the PCIe 2.0 x1 for a gigabit firewall.

I will try a few more things but I think I am maxing out the PCIe lane.

-

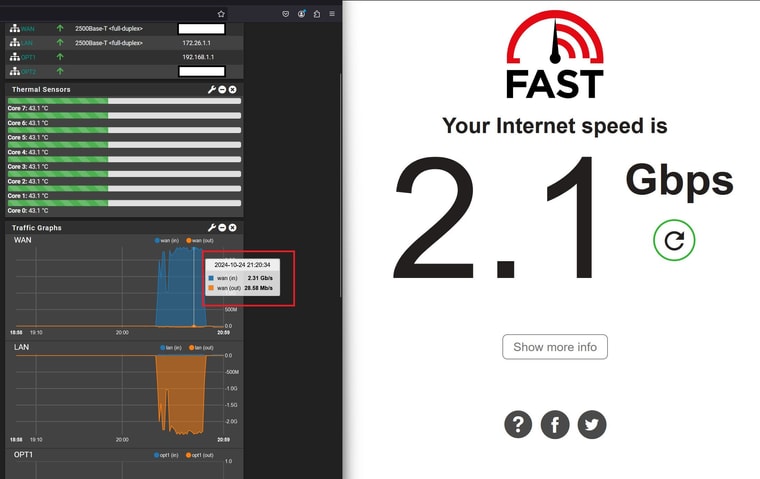

Just to put some closure to this thread... It was the PCIe lane being saturated. Upgraded the hardware to a motherboard with a PCIe 3.0 x16 and this is the result: