How to make HA on 2 pfSense on bare metal WITH 4 x UPLINKS WANs ?

-

@bmeeks said in How to make HA on 2 pfSense on bare metal WITH 4 x UPLINKS WANs ?:

@Sergei_Shablovsky said in How to make HA on 2 pfSense on bare metal WITH 4 x UPLINKS WANs ?:

Sorry for the misunderstanding; let me reformulate my question: how can Snort/Suricata on a separate bare metal server interact with pfSense the way the internal “packaged” version does?

I need to move Snort, Suricata, and ntopng to a separate node.

Is that possible at all?

No, as @stephenw10 said, this is not possible in the packages (Snort or Suricata) and is likely to never be available. The internal technology just does not lend itself to that.

Thank you so much for the suggestions!

OK, “never” means “never”.

What you seem to want to implement is much better done using bare metal with a Linux OS and installation of the appropriate Suricata package for that Linux distro.

What advantages does Linux (RHEL in my case) have over FreeBSD in this particular use case?

You would need to configure and manage that Suricata instance completely from a command-line interface (CLI) on that bare metal machine.

In your experience, is there a good Ansible role for orchestrating Suricata or Snort?

You could implement Inline IPS mode operation using two independent NIC ports (one for traffic IN and the other for traffic OUT). You would want to use the AF_PACKET mode of operation in Suricata. Details on that can be found here: https://docs.suricata.io/en/latest/setting-up-ipsinline-for-linux.html#af-packet-ips-mode. With proper hardware, such a setup could easily handle 10G data streams. You would never approach that on pfSense because the IDS/IPS packages on pfSense utilize the much slower host rings interface for one side of the packet path. The Linux AF_PACKET mode uses two discrete hardware NIC interfaces and bypasses the much slower host rings pathway.

So, where in the scheme should this dedicated server be installed if I need to inspect/block both incoming traffic (on each of the 4 uplinks) and internal traffic (on each of the LANs, each of which is connected to a separate pfSense NIC port through a managed hardware switch)?

-

@Sergei_Shablovsky said in How to make HA on 2 pfSense on bare metal WITH 4 x UPLINKS WANs ?:

What advantages does Linux (RHEL in my case) have over FreeBSD in this particular use case?

Mainly because the upstream Suricata development team tests extensively on Linux and all of them use Suricata on Linux. The only thing they do for FreeBSD is compile Suricata on a dedicated single instance just to be sure it compiles successfully. They do not test or benchmark there other than to ensure basic functionality.

@Sergei_Shablovsky said in How to make HA on 2 pfSense on bare metal WITH 4 x UPLINKS WANs ?:

In your experience, is there a good Ansible role for orchestrating Suricata or Snort?

I've never used Ansible, so I don't feel qualified to answer this question. I would completely disregard Snort for this role as it is single-threaded and has no hope of matching performance with Suricata. That is unless you migrate to the new Snort3 binary.

@Sergei_Shablovsky said in How to make HA on 2 pfSense on bare metal WITH 4 x UPLINKS WANs ?:

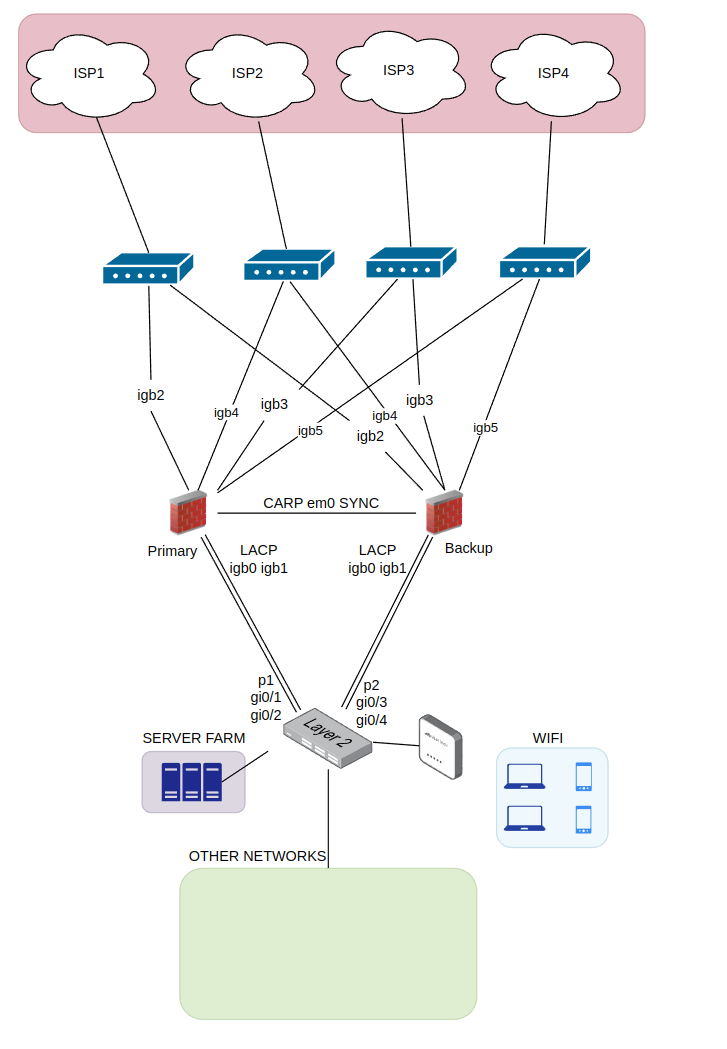

So, where in the scheme should this dedicated server be installed?

From your diagram at the top of this thread, it appears you would need at least 4 of these IDS appliances (and perhaps 8 for full redundancy). Each appliance would live on the link between your Internet facing switch and your pfSense firewalls.

The appliances would in effect perform as bridges copying traffic at wire speed between the two hardware NIC ports with Suricata sitting in the middle of the internal bridge path analyzing the traffic. Traffic that passed all the rules would be copied from the IN port directly to the OUT port while traffic that triggered a DROP rule would not be copied to the OUT port (and thus effectively dropped). I'm essentially describing a build-it-yourself version of this now discontinued Cisco/Sourcefire appliance family: https://www.cisco.com/c/en/us/support/security/firepower-8000-series-appliances/series.html.

-

@bmeeks said in How to make HA on 2 pfSense on bare metal WITH 4 x UPLINKS WANs ?:

@Sergei_Shablovsky said in How to make HA on 2 pfSense on bare metal WITH 4 x UPLINKS WANs ?:

So, where in the scheme should this dedicated server be installed?

From your diagram at the top of this thread, it appears you would need at least 4 of these IDS appliances (and perhaps 8 for full redundancy). Each appliance would live on the link between your Internet facing switch and your pfSense firewalls.

The appliances would in effect perform as bridges copying traffic at wire speed between the two hardware NIC ports with Suricata sitting in the middle of the internal bridge path analyzing the traffic. Traffic that passed all the rules would be copied from the IN port directly to the OUT port while traffic that triggered a DROP rule would not be copied to the OUT port (and thus effectively dropped).

Does this mean it is possible to use 2 bare metal servers (for redundancy), each with NICs totalling 8 hardware ports (4 for IN traffic and 4 for OUT)?

If you have some experience: what bare metal hardware (in terms of CPU/NIC characteristics) is capable of handling a total of 40Gb/s (4 x 10Gb/s + system and Suricata overhead), or 80Gb/s (4 x 20Gb/s)?

I'm essentially describing a build-it-yourself version of this now discontinued Cisco/Sourcefire appliance family: https://www.cisco.com/c/en/us/support/security/firepower-8000-series-appliances/series.html.

Thank you so much! I promise to read it this evening ;)

-

@Sergei_Shablovsky said in How to make HA on 2 pfSense on bare metal WITH 4 x UPLINKS WANs ?:

Does this mean it is possible to use 2 bare metal servers (for redundancy), each with NICs totalling 8 hardware ports (4 for IN traffic and 4 for OUT)?

You may want to review the Suricata docs I linked earlier so that you fully understand how IPS mode works. When I say IN and OUT those are relative. Literally each NIC port is both an IN and an OUT simultaneously just like a NIC on a conventional workstation supports bi-directional traffic. But yes, you would probably want 8 total NIC ports for the IPS and then one additional NIC for a management port. This management port would get an IP address. The other NIC ports would not get an IP assignment. More details below.

With a true Inline IPS configuration in Suricata, you specify in the YAML config file two port pairs for each Suricata instance. In the Suricata YAML configuration file you would specify an interface and a copy-interface for each traffic flow direction so that you can support bi-directional traffic. Here is an example config: https://docs.mirantis.com/mcp/q4-18/mcp-security-best-practices/use-cases/idps-vnf/ips-mode/afpacket.html.

This is the configuration from the link above:

    af-packet:
      - interface: eth0
        threads: auto
        defrag: yes
        cluster-type: cluster_flow
        cluster-id: 98
        copy-mode: ips
        copy-iface: eth1
        buffer-size: 64535
        use-mmap: yes
      - interface: eth1
        threads: auto
        cluster-id: 97
        defrag: yes
        cluster-type: cluster_flow
        copy-mode: ips
        copy-iface: eth0
        buffer-size: 64535
        use-mmap: yes

Examine the YAML configuration above closely and notice how the eth0 and eth1 interfaces are each used twice in the configuration: once as the "source" (the target of the interface: parameter) and again later on as the copy-iface or copy-to interface. This literally means traffic inbound on eth0 is intercepted by an af-packet thread, inspected by Suricata, then copied to eth1 for output or else dropped if the traffic matched a DROP rule. At the same time, a separate Suricata af-packet thread is reading inbound traffic from interface eth1, inspecting it, and copying it to eth0, the copy-iface, for output or else dropping it if the traffic matches a DROP rule signature. The above operations can be very fast because the network traffic completely bypasses the kernel stack.

In this design (or a similar design implemented with netmap on FreeBSD), you would need a totally separate NIC port for logging in and configuring and maintaining the IPS appliance. You would put an IP address on that separate NIC. But the NIC ports used for inline IPS operation would not get any IP configuration at all. Internally Suricata and the af-packet process treat them like a bridge (but do not configure them as an actual bridge!).
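Editor's sketch: for a box that sits inline on four uplinks, the same mirrored pattern has to be repeated once per port pair. The Python below is only an illustration of that repetition, not a tested Suricata deployment. The interface names (eth0 to eth7), the starting cluster-id, and the helper names are all assumptions made up for this example; the per-entry keys and values are copied from the quoted configuration.

```python
# Sketch: build mirrored af-packet IPS entries for several inline port pairs.
# Interface names and the starting cluster-id are hypothetical placeholders.


def afpacket_ips_entries(pairs, first_cluster_id=90):
    """Return one af-packet entry per direction for each (wan_side, fw_side) pair."""
    entries = []
    cluster_id = first_cluster_id
    for wan_side, fw_side in pairs:
        # Each inline pair needs two entries, one per traffic direction.
        for src, dst in ((wan_side, fw_side), (fw_side, wan_side)):
            entries.append({
                "interface": src,
                "threads": "auto",
                "defrag": "yes",
                "cluster-type": "cluster_flow",
                "cluster-id": cluster_id,  # distinct per interface, as in the quoted config
                "copy-mode": "ips",
                "copy-iface": dst,
                "buffer-size": 64535,
                "use-mmap": "yes",
            })
            cluster_id += 1
    return entries


def to_yaml(entries):
    """Render the entries as an af-packet YAML section using plain string formatting."""
    lines = ["af-packet:"]
    for entry in entries:
        items = list(entry.items())
        first_key, first_value = items[0]
        lines.append(f"  - {first_key}: {first_value}")
        for key, value in items[1:]:
            lines.append(f"    {key}: {value}")
    return "\n".join(lines)


# Four uplinks -> four inline pairs -> eight NIC ports (names are made up).
PAIRS = [("eth0", "eth1"), ("eth2", "eth3"), ("eth4", "eth5"), ("eth6", "eth7")]

if __name__ == "__main__":
    print(to_yaml(afpacket_ips_entries(PAIRS)))
```

The management NIC is deliberately absent from the generated section, since it carries an IP address and is not part of any inline pair.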

The above description is hopefully enough for you to formulate your own HA design in terms of hardware. With IPS operation, you would connect say eth0 to one of your WAN-side ports and eth1 to one of your pfSense firewall ports. Those two ports would literally be "inline" with the cable connecting your WAN switch port to your firewall port -- hence the name Inline IPS (inline intrusion prevention).

@Sergei_Shablovsky said in How to make HA on 2 pfSense on bare metal WITH 4 x UPLINKS WANs ?:

If you have some experience: what bare metal hardware (in terms of CPU/NIC characteristics) is capable of handling a total of 40Gb/s (4 x 10Gb/s + system and Suricata overhead), or 80Gb/s (4 x 20Gb/s)?

I don't have any personal experience with hardware. There are lots of thread discussions and examples to be found with a Google search for "high throughput or high speed or high traffic" Suricata operation. You might consider up to 4 server appliances. It would all depend on the capacity of the chosen hardware. NICs with plenty of queues will help. Run Suricata in the workers threading mode. That will create a single thread per CPU core (and ideally per NIC queue). Each single thread would handle a packet from ingestion to output on a given NIC queue. With lots of queues, CPU cores, and Suricata threads you get more throughput. There are commercial off-the-shelf appliances for this, but of course they are not cheap.
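Editor's sketch: one rough way to reason about "more queues, cores, and threads" is to compute the worst-case packet rate of a link and divide it across worker threads. The Python below assumes standard Ethernet framing (20 bytes of preamble and inter-frame gap per frame) and a perfectly even spread across workers, which real traffic will not give you. The worker count of 16 is a made-up example, and the per-packet inspection cost of Suricata is not modelled at all.

```python
# Rough sizing sketch: worst-case packet rate on an Ethernet link and the
# share each worker thread would see if load were spread perfectly evenly.

ETHERNET_OVERHEAD_BYTES = 20  # 8 bytes preamble/SFD + 12 bytes inter-frame gap


def line_rate_pps(link_bps, frame_bytes=64):
    """Packets per second at full line rate for a given frame size (FCS included)."""
    bits_on_wire = (frame_bytes + ETHERNET_OVERHEAD_BYTES) * 8
    return link_bps // bits_on_wire


def pps_per_worker(link_bps, workers, frame_bytes=64):
    """Even split of the line-rate packet load across worker threads."""
    return line_rate_pps(link_bps, frame_bytes) // workers


if __name__ == "__main__":
    ten_gig = 10_000_000_000
    hypothetical_workers = 16  # assumption for illustration only
    for frame in (64, 512, 1518):
        print(frame,
              line_rate_pps(ten_gig, frame),
              pps_per_worker(ten_gig, hypothetical_workers, frame))
```

Small frames dominate the budget: a 10G link full of 64-byte frames carries roughly eighteen times more packets per second than the same link full of 1518-byte frames.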

Follow-up Caveat: Suricata cannot analyze encrypted traffic. Since nearly 90% or more of traffic on the Internet these days is encrypted, Suricata is blind to a lot of what crosses the perimeter link. You can configure fancy proxy servers to implement MITM (man-in-the-middle) interception and decryption/re-encryption of such traffic, but that carries its own set of issues. Depending on what you are hoping to scan for, it could be that putting the security emphasis on the endpoints (workstations and servers) instead of the perimeter (firewall) is a much better strategy with a higher chance of successfully intercepting bad stuff.

-

@bmeeks said in How to make HA on 2 pfSense on bare metal WITH 4 x UPLINKS WANs ?:

Follow-up Caveat: Suricata cannot analyze encrypted traffic. Since nearly 90% or more of traffic on the Internet these days is encrypted, Suricata is blind to a lot of what crosses the perimeter link. You can configure fancy proxy servers to implement MITM (man-in-the-middle) interception and decryption/re-encryption of such traffic, but that carries its own set of issues. Depending on what you are hoping to scan for, it could be that putting the security emphasis on the endpoints (workstations and servers) instead of the perimeter (firewall) is a much better strategy with a higher chance of successfully intercepting bad stuff.

First, let me say my BIGGEST THANKS for such a detailed answer and for your passion in helping me resolve this case.

So, because SSL/TLS 1.3 connections have become the default in the most commonly used desktop and mobile browsers (and search engines even exclude sites without SSL from their rankings and search results), and QUIC is becoming more and more popular across server OSs and web servers, does that mean the EOL date for IDS/IPS without MITM is getting closer and closer? And not only for incoming traffic from outside.

Even inside an organisation’s security perimeter there would be no place for Suricata/Snort. And all a security admin could do would be:

- keeping applications and OSs up to date;

- planning internal infrastructure and intrusion monitoring well;

- extensively using AI for monitoring hardware and apps and for finding anomalies in real time (and alerting);

- creating great firewall rules.

I would be glad to read your opinion on that.

-

In a large organisation though they may force all traffic through a proxy to decrypt it. In which case it could still be scanned.

-

@stephenw10 said in How to make HA on 2 pfSense on bare metal WITH 4 x UPLINKS WANs ?:

In a large organisation though they may force all traffic through a proxy to decrypt it. In which case it could still be scanned.

But this means at least 2 (HA, active-backup) IDS/IPS servers on each (!) LAN. So in total 2 proxies + 2 IDS/IPS + several switches on each LAN.

-

Wouldn't really need more than one pair IMO. As long as all subnets have access to the proxy.

-

By the way, Gigamon’s TAPs and Packet Brokers look VERY PROMISING for mirroring all traffic for later inspection…

-

@mcury said in How to make HA on 2 pfSense on bare metal WITH 4 x UPLINKS WANs ?:

You will need to connect each firewall to each ISP's router.

This setup uses a single switch but you could use two with VRRP enabled if that is what you want.

This setup is using a LACP to the switches, but you could change that to use the 10G switch you mentioned.

Is it possible to use LACP for CARP SYNC as well, if I have 2 NIC interfaces for this purpose?

And what about using LACP from firewalls to upstream switches (igb2 - igb5) ?

P.S.

Sorry for late reply.

And THANK YOU SO MUCH for your networking passion and patience!

-

@Sergei_Shablovsky said in How to make HA on 2 pfSense on bare metal WITH 4 x UPLINKS WANs ?:

Is it possible to use LACP for CARP SYNC as well, if I have 2 NIC interfaces for this purpose?

You mean to use two links in a lagg for just the pfsync traffic? Yes, you can do that but it's probably not worth it IMO.

-

@Sergei_Shablovsky said in How to make HA on 2 pfSense on bare metal WITH 4 x UPLINKS WANs ?:

Is it possible to use LACP for CARP SYNC as well, if I have 2 NIC interfaces for this purpose?

I don't think it is necessary. The SYNC interface doesn't carry that much traffic; as far as I know, it is only firewall states and configuration changes (if I'm wrong about this, please someone correct me).

And what about using LACP from firewalls to upstream switches (igb2 - igb5) ?

That would help only if your internet link is above 1Gbps. Although a single client would never go beyond 1Gbps anyway.
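Editor's sketch: the reason a single flow does not exceed one member link's speed is that a LAG picks the outgoing member by hashing packet header fields, so every packet of a given flow lands on the same link. The Python below is a toy model of that idea only. Real switches and the FreeBSD lagg driver use their own hash functions and configurable hash inputs (MAC, IP, ports), so the CRC32 5-tuple hash and the function name here are assumptions for illustration.

```python
import zlib


def pick_member_link(src_ip, dst_ip, src_port, dst_port, proto, n_links):
    """Map a flow to one LAG member by hashing its 5-tuple (illustrative hash only)."""
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}|{proto}".encode()
    return zlib.crc32(key) % n_links


if __name__ == "__main__":
    n_links = 2  # e.g. two 1Gbps ports in one LACP bundle
    flow = ("192.0.2.10", "198.51.100.20", 50000, 443, "tcp")

    # Every packet of the same flow hashes to the same member link,
    # so this flow can never use more than one link's bandwidth.
    links_used = {pick_member_link(*flow, n_links) for _ in range(1000)}
    print("links used by one flow:", links_used)

    # Many different flows spread across the members, which is where
    # the aggregate bandwidth gain of a LAG comes from.
    spread = [pick_member_link("192.0.2.10", "198.51.100.20", port, 443, "tcp", n_links)
              for port in range(50000, 50016)]
    print("member chosen per flow:", spread)
```

Whether one client with many parallel flows can exceed a single link depends on which header fields the hash covers; with a MAC-only hash all of its traffic to the same gateway stays on one member.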

That setup above is considering intervlan traffic along with 1Gbps internet links, so as not to bottleneck anything.

Another approach would be to update all the NICs in the computers to 2.5Gbps, get 2.5Gbps switches with 10Gbps uplink ports to the firewall, then connect the NAS to another 10Gbps port, use the remaining 2.5Gbps ports to connect to the ISP routers/gateways, if those have 2.5Gbps.

By doing it like this, a single client would be able to reach 2.5Gbps to the WAN.

You could also do LACP with 2.5Gbps ports.

And THANK YOU SO MUCH for your networking passion and patience!

:) My pleasure

-

@mcury said in How to make HA on 2 pfSense on bare metal WITH 4 x UPLINKS WANs ?:

@Sergei_Shablovsky said in How to make HA on 2 pfSense on bare metal WITH 4 x UPLINKS WANs ?:

Is it possible to use LACP for CARP SYNC as well, if I have 2 NIC interfaces for this purpose?

I don't think it is necessary. The SYNC interface doesn't carry that much traffic; as far as I know, it is only firewall states and configuration changes (if I'm wrong about this, please someone correct me).

My FIRST MAIN GOAL IS HARDWARE REDUNDANCY for all hardware links:

- between the pfSense boxes and the switches connected to them;

- between the pfSense boxes themselves.

So the main “guiding mantra” now: AVAILABILITY - FIRST, SECURITY - second, OBSERVABILITY (MONITORING & ALERTING) - third.

And what about using LACP from firewalls to upstream switches (igb2 - igb5) ?

That would help only if your internet link is above 1Gbps. Although a single client would never go beyond 1Gbps anyway.

That setup above is considering intervlan traffic along with 1Gbps internet links, so as not to bottleneck anything.

The pfSense itself is connected to the networks through switches. So, for example, while the office nodes generate less than 1G in total, the web services generate between 3 and 7G depending on the time of day.

So the doubled hardware connection from pfSense to the upstream switch (which is directly connected to the ISP’s aggregation switch) is not only for availability but also to increase bandwidth.

Am I missing the logic somewhere? ;)

Another approach would be to update all the NICs in the computers to 2.5Gbps, get 2.5Gbps switches with 10Gbps uplink ports to the firewall, then connect the NAS to another 10Gbps port, use the remaining 2.5Gbps ports to connect to the ISP routers/gateways, if those have 2.5Gbps.

By doing it like this, a single client would be able to reach 2.5Gbps to the WAN.

You could also do LACP with 2.5Gbps ports.

Yes, the next upgrade step would be replacing the existing downstream NICs on the pfSense server with 10G NICs, and the upstream NICs with 40G or 20G NICs.

Am I looking in the right direction? :)

-

@Sergei_Shablovsky I used to configure VRRP with Cisco Switches, Catalyst.

Something around 15 years ago, more or less, not sure anymore..I'm getting really old hehe, that is not good..

Check if you can find VRRP switches that support 802.3ad (LACP), and go ahead, build the dream network :)

-

@stephenw10 said in How to make HA on 2 pfSense on bare metal WITH 4 x UPLINKS WANs ?:

In a large organisation though they may force all traffic through a proxy to decrypt it. In which case it could still be scanned.

Even with 1k nodes in one branch, inspecting all incoming traffic would be a challenge even for the latest and greatest Intel CPU + Intel QAT, and in the case of client-server tunneling it would not give us the ability to inspect the traffic between them at all.

In any case, decrypting traffic for inspection is definitely a very bad idea to implement on the border firewall itself: a firewall needs to make decisions very quickly, “on the fly”, and waiting for decryption results is not feasible because it would cut overall bandwidth by a factor of 10-15.

-

@stephenw10 said in How to make HA on 2 pfSense on bare metal WITH 4 x UPLINKS WANs ?:

Well there's no way to pass firewall blocks from something external if you're using it in IPS mode. At least not yet.

In IDS mode though you can just mirror the traffic to something external for analysis.

What about this scheme (let’s call it “part of a multi-layer defense strategy”):

1. An IDS/IPS system on a separate server receives mirrored traffic from all 4 WANs via the switches upstream of the pfSense-based firewall.
2. When rules (ACLs) in the IDS/IPS are triggered, the IDS/IPS adds the offending IPs to a blacklist.
3. A cron script on the IDS/IPS server adds all banned/blacklisted IPs to the switches’ ACLs.

In this scheme we have a constantly running “traffic analysis loop” that detects bad or potentially bad requests and blocks them before they reach the border pfSense-based firewall.

It also takes some load off the pfSense server’s hardware. As a quick example, this could guard against small DoS/DDoS or flooding attacks.
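Editor's sketch: the cron step of the scheme above could look roughly like the Python below. It assumes Suricata is writing its EVE JSON log (one JSON object per line, with event_type and src_ip fields). Everything else is hypothetical: the "never block" networks, the alert threshold, the ACL name, and especially the ACL line syntax, which is a made-up vendor-neutral form that would have to be adapted to the actual switches. Pushing the result to a switch is left out entirely.

```python
import ipaddress
import json

# Hypothetical safety list: never blacklist addresses from these networks.
NEVER_BLOCK = (
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
)


def blocked_sources(eve_lines, min_alerts=1, never_block=NEVER_BLOCK):
    """Collect source IPs that raised at least min_alerts alert events."""
    counts = {}
    for line in eve_lines:
        line = line.strip()
        if not line:
            continue
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip truncated or partially written log lines
        if event.get("event_type") != "alert" or "src_ip" not in event:
            continue
        try:
            ip = ipaddress.ip_address(event["src_ip"])
        except ValueError:
            continue
        if any(ip in net for net in never_block):
            continue  # do not lock out our own hosts
        counts[str(ip)] = counts.get(str(ip), 0) + 1
    return sorted(ip for ip, n in counts.items() if n >= min_alerts)


def render_acl(ips, acl_name="WAN-BLOCK"):
    """Render ACL lines in a made-up syntax; adapt to the real switch CLI."""
    return [f"{acl_name} deny ip host {ip} any" for ip in ips]


if __name__ == "__main__":
    sample = [
        '{"event_type": "alert", "src_ip": "203.0.113.7"}',
        '{"event_type": "flow", "src_ip": "198.51.100.9"}',
        '{"event_type": "alert", "src_ip": "10.0.0.5"}',
    ]
    for acl_line in render_acl(blocked_sources(sample)):
        print(acl_line)
```

A real version would also need to expire old entries, since switch ACL tables are small compared with a firewall's tables.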

What is Your opinion on this?

-

That could work. It's unlikely to help much with a DOS/DDOS attack though because by the time that traffic hits the ACLs on a switch it's already used up bandwidth on the WAN.

-

@stephenw10 said in How to make HA on 2 pfSense on bare metal WITH 4 x UPLINKS WANs ?:

That could work. It's unlikely to help much with a DOS/DDOS attack though because by the time that traffic hits the ACLs on a switch it's already used up bandwidth on the WAN.

Thank You for answering and “network firewalling passion” :)

Agreed: even in a mid-size (meaning 200-300 Gb/s) DDoS attack, no edge FW/switch will protect you from your 10Gb uplink to the ISP being filled up: once the incoming rate reaches 9.7-10.3 Gb/s (or some pps threshold), an automated alerting system just temporarily sends everything coming to you from outside to /dev/null.

But I am asking about reducing the load on the edge FW: moving some portion of the blocking rulesets from the edge FW to the uplink switches (especially since they are built on ASICs).

Anyway, Intel-based consumer servers with FreeBSD/RHEL are able to crunch only up to 50-100 Gbps, depending on CPUs and RAM/PCIe speed; an offloading scheme with DPDK adds an extra 200-300 Gbps to this; an offloading scheme with SmartNICs gives us even more; then come specialized NPUs with custom silicon, etc…

This means pfSense: up to 50-100 Gbps; TNSR: up to 400. (But in real life, on non-synthetic tests, the numbers would be more like 60 and 200 respectively.)

So every 1Gb dropped/rejected on the uplink ASIC-based switch would be VERY helpful.

Where am I wrong?

-

Well it would remove load from the firewall. So if you were under a DDoS attack and needed to still route between internal subnets that could be useful. But it wouldn't help with the attack itself much.

-

@stephenw10 said in How to make HA on 2 pfSense on bare metal WITH 4 x UPLINKS WANs ?:

Well it would remove load from the firewall. So if you were under a DDoS attack and needed to still route between internal subnets that could be useful. But it wouldn't help with the attack itself much.

Agreed! Anyway, mid-size/large DDoS attacks are better dealt with at the local ISP + Cloudflare level. There is no room for the edge FW here… :)

Let me note that, thinking in the “Zero Trust” direction, a FW on each local end node/service as “fine-tuned firewalling” would also be great, because each end node knows best what in particular needs to be secured (and how).

So in the end we build a 3-layered (at minimum) defense:

- ACLs on edge ASIC-based switches;

- pfSense as edge FW;

- PF/IPF/IPFW FW (certificates, tokens, etc…) on end node/service;

What do You think about this 3-layered scheme, @stephenw10 ?