10GB Lan causing strange performance issues, goes away when switched over to 1GB

-

Hmm, that is curious. You would not think the 10G link should make any difference there. The total rate is still limited by the incoming WAN to less than the 1G link to the client.

But it does start to look like an issue between the switch and client I agree. Try testing from a different client or different NIC type.

I would also try enabling whatever flow control the switch does have. At least as a test.

-

This article seems to describe my issue.

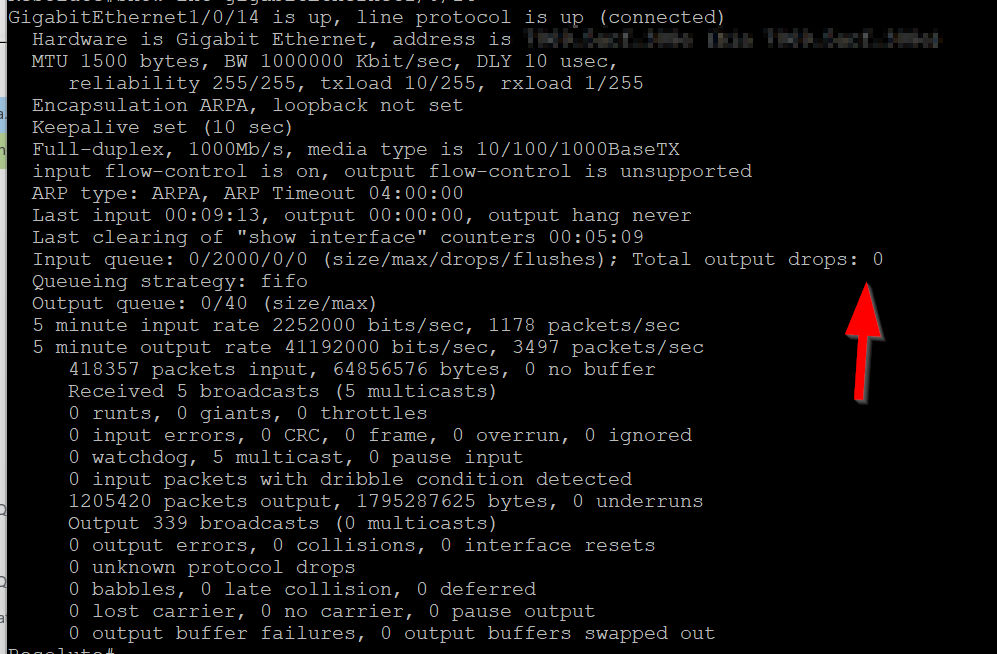

https://www.cisco.com/c/en/us/support/docs/switches/catalyst-3850-series-switches/200594-Catalyst-3850-Troubleshooting-Output-dr.html

So far I tried disabling QoS on all ports on the switch and the performance has since doubled, getting 600Mbps now as opposed to 300Mbps. I am still seeing output drops but not as many, so getting closer. I am at least happy and convinced this issue is purely a Cisco switch issue and not a pfSense bug.

The article is a little confusing, but I will see if what they recommend does the trick.

-

Ah, nice. Yeah I would never have suspected that, good catch!

-

@ngr2001 This was discussed 3+ years ago @ this thread

This is a TCP flow control negotiation issue that exists somewhere upstream from the 1GbE LAN client. For me, I am unsure if this is pfSense or the Comcast Cable modem. One way to deal with this is using ethernet flow control but it is an ugly sledgehammer solution.

The Cisco solution is to put this in your 3850 config to increase the buffers for the switch ports that are suffering from output drops:

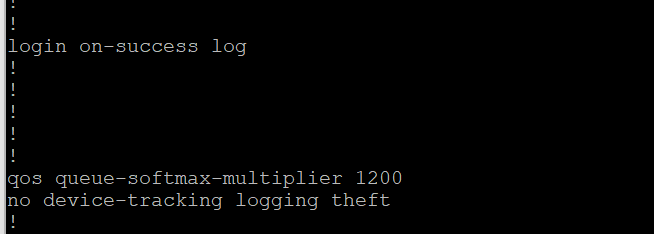

qos queue-softmax-multiplier 1200

-

Hmm, hard to see how TCP flow control could lead to packet drops from a switch...

Unless the client fills its buffers and can no longer accept packets maybe...

-

@stephenw10 That is exactly why. TCP flow control negotiation between the source and destination should prevent this from occurring, but something is interfering with it. L2 flow control works but is a stupid blunt hammer.

-

Hmm. Well also hard to see how either pfSense or the switch could have any effect on TCP flow between the client and server...

Other than perhaps with the 1G link the flow is sufficiently restricted that the TCP control never comes into effect.
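To put rough numbers on that, here is a minimal sketch of how quickly an egress queue on a 1G port fills when a burst arrives from a 10G uplink. The buffer sizes are made-up illustrative values, not Cisco specifications, and treating a larger buffer as 12x the smaller one is only an assumption chosen to mirror the 1200 multiplier mentioned earlier.

```python
GBIT = 1_000_000_000  # bits per second


def time_to_fill_us(buffer_bytes, ingress_bps, egress_bps):
    """Microseconds until a queue of buffer_bytes overflows when it is
    fed at ingress_bps and drained at egress_bps."""
    net_fill_bps = ingress_bps - egress_bps
    if net_fill_bps <= 0:
        # The port drains at least as fast as it fills: no overflow.
        return float("inf")
    return buffer_bytes * 8 / net_fill_bps * 1e6


# Hypothetical per-queue buffer sizes in bytes (assumptions, not vendor data).
for buffer_bytes in (100_000, 1_200_000):
    fill_10g = time_to_fill_us(buffer_bytes, 10 * GBIT, 1 * GBIT)
    fill_1g = time_to_fill_us(buffer_bytes, 1 * GBIT, 1 * GBIT)
    print(f"{buffer_bytes} B buffer: 10G->1G fills in {fill_10g:.1f} us, "
          f"1G->1G fills in {fill_1g} us")
```

With a 1G link upstream the queue never builds at all, which would fit the observation that the drops disappear when everything runs at 1G. With a 10G feed, a line-rate burst lasting well under a millisecond is enough to overflow a small queue, long before the sender has had a round trip to react.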

-

@stephenw10 A basic query to ChatGPT or Gemini gives you a similar response. Plus I already dealt with this working at Fortune #1/2 with your appliances.

AI Overview

A firewall can potentially disrupt TCP flow control by inspecting and modifying packets in a way that interferes with the mechanisms used to manage data transmission rates between two devices, potentially leading to data loss or congestion issues if not configured properly.

How a firewall could break TCP flow control:

Packet filtering based on TCP flags:

If a firewall aggressively filters packets based on TCP flags like SYN, FIN, or ACK, it could inadvertently drop essential packets used for flow control, like the "window update" packets which signal how much data the receiver can accept.

Deep packet inspection (DPI):

If a firewall performs deep packet inspection on TCP data, it might modify the data stream in a way that alters the TCP sequence numbers, causing confusion in the flow control mechanism.

State-based inspection limitations:

While stateful firewalls track TCP connections, they might not always accurately interpret complex flow control scenarios, potentially causing issues when dealing with large data transfers or dynamic window sizes.

Incorrect configuration:

Misconfigured firewall rules, like overly strict filtering or improper flow control settings, can lead to unintended disruptions in TCP traffic management.

Potential consequences of a firewall disrupting TCP flow control:

Packet loss:

If a firewall drops important flow control packets, the sender might continue sending data faster than the receiver can handle, resulting in data loss.

Congestion:

When flow control is disrupted, network congestion can occur as senders continue to transmit data without receiving proper feedback about the receiver's capacity.

-

Mmm, none of that should apply to pfSense unless a user has added complex custom rules or packages.

Potentially there could be some TCP flag sequence that pf doesn't see as legitimate. But I'd imagine that would break a lot of things. We'd be seeing floods of tickets. And pf would log that as blocked packets in the firewall logs (unless that has been disabled).

-

@stephenw10 All I can tell you is that with an OOB Netgate XG-1537 appliance configured with just the wizard using the two SFP+ ports for 10GbE WAN & 10GbE LAN downlinked to a Cisco Catalyst 9300 mGig switch, the 10GbE clients have no issues with output drops--but the 1GbE clients do due to lack of TCP flow control working when traffic flows through the pfSense.

iPerf3 on the LAN between 10GbE and 1GbE clients shows no output drops. Even performing iperf3 between the pfSense LAN interface and a 1GbE client shows no output drops. Try to iPerf3 through the pfSense to other corporate and DC servers and speeds drop depending on the buffer of the switch. Larger switches like Cisco 4500E and 9400 don't exhibit this issue but smaller 1RU switches like the 3850X/9300 do because of small buffers--which can be overcome with the command I provided earlier.

Move the 3850X/9300 switch out from behind the XG-1537, and both 10GbE and 1GbE clients hitting Ookla speedtest across the internet get 9.4Gbps and 940Mbps respectively. The same 1GbE clients hitting iperf3 servers on other areas of the corporate LAN or DCs also show full 940Mbps bidi.
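For anyone wanting to repeat this kind of comparison, a rough sketch of pulling throughput and retransmit counts out of an iperf3 JSON report. The `run_iperf3` and `summarize` helpers and the sample numbers are hypothetical and only for illustration; the sample is not a capture from the tests described above.

```python
import json
import subprocess


def run_iperf3(server, seconds=10):
    """Run an iperf3 TCP client test against `server` and return the
    parsed JSON report (-J asks iperf3 for JSON output)."""
    result = subprocess.run(
        ["iperf3", "-c", server, "-t", str(seconds), "-J"],
        capture_output=True, text=True, check=True,
    )
    return json.loads(result.stdout)


def summarize(report):
    """Reduce an iperf3 TCP client report to the numbers worth comparing."""
    end = report["end"]
    sent = end["sum_sent"]
    received = end["sum_received"]
    return {
        "sent_mbps": round(sent["bits_per_second"] / 1e6, 1),
        "received_mbps": round(received["bits_per_second"] / 1e6, 1),
        # Retransmits are only reported on platforms that expose them.
        "retransmits": sent.get("retransmits"),
    }


# Illustrative report fragment (made-up values).
sample_report = {
    "end": {
        "sum_sent": {"bits_per_second": 312_400_000.0, "retransmits": 1873},
        "sum_received": {"bits_per_second": 310_900_000.0},
    }
}
print(summarize(sample_report))
```

Running the same test once LAN-to-LAN and once through the firewall, then comparing the retransmit counts alongside the switch's output drop counters, makes it easier to see which path is actually losing packets.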

-

@stephenw10 said in 10GB Lan causing strange performance issues, goes away when switched over to 1GB:

We'd be seeing floods of tickets. And pf would log that as blocked packets in the firewall logs (unless that has been disabled).

In terms of this: what percentage of pfSense users have, say, 2/5/10Gbps Internet coming into the pfSense WAN interface and then a mixture of 10GbE and 1GbE clients downstream? I would think the number is still niche. However I deployed several dozen of your appliances along with other security appliances in the fruit company. Connecting your appliances with 1GbE to the WAN would always resolve the issue. Connecting your appliances with 10GbE or greater always provided the same outcome for 1GbE clients downstream. I am not criticizing you but this is from over 3 decades of network engineering experience with all types of firewalls from Cisco, PAN, Fortinet and Netgate. They all can create the same issues.

-

Hmm, interesting. I'm not arguing that it doesn't happen, more just that it's hard to see how it could. And consequently what could be done to address it.

An increasing number of users have access to faster WAN links and LAN side hardware. It's only going to become more important.

-

@stephenw10 Exactly. I am not even saying it's not the AT&T or Comcast crappy gateways that are the culprit either. There are so many variables to these issues. Same thing happened when we saw GbE come into the market and most clients were FastEthernet 100Mbps.

-

@lnguyen This is great stuff, makes perfect sense now thanks for all the help.

A few follow-up questions.

The command below, am I correct that this should only be applied to the 10GB port on the cisco switch, should I leave the 1GB ports alone / stock ?

qos queue-softmax-multiplier 1200

You mentioned that Catalyst 9300 suffers from the same problem, are there any 1U 48-Port Cisco switches that do not have this issue that you would recommend, perhaps not even Cisco ?

You mentioned the above fix being a sledgehammer approach. I take that as this fix may not be ideal and perhaps keeping the LAN simply on 1Gb would result in better overall performance and lower latency. This is just for my home network but as you can tell I have an obsession for speed.

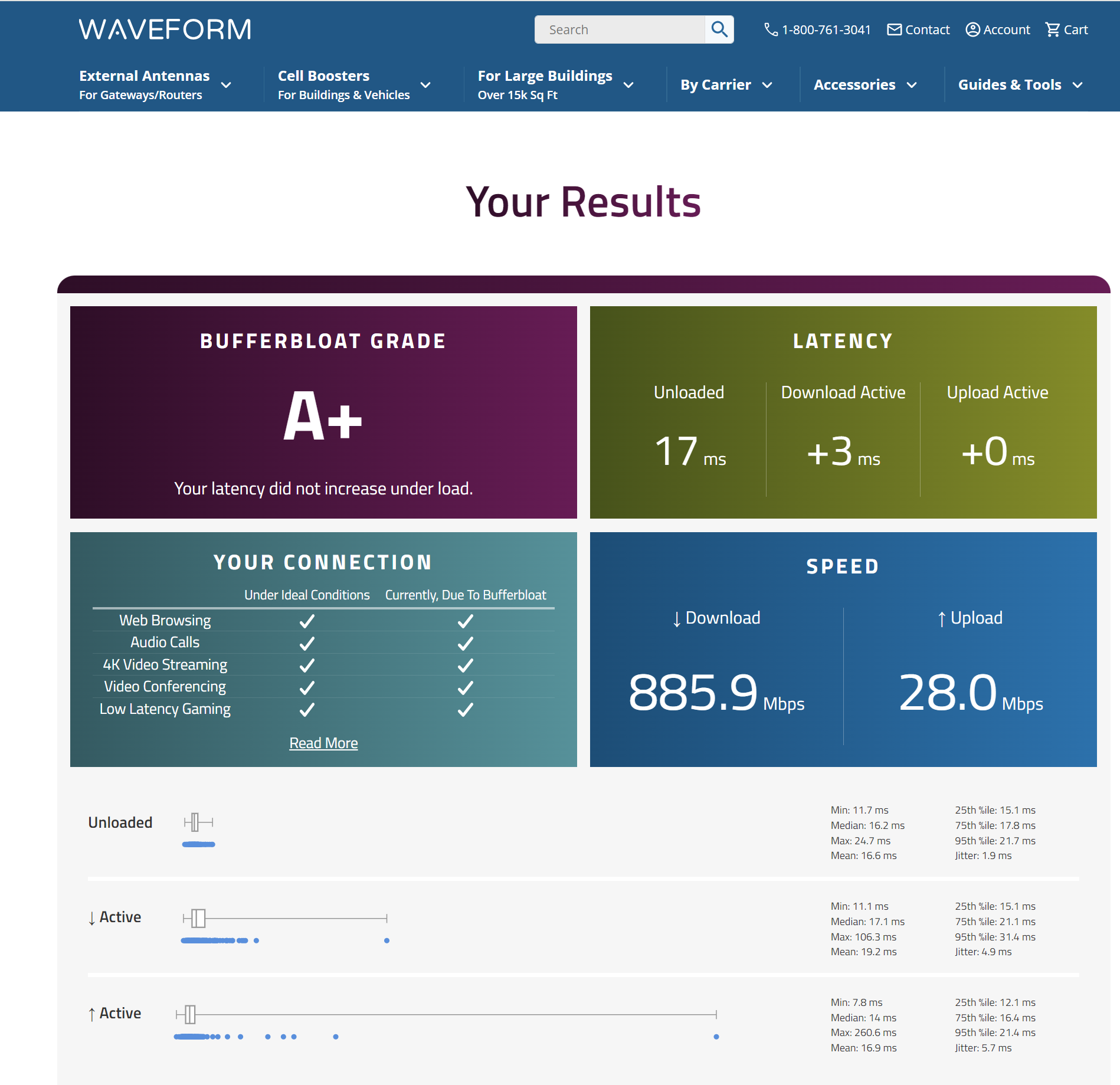

Perhaps I should have also mentioned that on the pfSense side I do have a Codel limiter applied on the WAN NIC to alleviate bufferbloat.

My overall goal with this network is to have the ultimate lowest latencies for pc gaming purposes.

-

@ngr2001 said in 10GB Lan causing strange performance issues, goes away when switched over to 1GB:

The command below, am I correct that this should only be applied to the 10GB port on the cisco switch, should I leave the 1GB ports alone / stock ?

It's applied globally.

@ngr2001 said in 10GB Lan causing strange performance issues, goes away when switched over to 1GB:

You mentioned that Catalyst 9300 suffers from the same problem, are there any 1U 48-Port Cisco switches that do not have this issue that you would recommend, perhaps not even Cisco ?

Not that I have seen. The large chassis with dedicated supervisors have huge buffers but I doubt you would be buying those.

@ngr2001 said in 10GB Lan causing strange performance issues, goes away when switched over to 1GB:

You mentioned the above fix being a sledgehammer approach.

Using L2 Ethernet flow control rather than TCP is a blunt sledgehammer because it impacts all frames flowing through that interface. TCP flow control is per client session--hence it is better.

@ngr2001 said in 10GB Lan causing strange performance issues, goes away when switched over to 1GB:

Perhaps I should have also mentioned that on the pfSense side I do have a Codel limiter applied on the WAN NIC to alleviate bufferbloat.

Try disabling it to see how it impacts what you are seeing.

-

Yeah traffic shaping could definitely be an issue.

-

I'll try both suggestions and report back, thank you.

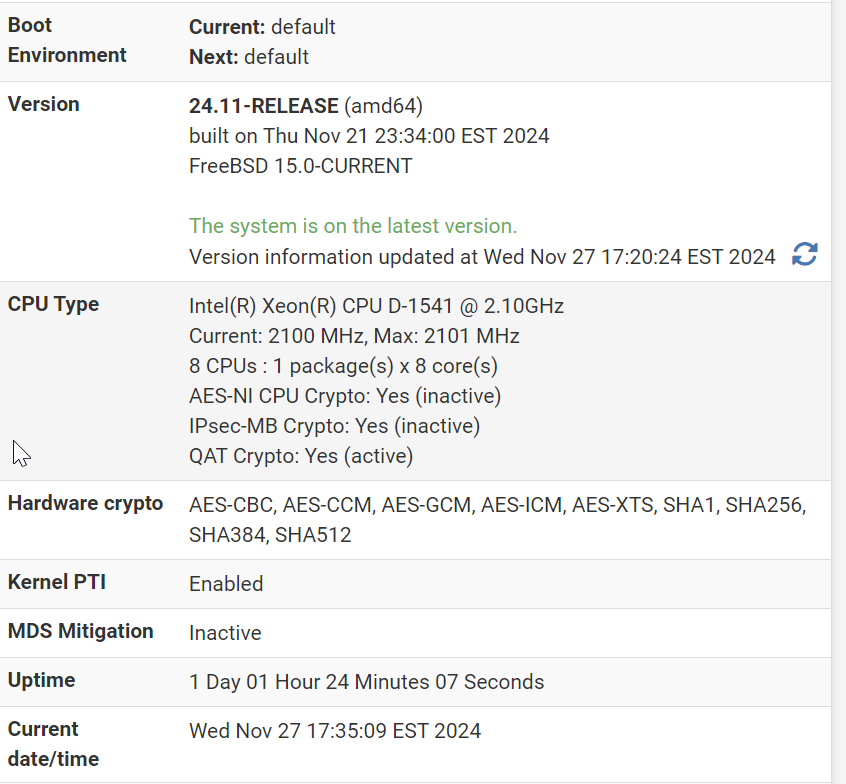

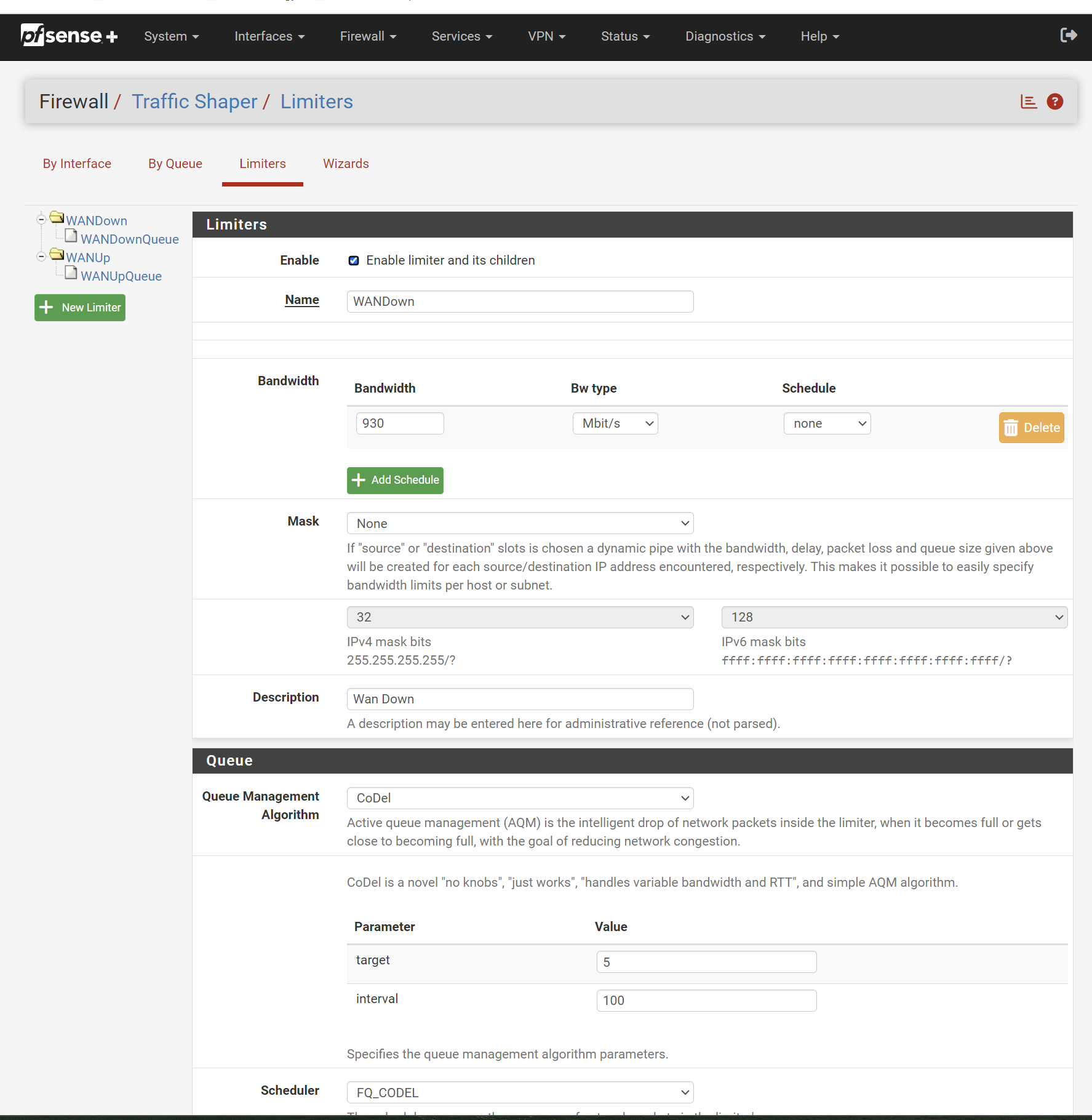

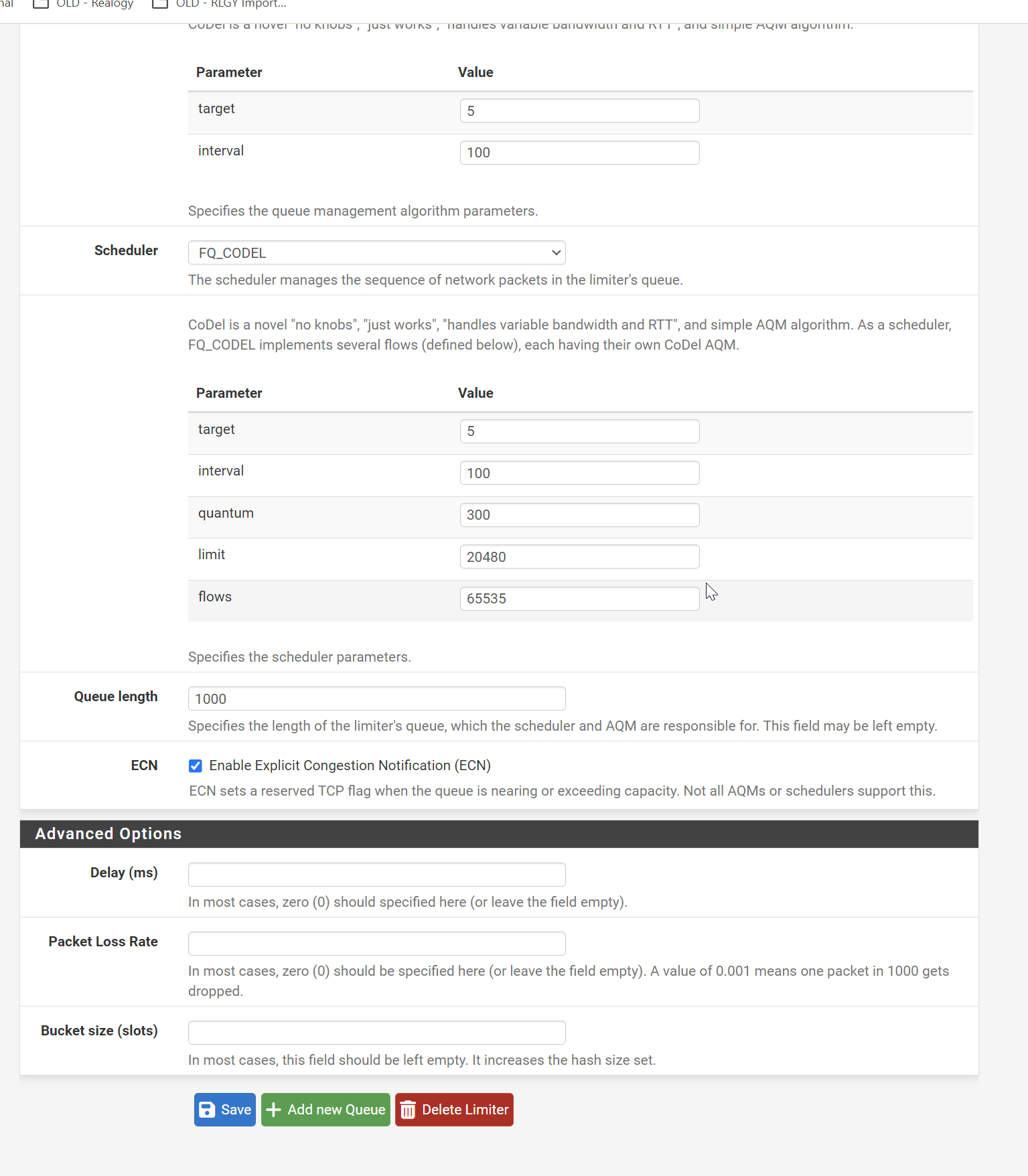

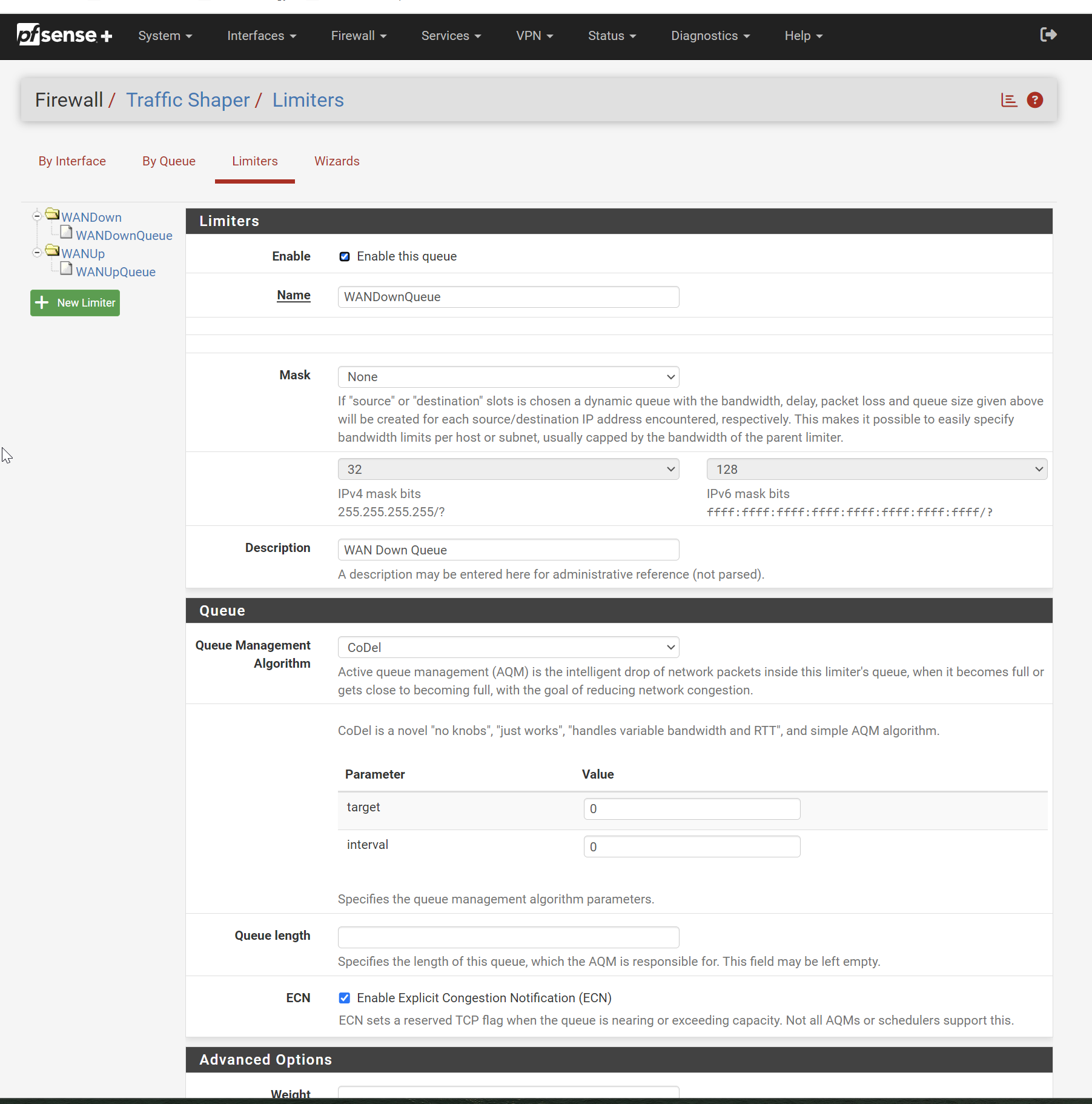

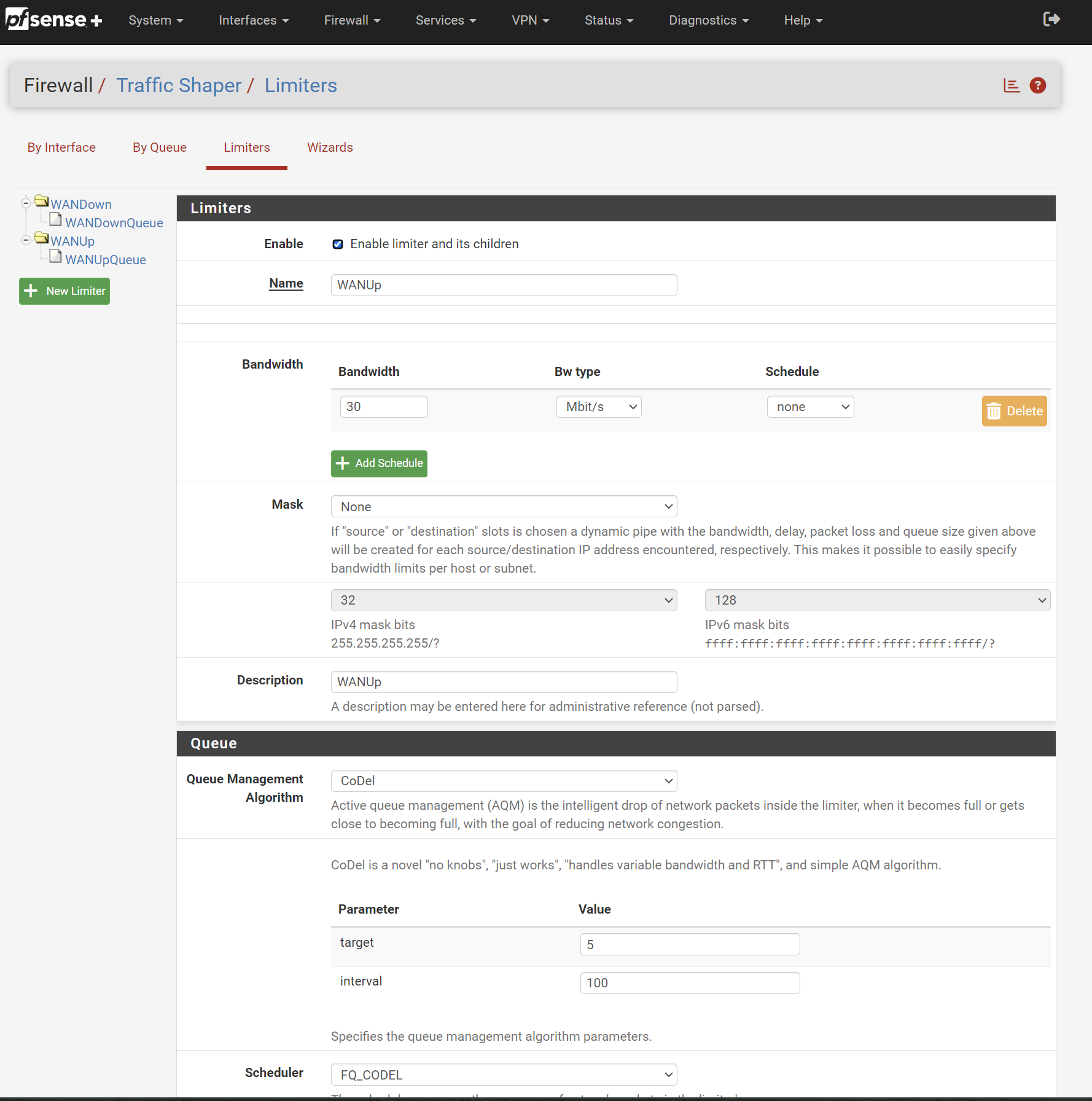

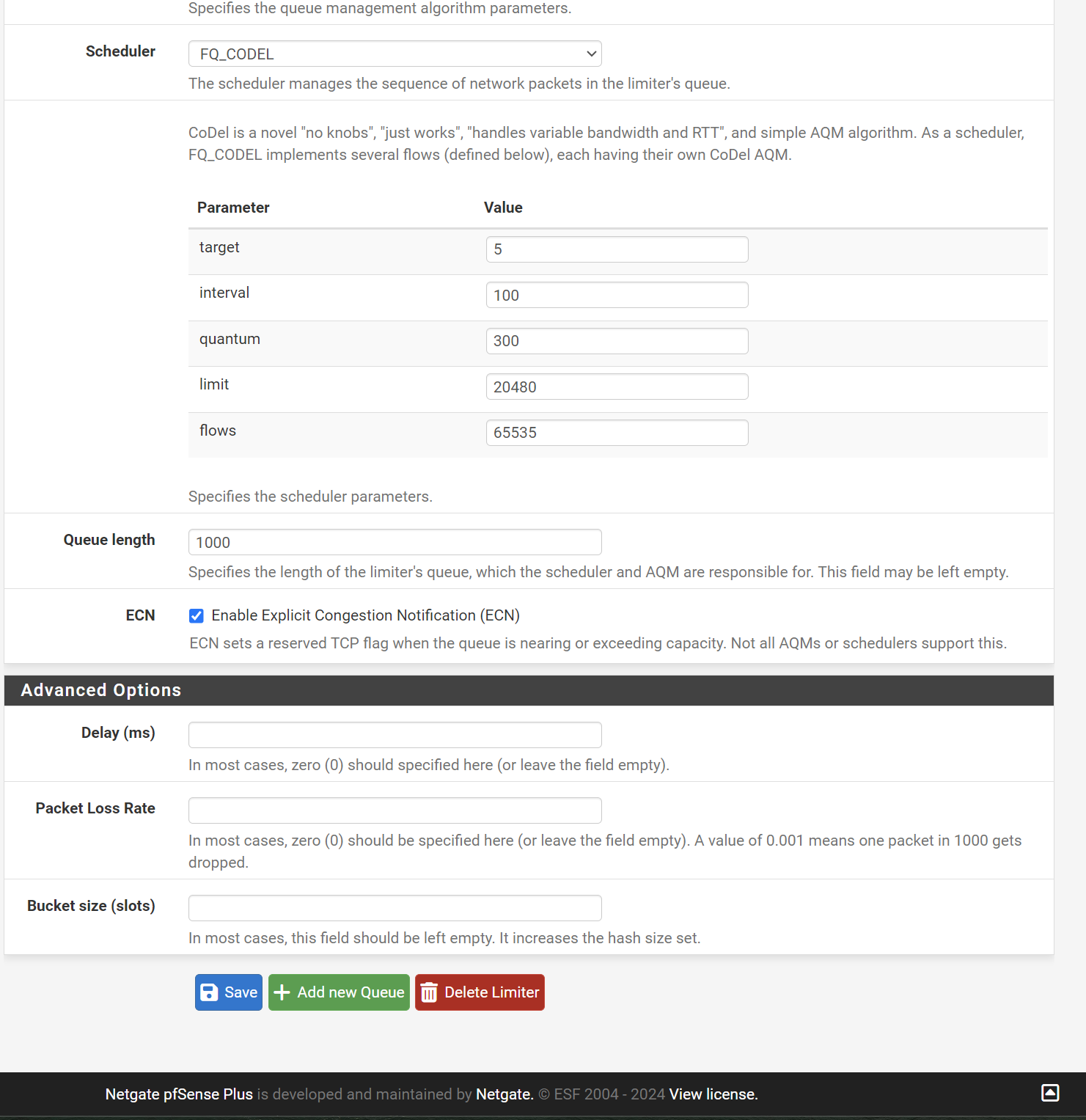

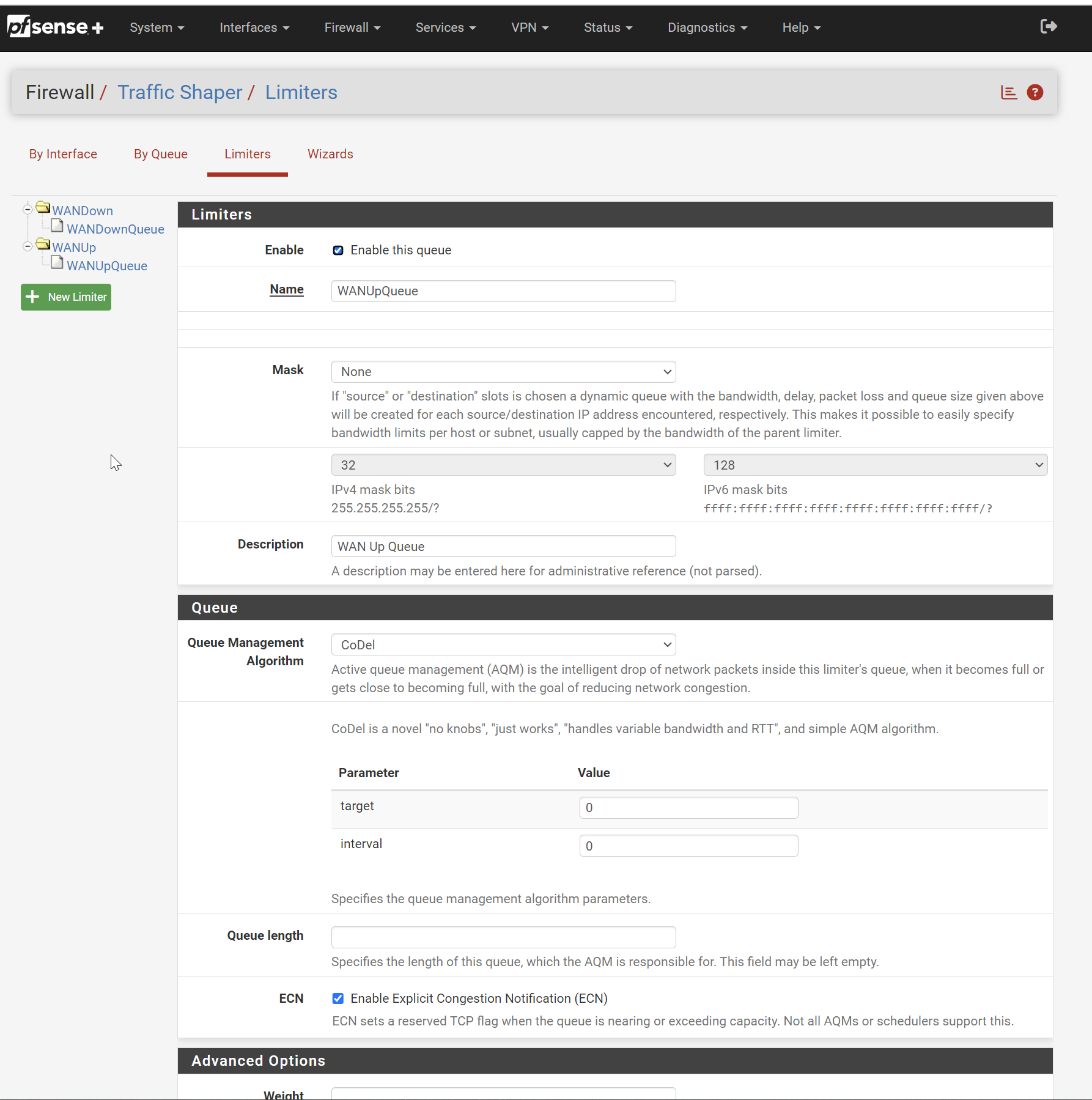

In case anyone is interested, this is my basic limiter setup to combat bufferbloat. I would not give this setting up, since I score a perfect A+ with it in place. If I disable it I get wild swings in scores, but I will disable it for the sake of testing.

Other than that I have 4 VLANs using the Router on a stick method, everything else is hardened down, no plugins or other features running, other than I do have a QAT Crypto card installed and working.

-

Had some time to test again.

I applied the setting "qos queue-softmax-multiplier 1200" to my Cisco 3650 global config.

Like magic my speedtests while using the SFP+ 10GB port are now back to full speed. I am also monitoring my switch ports and so far no Output Drops.
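For keeping an eye on the drop counters over time, a small sketch that pulls the "Total output drops" value per interface out of saved `show interfaces` text. The `output_drops` helper is hypothetical, and the sample text is illustrative only; it is not pasted from my switch.

```python
import re

# Interface header line, e.g. "GigabitEthernet1/0/1 is up, line protocol is up"
HEADER = re.compile(r"^(\S+) is .*line protocol is")
# Counter line containing "Total output drops: <number>"
DROPS = re.compile(r"Total output drops:\s*(\d+)")


def output_drops(show_interfaces_text):
    """Return {interface name: total output drops} from `show interfaces` text."""
    drops = {}
    current = None
    for line in show_interfaces_text.splitlines():
        header = HEADER.match(line)
        if header:
            current = header.group(1)
            continue
        found = DROPS.search(line)
        if found and current is not None:
            drops[current] = int(found.group(1))
    return drops


# Illustrative sample (made-up counters).
sample_text = """\
GigabitEthernet1/0/1 is up, line protocol is up (connected)
  Input queue: 0/2000/0/0 (size/max/drops/flushes); Total output drops: 48213
TenGigabitEthernet1/1/1 is up, line protocol is up (connected)
  Input queue: 0/2000/0/0 (size/max/drops/flushes); Total output drops: 0
"""

for name, count in output_drops(sample_text).items():
    print(f"{name}: {count} output drops")
```

Taking a snapshot before and after a speedtest and comparing the two dictionaries shows whether the counters are still climbing on any port.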

I have to admit I am not 100% sure how the above fix works, I guess I am hung up on the fact that I have removed all QOS settings from my config yet this setting seems kind of tied to QOS. I guess in the background no matter what you do the switch still has some kind of intelligence built in for QOS purposes and this simply increases the buffer pool regardless if QOS is in use or not ?

No need to disable / test Codel Limiters on my pfSense as I see it, this issue was purely a Cisco Problem.

-

very happy with these results too.

-

Nice.