datatransfer rate not as high as it should be

-

Hello,

I built my pfSense on a Proxmox machine about half a year ago. I've had no issues or only minor ones since then, but one thing has always stood out: whenever I measure the data transfer rate, I get 80 MB/s (667 Mbit/s) and never more, even though my whole LAN is built for 1 Gbit/s. I've checked ALL my hardware/software, EVERY. SINGLE. RELEVANT. ASPECT. As far as I can tell, none of it limits the data transfer rate to 80 MB/s. To give a brief picture of my server structure: every other server instance depends on the pfSense server running on the Proxmox machine (even the Proxmox machine itself), which means I get the same results on any other instance. All statistics were measured with iperf3.

These WERE my usual data transfer rates with 4 GB RAM and 1 CPU core (I once raised the RAM to 6 GB; it made absolutely no difference, still limited at 80 MB/s):

    [ ID] Interval           Transfer     Bandwidth
    [  4]   0.00-1.00   sec  78.9 MBytes   661 Mbits/sec
    [  4]   1.00-2.00   sec  79.1 MBytes   663 Mbits/sec
    [  4]   2.00-3.00   sec  78.8 MBytes   662 Mbits/sec
    [  4]   3.00-4.00   sec  79.0 MBytes   662 Mbits/sec
    [  4]   4.00-5.00   sec  78.8 MBytes   661 Mbits/sec
    [  4]   5.00-6.00   sec  79.0 MBytes   663 Mbits/sec
    [  4]   6.00-7.00   sec  78.9 MBytes   661 Mbits/sec
    [  4]   7.00-8.00   sec  78.8 MBytes   662 Mbits/sec
    [  4]   8.00-9.00   sec  79.0 MBytes   662 Mbits/sec
    [  4]   9.00-10.00  sec  78.8 MBytes   661 Mbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bandwidth
    [  4]   0.00-10.00  sec   789 MBytes   662 Mbits/sec                  sender
    [  4]   0.00-10.00  sec   789 MBytes   662 Mbits/sec                  receiver

I have absolutely no clue why I'm not getting any higher. Yes, I know 1 Gbit/s is the max, but then why exactly 80 MB/s? I don't really expect every transfer to run at exactly 1 Gbit/s, but somewhere around that number would be pleasant and is my goal.

Please help me figure out what is going on here. I want to get rid of this. I'm pretty sure I'm missing something. But what I can guarantee is that this shouldn't be a hardware bottleneck; I've checked everything multiple times to be absolutely sure. I've read about allocating more resources to pfSense: I did this with zero impact, gave it more RAM and more cores. Nothing.

Then I also read about a certain case where, when you run pfSense (FreeBSD) as a virtual machine on Proxmox (a Debian-based Linux distro), the communication through the emulation layer impacts the data transfer speed. Can this be the origin of the issue? I couldn't really check this one. I'm just naming things I've come across while researching, and I want to dig deeper into this issue. Please help me examine it.

-

@pleaseHELP are you testing through pfsense, or to it or from it?

Your test should be through

pcA -- pfsense -- pcB

Where you're testing with iperf from pcA to B, or from B to A.. Testing to or from pfsense from either A or B is not an actual test of pfsense routing/firewalling. It's just a test of it as a server.

About 113MBps ish is the max you're going to see moving data over gig.
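For anyone wondering where a figure like 113 MBps comes from, here is a rough back-of-the-envelope sketch. It assumes a standard 1500-byte MTU, IPv4 and TCP headers without options, and the usual Ethernet framing overhead. None of those values were stated in this thread, and the function name is made up for illustration.

```python
# Rough estimate of the best-case TCP payload rate on an Ethernet link.
# Assumptions (not from the thread): MTU 1500, IPv4 + TCP headers without
# options (20 + 20 bytes), and 38 bytes of per-frame Ethernet overhead
# (preamble/SFD 8, header 14, FCS 4, inter-frame gap 12).

def max_tcp_goodput_bits(link_bits_per_sec, mtu=1500, ip_tcp_headers=40,
                         ethernet_overhead=38):
    payload = mtu - ip_tcp_headers      # TCP payload bytes carried per frame
    on_wire = mtu + ethernet_overhead   # bytes the frame occupies on the wire
    return link_bits_per_sec * payload / on_wire

goodput = max_tcp_goodput_bits(1_000_000_000)
print(f"{goodput / 1e6:.0f} Mbit/s")
print(f"{goodput / 8 / 1e6:.1f} MB/s (10^6 bytes)")
print(f"{goodput / 8 / 2**20:.1f} MiB/s (2^20 bytes, what iperf3 labels 'MBytes')")
```

Under these assumptions the ceiling works out to roughly 949 Mbit/s, which is about 113 when expressed in the 2^20-byte units that iperf3 prints as "MBytes". TCP options such as timestamps would lower it slightly.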

    $ iperf3.exe -c 192.168.9.10
    Connecting to host 192.168.9.10, port 5201
    [  5] local 192.168.9.100 port 1520 connected to 192.168.9.10 port 5201
    [ ID] Interval           Transfer     Bitrate
    [  5]   0.00-1.01   sec   115 MBytes   956 Mbits/sec
    [  5]   1.01-2.01   sec   112 MBytes   948 Mbits/sec
    [  5]   2.01-3.00   sec   114 MBytes   958 Mbits/sec
    [  5]   3.00-4.01   sec   114 MBytes   949 Mbits/sec
    [  5]   4.01-5.01   sec   113 MBytes   950 Mbits/sec
    [  5]   5.01-6.01   sec   115 MBytes   964 Mbits/sec
    [  5]   6.01-7.00   sec   112 MBytes   949 Mbits/sec
    [  5]   7.00-8.01   sec   114 MBytes   948 Mbits/sec
    [  5]   8.01-9.00   sec   112 MBytes   950 Mbits/sec
    [  5]   9.00-10.01  sec   114 MBytes   949 Mbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bitrate
    [  5]   0.00-10.01  sec  1.11 GBytes   952 Mbits/sec                  sender
    [  5]   0.00-10.04  sec  1.11 GBytes   949 Mbits/sec                  receiver

Yeah 600 something would be low.

So how exactly are you testing? And test in the other direction as well, you can use -R

    $ iperf3.exe -c 192.168.9.10 -R
    Connecting to host 192.168.9.10, port 5201
    Reverse mode, remote host 192.168.9.10 is sending
    [  5] local 192.168.9.100 port 1524 connected to 192.168.9.10 port 5201
    [ ID] Interval           Transfer     Bitrate
    [  5]   0.00-1.01   sec   113 MBytes   941 Mbits/sec
    [  5]   1.01-2.01   sec   113 MBytes   943 Mbits/sec
    [  5]   2.01-3.01   sec   113 MBytes   950 Mbits/sec
    [  5]   3.01-4.01   sec   112 MBytes   938 Mbits/sec
    [  5]   4.01-5.01   sec   113 MBytes   944 Mbits/sec
    [  5]   5.01-6.01   sec   112 MBytes   940 Mbits/sec
    [  5]   6.01-7.01   sec   113 MBytes   949 Mbits/sec
    [  5]   7.01-8.01   sec   113 MBytes   950 Mbits/sec
    [  5]   8.01-9.01   sec   113 MBytes   949 Mbits/sec
    [  5]   9.01-10.00  sec   113 MBytes   950 Mbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bitrate         Retr
    [  5]   0.00-10.01  sec  1.10 GBytes   947 Mbits/sec    0             sender
    [  5]   0.00-10.00  sec  1.10 GBytes   945 Mbits/sec                  receiver

If you're testing from A to B, are those VLANs where the traffic is a hairpin down the same physical wire?

-

Hello @johnpoz,

Thanks for the reply. Yes, I am using iperf between pfSense and my computer. In any case, I've tested other LAN ports in different rooms with different devices, all showing the same data transfer rate to my homeserver. If I do an iperf test on my TrueNAS, for example, the speed would be the same as doing it on pfSense (since the connection of TrueNAS depends on pfSense).

Here is the test (My PC as client, Server as server):

    [ ID] Interval           Transfer     Bandwidth
    [  4]   0.00-1.00   sec  79.3 MBytes   665 Mbits/sec
    [  4]   1.00-2.00   sec  79.3 MBytes   665 Mbits/sec
    [  4]   2.00-3.00   sec  79.4 MBytes   666 Mbits/sec
    [  4]   3.00-4.00   sec  74.6 MBytes   626 Mbits/sec
    [  4]   4.00-5.00   sec  79.5 MBytes   667 Mbits/sec
    [  4]   5.00-6.00   sec  79.5 MBytes   667 Mbits/sec
    [  4]   6.00-7.00   sec  79.5 MBytes   667 Mbits/sec
    [  4]   7.00-8.00   sec  79.3 MBytes   665 Mbits/sec
    [  4]   8.00-9.00   sec  79.5 MBytes   668 Mbits/sec
    [  4]   9.00-10.00  sec  79.5 MBytes   667 Mbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bandwidth
    [  4]   0.00-10.00  sec   790 MBytes   662 Mbits/sec                  sender
    [  4]   0.00-10.00  sec   790 MBytes   662 Mbits/sec                  receiver

With the -R parameter (other direction) I actually get the results I want:

    [ ID] Interval           Transfer     Bandwidth
    [  4]   0.00-1.00   sec   110 MBytes   924 Mbits/sec
    [  4]   1.00-2.00   sec   113 MBytes   949 Mbits/sec
    [  4]   2.00-3.00   sec   113 MBytes   949 Mbits/sec
    [  4]   3.00-4.00   sec   113 MBytes   948 Mbits/sec
    [  4]   4.00-5.00   sec   113 MBytes   946 Mbits/sec
    [  4]   5.00-6.00   sec   113 MBytes   948 Mbits/sec
    [  4]   6.00-7.00   sec   106 MBytes   893 Mbits/sec
    [  4]   7.00-8.00   sec   113 MBytes   949 Mbits/sec
    [  4]   8.00-9.00   sec   113 MBytes   948 Mbits/sec
    [  4]   9.00-10.00  sec   113 MBytes   948 Mbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bandwidth       Retr
    [  4]   0.00-10.00  sec  1.09 GBytes   940 Mbits/sec    0             sender
    [  4]   0.00-10.00  sec  1.09 GBytes   940 Mbits/sec                  receiver

I am not using VLANs; everything is wired together with physical cables. Now, I don't really get why the speed is so throttled when data moves to the homeserver, while in the other direction that is not the case. Do you have a guess? I'm totally out of ideas. I'm not even sure whether that "emulation delay" I thought about might still be the reason for this issue. Thanks in advance.

-

@pleaseHELP said in datatransfer rate not as high as it should be:

, the speed would be the same as doing it on PfSense, (since the connection of TrueNAS depends on PfSense).

No. This is totally wrong.

Routing capability is quite different from running an iperf server on pf.

Don't assume this and don't test like this.

Do what johnpoz said, and recheck.

-

@pleaseHELP said in datatransfer rate not as high as it should be:

Now, I don't really get why the speed is so trottled when data moves to the homeserver, but in different direction that is not the case. Do you have a guess?

As @johnpoz was saying, it is not being "throttled" by pfsense, it's just that pfsense is not a good "iperf server".

If I do an iperf test on my TrueNAS for example, the speed would be the same as doing it on PfSense, (since the connection of TrueNAS depends on PfSense).

Did you actually test this, between your PC and TrueNAS? I don't think TrueNAS is the best iperf server either. I "only" get around 5 Gbps between PC and TrueNAS but a full ~9.4 when testing towards a simple CT on the same Proxmox machine.

If you do want to test pfsense routing performance, you need to test between VLANs. And if you want to test end to end firewall performance, you need to test from the internet using speedtest or a public iperf server. But you should not use pfsense as one of the iperf endpoints. It is built and optimized to handle the traffic generated by iperf, but not really to run iperf itself (even though it can).

To test your internal LAN speeds you need to spin up a VM or CT and test towards that from your PC. If they are on the same subnet you will be testing only your switching, server and PC capabilities, not pfsense at all. And you would likely see 930 ish speeds in both directions. Probably also if testing between PC and TrueNAS...

-

@pleaseHELP

This :

@johnpoz said in datatransfer rate not as high as it should be:

About 113MBps ish is the max your going to see moving data over gig.

is the maximum possible.

Some conditions exist.

Do not use virtualization, do a bare metal test. Virtualization costs time (CPU resources). So, for the real test, ditch proxmox.

Check that the NICs you use are quality 'Intel' NICs that are known (check on the net) to support 1 Gbit well. This means, just to be sure, don't use any Realtek stuff.

Example :

I've a test PC on LAN.

Another test PC on PORTAL, or IDRAC.

The test should be done with these two devices.

pfSense should do 'just the routing'.

"98x Mbits/sec" is now possible (for me).

Also on my WAN interface, but for anything upstream (the Internet) : I'll hit my ISP max rate = 2.4 Gbits/sec down, about 800 Mbits/sec up, which means that it gets clipped to 98x Mbits/sec down and 800 Mbits/sec up (my WAN interface is 1 Gbits/sec).

-

@Gertjan said in datatransfer rate not as high as it should be:

Do not use virtualization, do a bare metal test. Virtualization costs time (CPU resources). So, for the real test, ditch proxmox.

There are no issues whatsoever running pfsense virtualized on Proxmox. You would need to be using some really low end HW to have trouble reaching 1Gbps. And then virtualization probably isn't a good idea anyway...

I have no issues reaching 9.4 Gbit/s routing over VLANs even with interfaces virtualized (VirtIO) on Proxmox.

-

Sure. Not saying what proxmox can do, or can't. It depends what hardware you throw at it and how you've set it up.

This : "datatransfer rate not as high as it should be"

needs some "as neutral as possible" settings. For me, that's bare metal, good NICs, and then pfSense will deliver.

As soon as pleaseHELP discovers what pfSense can do (or can't), he can remove (or not) pfSense from the list. Thus finding the - his - issue faster.

-

Hello and thanks for all the responses. I've been fighting the anti-spam protection this forum has to offer. Thanks also to those who pointed out what johnpoz recommended. Before, I thought the iperf traffic was running through pfSense, which wasn't the case in any of my tests.

Now that I understand the situation, let me clear some things up: I also have a TrueNAS server on my Proxmox homeserver, to which I regularly move data. I've noticed that sending data to that TrueNAS is always capped at 80 Mb/s, but getting data from it runs at about 1 Gbit/s, as it should for upload too. This isn't just a TrueNAS thing. Whether it's pfSense, TrueNAS or just an Ubuntu Server running in a container, this happens with every upload to my homeserver. Data from my PC to the server is capped at 80 Mb/s, but the other way around there are no issues whatsoever, and I honestly don't know what is causing this.

Now you might ask yourself what pfSense has to do with this general network-related issue, and you'd be right. The thing is that I've already explained this whole situation to you all, and either way you guys are probably the best when it comes to such network-related issues, so any help, ideas or recommendations would be very much appreciated. I've checked the hardware level multiple times; there is nothing capping it at 80 Mb/s. The NICs are from Realtek, but I seriously doubt that this is the origin of the issue (the NIC is a Realtek RTL8111H). Keep in mind that my whole local network suffers from this "upload cap"; it's neither an issue on the side of my PC nor of the room connectivity. This issue lies in some part of the homeserver setup. @Gertjan @Gblenn @netblues

-

@Gertjan Here is what PfSense shows me related to the interfaces it uses:

I did an iperf run from my PC and then in reversed mode to show you (in graph) that this issue also occurs for my pfSense

So this should be either a Proxmox thing or an uncanny hardware issue I'm not aware of? I'm seriously unsure... Yes, this issue isn't about pfSense anymore, but please, any expertise would help me out a lot. Thanks in advance!

-

@pleaseHELP said in datatransfer rate not as high as it should be:

I've noticed that sending data to that TrueNAS is always capped at 80 Mb/s, but getting data from it runs on about 1Gbit/s, as it also should for upload.

Here you are mixing up Mb/s and MB/s... In your very first post you show your iperf results at 662 Mbits/s which is just under 83 MB/s... and quite a bit better than 80 megabit per second.

However, if I'm reading your information correctly, it's always when sending, i.e. from your PC, that you get the lower result. Pfsense and TrueNAS have no trouble sending at full 1Gbps speed to your PC on the other hand.

Now, comparing iperf with file uploads and downloads from TrueNAS is not really relevant. There are other things going on there, like RAM buffer sizes and disk speeds. But still, I think this is actually indicative of something in your PC not being up to par.

Have you tried running the command like iperf3 -c xxx.xxx.xxx.xx -P 4 or 6 ?

-P 4 will run 4 parallel streams which distributes the load across the cores in your PC. If you get better and more symmetrical results, that is a clear sign that it's your own PC that is the limiting factor here. Perhaps your CPU clock in the Proxmox machine is a bit higher than the base clock in your PC? Since pfsense can cope on only one core...

-

-P 4 will run 4 parallel streams which distributes the load across the cores in your PC. If you get better and more symmetrical results, that is a clear sign that it's your own PC that is the limiting factor here. Perhaps your CPU clock in the Proxmox machine is a bit higher than the base clock in your PC? Since pfsense can cope on only one core...

    [ ID] Interval           Transfer     Bandwidth
    [  4]   0.00-1.00   sec  13.2 MBytes   111 Mbits/sec
    [  6]   0.00-1.00   sec  13.2 MBytes   110 Mbits/sec
    [  8]   0.00-1.00   sec  13.2 MBytes   110 Mbits/sec
    [ 10]   0.00-1.00   sec  13.1 MBytes   110 Mbits/sec
    [ 12]   0.00-1.00   sec  13.0 MBytes   109 Mbits/sec
    [ 14]   0.00-1.00   sec  13.0 MBytes   109 Mbits/sec
    [SUM]   0.00-1.00   sec  78.6 MBytes   660 Mbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  4]   1.00-2.00   sec  13.2 MBytes   111 Mbits/sec
    [  6]   1.00-2.00   sec  13.2 MBytes   111 Mbits/sec
    [  8]   1.00-2.00   sec  13.2 MBytes   111 Mbits/sec
    [ 10]   1.00-2.00   sec  13.3 MBytes   111 Mbits/sec
    [ 12]   1.00-2.00   sec  13.3 MBytes   111 Mbits/sec
    [ 14]   1.00-2.00   sec  13.3 MBytes   111 Mbits/sec
    [SUM]   1.00-2.00   sec  79.5 MBytes   667 Mbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  4]   2.00-3.00   sec  13.2 MBytes   111 Mbits/sec
    [  6]   2.00-3.00   sec  13.3 MBytes   111 Mbits/sec
    [  8]   2.00-3.00   sec  13.3 MBytes   111 Mbits/sec
    [ 10]   2.00-3.00   sec  13.2 MBytes   111 Mbits/sec
    [ 12]   2.00-3.00   sec  13.2 MBytes   111 Mbits/sec
    [ 14]   2.00-3.00   sec  13.2 MBytes   110 Mbits/sec
    [SUM]   2.00-3.00   sec  79.4 MBytes   666 Mbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  4]   3.00-4.00   sec  13.3 MBytes   111 Mbits/sec
    [  6]   3.00-4.00   sec  13.2 MBytes   111 Mbits/sec
    [  8]   3.00-4.00   sec  13.2 MBytes   110 Mbits/sec
    [ 10]   3.00-4.00   sec  13.2 MBytes   111 Mbits/sec
    [ 12]   3.00-4.00   sec  13.2 MBytes   111 Mbits/sec
    [ 14]   3.00-4.00   sec  13.3 MBytes   111 Mbits/sec
    [SUM]   3.00-4.00   sec  79.4 MBytes   666 Mbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  4]   4.00-5.00   sec  13.2 MBytes   111 Mbits/sec
    [  6]   4.00-5.00   sec  13.2 MBytes   111 Mbits/sec
    [  8]   4.00-5.00   sec  13.3 MBytes   112 Mbits/sec
    [ 10]   4.00-5.00   sec  13.3 MBytes   112 Mbits/sec
    [ 12]   4.00-5.00   sec  13.2 MBytes   111 Mbits/sec
    [ 14]   4.00-5.00   sec  13.2 MBytes   111 Mbits/sec
    [SUM]   4.00-5.00   sec  79.4 MBytes   666 Mbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  4]   5.00-6.00   sec  13.3 MBytes   111 Mbits/sec
    [  6]   5.00-6.00   sec  13.3 MBytes   111 Mbits/sec
    [  8]   5.00-6.00   sec  13.2 MBytes   110 Mbits/sec
    [ 10]   5.00-6.00   sec  13.2 MBytes   110 Mbits/sec
    [ 12]   5.00-6.00   sec  13.2 MBytes   111 Mbits/sec
    [ 14]   5.00-6.00   sec  13.3 MBytes   111 Mbits/sec
    [SUM]   5.00-6.00   sec  79.4 MBytes   666 Mbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  4]   6.00-7.00   sec  13.2 MBytes   111 Mbits/sec
    [  6]   6.00-7.00   sec  13.2 MBytes   111 Mbits/sec
    [  8]   6.00-7.00   sec  13.3 MBytes   112 Mbits/sec
    [ 10]   6.00-7.00   sec  13.3 MBytes   112 Mbits/sec
    [ 12]   6.00-7.00   sec  13.2 MBytes   111 Mbits/sec
    [ 14]   6.00-7.00   sec  13.2 MBytes   111 Mbits/sec
    [SUM]   6.00-7.00   sec  79.4 MBytes   666 Mbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  4]   7.00-8.00   sec  13.2 MBytes   111 Mbits/sec
    [  6]   7.00-8.00   sec  13.3 MBytes   111 Mbits/sec
    [  8]   7.00-8.00   sec  13.2 MBytes   111 Mbits/sec
    [ 10]   7.00-8.00   sec  13.2 MBytes   110 Mbits/sec
    [ 12]   7.00-8.00   sec  13.2 MBytes   111 Mbits/sec
    [ 14]   7.00-8.00   sec  13.3 MBytes   111 Mbits/sec
    [SUM]   7.00-8.00   sec  79.4 MBytes   666 Mbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  4]   8.00-9.00   sec  13.2 MBytes   111 Mbits/sec
    [  6]   8.00-9.00   sec  13.2 MBytes   110 Mbits/sec
    [  8]   8.00-9.00   sec  13.2 MBytes   111 Mbits/sec
    [ 10]   8.00-9.00   sec  13.3 MBytes   111 Mbits/sec
    [ 12]   8.00-9.00   sec  13.3 MBytes   111 Mbits/sec
    [ 14]   8.00-9.00   sec  13.2 MBytes   111 Mbits/sec
    [SUM]   8.00-9.00   sec  79.4 MBytes   666 Mbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  4]   9.00-10.00  sec  13.2 MBytes   111 Mbits/sec
    [  6]   9.00-10.00  sec  13.3 MBytes   112 Mbits/sec
    [  8]   9.00-10.00  sec  13.3 MBytes   112 Mbits/sec
    [ 10]   9.00-10.00  sec  13.2 MBytes   110 Mbits/sec
    [ 12]   9.00-10.00  sec  13.2 MBytes   110 Mbits/sec
    [ 14]   9.00-10.00  sec  13.2 MBytes   111 Mbits/sec
    [SUM]   9.00-10.00  sec  79.4 MBytes   666 Mbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bandwidth
    [  4]   0.00-10.00  sec   132 MBytes   111 Mbits/sec                  sender
    [  4]   0.00-10.00  sec   132 MBytes   111 Mbits/sec                  receiver
    [  6]   0.00-10.00  sec   132 MBytes   111 Mbits/sec                  sender
    [  6]   0.00-10.00  sec   132 MBytes   111 Mbits/sec                  receiver
    [  8]   0.00-10.00  sec   132 MBytes   111 Mbits/sec                  sender
    [  8]   0.00-10.00  sec   132 MBytes   111 Mbits/sec                  receiver
    [ 10]   0.00-10.00  sec   132 MBytes   111 Mbits/sec                  sender
    [ 10]   0.00-10.00  sec   132 MBytes   111 Mbits/sec                  receiver
    [ 12]   0.00-10.00  sec   132 MBytes   111 Mbits/sec                  sender
    [ 12]   0.00-10.00  sec   132 MBytes   111 Mbits/sec                  receiver
    [ 14]   0.00-10.00  sec   132 MBytes   111 Mbits/sec                  sender
    [ 14]   0.00-10.00  sec   132 MBytes   111 Mbits/sec                  receiver
    [SUM]   0.00-10.00  sec   793 MBytes   666 Mbits/sec                  sender
    [SUM]   0.00-10.00  sec   793 MBytes   665 Mbits/sec                  receiver

Doesn't really seem like it... the SUM is the same as running it on only one stream.
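Comparing a `-P` run against a single-stream run by eye gets tedious, so here is a small sketch that pulls the per-interval rates out of iperf3's plain-text output. The regular expression and the function name are my own and only cover the line layout shown in this thread. Also worth keeping in mind: as far as I know, iperf3 releases before 3.16 service all streams from a single thread, so on such builds `-P` may not actually spread the load across cores.

```python
import re

# Matches interval lines such as
# "[SUM]   0.00-1.00   sec  78.6 MBytes   660 Mbits/sec"
LINE = re.compile(
    r"\[\s*(?P<id>SUM|\d+)\]\s+(?P<start>[\d.]+)-(?P<end>[\d.]+)\s+sec\s+"
    r"[\d.]+\s+\w+\s+(?P<rate>[\d.]+)\s+(?P<unit>[KMG])bits/sec"
)
SCALE = {"K": 1e-3, "M": 1.0, "G": 1e3}  # factors to convert to Mbit/s

def rates_mbit(text, stream_id):
    """Return the Mbit/s value of every line belonging to `stream_id`."""
    return [
        float(m["rate"]) * SCALE[m["unit"]]
        for m in LINE.finditer(text)
        if m["id"] == stream_id
    ]

# A few lines copied from the 6-stream run above.
sample = """
[  4]   0.00-1.00   sec  13.2 MBytes   111 Mbits/sec
[  6]   0.00-1.00   sec  13.2 MBytes   110 Mbits/sec
[SUM]   0.00-1.00   sec  78.6 MBytes   660 Mbits/sec
[SUM]   1.00-2.00   sec  79.5 MBytes   667 Mbits/sec
"""
sums = rates_mbit(sample, "SUM")
print(f"aggregate: {sum(sums) / len(sums):.1f} Mbit/s over {len(sums)} intervals")
```

If the `[SUM]` average of the parallel run lands on the same figure as the single-stream run, as it does here at around 660 Mbit/s, adding streams did not lift the limit.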

-

Here you are mixing up Mb/s and MB/s... In your very first post you show your iperf results at 662 Mbits/s which is just under 83 MB/s... and quite a bit better than 80 megabit per second.

When I write MB/s (or Mb/s) I mean Megabytes per second. When I write Mbit/s (or MBit/s) I mean Megabits per second.

Sending data from my PC is always capped at 80 MB/s. I don't think this has to do with any RAM or hard disk bottleneck. It's all DDR4 3200 MHz and NVMe SSDs with very high read and write speeds.

-

[...] But still, I think this is actually indicative of something in your PC not up to par.

This is one of the possibilities. As far as I know, devices on the same LAN don't necessarily have to communicate through the router, but can do so on their own through the switch. I made sure to buy a switch that is capable of 1 Gbit/s, and the upload transfer rate to my PC also shows that the switch is indeed capable of such transfer rates. Still, I'm facing issues when data is sent to my devices. So this isn't an issue that really has to do with my homeserver (or its Realtek NIC), but rather either the switch goofing around or my devices not being "up to par", as you say? I'll run tests from other rooms with devices other than my PC again and let you know the results. If you have something else to share, ANYTHING, I'd be happy to know :) Thanks in advance.

-

@pleaseHELP if your iperf is showing you 660mbps - you understand that converts to say 82.5MBps - bits per sec / 8 = Bytes per sec
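Since the bit/byte units caused a fair amount of confusion in this thread, here is the conversion as a tiny sketch. The function names are just illustrative. One detail that can be checked against the output posted above: iperf3 reports its "MBytes" column in units of 2^20 bytes, which is why 662 Mbits/sec shows up next to roughly 78.9 MBytes per second rather than 82.75.

```python
# Convert an iperf3 bitrate (megabits per second) to byte-based rates.

def mbit_to_mbyte(mbit_per_sec):
    """Megabits/s -> megabytes/s (10^6 bytes): divide by 8."""
    return mbit_per_sec / 8

def mbit_to_mibyte(mbit_per_sec):
    """Megabits/s -> mebibytes/s (2^20 bytes), which iperf3 prints as 'MBytes'."""
    return mbit_per_sec * 1_000_000 / 8 / 2**20

for rate in (660, 662, 949):
    print(f"{rate} Mbit/s = {mbit_to_mbyte(rate):.1f} MB/s "
          f"= {mbit_to_mibyte(rate):.1f} MiB/s")
```

So "80 MB/s" and "about 660 Mbit/s" describe the same cap, seen through different units.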

So you're seeing wirespeed - but your wirespeed is not gig.. Gig wirespeed should be high 800s to mid 900s..

As you saw in my test..

If this a vm running on some host - then yeah that could have some throttling.

Look to your drivers, etc.. NIC settings.. But iperf should be seeing mid 900s if you want to see max file transfer speeds.

Testing to or from pfsense - is not a good test..

So iperf normal test, the client is sending.. with -R the server is sending..

This is my NAS sending to my client with -R over a gig connection.

    $ iperf3.exe -c 192.168.9.10 -R
    Connecting to host 192.168.9.10, port 5201
    Reverse mode, remote host 192.168.9.10 is sending
    [  5] local 192.168.9.100 port 44737 connected to 192.168.9.10 port 5201
    [ ID] Interval           Transfer     Bitrate
    [  5]   0.00-1.01   sec   114 MBytes   948 Mbits/sec
    [  5]   1.01-2.00   sec   112 MBytes   949 Mbits/sec
    [  5]   2.00-3.01   sec   114 MBytes   949 Mbits/sec
    [  5]   3.01-4.00   sec   112 MBytes   950 Mbits/sec
    [  5]   4.00-5.00   sec   113 MBytes   949 Mbits/sec
    [  5]   5.00-6.01   sec   114 MBytes   949 Mbits/sec
    [  5]   6.01-7.01   sec   114 MBytes   949 Mbits/sec
    [  5]   7.01-8.01   sec   113 MBytes   949 Mbits/sec
    [  5]   8.01-9.00   sec   112 MBytes   949 Mbits/sec
    [  5]   9.00-10.01  sec   114 MBytes   950 Mbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bitrate         Retr
    [  5]   0.00-10.01  sec  1.11 GBytes   951 Mbits/sec    0             sender
    [  5]   0.00-10.01  sec  1.11 GBytes   949 Mbits/sec                  receiver

You are not going to see over 80MBps ish if all you're seeing is 660 mbps with iperf.

Do you not have 2 physical devices you can use to test with? Can you test talking to your VM host's IP vs some VM running on the host? The very act of bridging the virtual machine NIC to the physical NIC would have some performance hit.. It shouldn't be a 300mbps sort of hit, but it will be a hit..

But your iperf test speed corresponds pretty exactly to what you would see in MBps units, be it you're writing to a disk or just graphing it.

Here is an iperf test to my NAS on a 5ge connection

    $ iperf3.exe -c 192.168.10.10
    Connecting to host 192.168.10.10, port 5201
    [  5] local 192.168.10.9 port 44883 connected to 192.168.10.10 port 5201
    [ ID] Interval           Transfer     Bitrate
    [  5]   0.00-1.00   sec   413 MBytes  3.45 Gbits/sec
    [  5]   1.00-2.00   sec   409 MBytes  3.43 Gbits/sec
    [  5]   2.00-3.01   sec   410 MBytes  3.43 Gbits/sec
    [  5]   3.01-4.01   sec   409 MBytes  3.43 Gbits/sec
    [  5]   4.01-5.01   sec   409 MBytes  3.43 Gbits/sec
    [  5]   5.01-6.00   sec   407 MBytes  3.43 Gbits/sec
    [  5]   6.00-7.01   sec   414 MBytes  3.43 Gbits/sec
    [  5]   7.01-8.00   sec   403 MBytes  3.43 Gbits/sec
    [  5]   8.00-9.01   sec   414 MBytes  3.43 Gbits/sec
    [  5]   9.01-10.01  sec   408 MBytes  3.43 Gbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bitrate
    [  5]   0.00-10.01  sec  4.00 GBytes  3.43 Gbits/sec                  sender
    [  5]   0.00-10.02  sec  4.00 GBytes  3.43 Gbits/sec                  receiver

    iperf Done.

It's low for a 5ge connection, but my NAS only has a USB 3.0 connection for the USB NIC.. And this seems to be the best I can get..

-

TLDR: I found the origin of this issue: it comes from my own PC (Windows configuration). Some OS configuration limits the incoming data transfer rate. Currently, I don't know how to remove this limiter or where to even find it. I've reset my entire network settings and the issue still exists. If someone knows about Windows configuration related to network data transfer rate limits, I'd appreciate your help/knowledge very much. If you want to understand my thought process, you can read on:

@johnpoz thanks for your detailed reply. To clear up the remaining questions, I've done the following test: since I only want to examine why the data transfer rate for incoming data in my LAN is not optimal, I ran iperf on both my PC and another wired device in a different room. The results are about identical to when I run iperf from TrueNAS, pfSense or whatever... Incoming data isn't being handled properly, but outgoing data sure is:

Data coming to my PC from the other device:

    [ ID] Interval           Transfer     Bandwidth
    [  4]   0.00-1.00   sec  76.2 MBytes   639 Mbits/sec
    [  4]   1.00-2.00   sec  79.2 MBytes   664 Mbits/sec
    [  4]   2.00-3.00   sec  77.3 MBytes   648 Mbits/sec
    [  4]   3.00-4.00   sec  79.7 MBytes   669 Mbits/sec
    [  4]   4.00-5.00   sec  75.1 MBytes   630 Mbits/sec
    [  4]   5.00-6.00   sec  79.8 MBytes   669 Mbits/sec
    [  4]   6.00-7.00   sec  78.1 MBytes   655 Mbits/sec
    [  4]   7.00-8.00   sec  78.6 MBytes   659 Mbits/sec
    [  4]   8.00-9.00   sec  78.5 MBytes   659 Mbits/sec
    [  4]   9.00-10.00  sec  78.1 MBytes   655 Mbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bandwidth
    [  4]   0.00-10.00  sec   781 MBytes   655 Mbits/sec                  sender
    [  4]   0.00-10.00  sec   781 MBytes   655 Mbits/sec                  receiver

Data from my PC to the other device:

    [ ID] Interval           Transfer     Bandwidth
    [  4]   0.00-1.00   sec   110 MBytes   920 Mbits/sec
    [  4]   1.00-2.00   sec   111 MBytes   930 Mbits/sec
    [  4]   2.00-3.00   sec   109 MBytes   913 Mbits/sec
    [  4]   3.00-4.00   sec   107 MBytes   894 Mbits/sec
    [  4]   4.00-5.00   sec   106 MBytes   890 Mbits/sec
    [  4]   5.00-6.00   sec   110 MBytes   920 Mbits/sec
    [  4]   6.00-7.00   sec   108 MBytes   910 Mbits/sec
    [  4]   7.00-8.00   sec   106 MBytes   891 Mbits/sec
    [  4]   8.00-9.00   sec   106 MBytes   888 Mbits/sec
    [  4]   9.00-10.00  sec   109 MBytes   913 Mbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bandwidth
    [  4]   0.00-10.00  sec  1.06 GBytes   907 Mbits/sec                  sender
    [  4]   0.00-10.00  sec  1.06 GBytes   907 Mbits/sec                  receiver

Now... since we all know by now: this isn't an issue with my homeserver, and so not one with pfSense either. This issue simply occurs in my bare LAN, meaning I can narrow its origin down to the OS (Windows), which is making some unreasonable decisions when it comes to receiving data (no other possible origins come to mind).

I'm just trying to make heads or tails of this, yet my suggestion also raises a question that I was able to answer with another test. The test, which involves two devices other than my server, runs on two Windows machines. If my suggestion were true (assuming both OSes are configured so that they react the same way to incoming data), then BOTH network connections would have to be throttled, since there is ALWAYS one device receiving and the other one sending. Spoiler: they weren't the same. The other device had a normal 1 Gbit/s data transfer rate to an Ubuntu Server on my homeserver, which proves that this issue occurs in my PC's Windows configuration. I tested the connection with different cables to exclude the possibility that I'm just using a bad cable, so the only reasonable source has to be some janked-up OS config.

-

@pleaseHELP what iperf did you run? Which machine was the server, where you ran -s, and which was the client, where you ran -c ipofiperfserver?

Without the -R the client will send data to the server, with -R the server in your iperf test will send to the client..
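Because the direction question keeps coming up, here is the rule above written out as a trivial sketch. The host names are placeholders and the helper is made up for illustration; it only models which side transmits, it does not run iperf3.

```python
# Which side transmits the test data in an iperf3 TCP run.
# The host names below are placeholders, not taken from the thread.

def data_flow(client, server, reverse=False):
    """Return (sender, receiver) for `iperf3 -c <server>` run on `client`.

    reverse=True models the -R flag, where the server transmits instead.
    """
    return (server, client) if reverse else (client, server)

for reverse in (False, True):
    sender, receiver = data_flow("my-pc", "homeserver", reverse)
    flag = " -R" if reverse else ""
    print(f"iperf3 -c homeserver{flag}: {sender} -> {receiver}")
```

In other words, a slow result without `-R` points at the path where the machine running `-c` is the sender.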

Couple of things that could be causing the issue in driver settings.. But really need to be sure if your windows PC is having problem sending the data, or when other devices are sending to it.

but yeah that latest iperf with high 800's low 900s is more typical for sure of what you should see over a gig wired connection.

What settings does your interface present? Also I would double check your windows settings in netsh.

    $ netsh Interface tcp show global
    Querying active state...

    TCP Global Parameters
    ----------------------------------------------
    Receive-Side Scaling State          : enabled
    Receive Window Auto-Tuning Level    : normal
    Add-On Congestion Control Provider  : default
    ECN Capability                      : enabled
    RFC 1323 Timestamps                 : disabled
    Initial RTO                         : 1000
    Receive Segment Coalescing State    : enabled
    Non Sack Rtt Resiliency             : disabled
    Max SYN Retransmissions             : 4
    Fast Open                           : enabled
    Fast Open Fallback                  : enabled
    HyStart                             : enabled
    Proportional Rate Reduction         : enabled
    Pacing Profile                      : off

I have seen interrupt moderation cause problems

-

@johnpoz said in datatransfer rate not as high as it should be:

@pleaseHELP what iperf did you run what was server where you ran -s and client when you ran -c ipofiperfserver

I ran all tests with iperf3. In all of my tests I used the -R parameter to switch the roles of server and client.

Couple of things that could be causing the issue in driver settings.. But really need to be sure if your windows PC is having problem sending the data, or when other devices are sending to it.

I'm sorry if I haven't been clear enough: the issue on my PC only occurs when it is the client (e.g. in iperf3). I don't know whether the client in iperf sends data to the server or receives it. You'd have to answer that for me before we can proceed with further Windows configuration settings, so that I also get the grasp of it. When I switch the roles (PC as server) with the -R parameter, everything runs at 1 Gbit/s standards.

What settings does your interface present? Also I would double check your windows settings in netsh.

    netsh Interface tcp show global
    Querying active state...

    TCP Global Parameters
    ----------------------------------------------
    Receive-Side Scaling State          : enabled
    Receive Window Auto-Tuning Level    : normal
    Add-On Congestion Control Provider  : default
    ECN Capability                      : disabled
    RFC 1323 Timestamps                 : disabled
    Initial RTO                         : 1000
    Receive Segment Coalescing State    : enabled
    Non Sack Rtt Resiliency             : disabled
    Max SYN Retransmissions             : 4
    Fast Open                           : enabled
    Fast Open Fallback                  : enabled
    HyStart                             : enabled
    Proportional Rate Reduction         : enabled
    Pacing Profile                      : off

Now I don't have a single clue what all these settings do, the only one that is different is:

- ECN Capability disabled

rather than enabled.
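Spotting the one differing line between two such listings by eye is error-prone, so here is a small sketch that compares two "name : value" dumps. It only assumes the line layout shown in the two outputs above; the function names are made up, and the two sample strings are shortened excerpts rather than full captures.

```python
# Compare two "Name : value" listings, e.g. the output of
# `netsh interface tcp show global` captured on two machines.

def parse_settings(text):
    """Parse 'Name : value' lines into a dict, skipping everything else."""
    settings = {}
    for line in text.splitlines():
        name, sep, value = line.partition(" : ")
        if sep:  # only lines that actually contain the separator
            settings[name.strip()] = value.strip()
    return settings

def diff_settings(a, b):
    """Return {name: (value_in_a, value_in_b)} for settings that differ."""
    return {k: (a.get(k), b.get(k))
            for k in sorted(a.keys() | b.keys())
            if a.get(k) != b.get(k)}

reference = """
Receive-Side Scaling State          : enabled
ECN Capability                      : enabled
"""
mine = """
Receive-Side Scaling State          : enabled
ECN Capability                      : disabled
"""
print(diff_settings(parse_settings(reference), parse_settings(mine)))
```

A setting that exists in only one listing shows up with `None` on the other side.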

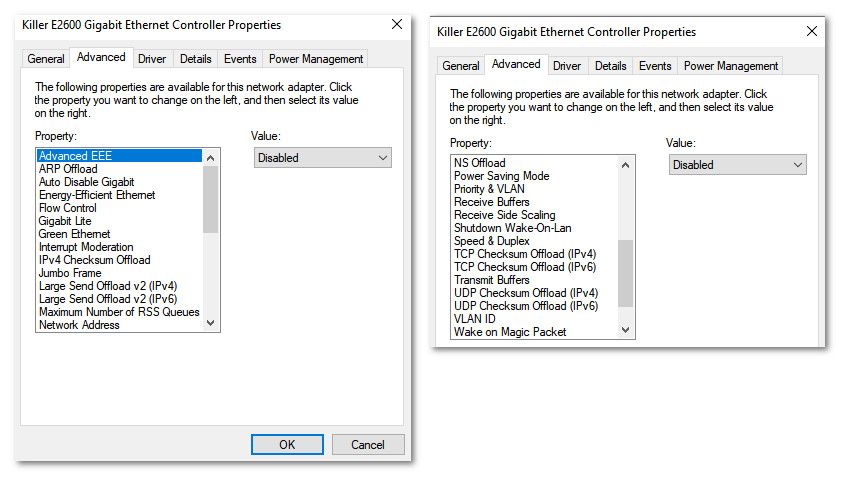

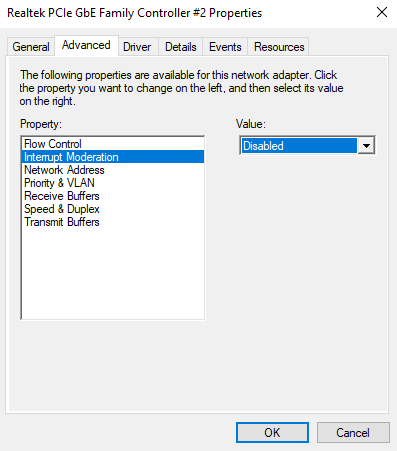

I've now disabled "interrupt moderation":

and also set "Speed & Duplex" from Auto Negotiation to 1.0 Gbps Full Duplex.

It didn't help.

-

@pleaseHELP wow that is one basic driver if that is all the settings you have for it.

ECN would really not come into play.. The big one would be receive-side scaling, which could lower performance if not on. And the autotuning.

Do you have any security/antivirus software running? Those have been known to take a hit on network performance.

BTW - you should really never hard code gig - its not something you should do, if auto does not neg gig - then something is wrong.. And hard coding not really a fix for what is wrong.

Which direction are you seeing the 600mbps vs 900.. Is that this pc sending to something, or the other something sending to the PC..

Is that a usb nic or nic that came from maker of pc, one you added? My bet currently would be if its the pc trying to send data somewhere that you have some security/antivirus software causing you issues.

-

@pleaseHELP said in datatransfer rate not as high as it should be:

When I write MB/s (or Mb/s) I mean Megabytes per second.

Ok but if you want others to also understand what you mean, it's better to follow standards. B means Bytes and b means bits, so 1 MB/s = 8 Mb/s.

I found the origin of this issue: It comes from my own PC (Windows configuration). Some OS configuration limits the incoming datatransfer rate.

Everything you wrote and talked about earlier indicates it is when the PC is sending not receiving data that you have the 660 ish limitation. And you repeat that just now when you say:

When I switch the roles (PC as server) with the -R parameter, then everything is running on 1Gbit/s standarts.

Although, I can see how it can be confusing unless you are 100% certain which direction the traffic is actually flowing.

When you run the iperf command with the -c parameter, it is the client, and as default it is the sender. When you add the -R parameter, it is still the client, but it is receiving.

Anyway, it seems it was like I suggested, that it is your PC that is not working right. So at least you know where to "dig in"... I doubt that an AV program would be the limiting factor, especially as it's when sending. But also because it's too consistent at 660... Simple enough to test though, just turn it off when testing...

But I think you need to look deeper into your NIC and the settings. What NIC is it that you have in your PC?

And as @johnpoz was saying, the driver settings look very basic. Have you ever updated the driver, or checked that it is the correct/best one?