Another Netgate with storage failure, 6 in total so far

-

    gpart list
    Geom name: mmcsd0
    ...
    Geom name: da0
    ...
    Geom name: mmcsd0s3
    modified: false
    state: OK
    fwheads: 255
    fwsectors: 63
    last: 14732926
    first: 0
    entries: 8
    scheme: BSD
    Providers:
    1. Name: mmcsd0s3a
       Mediasize: 7543250432 (7.0G)
       Sectorsize: 512
       Stripesize: 512
       Stripeoffset: 0
       Mode: r0w0e0
       rawtype: 27
       length: 7543250432
       offset: 8192
       type: freebsd-zfs
       index: 1
       end: 14732926
       start: 16
    Consumers:
    1. Name: mmcsd0s3
       Mediasize: 7543258624 (7.0G)
       Sectorsize: 512
       Stripesize: 512
       Stripeoffset: 0
       Mode: r0w0e0

Did not bother with gmirror destroy because gmirror status was empty.

    zpool labelclear -f /dev/mmcsd0s3
    failed to clear label for /dev/mmcsd0s3
    zpool labelclear -f /dev/mmcsd0s3a
    failed to clear label for /dev/mmcsd0s3a

    zpool status -P
      pool: pfSense
     state: ONLINE
    status: Some supported and requested features are not enabled on the pool.
            The pool can still be used, but some features are unavailable.
    action: Enable all features using 'zpool upgrade'. Once this is done,
            the pool may no longer be accessible by software that does not support
            the features. See zpool-features(7) for details.
    config:

            NAME          STATE     READ WRITE CKSUM
            pfSense       ONLINE       0     0     0
              /dev/da0p3  ONLINE       0     0     0

    errors: No known data errors

    zpool list -v
    NAME        SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP  HEALTH  ALTROOT
    pfSense     119G  1.29G   118G        -         -     0%     1%  1.00x  ONLINE  -
      da0p3     119G  1.29G   118G        -         -     0%  1.08%      -  ONLINE

    gpart destroy -F mmcsd0
    mmcsd0 destroyed

gpart list now only shows Geom name: da0, nothing for the MMC.

    dd if=/dev/zero of=/dev/mmcsd0 bs=1M count=1 status=progress
    1+0 records in
    1+0 records out
    1048576 bytes transferred in 0.048666 secs (21546430 bytes/sec)

For science...

    dd if=/dev/zero of=/dev/mmcsd0 bs=1M status=progress
    dd: /dev/mmcsd0: short write on character device
    dd: /dev/mmcsd0: end of device
    7458+0 records in
    7457+1 records out
    7820083200 bytes transferred in 299.528401 secs (26107986 bytes/sec)

Thanks for the help!

-

Well that should certainly have wiped it. Does it boot from USB as expected?

-

@stephenw10 Have not rebooted yet. I'll be back if there's a problem ;)

-

Background

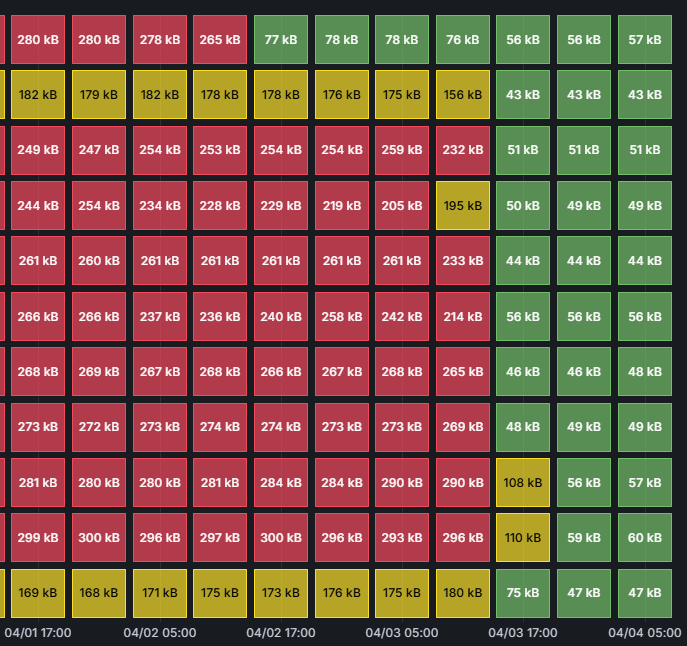

Across a fleet of 45 devices, the ones using UFS have a steady 40-50KB/s write rate, while all devices using ZFS have a steady 250-280KB/s write rate.

I have been trying without success to find a package or GUI option that would reduce the data write rate.

I can definitively state that there is nothing in our configuration that is causing the high, continuous write rate.

The only difference between low and high write rates is UFS vs ZFS.

Changing the ZFS Sync Interval

On ZFS firewalls with high wear, I ran the command

    sysctl vfs.zfs.txg.timeout=60

The default value is 5. I have not yet experimented with values other than 60, or with any other ZFS settings.

Results of the ZFS Change

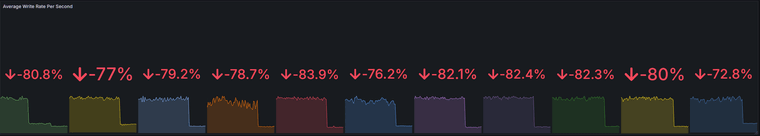

The result was an immediate 73-84% decrease in write rate across all devices.

Per-device reductions: -80.8%, -77%, -79.2%, -78.7%, -83.9%, -76.2%, -82.1%, -82.4%, -82.3%, -80%, -72.8%

Write rates dropped from 230-280KB/s to 44-60KB/s.

Calculating the eMMC lifecycle

Kingston (and possibly all other manufacturers) calculates eMMC TBW as follows:

The formula for determining Total Bytes Written, or TBW, is straightforward:

(Device Capacity * Endurance Factor) / WAF = TBW

Often, WAF is between 4 and 8, but it depends on the host system write behavior. For example, large sequential writes produce a lower WAF, while random writes of small data blocks produce a higher WAF. This kind of behavior can often lead to early failure of storage devices.

For example, a 4GB eMMC with an endurance factor of 3000 and a WAF of 8 will equate to:

(4GB * 3000) / 8 = 1.5TB before EoL

The Total Bytes Written of the eMMC device is 1.5TB. Therefore, we can write 1.5TB of data over the lifecycle of the product before reaching its EoL state.
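The formula is simple enough to check in a few lines. This is a sketch assuming capacity is given in GB and decimal units (1 TB = 1000 GB); the function name is illustrative. It reproduces the 4GB example above and the 16GB case that follows:

```python
def tbw_gb(capacity_gb: float, endurance_factor: float, waf: float) -> float:
    """Total Bytes Written budget in GB: (Device Capacity * Endurance Factor) / WAF."""
    if waf <= 0:
        raise ValueError("WAF must be positive")
    return capacity_gb * endurance_factor / waf


if __name__ == "__main__":
    # 4GB eMMC, endurance factor 3000, WAF 8
    print(f"4GB:  {tbw_gb(4, 3000, 8) / 1000:.1f} TB")
    # 16GB eMMC, endurance factor 3000, generously low WAF of 2
    print(f"16GB: {tbw_gb(16, 3000, 2) / 1000:.1f} TB")
```

Because WAF is in the denominator, halving it doubles the budget, which is why the small-random-write pattern described above matters so much.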

Calculating for a 16GB chip with a generously low WAF of 2 gives us:

(16GB * 3000) / 2 = 24TB before EoL

Calculating expected storage lifespans for a 16GB eMMC with a WAF of 2 and average write rates from 50 to 250KB/s gives us the following:

- 250KB/s = 596 days (1.6 years)
- 150KB/s = 994 days (2.7 years)
- 100KB/s = 1491 days (4.1 years)
- 50KB/s = 2982 days (8.2 years)

To summarize:

- The default ZFS settings used by pfSense cause a significantly higher write rate compared to UFS, even for light loads with minimal logging.

- It is true that all flash wears, but the combination of 8/16GB eMMC and default ZFS settings in pfSense results in a high probability of dying in under 2 years, and practically guarantees failure within 3 years.

- In some cases, when eMMC fails, it will prevent the Netgate hardware from even powering on, rendering the device completely dead. Physically de-soldering the eMMC chip is the only solution when this happens.

- Changing vfs.zfs.txg.timeout to 60 reduces the average write rate back down to UFS levels, although with unknown risks.

- As of this posting, pfSense has no default monitoring of eMMC health or storage write rate, leading to sudden, unexpected failures of Netgate devices.

- Avoiding packages will not solve the problem, nor will overzealously disabling logging.

- As of this posting, there are no warnings in the product pages, documentation, or GUI regarding issues with small storage devices or eMMC storage.

- Changing the ZFS sync interval is sometimes recommended as a solution for reducing storage writes, but it is not documented and there is no information on the risks of data loss or corruption that can result from changing the default setting.

- Many device failures are incorrectly attributed to "user error" when the real issue is a critical flaw in the default ZFS settings used by pfSense.

- The true scope of this issue is unknown since many failures occur after the 1-year warranty and so they are either not accepted as RMA claims by Netgate or are not reported at all. Due to this, Netgate's RMA stats for eMMC failure will be significantly lower than the true number.
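The lifespan figures earlier in this post can be rechecked with a little arithmetic. In this sketch the rates are assumed to be KiB/s and the write budget is given in bytes; the constant and function names are illustrative. Recomputed this way, the listed day counts (596, 994, 1491 and 2982) come out exactly for a 12 TiB budget, which is half of the 24TB derived above, while a 24 TiB budget roughly doubles each lifespan. The post does not say which budget was intended, so treat the figures as indicative:

```python
SECONDS_PER_DAY = 86_400
KIB = 1024        # bytes per KiB
TIB = 1024 ** 4   # bytes per TiB


def lifespan_days(budget_bytes: int, rate_kib_s: float) -> int:
    """Whole days until budget_bytes is consumed at a constant average write rate."""
    bytes_per_day = rate_kib_s * KIB * SECONDS_PER_DAY
    return int(budget_bytes // bytes_per_day)


if __name__ == "__main__":
    for rate in (250, 150, 100, 50):
        d12 = lifespan_days(12 * TIB, rate)
        d24 = lifespan_days(24 * TIB, rate)
        print(f"{rate:>3} KiB/s: {d12:>5} days (12 TiB), {d24:>5} days (24 TiB)")
```

The relationship is simply inverse: halving the average write rate doubles the lifespan for any fixed budget.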

Request for Additional Data

Please share if you have any data on your average disk write rate or the effects of changing the vfs.zfs.txg.timeout.

I am interested to see how my data compares with a larger sample size.

-

@jared-silva I am glad that myself and @stephenw10 were able to help!

-

@andrew_cb This is a real contribution to the project, even for those of us running CE on white boxes. This entire discussion has personally called my attention to a number of configuration improvements and tweaks I probably wouldn't have considered or realized otherwise. So thank you—seriously.

(And thank you to Netgate for even openly participating in the discussion. Seriously.)

Can I just ask about your methodology for measuring average system write? Is there some built-in FreeBSD tool? Something obvious in the GUI I've overlooked? Did you script something? Wondering if it's something I could incorporate into my own run-of-the-mill system monitoring.

-

@tinfoilmatt said in Another Netgate with storage failure, 6 in total so far:

Can I just ask about your methodology for measuring average system write? Is there some built-in FreeBSD tool? Something obvious in the GUI I've overlooked? Did you script something? Wondering if it's something I could incorporate into my own run-of-the-mill system monitoring.

Nothing in the GUI, but on the console "iostat" (see its documentation for parameters) is a good approach.

Have a nice weekend,

fireodo

-

@andrew_cb said in Another Netgate with storage failure, 6 in total so far:

I have not yet experimented with values other than 60 or any other ZFS settings.

But I have (going as far as an extreme of 1800), and the writes are significantly reduced, but the risk of trouble after a sudden power loss is also higher ...

-

@fireodo said in Another Netgate with storage failure, 6 in total so far:

But I have (going as far as an extreme of 1800), and the writes are significantly reduced, but the risk of trouble after a sudden power loss is also higher ...

This risk is still lower than that for UFS with the same sudden power loss, and way lower than the risk of ending up with a dead eMMC.

-

@w0w said in Another Netgate with storage failure, 6 in total so far:

@fireodo said in Another Netgate with storage failure, 6 in total so far:

But I have (going as far as an extreme of 1800), and the writes are significantly reduced, but the risk of trouble after a sudden power loss is also higher ...

This risk is still lower than that for UFS with the same sudden power loss, and way lower than the risk of ending up with a dead eMMC.

That is correct.

I began investigating the problem in 2021, after a Kingston SSD in my machine was suddenly killed (at the time, eMMC problems were not yet known, or no one was aware of them):

https://forum.netgate.com/topic/165758/average-writes-to-disk/62?_=1743795093996

-

@tinfoilmatt We use Zabbix, along with the script and template created by rbicelli.

I have my own fork of the script and template that I can post later.

Zabbix Agent and Proxy packages are available in pfSense (including Zabbix 7.0 in 24.11!).

You will need to set up a Zabbix server, which is pretty easy to do.

vfs.dev.write is the agent function for getting disk write activity.
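Whichever tool supplies the numbers (iostat, a Zabbix item, or a script), an average write rate is commonly derived by sampling a cumulative bytes-written counter and dividing its growth by the elapsed time. A minimal sketch of that arithmetic, assuming you already collect (timestamp, cumulative bytes) pairs; the Sample type and the data source are hypothetical and not part of Zabbix or iostat:

```python
from dataclasses import dataclass


@dataclass
class Sample:
    t: float            # sample time in seconds (e.g. Unix time)
    bytes_written: int  # cumulative bytes written since boot (hypothetical source)


def average_write_rate(samples: list[Sample]) -> float:
    """Average write rate in bytes/s across consecutive counter samples.

    Intervals where the counter goes backwards (e.g. after a reboot) or where
    time does not advance are skipped rather than counted as negative writes.
    """
    total_bytes = 0
    total_time = 0.0
    for prev, cur in zip(samples, samples[1:]):
        delta_b = cur.bytes_written - prev.bytes_written
        delta_t = cur.t - prev.t
        if delta_b < 0 or delta_t <= 0:
            continue  # counter reset or bad timestamp: ignore this interval
        total_bytes += delta_b
        total_time += delta_t
    if total_time == 0:
        raise ValueError("need at least two valid samples")
    return total_bytes / total_time
```

Sampling every 60 seconds, as described later in the thread, is usually enough for a long-term average; the reset handling matters because a reboot zeroes most kernel counters.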

-

@andrew_cb said in Another Netgate with storage failure, 6 in total so far:

I am interested to see how my data compares with a larger sample size.

Here they come:

2021 - 2025:

| vfs.zfs.txg.timeout | GBW/Day |
|---|---|
| 5 (initial and default settings) | 19.45 |
| 120 | 1.79 |
| 240 | 0.78 |
| 360 | 0.72 |
| 600 | 0.59 |
| 900 | 0.42 |
| 1200 | 0.36 |
| 1800 | 0.28 |

(I have no "/s" values; these are median per-day values.)

(GBW = gigabytes written)

Edit 23.04.2025: Today I had a power failure that exhausted the UPS battery. The timeout was set to 2400. pfSense shut down as configured in apcupsd, and after power came back, it came up without any issues.

-

Has this been added as a Redmine issue so it can be merged? This fix should be used to mitigate the high risk of failure.

-

B bmeeks referenced this topic on

-

@fireodo Awesome, thanks for sharing!

Your GBW/Day at the default timeout of 5 is similar to what I am seeing. Most devices are doing about 23GBW/Day (nearly 1GB per hour).
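To compare per-day figures like these with the per-second rates used earlier in the thread, a small conversion helps. This sketch assumes decimal units (1 GB = 1,000,000 KB); with binary units the results shift by a few percent:

```python
SECONDS_PER_DAY = 86_400
KB_PER_GB = 1_000_000  # decimal units assumed


def gb_per_day_to_kb_per_s(gb_per_day: float) -> float:
    """Convert an average of GB written per day to KB/s."""
    return gb_per_day * KB_PER_GB / SECONDS_PER_DAY


def kb_per_s_to_gb_per_day(kb_per_s: float) -> float:
    """Convert an average KB/s write rate to GB written per day."""
    return kb_per_s * SECONDS_PER_DAY / KB_PER_GB


if __name__ == "__main__":
    for gbw in (23, 19.45, 1.79, 0.28):
        print(f"{gbw:>6} GB/day = {gb_per_day_to_kb_per_s(gbw):7.1f} KB/s")
```

By this conversion, 23 GB/day is about 266 KB/s and 19.45 GB/day is about 225 KB/s, in the same range as the 250-280KB/s seen on the ZFS fleet with the default timeout.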

-

Introduction

While further researching ZFS settings, I found this enlightening 2016 FreeBSD article, "Tuning ZFS". The introduction states:

The most important tuning you can perform for a database is the dataset block size—through the recordsize property. The ZFS recordsize for any file that might be overwritten needs to match the block size used by the application.

Tuning the block size also avoids write amplification. Write amplification happens when changing a small amount of data requires writing a large amount of data. Suppose you must change 8 KB in the middle of a 128 KB block. ZFS must read the 128 KB, modify 8 KB somewhere in it, calculate a new checksum, and write the new 128 KB block. ZFS is a copy-on-write filesystem, so it would wind up writing a whole new 128 KB block just to change that 8 KB. You don’t want that.

Now multiply this by the number of writes your database makes. Write amplification eviscerates performance.

It can also affect the life of SSDs and other flash-based storage that can handle a limited volume of writes over their lifetimes

...

ZFS metadata can also affect databases. When a database is rapidly changing, writing out two or three copies of the metadata for each change can take up a significant number of the available IOPS of the backing storage. Normally, the quantity of metadata is relatively small compared to the default 128 KB record size. Databases work better with small record sizes, though. Keeping three copies of the metadata can cause as much disk activity, or more, than writing actual data to the pool.

Newer versions of OpenZFS also contain a redundant_metadata property, which defaults to all.

(emphasis added)

Interpretation

My understanding of this is that even if only a few entries are added to a log file, ZFS will write an entire 128 KB block to disk. At the default interval of 5 seconds, there are likely dozens of 128 KB blocks that need to be written, even though each block might only contain a few KB of new data. For example, if a log file is written every 1 second, then in the span of 5 seconds there could be 640 KB (5 x 128 KB blocks) of data to write, just for that single log file.

When the interval is increased, the pending writes can be more efficiently coalesced into fewer, fuller 128 KB blocks, which results in less data needing to be written overall.
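The interpretation above can be made concrete with a deliberately crude model. This is a sketch under stated assumptions, not a description of ZFS internals: it assumes each actively written file dirties exactly one full record per transaction group (appends within one interval are coalesced into that record) and ignores compression, metadata copies, and the eMMC's own write amplification. The function name and parameters are hypothetical:

```python
SECONDS_PER_DAY = 86_400


def max_data_bytes_per_day(dirty_files: int, recordsize: int,
                           txg_timeout_s: float) -> float:
    """Crude upper bound on file data rewritten per day, in bytes.

    Assumptions (a simplification, not measured ZFS behaviour):
    - a transaction group is synced every txg_timeout_s seconds;
    - each of dirty_files files is appended to in every interval, and all of
      an interval's appends land in the file's last record, so that record is
      rewritten once per sync;
    - each rewrite costs a full recordsize bytes (no compression, no
      metadata copies, no flash-level write amplification).
    """
    syncs_per_day = SECONDS_PER_DAY / txg_timeout_s
    return dirty_files * recordsize * syncs_per_day


if __name__ == "__main__":
    record = 128 * 1024  # the 128K default recordsize reported by zfs get
    for timeout in (5, 10, 30, 60):
        gb = max_data_bytes_per_day(1, record, timeout) / 1e9
        print(f"txg every {timeout:>2}s: up to {gb:.2f} GB/day per busy file")
```

Under these assumptions the bound scales with 1 / txg_timeout, so going from 5s to 60s cuts it twelvefold. Real workloads include metadata, compression, and files that are not written every interval, none of which this model captures, so measured reductions will differ.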

Block Size

The block size can be checked with the command:

    zfs get recordsize

This produces output similar to the following:

    NAME PROPERTY VALUE SOURCE
    pfSense recordsize 128K default
    pfSense/ROOT recordsize 128K default
    pfSense/ROOT/auto-default-20221229181755 recordsize 128K default
    pfSense/ROOT/auto-default-20221229181755/cf recordsize 128K default
    pfSense/ROOT/auto-default-20221229181755/var_cache_pkg recordsize 128K default
    pfSense/ROOT/auto-default-20221229181755/var_db_pkg recordsize 128K default
    pfSense/ROOT/auto-default-20230729144653 recordsize 128K default
    pfSense/ROOT/auto-default-20230729144653/cf recordsize 128K default
    pfSense/ROOT/auto-default-20230729144653/var_cache_pkg recordsize 128K default
    pfSense/ROOT/auto-default-20230729144653/var_db_pkg recordsize 128K default
    pfSense/ROOT/auto-default-20230729145845 recordsize 128K default
    pfSense/ROOT/auto-default-20230729145845/cf recordsize 128K default
    pfSense/ROOT/auto-default-20230729145845/var_cache_pkg recordsize 128K default
    pfSense/ROOT/auto-default-20230729145845/var_db_pkg recordsize 128K default
    pfSense/ROOT/auto-default-20240531032905 recordsize 128K default
    pfSense/ROOT/auto-default-20240531032905/cf recordsize 128K default
    pfSense/ROOT/auto-default-20240531032905/var_cache_pkg recordsize 128K default
    pfSense/ROOT/auto-default-20240531032905/var_db_pkg recordsize 128K default
    pfSense/ROOT/default recordsize 128K default
    pfSense/ROOT/default@2022-12-29-18:17:55-0 recordsize - -
    pfSense/ROOT/default@2023-07-29-14:46:53-0 recordsize - -
    pfSense/ROOT/default@2023-07-29-14:58:45-0 recordsize - -
    pfSense/ROOT/default@2024-05-31-03:29:05-0 recordsize - -
    pfSense/ROOT/default@2024-05-31-03:36:02-0 recordsize - -
    pfSense/ROOT/default/cf recordsize 128K default
    pfSense/ROOT/default/cf@2022-12-29-18:17:55-0 recordsize - -
    pfSense/ROOT/default/cf@2023-07-29-14:46:53-0 recordsize - -
    pfSense/ROOT/default/cf@2023-07-29-14:58:45-0 recordsize - -
    pfSense/ROOT/default/cf@2024-05-31-03:29:05-0 recordsize - -
    pfSense/ROOT/default/cf@2024-05-31-03:36:02-0 recordsize - -
    pfSense/ROOT/default/var_cache_pkg recordsize 128K default
    pfSense/ROOT/default/var_cache_pkg@2022-12-29-18:17:55-0 recordsize - -
    pfSense/ROOT/default/var_cache_pkg@2023-07-29-14:46:53-0 recordsize - -
    pfSense/ROOT/default/var_cache_pkg@2023-07-29-14:58:45-0 recordsize - -
    pfSense/ROOT/default/var_cache_pkg@2024-05-31-03:29:05-0 recordsize - -
    pfSense/ROOT/default/var_cache_pkg@2024-05-31-03:36:02-0 recordsize - -
    pfSense/ROOT/default/var_db_pkg recordsize 128K default
    pfSense/ROOT/default/var_db_pkg@2022-12-29-18:17:55-0 recordsize - -
    pfSense/ROOT/default/var_db_pkg@2023-07-29-14:46:53-0 recordsize - -
    pfSense/ROOT/default/var_db_pkg@2023-07-29-14:58:45-0 recordsize - -
    pfSense/ROOT/default/var_db_pkg@2024-05-31-03:29:05-0 recordsize - -
    pfSense/ROOT/default/var_db_pkg@2024-05-31-03:36:02-0 recordsize - -
    pfSense/ROOT/default_20240531033558 recordsize 128K default
    pfSense/ROOT/default_20240531033558/cf recordsize 128K default
    pfSense/ROOT/default_20240531033558/var_cache_pkg recordsize 128K default
    pfSense/ROOT/default_20240531033558/var_db_pkg recordsize 128K default
    pfSense/cf recordsize 128K default
    pfSense/home recordsize 128K default
    pfSense/reservation recordsize 128K default
    pfSense/tmp recordsize 128K default
    pfSense/var recordsize 128K default
    pfSense/var/cache recordsize 128K default
    pfSense/var/db recordsize 128K default
    pfSense/var/log recordsize 128K default
    pfSense/var/tmp recordsize 128K default

As you can see, the recordsize of all datasets is 128 KB.
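When checking many datasets (or many firewalls), it may be easier to query in scripted mode and filter the result. A sketch assuming it runs somewhere with Python 3.9+ and the zfs command available; it uses zfs get -H -o name,value,source, which prints tab-separated lines without a header. The helper names are hypothetical:

```python
import subprocess


def parse_zfs_get(output: str) -> dict[str, tuple[str, str]]:
    """Parse output of `zfs get -H -o name,value,source <property>`.

    With -H, zfs prints one tab-separated line per dataset and no header.
    """
    props: dict[str, tuple[str, str]] = {}
    for line in output.splitlines():
        if not line.strip():
            continue
        name, value, source = line.split("\t")
        props[name] = (value, source)
    return props


def zfs_get(prop: str) -> dict[str, tuple[str, str]]:
    """Query one property for every dataset and snapshot via the zfs CLI."""
    proc = subprocess.run(
        ["zfs", "get", "-H", "-o", "name,value,source", prop],
        capture_output=True, text=True, check=True,
    )
    return parse_zfs_get(proc.stdout)


def differs_from(props: dict[str, tuple[str, str]], expected: str) -> list[str]:
    """Datasets whose value is not `expected`, skipping snapshots that report '-'."""
    return [name for name, (value, _source) in props.items()
            if value not in (expected, "-")]
```

For example, differs_from(zfs_get("recordsize"), "128K") would list any dataset whose recordsize has been changed, and the same pair works for redundant_metadata with "all".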

Redundant Metadata

The redundant_metadata setting can be checked with

    zfs get redundant_metadata

    NAME PROPERTY VALUE SOURCE
    pfSense redundant_metadata all default
    pfSense/ROOT redundant_metadata all default
    pfSense/ROOT/auto-default-20221229181755 redundant_metadata all default
    pfSense/ROOT/auto-default-20221229181755/cf redundant_metadata all default
    pfSense/ROOT/auto-default-20221229181755/var_cache_pkg redundant_metadata all default
    pfSense/ROOT/auto-default-20221229181755/var_db_pkg redundant_metadata all default
    pfSense/ROOT/auto-default-20230729144653 redundant_metadata all default
    pfSense/ROOT/auto-default-20230729144653/cf redundant_metadata all default
    pfSense/ROOT/auto-default-20230729144653/var_cache_pkg redundant_metadata all default
    pfSense/ROOT/auto-default-20230729144653/var_db_pkg redundant_metadata all default
    pfSense/ROOT/auto-default-20230729145845 redundant_metadata all default
    pfSense/ROOT/auto-default-20230729145845/cf redundant_metadata all default
    pfSense/ROOT/auto-default-20230729145845/var_cache_pkg redundant_metadata all default
    pfSense/ROOT/auto-default-20230729145845/var_db_pkg redundant_metadata all default
    pfSense/ROOT/auto-default-20240531032905 redundant_metadata all default
    pfSense/ROOT/auto-default-20240531032905/cf redundant_metadata all default
    pfSense/ROOT/auto-default-20240531032905/var_cache_pkg redundant_metadata all default
    pfSense/ROOT/auto-default-20240531032905/var_db_pkg redundant_metadata all default
    pfSense/ROOT/default redundant_metadata all default
    pfSense/ROOT/default@2022-12-29-18:17:55-0 redundant_metadata - -
    pfSense/ROOT/default@2023-07-29-14:46:53-0 redundant_metadata - -
    pfSense/ROOT/default@2023-07-29-14:58:45-0 redundant_metadata - -
    pfSense/ROOT/default@2024-05-31-03:29:05-0 redundant_metadata - -
    pfSense/ROOT/default@2024-05-31-03:36:02-0 redundant_metadata - -
    pfSense/ROOT/default/cf redundant_metadata all default
    pfSense/ROOT/default/cf@2022-12-29-18:17:55-0 redundant_metadata - -
    pfSense/ROOT/default/cf@2023-07-29-14:46:53-0 redundant_metadata - -
    pfSense/ROOT/default/cf@2023-07-29-14:58:45-0 redundant_metadata - -
    pfSense/ROOT/default/cf@2024-05-31-03:29:05-0 redundant_metadata - -
    pfSense/ROOT/default/cf@2024-05-31-03:36:02-0 redundant_metadata - -
    pfSense/ROOT/default/var_cache_pkg redundant_metadata all default
    pfSense/ROOT/default/var_cache_pkg@2022-12-29-18:17:55-0 redundant_metadata - -
    pfSense/ROOT/default/var_cache_pkg@2023-07-29-14:46:53-0 redundant_metadata - -
    pfSense/ROOT/default/var_cache_pkg@2023-07-29-14:58:45-0 redundant_metadata - -
    pfSense/ROOT/default/var_cache_pkg@2024-05-31-03:29:05-0 redundant_metadata - -
    pfSense/ROOT/default/var_cache_pkg@2024-05-31-03:36:02-0 redundant_metadata - -
    pfSense/ROOT/default/var_db_pkg redundant_metadata all default
    pfSense/ROOT/default/var_db_pkg@2022-12-29-18:17:55-0 redundant_metadata - -
    pfSense/ROOT/default/var_db_pkg@2023-07-29-14:46:53-0 redundant_metadata - -
    pfSense/ROOT/default/var_db_pkg@2023-07-29-14:58:45-0 redundant_metadata - -
    pfSense/ROOT/default/var_db_pkg@2024-05-31-03:29:05-0 redundant_metadata - -
    pfSense/ROOT/default/var_db_pkg@2024-05-31-03:36:02-0 redundant_metadata - -
    pfSense/ROOT/default_20240531033558 redundant_metadata all default
    pfSense/ROOT/default_20240531033558/cf redundant_metadata all default
    pfSense/ROOT/default_20240531033558/var_cache_pkg redundant_metadata all default
    pfSense/ROOT/default_20240531033558/var_db_pkg redundant_metadata all default
    pfSense/cf redundant_metadata all default
    pfSense/home redundant_metadata all default
    pfSense/reservation redundant_metadata all default
    pfSense/tmp redundant_metadata all default
    pfSense/var redundant_metadata all default
    pfSense/var/cache redundant_metadata all default
    pfSense/var/db redundant_metadata all default
    pfSense/var/log redundant_metadata all default
    pfSense/var/tmp redundant_metadata all default

Conclusion

It did not make sense to me why ZFS would have so much data to flush every 5 seconds when the actual amount of changed data is low.

The 128 KB block size and redundant_metadata setting are the missing links as to why the write activity is so much higher with ZFS.

Further Testing

The effects of changing the recordsize to values smaller than 128 KB, and of changing redundant_metadata to "most" instead of "all", need to be tested.

-

It looks like the ZFS block size can be changed with the command

    sudo zfs set recordsize=[size] [dataset name]

However, this will not change the block size of existing files.

In theory, this should help with log files, since a new file is created at rotation.

-

@andrew_cb said in Another Netgate with storage failure, 6 in total so far:

For example, if a log file is written every 1 second, then in the span of 5 seconds there could be 640 KB (5 x 128 KB blocks) of data to write, just for that single log file.

Maybe, but it could also be a single block if all the updates fit into it (there is also compression).

(Edit: 5 updates to one log file, or to 5 log files.)

Note that the amount of metadata changes with recordsize (a smaller recordsize means more blocks per file), as do things like write speed.

It stands to reason, though, that most of the writing on pfSense is log files or periodic file updates such as pfBlocker feeds.

-

K keyser referenced this topic on

-

I was able to do some testing of different zfs_txg_timeout values. I am using Zabbix to call a PHP script every 60 seconds and store the value in an item. Attached to the item is a problem trigger that fires whenever the value changes. The data is visualized using Grafana, with an annotation query that overlays the "problems" (each time the value changes) as vertical red lines. There is a slight delay before Zabbix detects the change, so the red lines do not always align exactly with changes on the graph.

The testing was performed on 3 active firewalls performing basic functions, so they are representative of real-world performance in our environment and likely many others. Notice how the graphs for all 3 firewalls are nearly identical, reinforcing that the underlying OS drives this behavior.

Changing from 5s to 120s results in a 90% reduction in write activity. The change percentages shown on the graph are slightly low because the start of the test period includes some data from the previous 10s setting, before the switch to 5s. Interestingly, values of 45 and 90 seem to produce more variation in the write rate compared to other values.

Looking at just the change from 5s to 10s, the average write rate has been reduced by 35%.

Changing from 10s to 15s gives a further 30% average reduction in write rate.

There are diminishing returns from values above 30.

The gain from 15s to 120s is 32 percentage points: 16 points from 15s to 30s, and an additional 16 points from 30s to 120s.

Calculating for a 16GB storage device with a TBW of 48 gives us the following:

| timeout (s) | KB/s | change | lifespan (days) | lifespan (years) |
|---|---|---|---|---|
| 5 | 309 | | 656 | 1.8 |
| 10 | 175 | -43% | 1159 | 3.2 |
| 15 | 129 | -58% | 1572 | 4.3 |
| 20 | 104 | -66% | 1950 | 5.3 |
| 30 | 81 | -74% | 2503 | 6.9 |
| 45 | 66 | -79% | 3072 | 8.4 |
| 60 | 59 | -81% | 3437 | 9.4 |
| 90 | 39 | -87% | 5199 | 14.2 |
| 120 | 31 | -90% | 6541 | 17.9 |

Based on this data, changing the default value of zfs_txg_timeout to 10 or 15 will double the lifespan of devices with eMMC storage without causing too much data loss in the event of an unclean shutdown.
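The change and years columns can be rederived from the measured rates and day counts, which also shows how much 10s and 15s extend lifespan relative to the 5s default. This sketch only reuses the table's own numbers (the ROWS name is illustrative); it does not recompute the day counts, which depend on the write budget assumed:

```python
# (timeout_s, kb_per_s, lifespan_days) as listed in the table
ROWS = [
    (5, 309, 656), (10, 175, 1159), (15, 129, 1572), (20, 104, 1950),
    (30, 81, 2503), (45, 66, 3072), (60, 59, 3437), (90, 39, 5199),
    (120, 31, 6541),
]


def change_vs_baseline(rate: float, baseline: float) -> float:
    """Percentage change of rate relative to baseline (negative = reduction)."""
    return (rate - baseline) / baseline * 100


def summarize(rows):
    """Yield (timeout, % change in rate, lifespan years, lifespan multiplier vs first row)."""
    _, base_rate, base_days = rows[0]
    for timeout, rate, days in rows:
        yield (timeout,
               round(change_vs_baseline(rate, base_rate)),
               round(days / 365, 1),
               round(days / base_days, 2))


if __name__ == "__main__":
    for row in summarize(ROWS):
        print(row)
```

By this arithmetic, 10s gives about 1.77x and 15s about 2.4x the lifespan at the 5s default.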

This change, combined with improved eMMC monitoring, would nearly eliminate unexpected eMMC failures due to wearout.

-

Leaving this here for reference: https://openzfs.github.io/openzfs-docs/Performance%20and%20Tuning/Module%20Parameters.html#zfs-txg-timeout

-

@andrew_cb said in Another Netgate with storage failure, 6 in total so far:

Based on this data, changing the default value of zfs_txg_timeout to 10 or 15 will double the lifespan of devices with eMMC storage without causing too much data loss in the event of an unclean shutdown.

This is a great write-up. Thanks!

-

P Patch referenced this topic on