Installing pfSense on a Supermicro 5018A-FTN4 SuperServer

-

So I figured out the AHCI issue when enabling TRIM for the SSD I was using. I attempted to use a Crucial M500, which uses a Marvell controller, and no matter which settings I changed in the BIOS it refused to mount the / file system once AHCI was enabled, despite having the proper fstab entries.

I tried swapping the M500 out for a Samsung 840 EVO and it solved the problem. I was able to install and then enable AHCI without any trouble.

Hope this helps others.

-

Hi cfipilot

Did you ever get past that point? I too get stuck at:

ACPI APIC Table: <INTEL TIANO>

I first install the system to the SSD. Then I start to do the modifications. When I get to the point of logging in as single-user, the system hangs at the point above. It is an Intel SATA SSD.

-- SOLVED --

I could not boot the server in single-user mode, so I ended up reverting the patch that made it difficult to enable TRIM.

I reverted this commit (just copy-pasted it back in place):

http://freshbsd.org/commit/pfsense/aa87bae5fc11a857c9dc7793fc4a932cc860e94a

Then I created the file (which makes the reverted code above enable TRIM):

/root/TRIM_set

and did a reboot. That solves "enable TRIM" without being in single-user mode.

-

Does pfSense 2.2 run well on this?

-

Version 2.1.5 seems to install "as is".

The same seems to go for 2.2. You always need to increase the mbuf limit.

I have tried one upgrade from 2.1.5 to 2.2 that crashed the system and required a reinstall. So be close to your box when upgrading.

I have had two of these boxes running pfsense 2.2. One was (and is) running with no problems at all. The other had a serious DNS problem so clients on LAN could not resolve addresses. I think I caused the DNS error and not incompatibility between hardware and pfsense. But pay attention anyway.

Regarding TRIM, if you use an SSD:

This part is tricky and could be subject to change: https://forum.pfsense.org/index.php?topic=66622.msg364411#msg364411

Log in with SSH and open the shell.

Run: /usr/local/sbin/ufslabels.sh

Press 'y' to accept.

Add the line ahci_load="YES" to /boot/loader.conf.local
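If you would rather script the loader.conf.local edit than open an editor, here is a minimal Python sketch. It is not part of the procedure above. It assumes a Python interpreter is available where you run it and that you have root rights. `ensure_line` is a hypothetical helper name. The sketch appends the line only when an identical line is not already there, so running it twice does not duplicate the entry.

```python
from pathlib import Path


def ensure_line(path: str, line: str) -> bool:
    """Append `line` to the file at `path` unless an identical line is present.

    Returns True if the file was changed, False if the line already existed.
    The file is created if it does not exist yet.
    """
    p = Path(path)
    text = p.read_text() if p.exists() else ""
    # Compare whole lines (ignoring surrounding whitespace), not substrings.
    if line.strip() in (existing.strip() for existing in text.splitlines()):
        return False
    with p.open("a") as f:
        # Start on a fresh line if the file lacks a trailing newline.
        if text and not text.endswith("\n"):
            f.write("\n")
        f.write(line + "\n")
    return True


# Hypothetical usage on the firewall itself, as root:
# ensure_line("/boot/loader.conf.local", 'ahci_load="YES"')
```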

Reboot the machine.

##EITHER REMOTE:

Revert the patch by editing /root/rc:

http://freshbsd.org/commit/pfsense/aa87bae5fc11a857c9dc7793fc4a932cc860e94a

Log in with SSH, open the shell and run:

touch /root/TRIM_set; /etc/rc.reboot

##ELSE IF YOU HAVE LOCAL ACCESS

Log in as single user and run:

/sbin/tunefs -t enable /

/etc/rc.reboot

Once the machine has rebooted, check the status with: tunefs -p /
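To check the result from a script instead of by eye, here is a small Python sketch. It assumes the relevant line of `tunefs -p` output looks like `tunefs: trim: (-t)   enabled`, so compare that against what your own box prints. It also assumes Python is available where you run it. tunefs appears to print its report on stderr (the lines carry a `tunefs:` prefix), which is why both streams are captured. The function names are hypothetical. If you can't run Python on the firewall, paste the captured output into `parse_trim_state` on another machine.

```python
import re
import subprocess
from typing import Optional

# Assumed shape of the TRIM line in FreeBSD `tunefs -p` output, e.g.
#   tunefs: trim: (-t)                                        enabled
TRIM_RE = re.compile(r"trim:\s*\(-t\)\s+(enabled|disabled)", re.IGNORECASE)


def parse_trim_state(tunefs_output: str) -> Optional[bool]:
    """Return True/False for the TRIM flag, or None if no trim line is found."""
    match = TRIM_RE.search(tunefs_output)
    if match is None:
        return None
    return match.group(1).lower() == "enabled"


def trim_enabled(mountpoint: str = "/") -> Optional[bool]:
    """Run `tunefs -p <mountpoint>` and report the TRIM flag."""
    result = subprocess.run(
        ["tunefs", "-p", mountpoint],
        stdout=subprocess.PIPE,
        stderr=subprocess.PIPE,
        universal_newlines=True,
    )
    # Search both streams, since the report may go to stderr.
    return parse_trim_state(result.stdout + result.stderr)
```

On the firewall, `trim_enabled("/")` should return True after the reboot if TRIM took effect.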

See if trim is reported as enabled in the output.

-

Nice boxes and I bought a pair on the basis of this thread but the network throughput seems poor.

I've got two of them with CARP enabled and doing an iperf test on the CARP interfaces (igb1 on both boxes connected by a 4" cable so no switch involved) I'm only seeing ~550Mb/s (larger iperf windows don't make any difference).

    Client connecting to 10.10.1.1, TCP port 5001
    TCP window size: 65.0 KByte (default)
    ------------------------------------------------------------
    [  3] local 10.10.1.2 port 64350 connected with 10.10.1.1 port 5001
    [ ID] Interval       Transfer     Bandwidth
    [  3]  0.0-10.0 sec   654 MBytes   547 Mbits/sec

The NICs are coming up at a gigabit as you'd expect:

    igb1: flags=8843<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST> metric 0 mtu 1500
        options=403bb<RXCSUM,TXCSUM,VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,TSO4,TSO6,VLAN_HWTSO>
        ether 0c:c4:7a:32:5c:31
        inet6 fe80::ec4:7aff:fe32:5c31%igb1 prefixlen 64 scopeid 0x2
        inet 10.10.1.1 netmask 0xffffff00 broadcast 10.10.1.255
        nd6 options=21<PERFORMNUD,AUTO_LINKLOCAL>
        media: Ethernet autoselect (1000baseT <full-duplex>)
        status: active

I've increased nmbclusters to 1,000,000 (as suggested on the pfSense website) and tried turning off TSO, but I never seem to see much more than half a gigabit.

I'm running pfSense 2.2.1-RELEASE (amd64), 8GB RAM and a pair of 128GB Samsung Evo pro drives in a GEOM Mirror.

As I have a gigabit fibre arriving in a few weeks and I've put these boxes together to replace our aging IPcop firewalls, I really would like to get them running as close to a gigabit as possible.

Anyone got any suggestions as to what I can try?

-

Don't know if this will do it. But if you use the shaper, then try to disable the "Explicit Congestion Notification". It seems to "eat" a lot of throughput in my networks at least.

-

That's a config issue somewhere; these are capable of way greater speeds than you are seeing. Don't worry, we just need to find the turbo trigger :)

-

I wish I knew where this turbo trigger was! :)

For fun I connected igb2 on each box as OPT2 (so there was no chance of CARP interfering) and tried iperf on that link:

    Client connecting to 10.9.8.2, TCP port 5001
    TCP window size: 65.0 KByte (default)
    ------------------------------------------------------------
    [  3] local 10.9.8.1 port 54294 connected with 10.9.8.2 port 5001
    [ ID] Interval       Transfer     Bandwidth
    [  3]  0.0-10.0 sec   633 MBytes   531 Mbits/sec

So that's pretty much the same speed on a pair of ports used for nothing other than the test.
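The Transfer and Bandwidth columns use different units, which makes these summary lines easy to misread. The sketch below assumes iperf 2's default output, which is the format quoted in this thread. It parses a summary line and recomputes the bandwidth. For example, 633 MBytes is 633 × 2^20 bytes, and 633 × 2^20 × 8 bits / 10 s ≈ 531 × 10^6 bit/s, which matches the reported 531 Mbits/sec. `check_summary` is a hypothetical helper and not part of iperf.

```python
import re

# iperf 2 reports Transfer in binary units (1 MByte = 2**20 bytes) but
# Bandwidth in decimal units (1 Mbit = 10**6 bits).
BYTE_UNITS = {"KBytes": 2**10, "MBytes": 2**20, "GBytes": 2**30}

SUMMARY_RE = re.compile(
    r"(?P<start>\d+\.\d+)-\s*(?P<end>\d+\.\d+)\s+sec\s+"
    r"(?P<amount>\d+(?:\.\d+)?)\s+(?P<unit>[KMG]Bytes)\s+"
    r"(?P<rate>\d+(?:\.\d+)?)\s+Mbits/sec"
)


def check_summary(line: str) -> tuple:
    """Return (reported Mbit/s, Mbit/s recomputed from Transfer and Interval)."""
    m = SUMMARY_RE.search(line)
    if m is None:
        raise ValueError(f"not an iperf summary line: {line!r}")
    seconds = float(m["end"]) - float(m["start"])
    bits = float(m["amount"]) * BYTE_UNITS[m["unit"]] * 8
    return float(m["rate"]), bits / seconds / 1e6


reported, recomputed = check_summary("[ 3] 0.0-10.0 sec 633 MBytes 531 Mbits/sec")
print(f"reported {reported:.0f} Mbit/s, recomputed {recomputed:.1f} Mbit/s, "
      f"{reported / 1000:.0%} of the 1 Gbit/s line rate")
# prints: reported 531 Mbit/s, recomputed 531.0 Mbit/s, 53% of the 1 Gbit/s line rate
```

Single-stream TCP over gigabit Ethernet with a 1500-byte MTU tops out a little over 940 Mbit/s rather than 1000, so the ~940 to 950 Mb/s figures elsewhere in the thread are roughly the practical ceiling.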

I also tried disabling the disabling (!) of the hardware TSO and LRO in Advanced->Networking. No difference.

I had ntopng installed on one of the boxes…. deleted that and no difference :(

MBuf usage is at 4%, CPU load near zero, 4% RAM used so the systems are basically twiddling their thumbs and doing nothing..... but they still can't pass data between themselves at gigabit speeds. I'm not using shaper.

Can anyone suggest what else I can try or do to get these NICs working?
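For anyone who wants to check mbuf headroom from the shell rather than the dashboard, FreeBSD's `netstat -m` reports mbuf cluster usage. This Python sketch assumes that the dashboard's MBUF percentage roughly corresponds to total clusters divided by the cluster limit (kern.ipc.nmbclusters); that mapping is an assumption and has not been confirmed against pfSense's code. It also assumes the output line has the shape shown in the comment. `mbuf_cluster_usage` is a hypothetical helper, and the sample numbers are illustrative only.

```python
import re

# FreeBSD `netstat -m` contains a line shaped like
#   256/2294/2550/1000000 mbuf clusters in use (current/cache/total/max)
CLUSTERS_RE = re.compile(
    r"(\d+)/(\d+)/(\d+)/(\d+)\s+mbuf clusters in use \(current/cache/total/max\)"
)


def mbuf_cluster_usage(netstat_m_output: str) -> float:
    """Percentage of the mbuf cluster limit that is currently allocated."""
    m = CLUSTERS_RE.search(netstat_m_output)
    if m is None:
        raise ValueError("no 'mbuf clusters in use' line found")
    current, cache, total, limit = (int(g) for g in m.groups())
    return 100.0 * total / limit


sample = "40000/10000/50000/1000000 mbuf clusters in use (current/cache/total/max)"
print(f"{mbuf_cluster_usage(sample):.1f}%")  # prints 5.0%
```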

-

Been doing some more testing and it looks like it's a packet filtering issue rather than the NICs themselves.

Using my test OPT2 'network' (igb2 on both machines connected with a short cable), if I disable all packet filtering (System->Advanced->Firewall), the speed reported by iperf leaps up to 900+ Mbit/s:

    ------------------------------------------------------------
    Client connecting to 10.9.8.1, TCP port 5001
    TCP window size:  129 KByte (WARNING: requested  128 KByte)
    ------------------------------------------------------------
    [  3] local 10.9.8.2 port 65147 connected with 10.9.8.1 port 5001
    [ ID] Interval       Transfer     Bandwidth
    [  3]  0.0-10.0 sec  1.06 GBytes   912 Mbits/sec

Turning off the packet filtering on just one of the two boxes doesn't improve things; it has to be both.

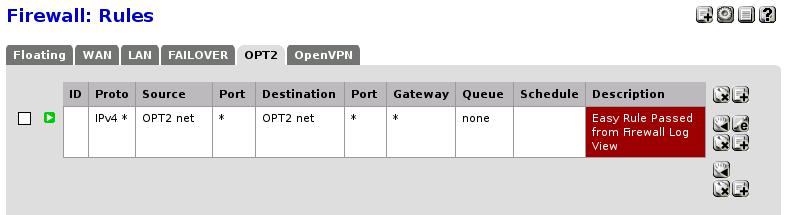

Firewall wise the packet filtering on OPT2 is simply a pass rule for everything:

The systems are not heavily loaded (load average showing 0) or out of resource.

Quite where this now takes me I don't know. Turning off pfSense's packet filtering doesn't seem like a Good Move :(

-

Firewall wise the packet filtering on OPT2 is simply a pass rule for everything:

You are aware the rule does not allow any traffic, right? The rule is for traffic that's never gonna hit the firewall in the first place. Fix the destination!

-

Errr no. ???

If I disable this one and only rule on OPT2, then iperf doesn't connect on the OPT2 network.

However, I've changed the destination to "OPT2 Address"... and the bandwidth is still ~550Mb/s unless I disable all packet filtering on both machines.

How do I start debugging this? Any suggestions will be most gratefully received.

-

Your rules are completely wrong, end of story.

-

OK. You're a Hero and I'm a pfSense newbie so I probably am doing something wrong :) The clever bit is finding out what!

What should the rule be for this test link?

NICs configured as OPT2 in two separate boxes connected back to back.

Unique network IPs (10.9.8.1/24 and 10.9.8.2/24) not used in any other part of the config.

For fun I changed the destination of the rule to 'any'... no difference, still ~550Mb/s :(

However.... that's with one thread. Googling around I noticed a post from yourgoodself where you used multiple threads so I played with that.

Two iperf threads (iperf -c 10.9.8.1 -P 2) give results between ~550 and ~850 Mb/s. Curiously inconsistent.

Push that up higher (I tried up to 100 threads) and above 4(ish) threads the max speed seems to stabilise and plateau around 950 Mb/s.

Am I seeing some facet of pfSense/FreeBSD/C2758 here, in that a single thread can't max out the 1Gb/s NIC?

(all of the above with packet filtering on).
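To repeat the thread-count experiment in one go, here is a Python sketch. It assumes iperf 2 (the output format quoted above) is on the PATH of the client box and that `iperf -s` is already running on the other end. `total_mbits` and `sweep` are hypothetical helper names. The sketch passes `-f m` so every rate comes out in Mbits/sec. It reads the [SUM] line, which iperf 2 prints when more than one parallel stream is used, and otherwise falls back to the single stream's line.

```python
import re
import subprocess

# Matches iperf 2 summary lines such as
#   [  3]  0.0-10.0 sec   633 MBytes   531 Mbits/sec
#   [SUM]  0.0-10.0 sec   1137 MBytes   951 Mbits/sec
RATE_RE = re.compile(r"^\[\s*(\w+)\].*?(\d+(?:\.\d+)?)\s+Mbits/sec", re.MULTILINE)


def total_mbits(output: str) -> float:
    """Aggregate Mbit/s from the output of one iperf 2 client run."""
    matches = RATE_RE.findall(output)
    if not matches:
        raise ValueError("no Mbits/sec line found in iperf output")
    for conn_id, rate in matches:
        if conn_id == "SUM":  # only printed when -P > 1
            return float(rate)
    return float(matches[-1][1])  # single stream: no [SUM] line


def sweep(host: str, thread_counts=(1, 2, 4, 8), seconds: int = 10) -> dict:
    """Run `iperf -c host -P n` for each n and collect the aggregate rate."""
    results = {}
    for n in thread_counts:
        cmd = ["iperf", "-c", host, "-P", str(n), "-t", str(seconds), "-f", "m"]
        out = subprocess.run(cmd, stdout=subprocess.PIPE, stderr=subprocess.STDOUT,
                             universal_newlines=True, check=True).stdout
        results[n] = total_mbits(out)
    return results
```

For the OPT2 test link, something like `sweep("10.9.8.1", thread_counts=(1, 2, 4, 8, 16))` run from the 10.9.8.2 box returns a dict of thread count to aggregate Mbit/s. Running it with packet filtering on and then off makes the two cases easy to compare.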

-

Been doing some more testing and I'm pretty sure the network slowdown is being caused by the PFSense firewalling.

I booted both PFsense boxes on Debian live usb sticks and iperf shows ~950Mb/s in both directions on the OPT2/igb2 interface… so it's not a hardware issue as such; the interfaces can run at ~1Gb/s.

I booted my primary box back into PFSense and left the second PFSense box on Debian and did some more iperf tests on the dedicated OPT2 interface (igb2 with a short link cable between the boxes).

Sending data from the Debian booted box into the PFSense box the speed is limited to around ~550Mb/s.

Sending data from the PFsense booted box to the Debian one, the speed is ~940Mb/s.

So it's data coming into the PFSense box that's the problem. If I disable the PFSense firewall (in System->Advanced->Firewall->Disable Firewall) then I can send data into the PFSense box at full speed.

I tried re-enabling the firewall and disabling NAT (Firewall->NAT->Disable Outbound NAT) and that made no difference... still ~550Mb/s.

The firewall rules on OPT2 are a simple allow-anything rule, but bearing in mind doktornotor's comment I disabled my rule, ran iperf with the PFSense box as the server, and then used the 'Add rule' feature of PFSense's log to add a rule permitting iperf's traffic (as attached).

No difference.... still limited to ~550Mb/s sending data into PFSense.

So the bottom line is..... with firewalling enabled PFSense will not accept inbound data faster than around ~550Mb/s.

Turning off the firewall on my 1Gb/s WAN port really isn't an option :( so I'm asking for any suggestions as to what I can try to sort this out.

-

your rules still don't make sense ;)

Your source and destination are in the same subnet, so no routing can be done, and pfSense cannot forward anything.

Draw a schematic of your network layout. Also provide your interface configuration for both interfaces you wish to run iperf between.

To test, you could just allow all (source: * destination: *).
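The addressing point can be checked mechanically. In this minimal Python sketch (`same_subnet` is a hypothetical helper), both OPT2 addresses, 10.9.8.1/24 and 10.9.8.2/24, fall in 10.9.8.0/24. That means iperf traffic between them is delivered directly over the OPT2 cable to the firewall's own address, and nothing is routed through pfSense to another network.

```python
import ipaddress


def same_subnet(addr_a: str, addr_b: str) -> bool:
    """True if two interface addresses in CIDR form are on the same network."""
    net_a = ipaddress.ip_interface(addr_a).network
    net_b = ipaddress.ip_interface(addr_b).network
    return net_a == net_b


print(same_subnet("10.9.8.1/24", "10.9.8.2/24"))   # True: both in 10.9.8.0/24
print(same_subnet("10.9.8.1/24", "10.10.1.2/24"))  # False: OPT2 vs the CARP sync link
```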

-

Yeah just remove the BS from the rules source and destination and it will work.

-

Sorry Guys I'm having a real problem understanding why the rule for OPT2 is wrong and causing the inbound speed issue (especially as the active rule in my previous post is one created by PFSense itself). I have tried source: * and destination: * as shown in the above attachment but that doesn't help with the speed either.

Can you explain? or even suggest what the firewall rule for this simple test should be? (Note: with no rules for OPT2 the iperf test obviously fails as all traffic defaults to blocked).

As requested attached is a diagram. I'm testing on the OPT2/igb2 interfaces on both boxes as a simple case but the same speed issue is present on the LAN and FAILOVER interfaces (not tested the WAN side but I'd be amazed if that didn't have the same issue).

Also as requested here's the interface config from the PFSense booted box:

    igb0: flags=8943<UP,BROADCAST,RUNNING,PROMISC,SIMPLEX,MULTICAST> metric 0 mtu 1500
        options=407bb<RXCSUM,TXCSUM,VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,TSO4,TSO6,LRO,VLAN_HWTSO>
        ether 0c:c4:7a:32:5c:30
        inet6 fe80::ec4:7aff:fe32:5c30%igb0 prefixlen 64 scopeid 0x1
        inet 192.168.1.247 netmask 0xffffff00 broadcast 192.168.1.255
        inet 192.168.1.254 netmask 0xffffff00 broadcast 192.168.1.255 vhid 2
        nd6 options=21<PERFORMNUD,AUTO_LINKLOCAL>
        media: Ethernet autoselect (1000baseT <full-duplex>)
        status: active
        carp: MASTER vhid 2 advbase 1 advskew 0
    igb1: flags=8843<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST> metric 0 mtu 1500
        options=407bb<RXCSUM,TXCSUM,VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,TSO4,TSO6,LRO,VLAN_HWTSO>
        ether 0c:c4:7a:32:5c:31
        inet6 fe80::ec4:7aff:fe32:5c31%igb1 prefixlen 64 scopeid 0x2
        inet 10.10.1.1 netmask 0xffffff00 broadcast 10.10.1.255
        nd6 options=21<PERFORMNUD,AUTO_LINKLOCAL>
        media: Ethernet autoselect
        status: no carrier
    igb2: flags=8843<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST> metric 0 mtu 1500
        options=407bb<RXCSUM,TXCSUM,VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,TSO4,TSO6,LRO,VLAN_HWTSO>
        ether 0c:c4:7a:32:5c:32
        inet6 fe80::ec4:7aff:fe32:5c32%igb2 prefixlen 64 scopeid 0x3
        inet 10.9.8.1 netmask 0xffffff00 broadcast 10.9.8.255
        nd6 options=21<PERFORMNUD,AUTO_LINKLOCAL>
        media: Ethernet autoselect (1000baseT <full-duplex>)
        status: active
    igb3: flags=8943<UP,BROADCAST,RUNNING,PROMISC,SIMPLEX,MULTICAST> metric 0 mtu 1500
        options=407bb<RXCSUM,TXCSUM,VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,TSO4,TSO6,LRO,VLAN_HWTSO>
        ether 0c:c4:7a:32:5c:33
        inet6 fe80::ec4:7aff:fe32:5c33%igb3 prefixlen 64 scopeid 0x4
        inet X.X.X.106 netmask 0xffffffe0 broadcast X.X.X.127
        inet X.X.X.108 netmask 0xffffffe0 broadcast X.X.X.127 vhid 1
        nd6 options=21<PERFORMNUD,AUTO_LINKLOCAL>
        media: Ethernet autoselect (1000baseT <full-duplex>)
        status: active
        carp: MASTER vhid 1 advbase 1 advskew 0
    pflog0: flags=100<PROMISC> metric 0 mtu 33144
    pfsync0: flags=41<UP,RUNNING> metric 0 mtu 1500
        pfsync: syncdev: igb1 syncpeer: 10.10.1.2 maxupd: 128 defer: on
        syncok: 1
    lo0: flags=8049<UP,LOOPBACK,RUNNING,MULTICAST> metric 0 mtu 16384
        options=600003<RXCSUM,TXCSUM,RXCSUM_IPV6,TXCSUM_IPV6>
        inet 127.0.0.1 netmask 0xff000000
        inet6 ::1 prefixlen 128
        inet6 fe80::1%lo0 prefixlen 64 scopeid 0x7
        nd6 options=21<PERFORMNUD,AUTO_LINKLOCAL>
    enc0: flags=0<> metric 0 mtu 1536
        nd6 options=21<PERFORMNUD,AUTO_LINKLOCAL>
    ovpns2: flags=8051<UP,POINTOPOINT,RUNNING,MULTICAST> metric 0 mtu 1500
        options=80000<LINKSTATE>
        inet6 fe80::ec4:7aff:fe32:5c30%ovpns2 prefixlen 64 scopeid 0x9
        inet 10.0.8.1 --> 10.0.8.2 netmask 0xffffffff
        nd6 options=21<PERFORMNUD,AUTO_LINKLOCAL>
        Opened by PID 85860