ZFS ISSUES!!! built on Wed Jul 11 16:46:22 EDT 2018

-

I have not updated mine yet, but I will be getting out of work soon to test. I don't have remote IPMI set up yet, and I'm afraid to do it remotely right now since I would have no way to get it back up.

-

@raul-ramos It's a no-go for me on that snapshot, although I did not manually modify the config afterwards to add the lines back in.

-

Yes, this is an upgrade bug. A clean install works, but if you upgrade it to the next snapshot it is going to fail on boot.

-

Updated redmine as well...

- Clean install of 2.4.4-DEVELOPMENT (amd64), built on Fri Jul 13 10:56:07 EDT 2018, FreeBSD 11.2-RELEASE

- Restore config

- Reboot

- Upgrade and reboot (2.4.4.a.20180713.1957)

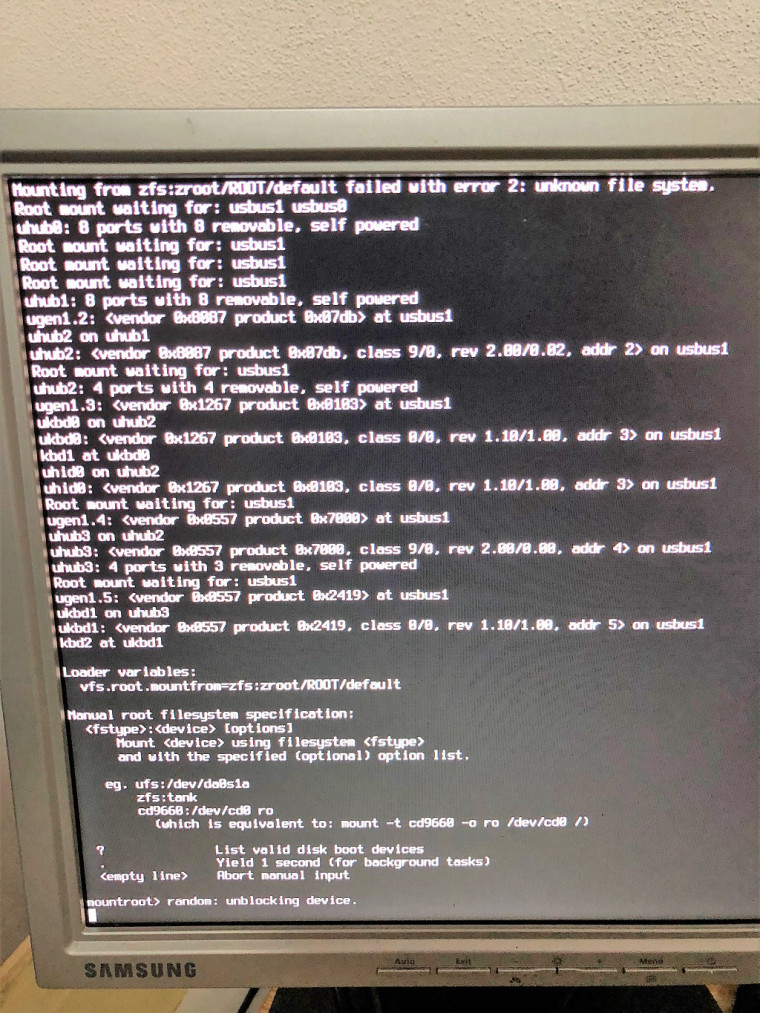

- Borked system, ZFS won't mount

-

@maverick_slo

Actually, you don't need to restore the config; at least it is not necessary to reproduce the problem. I used a clean install in a VM and updated it right after boot with option 13, without configuring anything.

-

Yeah I know.

I just wanted to note exactly what I did. But the lack of an official response makes me concerned...

At least they could pull the upgrades.

-

@maverick_slo

Maybe it's vacations. Delays are expected in summertime.

And this is the development branch, which has low priority I think, because everyone who uses it must be ready for failure. The other thing that bothers me is that 2.4.4 has too many major changes for a minor version.

-

Should be fixed with a recent snapshot.

-

Tried today's and got the same mountroot error.

-

@maverick_slo

Before upgrading, check that your /boot/loader.conf contains

zfs_load="YES"

Mine was just missing it. I added it manually and upgraded successfully to the Jul 17 7:07 version.
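That check can be scripted from a shell. Here is a minimal sketch; the helper name `has_loader_line` is made up for illustration (it is not part of pfSense or FreeBSD), and it only looks for an uncommented `VARIABLE="YES"` line in the given file:

```shell
# has_loader_line is a hypothetical helper, not a pfSense/FreeBSD tool.
# Usage: has_loader_line VARIABLE [FILE]
# Succeeds if FILE contains an uncommented VARIABLE="YES" line
# (quotes optional, trailing comment allowed). Only the spelling YES
# in upper case is matched in this sketch.
has_loader_line() {
    var="$1"
    file="${2:-/boot/loader.conf}"
    grep -Eq "^[[:space:]]*${var}=\"?YES\"?[[:space:]]*(#.*)?\$" "$file" 2>/dev/null
}
```

For example, `has_loader_line zfs_load && echo present || echo MISSING` reports on the default /boot/loader.conf. It does not look at other loader configuration files, so treat a "MISSING" result as a hint to inspect the file by hand.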

-

I do have zfs_load="YES" in loader.conf and it failed to upgrade from:

2.4.4-DEVELOPMENT (amd64)

built on Fri Jul 13 19:58:06 EDT 2018

FreeBSD 11.2-RELEASE

to:

I can't be sure of the snapshot version. It was around 14:00 GMT+1.

-

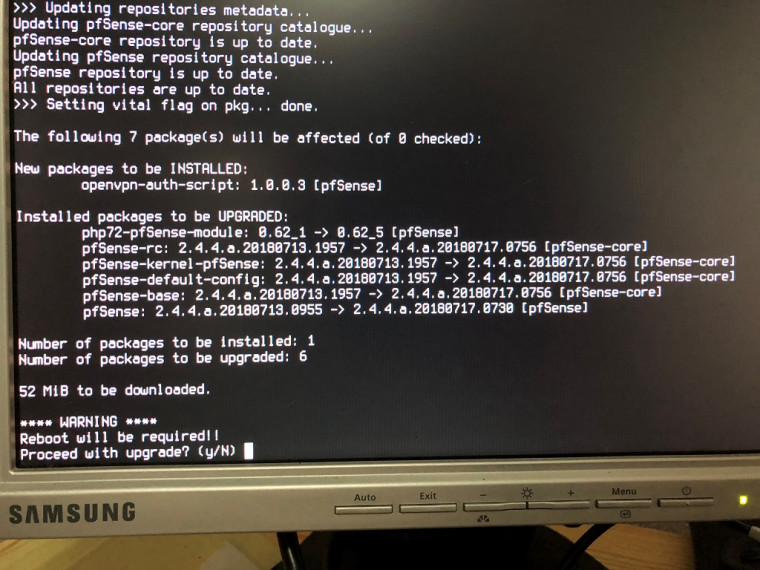

Clean install and upgrade, both versions are on the screenshot. No problem after boot and reboot.

-

Well.... it didn't work for me.

EDIT: sorry. Adding pics.

EDIT2: Now it should be here.

-

Not for me either... Same error.

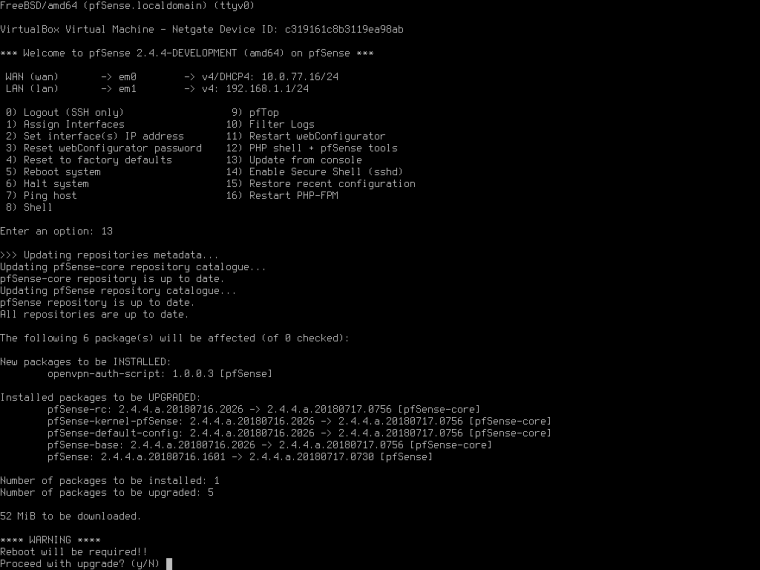

Updating repositories metadata...

Updating pfSense-core repository catalogue...

pfSense-core repository is up to date.

Updating pfSense repository catalogue...

pfSense repository is up to date.

All repositories are up to date.

Setting vital flag on pkg... done.

Downloading upgrade packages...

Updating pfSense-core repository catalogue...

pfSense-core repository is up to date.

Updating pfSense repository catalogue...

pfSense repository is up to date.

All repositories are up to date.

Checking for upgrades (9 candidates): ......... done

Processing candidates (9 candidates): ......... done

The following 10 package(s) will be affected (of 0 checked):

New packages to be INSTALLED:

openvpn-auth-script: 1.0.0.3 [pfSense]

Installed packages to be UPGRADED:

snort: 2.9.11.1_1 -> 2.9.11.1_2 [pfSense]

php72-pfSense-module: 0.62_1 -> 0.62_5 [pfSense]

pfSense-rc: 2.4.4.a.20180713.1056 -> 2.4.4.a.20180717.0756 [pfSense-core]

pfSense-kernel-pfSense: 2.4.4.a.20180713.1056 -> 2.4.4.a.20180717.0756 [pfSense-core]

pfSense-default-config: 2.4.4.a.20180713.1056 -> 2.4.4.a.20180717.0756 [pfSense-core]

pfSense-base: 2.4.4.a.20180713.1056 -> 2.4.4.a.20180717.0756 [pfSense-core]

pfSense: 2.4.4.a.20180713.0955 -> 2.4.4.a.20180717.0730 [pfSense]

git: 2.18.0 -> 2.18.0_1 [pfSense]

e2fsprogs-libuuid: 1.44.2_1 -> 1.44.3 [pfSense]

Number of packages to be installed: 1

Number of packages to be upgraded: 9

57 MiB to be downloaded.

[1/10] Fetching snort-2.9.11.1_2.txz: .......... done

[2/10] Fetching php72-pfSense-module-0.62_5.txz: ...... done

[3/10] Fetching pfSense-rc-2.4.4.a.20180717.0756.txz: .. done

[4/10] Fetching pfSense-kernel-pfSense-2.4.4.a.20180717.0756.txz: .......... done

[5/10] Fetching pfSense-default-config-2.4.4.a.20180717.0756.txz: . done

[6/10] Fetching pfSense-base-2.4.4.a.20180717.0756.txz: .......... done

[7/10] Fetching pfSense-2.4.4.a.20180717.0730.txz: . done

[8/10] Fetching git-2.18.0_1.txz: .......... done

[9/10] Fetching e2fsprogs-libuuid-1.44.3.txz: ..... done

[10/10] Fetching openvpn-auth-script-1.0.0.3.txz: . done

Checking integrity... done (0 conflicting)

Upgrading pfSense kernel...

Checking integrity... done (0 conflicting)

The following 1 package(s) will be affected (of 0 checked):

Installed packages to be UPGRADED:

pfSense-kernel-pfSense: 2.4.4.a.20180713.1056 -> 2.4.4.a.20180717.0756 [pfSense-core]

Number of packages to be upgraded: 1

[1/1] Upgrading pfSense-kernel-pfSense from 2.4.4.a.20180713.1056 to 2.4.4.a.20180717.0756...

[1/1] Extracting pfSense-kernel-pfSense-2.4.4.a.20180717.0756: .......... done

===> Keeping a copy of current kernel in /boot/kernel.old

Upgrade is complete. Rebooting in 10 seconds.

Success

-

Will try exactly 2.4.4.a.20180713.1056 to see what happens.

-

@maverick_slo

Yes, it refuses to boot with the same error after the upgrade.

I don't understand how it's happening... Ask on redmine, please.

-

ZFS needs opensolaris.ko.

/boot/loader.conf should have these lines:

opensolaris_load="YES"

zfs_load="YES"

-

I have a real hardware installation, and it has never had that first line.

kern.cam.boot_delay=10000

kern.geom.label.disk_ident.enable="0"

kern.geom.label.gptid.enable="0"

vfs.zfs.min_auto_ashift=12

zfs_load="YES"

autoboot_delay="3"

hw.usb.no_pf="1"

That's all I have, and I think it has been that way for years, since ZFS was introduced.

-

I just fixed a broken installation by booting it manually, loading the kernel and all the modules needed, and then editing loader.conf to add one line:

zfs_load="YES"

Works like a charm.
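Once the box is booted, the loader.conf edit itself can be done so that running it twice is harmless. A minimal sketch, assuming a plain /boot/loader.conf; the helper name `ensure_loader_line` is made up for illustration and is not a pfSense tool:

```shell
# ensure_loader_line is a hypothetical helper, not a pfSense/FreeBSD tool.
# Usage: ensure_loader_line LINE [FILE]
# Appends LINE to FILE only if that exact line is not already present.
ensure_loader_line() {
    line="$1"
    file="${2:-/boot/loader.conf}"
    # -x: match the whole line, -F: fixed string, -q: no output
    if grep -qxF "$line" "$file" 2>/dev/null; then
        return 0
    fi
    # If the file is non-empty and does not end in a newline, add one
    # first so the new setting does not get glued onto the last line.
    if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
        printf '\n' >> "$file"
    fi
    printf '%s\n' "$line" >> "$file"
}
```

Called as `ensure_loader_line 'zfs_load="YES"'`, it edits /boot/loader.conf; pass a second argument to try it on a copy first. It only matches the exact text, so a differently spelled or commented-out entry will not be detected and the line will be appended anyway.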

-

I have a 2.4.3 install that doesn't have the opensolaris_load="YES" line, and an earlier version of 2.4.4 with an untouched loader.conf that does have it.

When this problem began, I got it to boot by loading ZFS manually at startup, and I had to load the kernel, opensolaris.ko, and zfs.ko. When I load zfs.ko without opensolaris.ko, I get a "zfs needs opensolaris.ko" error message.

So I don't know...

Edit:

@w0w said in ZFS ISSUES!!! built on Wed Jul 11 16:46:22 EDT 2018:

Works like a charm.

Nice