Poor performance on igb driver

-

@marcop

I ran the test using an exe, not from a browser, so I don't know if it was over HTTPS.

-

Are you running any traffic shaping or anything else?

-

Have you tried any of the tips mentioned here: https://www.netgate.com/docs/pfsense/hardware/tuning-and-troubleshooting-network-cards.html?

-

I would expect a J1900 to pass that fairly easily in normal test conditions.

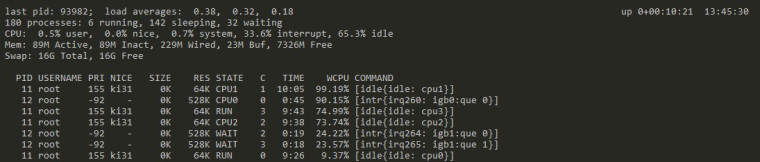

Can we see the output from top when the test is running?

Steve

-

I have set most of the settings from that tuning page.

The only thing I didn't set was hw.igb.num_queues=1; I have it set to 0.

I tried with Hardware Checksum Offloading, Hardware TCP Segmentation Offloading, and Hardware Large Receive Offloading both disabled and enabled, and I didn't notice any difference.

@Animosity022

No traffic shaping. I have the following services enabled:

dhcpd

dpinger

ntpd

openvpn OpenVPN server: Home LAN

openvpn_2 OpenVPN client:

sshd

syslogd

unbound

How do I record/export the live output from top?

Should I record a video?

-

You shouldn't need to set the igb queues to 1 any longer. That was a bug in much older versions.

Just hit q in top when it's showing something useful and it will quit out and leave whatever was there available to copy and paste out.

Are you routing traffic over OpenVPN?

Steve
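If copying from the interactive screen is awkward, a non-interactive capture is another option. The following is only a sketch: it assumes FreeBSD's top with its batch (-b), display count (-d) and delay (-s) options, and the function name and default output path are made up for illustration.

```sh
# Sketch: write a few non-interactive top snapshots to a file
# while the speed test is running.
capture_top() {
    out=${1:-/tmp/top-during-test.txt}   # hypothetical default path
    # -a full command lines, -S include system processes, -H show threads,
    # -b batch mode, -d 3 three displays, -s 2 two seconds between displays
    top -aSH -b -d 3 -s 2 > "$out"
}
```

Start the speed test, run capture_top in a shell on the firewall, then paste the contents of the file into the thread.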

-

Hi @bdaniel7

I also agree that the CPU should be able to handle 1Gbit speeds fairly easily, especially if you are not trying to run any IDS/IPS on top of regular kernel packet processing.

FreeBSD's network defaults aren't tuned too well for very high speed connections (although this is getting better in newer versions). Here is a link to a thread with some more parameters you can tune on your Intel NICs:

https://forum.netgate.com/topic/117072/dsl-reports-speed-test-causing-crash-on-upload

Of those parameters, I'd probably adjust the RX/TX descriptors and processing limits first and see if that yields any improvements.

Hope this helps.

-

I'm only using OpenVPN to access the internal network from outside, which happens when I'm at the office.

-

How are you testing when that is shown? What is connected to igb0 and igb1?

Is the CPU actually running at 1.9GHz? Do you have powerd enabled?

Try running

sysctl dev.cpu.0.freq

when the test is running.

Steve

-

igb0 is WAN, igb1 is LAN.

I'm starting top -aSH as you suggested, then during the peak transfer, I exit from top with q.

I had powerD enabled, with all (AC power, Battery power, Unknown power) set to Maximum. I disabled powerD but there is no difference.

And I get this:

sysctl: unknown oid 'dev.cpu.0.freq'

-

Hi @bdaniel7 - have you also tried tuning some of the additional parameters that I suggested? If yes, what were the results?

-

Sorry I meant where are you testing between? Speedtest client on igb1 connecting to a server via igb0?

Steve

-

@stephenw10

Yes, the media converter is connected to igb0, and my Windows 10 client is connected to the igb1 port.

-

I don't see that it has been asked yet, so: are you connecting using PPPoE?

Steve

-

@stephenw10

Yes, I'm using PPPoE.

-

Ah, then that is the cause of the problem. You can see that all the load is on one queue and hence one CPU core while the others are mostly idle. It's unfortunately a known issue with PPPoE in FreeBSD/pfSense right now.

https://bugs.freebsd.org/bugzilla/show_bug.cgi?id=203856

However, there is something you can do to mitigate it to some extent. Set:

sysctl net.isr.dispatch=deferred

You can add that as a system tunable in System > Advanced if it makes a significant difference.

Be aware that doing so may negatively impact some other things, ALTQ traffic shaping in particular.

Steve
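To make the "one busy core, the rest idle" pattern easier to spot, the per-CPU summary lines can be reduced to a single busy figure per core. This is a rough sketch only: it assumes the per-CPU lines that FreeBSD's top prints with -P (for example "CPU 0: ... 2.6% idle"), and the function name cpu_busy is invented for illustration.

```sh
# Sketch: read per-CPU summary lines (as printed by `top -P`) on stdin and
# print "cpuN <busy%>" for each core, where busy = 100 - idle.
cpu_busy() {
    awk '/^CPU [0-9]+:/ {
        cpu = $2; sub(/:/, "", cpu)          # "0:" -> "0"
        idle = ""
        # the idle percentage is the field just before the word "idle"
        for (i = 3; i < NF; i++) if ($(i + 1) ~ /^idle/) idle = $i
        sub(/%/, "", idle)
        printf "cpu%s %.1f\n", cpu, 100 - idle
    }'
}
```

For example, top -P -b -d 2 | cpu_busy during a speed test; if one core sits near 100 while the others stay low, that matches the single-queue behaviour described above.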

-

Thank you for the clarification.

I should've stated from the beginning that I'm on PPPoE.

I added the net.isr.dispatch setting, but I don't see any improvement in speed.

I am now evaluating which option is cheaper and faster: buying a different board with other (Intel) cards and keeping pfSense, or moving to Linux.

-

These are my settings, by the way:

hw.igb.fc_setting=0

hw.igb.rxd="4096"

hw.igb.txd="4096"

net.link.ifqmaxlen="8192"

hw.igb.max_interrupt_rate="64000"

hw.igb.rx_process_limit="-1"

hw.igb.tx_process_limit="-1"

hw.igb.0.fc=0

hw.igb.1.fc=0

net.isr.defaultqlimit=4096

net.isr.dispatch=deferred

net.pf.states_hashsize="2097152"

net.pf.source_nodes_hashsize="65536"

hw.igb.enable_msix: 1

hw.igb.enable_aim: 1

-

Hmm, you should see some improvement in speed with that setting. You may need to restart the ppp session or at least clear the firewall state. Or reboot if it's being applied by system tunables.

Steve
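One way to confirm whether a setting is actually in effect after a reboot is to compare the intended values with what the running system reports. The sketch below assumes the names are readable with sysctl -n on the firewall; the function name check_tunables is hypothetical, and it only reports mismatches without changing anything.

```sh
# Sketch: read key=value lines on stdin and print every setting whose
# live value (from `sysctl -n`) differs from the wanted value.
check_tunables() {
    while IFS='=' read -r key want; do
        [ -z "$key" ] && continue
        want=$(printf '%s' "$want" | tr -d '"')       # strip loader.conf quotes
        have=$(sysctl -n "$key" 2>/dev/null) || have="(unknown oid)"
        [ "$have" = "$want" ] ||
            printf '%s: want %s, have %s\n' "$key" "$want" "$have"
    done
}
```

For example, printf 'net.isr.dispatch=deferred\n' | check_tunables prints nothing if the setting is live, and a "want ..., have ..." line otherwise.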

-

@bdaniel7

I recently went through the process of identifying the performance culprit on the Intel NICs using a Lanner FW-7525A. It turns out that for the igb driver, you want

hw.igb.enable_msix=0

or

hw.pci.enable_msix=0

to nudge the driver towards using MSI interrupts over the less-performant MSI-X interrupts (suggested here). This made a 4x difference on my system. It is also recommended to disable TSO and LSO on the igb drivers, so include

net.inet.tcp.tso=0

as well. Hope this helps.