Playing with fq_codel in 2.4

-

On the BBR front, more progress.

https://lwn.net/ml/netdev/20180921155154.49489-1-edumazet@google.com/

-

@dtaht said in Playing with fq_codel in 2.4:

I don't get what you mean by codel + fq_codel. Did you post a setup somewhere?

@dtaht said in Playing with fq_codel in 2.4:

I don't get what anyone means when they say pie + fq_pie or codel + fq_codel. I really don't. I was thinking you were basically doing the FAIRQ -> lots of codel or pie queues method?

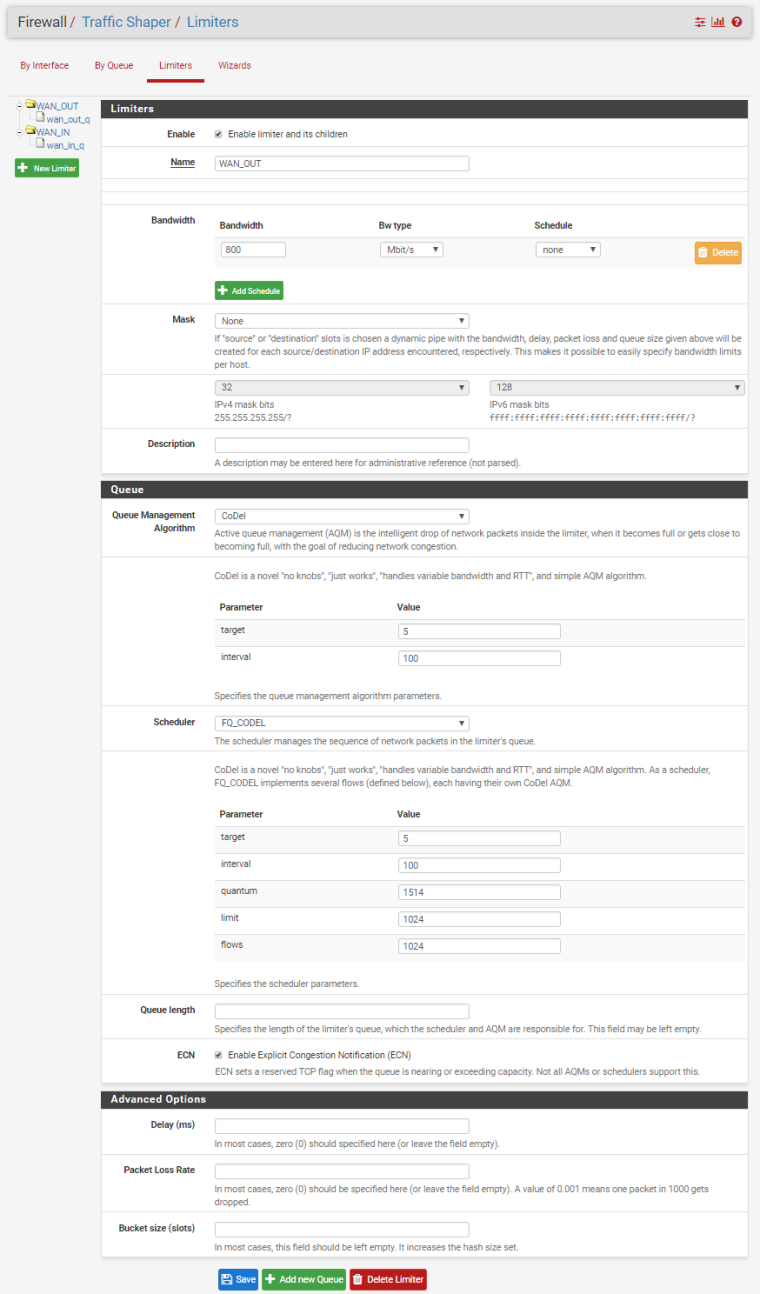

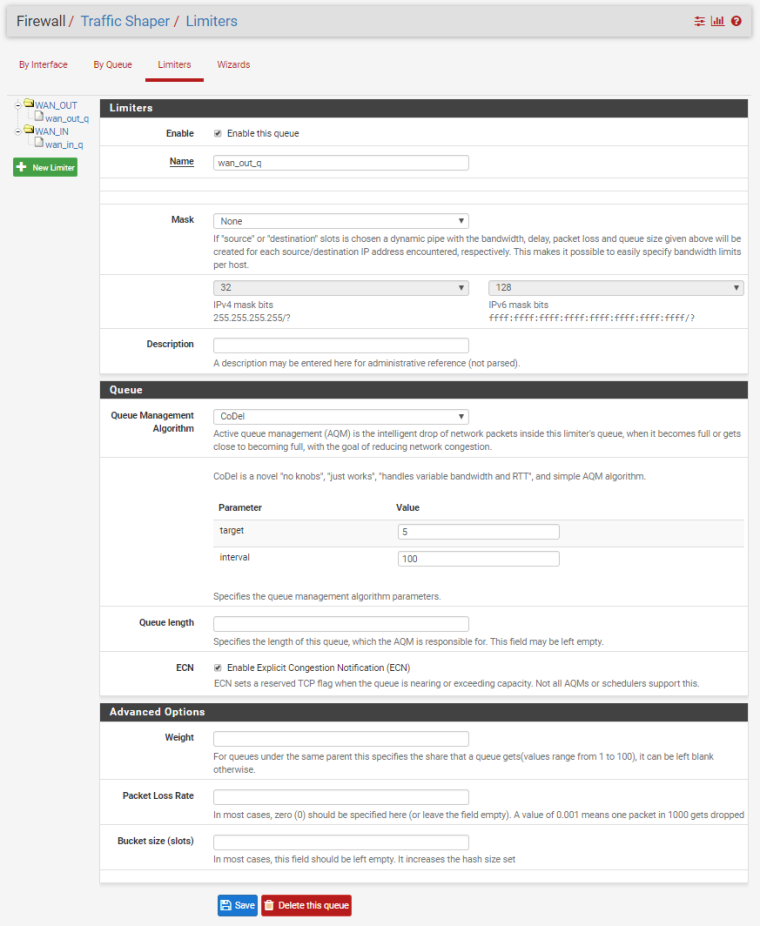

Sorry, I'm trying to keep this straight as well. I'm finding little inconsistencies in the implementation/documentation that I, and others, may be perpetuating as "how it is". The screenshots show the limiter configuration screen where you can choose your AQM and scheduler in the pfSense WebUI. What I see now, though, is that even if I choose CoDel as the pipe AQM and FQ_CODEL as the scheduler, it appears that ipfw is using droptail as the AQM. Is anyone else seeing this? Another bug?

```
[2.4.4-RELEASE][admin@pfSense.localdomain]/root: cat /tmp/rules.limiter
pipe 1 config bw 800Mb codel target 5ms interval 100ms ecn
sched 1 config pipe 1 type fq_codel target 5ms interval 100ms quantum 1514 limit 1024 flows 1024 ecn
queue 1 config pipe 1 codel target 5ms interval 100ms ecn

pipe 2 config bw 800Mb codel target 5ms interval 100ms ecn
sched 2 config pipe 2 type fq_codel target 5ms interval 100ms quantum 1514 limit 1024 flows 1024 ecn
queue 2 config pipe 2 codel target 5ms interval 100ms ecn

[2.4.4-RELEASE][admin@pfSense.localdomain]/root:
[2.4.4-RELEASE][admin@pfSense.localdomain]/root: ipfw sched show
00001: 800.000 Mbit/s    0 ms burst 0
q65537  50 sl. 0 flows (1 buckets) sched 1 weight 0 lmax 0 pri 0 droptail
 sched 1 type FQ_CODEL flags 0x0 0 buckets 1 active
 FQ_CODEL target 5ms interval 100ms quantum 1514 limit 1024 flows 1024 ECN
   Children flowsets: 1
BKT Prot ___Source IP/port____ ____Dest. IP/port____ Tot_pkt/bytes Pkt/Byte Drp
  0 ip           0.0.0.0/0             0.0.0.0/0     39516 47775336 134 174936   0
00002: 800.000 Mbit/s    0 ms burst 0
q65538  50 sl. 0 flows (1 buckets) sched 2 weight 0 lmax 0 pri 0 droptail
 sched 2 type FQ_CODEL flags 0x0 0 buckets 1 active
 FQ_CODEL target 5ms interval 100ms quantum 1514 limit 1024 flows 1024 ECN
   Children flowsets: 2
  0 ip           0.0.0.0/0             0.0.0.0/0     384086 482821168 163 232916   0
[2.4.4-RELEASE][admin@pfSense.localdomain]/root:
[2.4.4-RELEASE][admin@pfSense.localdomain]/root: pfctl -vvsr | grep limiter
@86(1539633367) match out on igb0 inet all label "USER_RULE: WAN_OUT limiter" dnqueue(1, 2)
@87(1539633390) match in on igb0 inet all label "USER_RULE: WAN_IN limiter" dnqueue(2, 1)
[2.4.4-RELEASE][admin@pfSense.localdomain]/root:
```

-

@dtaht I noticed that I get tons of bufferbloat on 2.4 GHz compared to 5 GHz. On 5 GHz I get no bufferbloat and it maxes out the 150 Mbit/s download speed. On 2.4 GHz I get a B score with 500+ ms of bufferbloat, and download speed is limited to 80 Mbit/s. The 16 Mbit/s upload works just fine on either band. Is there any way to create a tiered system so that 2.4 GHz clients use a different queue?

-

EDIT:

Turns out you CAN configure these queues, but you have to do so after the fact. We don't know the IDs ahead of time; they are generated automatically. So we would have to make the patch handle a new number space, possibly reserved or pre-calculated, to use for configuring these queues.
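Since the IDs only exist once the limiters have been created, one way to find them is to read them back from `ipfw sched show`. A minimal Python sketch, assuming the output format shown earlier in this thread (lines such as `q65537 ... sched 1 ...`). The `65536` offset used by the hypothetical `predicted_queue_id` helper is only inferred from that sample output (sched 1 gets q65537, sched 2 gets q65538), not a documented guarantee:

```python
import re

# Matches the per-scheduler queue line printed by `ipfw sched show`, e.g.
# "q65537  50 sl. 0 flows (1 buckets) sched 1 weight 0 lmax 0 pri 0 droptail"
QUEUE_LINE = re.compile(r"^\s*q(\d+)\s.*\bsched (\d+)\b")

# Assumption: offset inferred only from the sample output (sched 1 -> q65537).
ASSUMED_OFFSET = 65536


def auto_queue_ids(sched_show_output: str) -> dict:
    """Map scheduler number -> auto-generated queue ID found in the output."""
    ids = {}
    for line in sched_show_output.splitlines():
        m = QUEUE_LINE.match(line)
        if m:
            ids[int(m.group(2))] = int(m.group(1))
    return ids


def predicted_queue_id(sched: int) -> int:
    """Hypothetical pre-calculation: scheduler number plus the assumed offset."""
    return sched + ASSUMED_OFFSET


sample = """\
00001: 800.000 Mbit/s    0 ms burst 0
q65537  50 sl. 0 flows (1 buckets) sched 1 weight 0 lmax 0 pri 0 droptail
 sched 1 type FQ_CODEL flags 0x0 0 buckets 1 active
00002: 800.000 Mbit/s    0 ms burst 0
q65538  50 sl. 0 flows (1 buckets) sched 2 weight 0 lmax 0 pri 0 droptail
 sched 2 type FQ_CODEL flags 0x0 0 buckets 1 active
"""

ids = auto_queue_ids(sample)
print(ids)  # {1: 65537, 2: 65538}
for sched, qid in ids.items():
    print(f"sched {sched}: queue {qid}, matches prediction: {qid == predicted_queue_id(sched)}")
```

On the firewall itself, the input would be whatever `ipfw sched show` prints after the limiters are loaded; if the offset ever turned out not to hold, reading the IDs back like this is the safer option.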

Here's a re-iteration of the flow diagram for dummynet:

```
             (flow_mask|sched_mask)  sched_mask
      +---------+   weight Wx  +-------------+
      |         |->-[flow]-->--|             |-+
 -->--| QUEUE x |   ...        |             | |
      |         |->-[flow]-->--| SCHEDuler N | |
      +---------+              |             | |
          ...                  |             +--[LINK N]-->--
      +---------+   weight Wy  |             | +--[LINK N]-->--
      |         |->-[flow]-->--|             | |
 -->--| QUEUE y |   ...        |             | |
      |         |->-[flow]-->--|             | |
      +---------+              +-------------+ |
                                 +-------------+
```

-

@strangegopher said in Playing with fq_codel in 2.4:

@dtaht I noticed that I get tons of bufferbloat on 2.4 GHz compared to 5 GHz. On 5 GHz I get no bufferbloat and it maxes out the 150 Mbit/s download speed. On 2.4 GHz I get a B score with 500+ ms of bufferbloat, and download speed is limited to 80 Mbit/s. The 16 Mbit/s upload works just fine on either band. Is there any way to create a tiered system so that 2.4 GHz clients use a different queue?

The problem with wifi is that it can have a wildly variable rate (move farther from the AP and it drops). We wrote up that fq_codel design (for Linux) here: https://arxiv.org/pdf/1703.00064.pdf and it was covered in English here: https://lwn.net/Articles/705884/

There was a BSD dev working on it, last I heard. OS X has it; I'd love it if they pushed their solution back into open source.

So while you can attach an 80 Mbit limiter to the wifi device, that won't work if your rate falls below that as you move farther away.
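Some rough arithmetic on why the fixed limiter fails: whenever the limiter admits more than the radio can currently send, the excess piles up in the queues below the limiter (driver/firmware), and that backlog drains at the real, lower rate. A Python sketch, assuming a saturating download and unbounded buffering below the limiter (real drivers cap their buffers and drop, so treat this as how fast delay can grow, not an exact prediction):

```python
def standing_queue(limiter_mbps: float, actual_mbps: float, seconds: float) -> tuple:
    """Return (backlog_bytes, added_delay_ms) after `seconds` of saturating load
    when a fixed limiter is set above the rate the radio can currently deliver.
    Assumes unbounded buffering below the limiter (real drivers drop instead)."""
    excess_mbps = max(0.0, limiter_mbps - actual_mbps)
    backlog_bytes = excess_mbps * 1e6 * seconds / 8
    # The backlog drains at the *actual* link rate, which sets the extra delay.
    delay_ms = backlog_bytes * 8 / (actual_mbps * 1e6) * 1000
    return backlog_bytes, delay_ms


for actual in (80, 60, 40):
    backlog, delay = standing_queue(80, actual, 1.0)
    print(f"limiter 80 Mbit/s, radio {actual} Mbit/s: {backlog:,.0f} bytes queued, +{delay:.0f} ms")
```

For example, an 80 Mbit/s limiter in front of a link that has dropped to 40 Mbit/s queues 5,000,000 bytes per second of load, which is a full second of added delay; a limiter set at or below the actual rate queues nothing.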

-

@mattund said in Playing with fq_codel in 2.4:

EDIT:

Turns out you CAN configure these queues, but you have to do so after the fact. We don't know the IDs ahead of time; they are generated automatically. So we would have to make the patch handle a new number space, possibly reserved or pre-calculated, to use for configuring these queues.

Here's a re-iteration of the flow diagram for dummynet:

```
             (flow_mask|sched_mask)  sched_mask
      +---------+   weight Wx  +-------------+
      |         |->-[flow]-->--|             |-+
 -->--| QUEUE x |   ...        |             | |
      |         |->-[flow]-->--| SCHEDuler N | |
      +---------+              |             | |
          ...                  |             +--[LINK N]-->--
      +---------+   weight Wy  |             | +--[LINK N]-->--
      |         |->-[flow]-->--|             | |
 -->--| QUEUE y |   ...        |             | |
      |         |->-[flow]-->--|             | |
      +---------+              +-------------+ |
                                 +-------------+
```

Even with that diagram I'm confused. :) I think some of the intent here is to get per-host and per-flow fair queuing + AQM, which to me is something that uses the FAIRQ scheduler per IP, each instance of which has an fq_codel qdisc. But I still totally don't get what folks are describing as codel + fq_codel.
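To make the "per host, then per flow" idea concrete, here is a hypothetical Python sketch of two-level round robin: hosts take turns, and within a host's turn its flows take turns. It is plain packet-by-packet round robin, not dummynet's FAIRQ or FQ_CODEL code, and it applies no AQM; in the arrangement described above, each per-host share would additionally be managed by fq_codel. The host addresses and flow names are illustrative only.

```python
from collections import OrderedDict, deque


class HostThenFlowRR:
    """Hypothetical two-level round robin: hosts take turns, and within a
    host's turn its flows take turns. One packet per turn; no byte-based
    deficits and no AQM (fq_codel would add both)."""

    def __init__(self) -> None:
        # src host -> OrderedDict(flow id -> deque of packets).
        # Dict order is the service order; the served entry moves to the end.
        self.hosts: OrderedDict = OrderedDict()

    def enqueue(self, host, flow, packet) -> None:
        flows = self.hosts.setdefault(host, OrderedDict())
        flows.setdefault(flow, deque()).append(packet)

    def dequeue(self):
        if not self.hosts:
            return None
        host, flows = next(iter(self.hosts.items()))
        flow, queue = next(iter(flows.items()))
        packet = queue.popleft()
        # Rotate the served flow to the back of its host's list, or forget it if empty.
        if queue:
            flows.move_to_end(flow)
        else:
            del flows[flow]
        # Likewise rotate the served host, or forget it once it has no backlog.
        if flows:
            self.hosts.move_to_end(host)
        else:
            del self.hosts[host]
        return packet


# Illustrative traffic: host 10.0.0.1 has three flows, host 10.0.0.2 has one.
sched = HostThenFlowRR()
for flow in ("f1", "f2", "f3"):
    for n in (1, 2):
        sched.enqueue("10.0.0.1", flow, f"A-{flow}-{n}")
for n in (1, 2):
    sched.enqueue("10.0.0.2", "g", f"B-g-{n}")

order = []
while (pkt := sched.dequeue()) is not None:
    order.append(pkt)
print(order)
# ['A-f1-1', 'B-g-1', 'A-f2-1', 'B-g-2', 'A-f3-1', 'A-f1-2', 'A-f2-2', 'A-f3-2']
```

Host 10.0.0.2, with a single flow, gets every other packet while it is backlogged even though 10.0.0.1 has three flows. That per-host share is what a purely per-flow scheduler would not provide.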

...

My biggest open question, however, is that we are hitting CPU limits at various higher speeds, and usually the way to improve that is to increase the token bucket size. Is there a way to do that here?

-

@dtaht @strangegopher There's also this https://www.bufferbloat.net/projects/make-wifi-fast/wiki/

-

@pentangle said in Playing with fq_codel in 2.4:

@dtaht @strangegopher There's also this https://www.bufferbloat.net/projects/make-wifi-fast/wiki/

That website (I'm the co-author) is a bit out of date. We didn't get funding last year... or this year. Still, the google doc at the bottom of that page is worth reading.... lots more can be done to make wifi better.

-

@dtaht said in Playing with fq_codel in 2.4:

My biggest open question, however, is that we are hitting CPU limits at various higher speeds, and usually the way to improve that is to increase the token bucket size. Is there a way to do that here?

Yes, it appears we can.

https://www.freebsd.org/cgi/man.cgi?query=ipfw

burst size: If the data to be sent exceeds the pipe's bandwidth limit (and the pipe was previously idle), up to size bytes of data are allowed to bypass the dummynet scheduler, and will be sent as fast as the physical link allows. Any additional data will be transmitted at the rate specified by the pipe bandwidth. The burst size depends on how long the pipe has been idle; the effective burst size is calculated as follows: MAX( size , bw * pipe_idle_time).

-

um, er, no, I think. That's the queue size. We don't need to muck with that.

A Linux "limiter" has token bucket size, burst, cburst and quantum parameters that control how much data gets dumped into the next pipe in line per virtual interrupt.

A reasonable explanation is here:

https://unix.stackexchange.com/questions/100785/bucket-size-in-tbf

Or http://linux-ip.net/articles/Traffic-Control-HOWTO/classful-qdiscs.html

I'm so totally not familiar with what's in BSD, but... what I wanted to set was the bucket size... the burst value, the cburst value. You are setting the token rate only, so far as I can tell. At higher rates, you need bigger buckets.
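A toy Python simulation of why bigger buckets matter at higher rates. Assumptions, none taken from dummynet's code: the shaper is serviced once per 1 ms timer tick, the queue is always backlogged with 1514-byte packets, and the target is 800 Mbit/s, i.e. 100,000 bytes of tokens per tick. The dequeue rule (dequeue while tokens are positive, subtract the packet size afterwards so a deficit can carry over) follows the BSD token bucket description quoted below.

```python
class TokenBucketRegulator:
    """Toy regulator: tokens are added once per tick, capped at bucket_bytes.
    A packet may be dequeued while tokens are positive; its size is charged
    afterwards, so the balance can go negative (a deficit carried forward)."""

    def __init__(self, bucket_bytes: int) -> None:
        self.bucket = bucket_bytes
        self.tokens = 0

    def refill(self, added_bytes: int) -> None:
        self.tokens = min(self.bucket, self.tokens + added_bytes)

    def may_dequeue(self) -> bool:
        return self.tokens > 0

    def charge(self, packet_bytes: int) -> None:
        self.tokens -= packet_bytes


def bytes_sent(bucket_bytes: int, bytes_per_tick: int, ticks: int,
               packet_bytes: int = 1514) -> int:
    """Service an always-backlogged queue once per tick; count bytes released."""
    tbr = TokenBucketRegulator(bucket_bytes)
    sent = 0
    for _ in range(ticks):
        tbr.refill(bytes_per_tick)
        while tbr.may_dequeue():
            tbr.charge(packet_bytes)
            sent += packet_bytes
    return sent


# Assumptions: 800 Mbit/s target and one service pass per 1 ms tick,
# i.e. 800e6 / 8 / 1000 = 100,000 bytes of tokens per tick.
BYTES_PER_TICK = 800_000_000 // 8 // 1000
TICKS = 1000  # one simulated second

for bucket in (15_140, 100_000):
    sent = bytes_sent(bucket, BYTES_PER_TICK, TICKS)
    print(f"bucket {bucket:>7,} bytes -> {sent * 8 / 1e6:.2f} Mbit/s")
```

With a 15,140-byte bucket (ten full-size packets) the regulator can release at most one bucket per tick, so it tops out at 121.12 Mbit/s. With a bucket of at least rate × tick (100,000 bytes here) it keeps up with the 800 Mbit/s target, and each wakeup moves about 66 packets instead of 10.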

From some BSD stuff elsewhere:

A token bucket has "token rate" and "bucket size". Tokens accumulate in a bucket at the average "token rate", up to the "bucket size".

A driver can dequeue a packet as long as there are positive tokens, and after a packet is dequeued, the size of the packet is subtracted from the tokens.

Note that this implementation allows the token to be negative as a deficit in order to make a decision without prior knowledge of the packet size. It differs from a typical token bucket that compares the packet size with the remaining tokens beforehand.

The bucket size controls the amount of burst that can dequeued at a time, and controls a greedy device trying dequeue packets as much as possible. This is the primary purpose of the token bucket regulator, and thus, the token rate should be set to the actual maximum transmission rate of the interface.

-

@dtaht thanks for the correction - I've edited my previous submission. It appears we can edit burst in ipfw/dummynet so I can play with that.

-

@uptownvagrant That's closer, but we do want to dump it into the next pipe in line. Reading that seems to indicate it bypasses everything? A quantum? A bucket size?

Worth trying anyway...

-

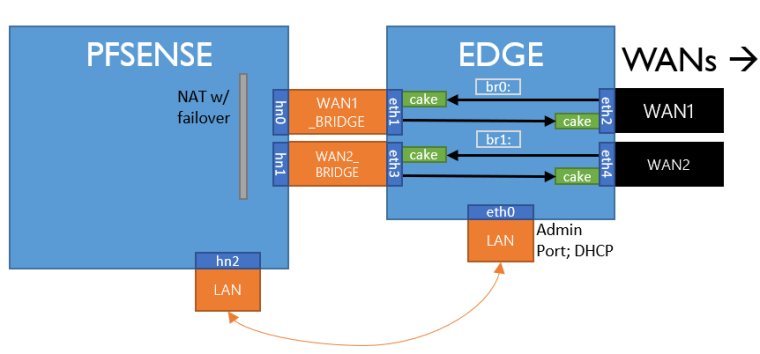

Not to derail this thread any further from the FQ_CODEL topic, but I thought I should mention that this insane setup is actually working on my end; I think you especially will find this amusing:

```
| pfSense vRouter |           | "edge" vRouter     |
|                 |           | linux 4.9          |
|                 |           | br0:               |
| (no shaping)    | --WAN0--> |eth1...(cake)...eth2|(vNIC)|i350|--(LAGG)-> Cable Modem
|                 |           | br1:               |
|                 | --WAN1--> |eth3...(cake)...eth4|(vNIC)|i350|---------> DSL Modem
```

Driving home, I was laughing, thinking this was a dumb idea, but, uh, it's kind of keeping up OK. It's for when you want the best of both worlds (pfSense's firewalling and out-of-tree Cake cloned from Git). So far I'm noticing great stability and, of course, next to no bufferbloat.

These are both on the same virtual host, so they just pass traffic between each other over virtual "Internal" interfaces. The Linux-based vRouter has been stripped down to the bare minimum, and under load it reports next to no CPU usage at all on a 147M connection. I'll have to get some flent results while I'm at it. Honestly, I might actually stick with it.

-


Hi @gsakes and @mattund: The setups you have described sound intriguing and it might be something I want to try as well down the road when I have some spare time and access to an additional machine:

Could you guys talk a little more about how this is set up? Does the Linux machine in front take in all the WAN traffic, shape it, and pass it through to pfSense? Are there then two sets of firewalls traffic must traverse (one on pfSense and one on the Linux box), or just one? I'm just trying to understand the architecture a bit better and how one would set up something like what you have described.

Thanks in advance.

-

@tman222 In my case, I'd prefer to use pfSense for firewalling and shaping, but my testing showed that Linux/Cake performs better than pfSense/fq_codel, albeit not by much, maybe 10-15% depending on the load.

As far as the architecture is concerned, you don't need to run a firewall on the Linux host; you can simply configure it as a router. You'd need two network interfaces, and you'd configure Cake on the egress interface using the 'layer.cake' script from the Cake GitHub repo.

Taking from @mattund's example, this is what my setup looks like:

```
| pfSense FW       |     | Router                   |
| (no shaping)     |     | Ubuntu Server            |
| (192.168.0.0/24) |     | (10.18.9.0/24)           |
| LAN -->          |     | eth1 --> (cake) --> eth2 | --> Cable Modem
|          --> WAN |     |                          |
```

-

@tman222 said in Playing with fq_codel in 2.4:

Does the Linux machine in front take in all the WAN traffic, shape it and pass it through to pfSense?

That is correct. This is an entirely different VM on which I have set up bridging; I'm using Debian since it's what I'm most familiar with. I was able to do it all in /etc/network/interfaces, after installing Cake following https://www.bufferbloat.net/projects/codel/wiki/Cake/#installing-cake-out-of-tree-on-linux (you may need some extra packages to build Cake; not to worry, if your build system is missing any, just install them):

```
# The loopback network interface
auto lo
iface lo inet loopback

# MANAGEMENT
allow-hotplug eth0
iface eth0 inet dhcp

# WAN1
iface eth1 inet manual
iface eth2 inet manual

auto br0
iface br0 inet manual
    bridge_stp off
    bridge_waitport 0
    bridge_fd 0
    bridge_ports eth1 eth2
    up tc qdisc add dev eth1 root cake bandwidth 12280kbit ; tc qdisc add dev eth2 root cake bandwidth 122800kbit
    down tc qdisc del dev eth1 root ; tc qdisc del dev eth2 root

# WAN2
iface eth3 inet manual
iface eth4 inet manual

auto br1
iface br1 inet manual
    bridge_stp off
    bridge_waitport 0
    bridge_fd 0
    bridge_ports eth3 eth4
    up tc qdisc add dev eth3 root cake bandwidth 2000kbit ; tc qdisc add dev eth4 root cake bandwidth 27000kbit
    down tc qdisc del dev eth3 root ; tc qdisc del dev eth4 root
```

You just add 5 NICs to a VM:

1: Management

2/3: Internal WAN1, External WAN1 attached to hardware

4/5: Internal WAN N+1, External WAN N+1 attached to hardware

FYI, I wasn't aware of netdata until someone mentioned it here, but it is absolutely fantastic if you want to audit your setup.

-

@mattund Yes, netdata is fantastic - I prefer telegraf/grafana or Prometheus for monitoring, but out of the box, netdata is one of the best apps I've seen in a long time.

-

Thanks @gsakes and @mattund.

So in terms of configuration:

Let's say I had my pfSense FW --> Debian Linux Box --> WAN Connection.

Let's say the Debian box I have has three network interfaces:

if0: connected to the pfSense WAN interface

if1: DHCP from the management VLAN

if2: WAN connection (DHCP from the ISP)

Would I set up if0 with a static IP, e.g. 10.5.5.1, and then connect if0 to my pfSense WAN interface (let's call it pf0)? On the pfSense side, I would assign my WAN interface (pf0) another static IP, e.g. 10.5.5.2, and then set 10.5.5.1 as the gateway? If that's correct so far, what would I have to set up on the Linux box to make sure traffic can get out to the internet? Would I have to enable IP forwarding and then make sure if2 becomes the gateway for if0? Also, do I have to set up any firewall rules on the Linux box to make sure that, even though it's just a router, it's not open to the whole internet?

Apologies for these basic questions, I'm having just a bit of trouble visualizing how this would all work in concert.

Thanks again.

-

I opted to address only my management interface. I have not addressed either if0 or if2 in your scenario; instead, I have created a basic bridge over each pair of interfaces (note my creation of br0 and br1, and the lack of addressing above Layer 2 in the interface configuration). In my case I'm fine managing the VM over a management port, so this works.

Effectively, I've just made a "dumb" Layer 2 switch between pfSense and the modems with isolated port pairs, which happens to also use CAKE on each participating interface's outbound root queue.

The reason being, I wanted an extremely simple setup with as little overhead as possible and as few layers in the stacks of the participating bridge interfaces.