Playing with fq_codel in 2.4

-

@uptownvagrant said in Playing with fq_codel in 2.4:

That being said, I'm pinging @Rasool on this, as my understanding is that your configuration should not be needed for flow separation: RFC 8290 states that FQ-CoDel does its own 5-tuple hashing to identify flows.

You are right. FQ-CoDel internally identifies flows and hashes them to internal sub-queues. External flow separation and hashing are not required in simple configurations.
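To make the flow hashing concrete, here is a minimal Python sketch that maps a 5-tuple onto one of 1024 sub-queues (the `flows 1024` value shown in the limiter output in this thread). It is only an illustration: CRC32 stands in for whatever hash the FreeBSD dummynet fq_codel scheduler actually uses, and `flow_bucket` and the sample addresses and ports are hypothetical.

```python
import zlib

FLOWS = 1024  # "flows 1024" in the limiter output quoted in this thread


def flow_bucket(proto, src_ip, src_port, dst_ip, dst_port, flows=FLOWS):
    """Map a 5-tuple to a sub-queue index in [0, flows).

    Illustrative only: CRC32 is a stand-in, not the hash the real
    dummynet fq_codel scheduler uses.
    """
    key = f"{proto}|{src_ip}|{src_port}|{dst_ip}|{dst_port}".encode()
    return zlib.crc32(key) % flows


if __name__ == "__main__":
    # Hypothetical flows; addresses and ports are made up for the example.
    ssh = ("tcp", "192.168.1.10", 50022, "198.51.100.7", 22)
    steam = ("tcp", "192.168.1.10", 50100, "203.0.113.20", 443)
    print("ssh bucket:", flow_bucket(*ssh))
    print("steam bucket:", flow_bucket(*steam))
```

Because the source port is part of the key, every new connection (for example, each new SSH session) is hashed afresh, which is why external masks are not needed for basic per-flow isolation.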

-

If I reduce my download pipe to, say, half, then yes, there is no packet loss, as the line has much more unused buffer. Well, actually, sorry: there was still packet loss, but at half speed it tended to happen only early in the test.

The commands I posted are not what I am actually using; my actual settings are a bit closer to the defaults than what I posted. Quantum 300 is used because it is recommended to improve the performance of smaller packets, and it does indeed help my SSH packets (they are worse with the default). I am now back on the default pipe depth of 50 slots.

I manually tuned the interval to suit my network conditions, although I feel that one has no measurable effect, either good or bad.

I have no experience with RRUL, so I don't know whether it would have affected me. It's not just any download that causes dropped packets; usually it's things like Steam, which absolutely flood the ingress pipe with tons of packets.

But it doesn't matter for me now: by making the dynamic queues one per remote IP, it seems to be pretty much all resolved. It wasn't a major issue, as I already had it working pretty well, but this change has perfected it.

And before you ask: yes, I obviously used all default values the very first time I used fq_codel and dummynet. I always start off by keeping things as simple as possible, and only stray from that if I feel a need to.

Bear in mind that the vast majority of my home LAN traffic is just my desktop PC; all other network devices are almost always idle aside from my STB. If I am watching streams on the STB, then I am not on my PC, and interactivity on the PC doesn't matter then. So the masks I was using before, which separated queues per LAN device, were pointless for me, but masking the other way round (per remote host) is really useful.

    00001: 69.246 Mbit/s 0 ms burst 0
    q00001 50 sl. 0 flows (256 buckets) sched 1 weight 0 lmax 0 pri 0 droptail
        mask: 0x00 0xffffffff/0x0000 -> 0x00000000/0x0000
     sched 1 type FQ_CODEL flags 0x0 0 buckets 1 active
     FQ_CODEL target 5ms interval 30ms quantum 300 limit 1000 flows 1024 ECN
       Children flowsets: 1
    BKT Prot ___Source IP/port____ ____Dest. IP/port____ Tot_pkt/bytes Pkt/Byte Drp
      0 ip 0.0.0.0/0 0.0.0.0/0 1 60 0 0 0
    00002: 18.779 Mbit/s 0 ms burst 0
    q00002 50 sl. 0 flows (256 buckets) sched 2 weight 0 lmax 0 pri 0 droptail
        mask: 0x00 0x00000000/0x0000 -> 0xffffffff/0x0000
     sched 2 type FQ_CODEL flags 0x0 0 buckets 0 active
     FQ_CODEL target 5ms interval 300ms quantum 300 limit 800 flows 1024 NoECN
       Children flowsets: 2
    q00001 50 sl. 0 flows (256 buckets) sched 1 weight 0 lmax 0 pri 0 droptail
        mask: 0x00 0xffffffff/0x0000 -> 0x00000000/0x0000
    q00002 50 sl. 0 flows (256 buckets) sched 2 weight 0 lmax 0 pri 0 droptail
        mask: 0x00 0x00000000/0x0000 -> 0xffffffff/0x0000
    00001: 69.246 Mbit/s 0 ms burst 0
    q65537 50 sl. 0 flows (1 buckets) sched 1 weight 0 lmax 0 pri 0 droptail
     sched 65537 type FIFO flags 0x0 0 buckets 0 active
    00002: 18.779 Mbit/s 0 ms burst 0
    q131074 50 sl. 0 flows (1 buckets) sched 65538 weight 0 lmax 0 pri 0 droptail
     sched 65538 type FIFO flags 0x0 0 buckets 0 active

-

@chrcoluk said in Playing with fq_codel in 2.4:

If I reduce my download pipe to, say, half, then yes, there is no packet loss, as the line has much more unused buffer. Well, actually, sorry: there was still packet loss, but at half speed it tended to happen only early in the test.

The idea behind this question was: have you set your bandwidth too close to where your ISP is dropping packets? I was assuming, based on your bandwidth settings, that you were testing over the Internet and that your ISP, as well as other outside factors, are in the mix. What does your bufferbloat look like when you halve the bandwidth? There is going to be some packet loss (that's actually the point), but my experience so far is that FQ-CoDel does a pretty great job of prioritizing interactive flows, and I haven't been able to recreate the poor handling of these flows that a couple of folks have mentioned.

The commands I posted are not what I am actually using; my actual settings are a bit closer to the defaults than what I posted. Quantum 300 is used because it is recommended to improve the performance of smaller packets, and it does indeed help my SSH packets (they are worse with the default). I am now back on the default pipe depth of 50 slots.

The pipe or queue "queue length" setting is ignored when you are using FQ-CoDel; CoDel handles the queue length dynamically.
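The reason queue length stops mattering is that CoDel decides on drops by how long packets have waited (their sojourn time), not by how many are queued. The following is a simplified Python sketch of that decision, loosely following the pseudocode in RFC 8289; `CoDelSketch` is a hypothetical name, times are in milliseconds, and several details of the real algorithm (the byte threshold check and the count hysteresis when re-entering the dropping state) are deliberately left out.

```python
import math


class CoDelSketch:
    """Simplified CoDel drop decision (times in milliseconds).

    Omits the byte threshold check and the count hysteresis applied
    when the dropping state is re-entered shortly after leaving it.
    """

    def __init__(self, target_ms=5.0, interval_ms=100.0):
        self.target = target_ms
        self.interval = interval_ms
        self.first_above_time = None  # deadline after which persistent delay triggers drops
        self.dropping = False
        self.count = 0                # drops in the current dropping episode
        self.drop_next = 0.0

    def _control_law(self, t):
        # Successive drops come closer together: spacing is interval / sqrt(count).
        return t + self.interval / math.sqrt(self.count)

    def should_drop(self, now, sojourn):
        """Decide whether the packet dequeued at `now`, after waiting `sojourn` ms, is dropped."""
        if sojourn < self.target:
            # Delay is acceptable: leave (or stay out of) the dropping state.
            self.first_above_time = None
            self.dropping = False
            return False
        if self.first_above_time is None:
            # Delay just went above target; give the queue one interval to drain.
            self.first_above_time = now + self.interval
            return False
        if not self.dropping:
            if now >= self.first_above_time:
                self.dropping = True
                self.count = 1
                self.drop_next = self._control_law(now)
                return True
            return False
        if now >= self.drop_next:
            self.count += 1
            self.drop_next = self._control_law(self.drop_next)
            return True
        return False


if __name__ == "__main__":
    codel = CoDelSketch(target_ms=5, interval_ms=100)
    # A standing queue: one packet per ms, each having waited 20 ms.
    drops = [t for t in range(300) if codel.should_drop(t, 20.0)]
    print(drops)  # [100, 200, 271]
```

With a standing 20 ms queue and a 5 ms target / 100 ms interval, the first drop comes after one full interval and later drops arrive at interval / sqrt(count) spacing, so the drop rate rises until senders back off, whatever the configured queue depth.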

I manually tuned the interval to suit my network conditions, although I feel that one has no measurable effect, either good or bad.

I have no experience with RRUL, so I don't know whether it would have affected me. It's not just any download that causes dropped packets; usually it's things like Steam, which absolutely flood the ingress pipe with tons of packets.

I don't personally use Steam but I have it on my network. I'm assuming you are referring to game downloads when you mention "absolutely flood the ingress pipe with tons of packets"?

But it doesn't matter for me now: by making the dynamic queues one per remote IP, it seems to be pretty much all resolved. It wasn't a major issue, as I already had it working pretty well, but this change has perfected it.

Glad it's working perfectly for you now. I was hoping that we could identify the root cause.

-

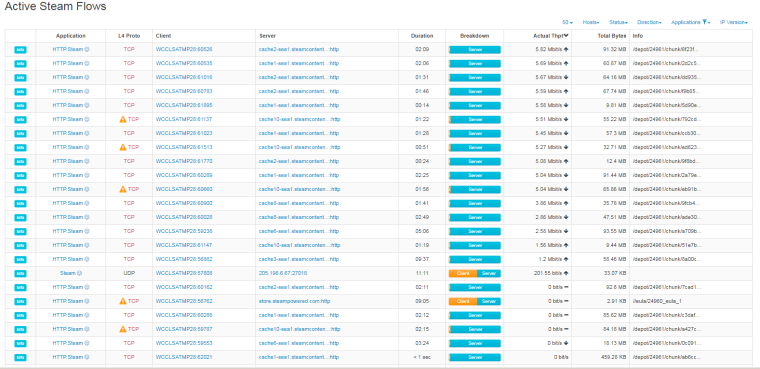

Yes, I mean Steam downloads. Typically at least 24 TCP streams are opened, and often over 30 for a large game. If you have a local download server configured, that's 24+ low-RTT TCP streams flooding the network. Steam has no persistent way of reducing the number of connections: you can reduce it with a console command, but the setting is not saved, so when you restart Steam it goes back to the default.

Choosing a server on the other side of the world, so that the RTT is much higher, is another way to mitigate the problem, as higher RTT makes the traffic much less aggressive on the network. For example, from Asia to the UK I can still hit full-speed downloads simply due to the really high number of threads; 32 threads is way overkill on a 10 ms latency server.
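One way to see why a low-RTT server with many streams is so hard on the queue is to compare the bandwidth-delay product with the number of flows. The sketch below assumes the 69.246 Mbit/s download pipe from this thread, 32 equally sharing flows, and a hypothetical 250 ms RTT for a far-away server; it ignores TCP details such as minimum congestion windows and slow start, and the helper names are made up.

```python
def bdp_bytes(rate_bps, rtt_s):
    """Bandwidth-delay product: bytes in flight needed to keep the link full."""
    return rate_bps * rtt_s / 8


def per_flow_window(rate_bps, rtt_s, flows):
    """Share of the BDP each of `flows` equally sharing TCP flows needs."""
    return bdp_bytes(rate_bps, rtt_s) / flows


if __name__ == "__main__":
    rate = 69.246e6  # download pipe rate from this thread, in bit/s
    for rtt_ms in (10, 250):  # 250 ms is a hypothetical far-away RTT
        w = per_flow_window(rate, rtt_ms / 1000, 32)
        print(f"RTT {rtt_ms} ms: {w:.0f} bytes per flow (~{w / 1514:.1f} full-size packets)")
```

At 10 ms each of 32 flows only needs about 2.7 KB (under two full-size packets) in flight to fill the pipe, so even small windows quickly add up to a standing queue; at 250 ms each flow needs roughly 67 KB, leaving far more headroom.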

What I am considering is changing the remote mask to /24 instead of /32, as the Steam IPs often come from the same /24. That will make them fight with each other in one dynamic queue while still separating them from the other dynamic queues, which should be even more effective than what I have now.
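Here is a rough Python illustration of how a /32 versus /24 remote mask changes the number of dynamic queues, assuming, as with dummynet masks, that each distinct masked address gets its own dynamic queue. The server addresses are hypothetical (taken from documentation ranges), and the helper names are made up for this example.

```python
import ipaddress
from collections import defaultdict


def dynamic_queue_key(remote_ip, prefix_len):
    """Masked address under which a mask of this prefix length would group the host."""
    return ipaddress.ip_network(f"{remote_ip}/{prefix_len}", strict=False).network_address


def group_by_mask(remote_ips, prefix_len):
    """Group remote hosts by their masked address (one group per dynamic queue)."""
    groups = defaultdict(list)
    for ip in remote_ips:
        groups[dynamic_queue_key(ip, prefix_len)].append(ip)
    return dict(groups)


if __name__ == "__main__":
    # Hypothetical Steam content servers.
    servers = ["203.0.113.10", "203.0.113.45", "203.0.113.200", "198.51.100.7"]
    for plen in (32, 24):
        mask = int(ipaddress.ip_network(f"0.0.0.0/{plen}").netmask)
        print(f"/{plen} (mask {mask:#010x}): {len(group_by_mask(servers, plen))} dynamic queues")
```

With /32 (mask 0xffffffff, as in the output above) each server gets its own queue; with /24 (0xffffff00) the three servers sharing a /24 compete inside one queue, while the fourth stays separate.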

Interesting that fq_codel also seems to make a lot of tunables useless; this really needs to be documented somewhere, because it's not documented on the dummynet or pfSense pages. I never noticed a difference between 1000 slots and 50 slots anyway, which is why I reduced it back to the default 50 slots, and that explains why no difference was observed.

What I just want to say here is that sometimes on the internet people don't like it when others move away from defaults. You two have been fine, as you have been very polite about it, but I have come across people who even get angry :). The reason things can be tuned is that you cannot set a default that works optimally in 100% of situations. It's impossible. Even auto-tuning algorithms cannot get it right 100% of the time.

But to me Steam is the ultimate test. I have yet to come across any speed tester or other automated testing tool that abuses the network as much as Steam does. As far as I am concerned, Steam basically DDoSes your network; it's abusive to use so many threads. The only way I have found to stress a network harder is to actually DDoS it.

To put your minds at rest, I'm happy to try testing quantum 1514 while using these masks. Quantum 300 was suggested by someone earlier in this thread and is also suggested by an fq_codel expert somewhere on the net. It supposedly gives queues with packets smaller than the quantum size higher priority than queues with packets larger than the quantum size, so they are less likely to have packets dropped when the pipe is full.
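For context on what the quantum actually does: fq_codel serves its sub-queues with deficit round robin, crediting each backlogged flow with `quantum` bytes per round. The Python sketch below is plain DRR only; it deliberately omits fq_codel's separate new-flows list (which is where sparse flows such as SSH get their priority) and all CoDel drops. `drr_order` and the two example flows are hypothetical.

```python
from collections import deque


def drr_order(flows, quantum, n_dequeues):
    """Deficit round robin over backlogged flows.

    `flows` maps a flow name to a deque of packet sizes in bytes.
    Returns flow names in the order their packets are dequeued.
    """
    deficit = {name: 0 for name in flows}
    active = deque(name for name, q in flows.items() if q)
    order = []
    while active and len(order) < n_dequeues:
        name = active[0]
        if deficit[name] <= 0:
            # Out of credit: top up by one quantum and move to the back of the list.
            deficit[name] += quantum
            active.rotate(-1)
            continue
        size = flows[name].popleft()
        deficit[name] -= size  # may go negative; the debt is repaid in later rounds
        order.append(name)
        if not flows[name]:
            active.popleft()  # flow went idle
            deficit[name] = 0
    return order


if __name__ == "__main__":
    for quantum in (300, 1514):
        flows = {"bulk": deque([1514] * 50), "ssh": deque([100] * 200)}
        print(quantum, drr_order(flows, quantum, 18))
```

In both runs the small-packet flow gets roughly the same number of bytes (1500 to 1600) between two bulk packets, so the long-run byte share is unchanged; what a quantum of 300 changes is that a flow of full-size 1514-byte packets has to build up credit over several rounds before each transmission.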

I am lucky that my ISP has no visible congestion, so I can hit my line rate 24/7/365, which makes configuring the pipe size easier. I did take DSL overheads etc. into account, so the rate configured for the pipe is lower than the actual achievable TCP speed after overheads. The only thing I have to watch out for, of course, is my DSL sync speed dropping, but thankfully it has been stable for a long time now.

-

@chrcoluk said in Playing with fq_codel in 2.4:

Yes, I mean Steam downloads. Typically at least 24 TCP streams are opened, and often over 30 for a large game. If you have a local download server configured, that's 24+ low-RTT TCP streams flooding the network. Steam has no persistent way of reducing the number of connections: you can reduce it with a console command, but the setting is not saved, so when you restart Steam it goes back to the default.

Choosing a server on the other side of the world, so that the RTT is much higher, is another way to mitigate the problem, as higher RTT makes the traffic much less aggressive on the network. For example, from Asia to the UK I can still hit full-speed downloads simply due to the really high number of threads; 32 threads is way overkill on a 10 ms latency server.

Thanks for detailing what you're seeing with regard to Steam game downloads. I'll see if I can recreate your experience on one of my networks.

Interesting that fq_codel also seems to make a lot of tunables useless; this really needs to be documented somewhere, because it's not documented on the dummynet or pfSense pages. I never noticed a difference between 1000 slots and 50 slots anyway, which is why I reduced it back to the default 50 slots, and that explains why no difference was observed.

This is why I brought it up: it's not well documented.

What I just want to say here is that sometimes on the internet people don't like it when others move away from defaults. You two have been fine, as you have been very polite about it, but I have come across people who even get angry :). The reason things can be tuned is that you cannot set a default that works optimally in 100% of situations. It's impossible. Even auto-tuning algorithms cannot get it right 100% of the time.

But to me Steam is the ultimate test. I have yet to come across any speed tester or other automated testing tool that abuses the network as much as Steam does. As far as I am concerned, Steam basically DDoSes your network; it's abusive to use so many threads. The only way I have found to stress a network harder is to actually DDoS it.

To put your minds at rest, I'm happy to try testing quantum 1514 while using these masks. Quantum 300 was suggested by someone earlier in this thread and is also suggested by an fq_codel expert somewhere on the net. It supposedly gives queues with packets smaller than the quantum size higher priority than queues with packets larger than the quantum size, so they are less likely to have packets dropped when the pipe is full.

A quantum of 300 seems perfectly sane for your use case and test scenario. If you look at the sqm-scripts for OpenWrt, which came out of CeroWrt's SQM work at bufferbloat.net, you will see FQ-CoDel quantums of 300 and 1514, depending on whether PRIO is being used on child queues; the simplest scripts use the default quantum of 1514. My observation was that you were not using defaults, and my experience has been that folks sometimes don't really understand all of the nerd knobs (I know I've been guilty of this) and start making changes based on out-of-context bits of information collected on the Internet. In short, we sometimes act against our own interest in the pursuit of "tuning". I'm not saying that you are; I just wanted to see if defaults had any positive impact.

For instance, setting "limit" to anything under the default only seems beneficial on severely memory-constrained systems: the hard limit should rarely, if ever, be hit, as CoDel performs drops long before "limit" would be reached (RFC 8290, section 5.2.3). You run the risk of setting limit too low and experiencing drops ("fq_codel_enqueue over limit") earlier than they should occur based on your target. A limit of 1001 should be OK, but leaving it at the default of 10240 on your system may result in fewer drops without any adverse effects, seeing as your system has 4 GB of RAM.
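The `limit` behaviour can be sketched as a single packet budget shared by every sub-queue. As I read RFC 8290, when that budget is exceeded the scheduler drops from the head of the sub-queue with the largest backlog (measured in bytes there); this hypothetical `SharedLimitSketch` counts packets instead to stay short, and its drop counter only roughly corresponds to where the `fq_codel_enqueue over limit` message would be logged.

```python
from collections import deque


class SharedLimitSketch:
    """Global packet limit shared by all flow sub-queues.

    On overflow, drop from the head of the sub-queue with the largest
    backlog. Backlog is counted in packets here for simplicity.
    """

    def __init__(self, limit=10240):
        self.limit = limit
        self.queues = {}
        self.total = 0
        self.overlimit_drops = 0

    def enqueue(self, flow, packet):
        """Queue `packet` for `flow`; return the flow that lost a packet, or None."""
        self.queues.setdefault(flow, deque()).append(packet)
        self.total += 1
        if self.total <= self.limit:
            return None
        fattest = max(self.queues, key=lambda f: len(self.queues[f]))
        self.queues[fattest].popleft()
        self.total -= 1
        self.overlimit_drops += 1  # roughly where "enqueue over limit" would be logged
        return fattest


if __name__ == "__main__":
    q = SharedLimitSketch(limit=4)
    for i in range(4):
        q.enqueue("steam", f"s{i}")
    print(q.enqueue("ssh", "k0"))  # steam: the fattest queue pays for the overflow
```

With a generous limit this path is rarely reached, because CoDel has usually started dropping on sojourn time long before the shared budget runs out; with a limit that is too small, the over-limit path fires first.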

-

I want my ISP to replace my Puma modem with a Broadcom modem, but they refuse to replace it until I show them that my modem is bad. As you have seen, my modem still has high latency in Flent tests despite fq_codel. Is there anything more I can do to show the tech that the modem is the cause of this issue?

-

@uptownvagrant The limit value has now also been reset to the default of 10240; basically the only tuned values in my rules now are the quantum and the reversed masks. Thanks for your help.

I do also agree that tuning can most definitely make things worse. The enqueue messages that appear in the logs are caused by the limit value being set too low, which is probably why it has a high default. The bufferbloat website suggests reducing it when the speed is below 100 Mbit, but I tested their recommendation and it generated lots of "enqueue over limit" warnings, which, as muppet pointed out, can bring down the OS if too many of them appear.

-

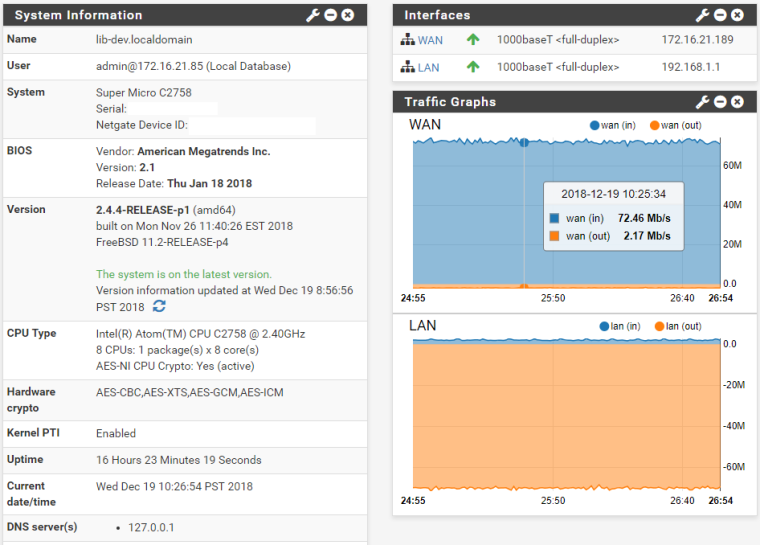

I was able to test Steam distributed downloads using your config minus the masks. I was not able to recreate your findings, and I'm still wondering whether the interactive flow drops (the SSH session) were due to something upstream from you, or whether there was a very low-probability hash collision. If the drops were consistent, then a hash collision is highly improbable.
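A back-of-the-envelope estimate supports that reasoning. Assuming a uniform hash over the 1024 flow buckets, and that each new SSH session hashes independently (it gets a new source port), the chance that one SSH flow shares a bucket with at least one of n Steam flows is 1 - (1 - 1/1024)^n. The Python helper below is a hypothetical illustration of that formula, not part of any tool.

```python
def p_shares_bucket(other_flows, buckets=1024):
    """Chance that one given flow lands in the same bucket as at least one of
    `other_flows` other flows, assuming a uniform, independent hash."""
    return 1 - (1 - 1 / buckets) ** other_flows


if __name__ == "__main__":
    for n in (24, 32):  # Steam stream counts mentioned earlier in the thread
        p = p_shares_bucket(n)
        print(f"{n} Steam flows: {p:.1%} per SSH session, {p ** 5:.1e} for 5 sessions in a row")
```

That works out to roughly 2.3% for 24 flows and 3.1% for 32 flows per session, and a collision hitting several independent sessions in a row becomes vanishingly unlikely.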

Notes:

- My testing with Flent and netperf actually pushes more pps and more flows than what I saw with Steam.

- The bandwidths, configured to your values, are a very small percentage of my lab's upstream up/down limits, so those limits were not a factor in my testing.

- ICMP and HTTPS webUI traffic was not passing through the limiter. All other traffic was set to be placed in the limiter queues.

    Limiters:
    00001: 18.779 Mbit/s 0 ms burst 0
    q131073 50 sl. 0 flows (1 buckets) sched 65537 weight 0 lmax 0 pri 0 droptail
     sched 65537 type FIFO flags 0x0 0 buckets 0 active
    00002: 69.246 Mbit/s 0 ms burst 0
    q131074 50 sl. 0 flows (1 buckets) sched 65538 weight 0 lmax 0 pri 0 droptail
     sched 65538 type FIFO flags 0x0 0 buckets 0 active

    Schedulers:
    00001: 18.779 Mbit/s 0 ms burst 0
    q65537 50 sl. 0 flows (1 buckets) sched 1 weight 0 lmax 0 pri 0 droptail
     sched 1 type FQ_CODEL flags 0x0 0 buckets 1 active
     FQ_CODEL target 5ms interval 100ms quantum 300 limit 10240 flows 1024 NoECN
       Children flowsets: 1
    BKT Prot ___Source IP/port____ ____Dest. IP/port____ Tot_pkt/bytes Pkt/Byte Drp
      0 ip 0.0.0.0/0 0.0.0.0/0 193 9082 0 0 0
    00002: 69.246 Mbit/s 0 ms burst 0
    q65538 50 sl. 0 flows (1 buckets) sched 2 weight 0 lmax 0 pri 0 droptail
     sched 2 type FQ_CODEL flags 0x0 0 buckets 1 active
     FQ_CODEL target 5ms interval 100ms quantum 300 limit 10240 flows 1024 NoECN
       Children flowsets: 2
      0 ip 0.0.0.0/0 0.0.0.0/0 51106 76002641 29 43500 1209

    Queues:
    q00001 50 sl. 0 flows (1 buckets) sched 1 weight 0 lmax 0 pri 0 droptail
    q00002 50 sl. 0 flows (1 buckets) sched 2 weight 0 lmax 0 pri 0 droptail
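For reading the flow table in output like this, a small parser helps. This Python sketch assumes the columns follow the header shown above (`BKT Prot Source Dest Tot_pkt/bytes Pkt/Byte Drp`), with Pkt/Byte being, as far as I can tell, the current backlog; `parse_flow_row` and the field names are hypothetical.

```python
FIELDS = ("bkt", "prot", "src", "dst", "tot_pkt", "tot_bytes", "cur_pkt", "cur_bytes", "drp")


def parse_flow_row(line):
    """Split one data row of the dummynet flow table into named fields."""
    row = dict(zip(FIELDS, line.split()))
    for key in FIELDS[4:]:
        row[key] = int(row[key])  # packet/byte/drop counters
    return row


if __name__ == "__main__":
    # The download scheduler's row from the output above.
    row = parse_flow_row("0 ip 0.0.0.0/0 0.0.0.0/0 51106 76002641 29 43500 1209")
    print(f"drop rate {row['drp'] / row['tot_pkt']:.2%}, "
          f"mean packet size {row['tot_bytes'] / row['tot_pkt']:.0f} bytes")
```

Applied to the download scheduler's row, it gives a drop rate of about 2.37% of 51106 packets and an average packet size of roughly 1487 bytes, i.e. almost entirely full-size bulk-transfer packets.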

The RTT for almost all of these Steam servers was under 8 ms.

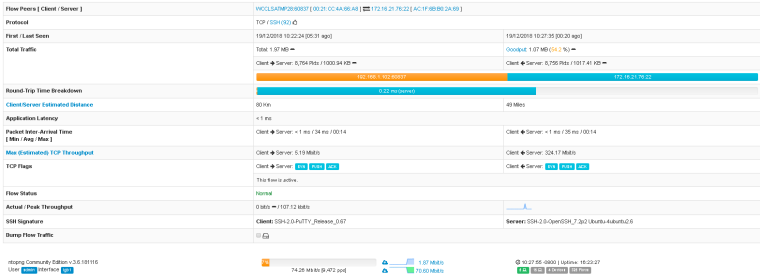

There were no drops associated with the SSH session I had from inside the LAN to outside the WAN.

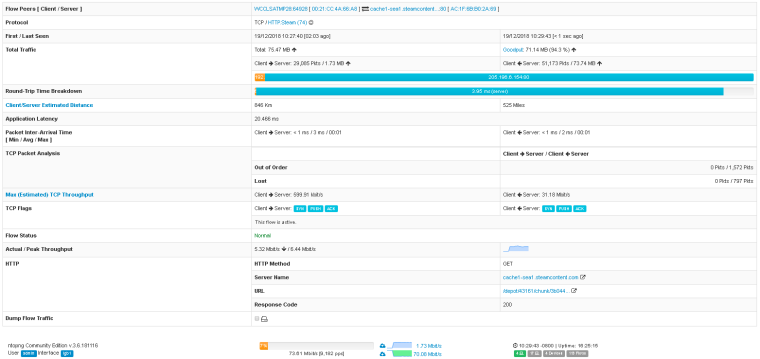

Here is a Steam download flow with many associated drops.

-

I wouldn't worry about it too much.

There are too many variables here: operating system, hardware used, RTT to the download servers, capacity of the connection, congestion control provider used, and so on.

I originally had really bad issues, which I detailed earlier in the thread. When I changed the hardware in my pfSense unit, things improved; after that there were only minor issues in a few scenarios, Steam being one of them, and changing the masks so that Steam downloads get their own dynamic queue made it perfect for me. Just because you cannot reproduce the same issue yourself doesn't mean there is a problem; it's fine, everyone here is happy with what they have. :)

I have also decreased my pipe size slightly further so I can get perfect results on the 32-thread DSLReports test as well. It seems it was slightly too high for that.

-

@uptownVagrant may I know what this rule is for? I tried enabling only this rule, but it just bypasses the limiters. What's the difference between rule #3 and rule #4?

3.) Add a match rule for incoming state flows so that they're placed into the FQ-CoDel in/out queues

- Action: Match

- Interface: WAN

- Direction: in

- Address Family: IPv4

- Protocol: Any

- Source: any

- Destination: any

- Description: WAN-In FQ-CoDel queue

- Gateway: Default

- In / Out pipe: fq_codel_in_q / fq_codel_out_q

- Click Save

-

@knowbe4 Floating rule #3 matches flows that ingress the firewall's WAN port and create a state; an example could be traffic destined for a port you are forwarding into your LAN. Floating rule #4 matches flows that egress, leaving the WAN port, and create a state.

Rules #3 and #4 explicitly place flows into the limiter queues. If you have configured other floating rules that match after the limiter rules, those may be bypassing the limiter.

-

Forgive me if this was covered here; I tried to read it all and got my fq_codel set up well thanks to this thread.

However, traceroute only shows 1 hop on Windows clients, while from the pfSense router CLI it shows the proper hops.

    C:\WINDOWS\system32>tracert google.com

    Tracing route to google.com [172.217.3.110]
    over a maximum of 30 hops:

      1    <1 ms    <1 ms    <1 ms  router.lan [192.168.1.1]
      2     1 ms     1 ms     1 ms  sea09s17-in-f14.1e100.net [172.217.3.110]

From router:

    traceroute google.com
    traceroute to google.com (172.217.3.110), 64 hops max, 40 byte packets
    (Hid first 4 hops to not give away location)
     5  72.14.208.130 (72.14.208.130)  5.587 ms  5.172 ms  3.809 ms
     6  * * *
     7  216.239.62.148 (216.239.62.148)  4.472 ms
        216.239.62.168 (216.239.62.168)  4.947 ms  4.957 ms
     8  209.85.253.189 (209.85.253.189)  2.687 ms  2.850 ms
        209.85.244.65 (209.85.244.65)  2.915 ms
     9  sea09s17-in-f14.1e100.net (172.217.3.110)  2.524 ms
        209.85.254.129 (209.85.254.129)  5.549 ms
        216.239.56.17 (216.239.56.17)  4.269 ms

-

@robnitro Take a look at the following guide, as it should explain the issue you are seeing and show how to work around it (hint: floating rule #1).

https://forum.netgate.com/post/807490

-

Thanks, I did add that rule for both WAN and LAN.

The only difference is that my setup has the rules based on LAN, because I have FiOS. The cable boxes' video on demand (VOD) can stream above my 50 Mbit limit. The boxes aren't part of my QoS (a floating rule matches the cable boxes' IPs in an alias), because if they were, I would lose part of my 50 Mbit data maximum. Example: with a 16 Mbit HD stream, I can still get 50 Mbit for data. So the boxes are outside CoDel and/or HFSC.

When I have limiters on WAN, using client IPs in floating rules to keep them out of the limiter doesn't work most of the time. I think it's because the traffic is technically arriving at the router's WAN IP address and leaving from the WAN IP address, not the local address, which only exists in LAN communication.

-

I have two ISPs (10 Mbps + 15 Mbps symmetric) that are currently configured for load balancing in pfSense. I followed your fq_codel config on both WANs. I think both WANs are working, as they are limiting their download and upload speeds.

But later I found a problem: when I try to upload a file to Google Drive, sometimes it doesn't upload at full speed (a flat 10 KBps) and sometimes it does. I'm not quite sure if it is related to fq_codel, but when I disabled the floating rules related to fq_codel, it always uploaded at the full speed of my bandwidth.

Here's my config:

I hope you can help me with this problem.

Findings that may help:

- When the floating rules are enabled, load balancing works for download speed but not for upload. Also, all upload traffic always sticks to the default gateway even though load balancing is enabled.

-

I just tried the floating rules on WAN instead of LAN, omitting the cable boxes (STBs) and my desktop. It seems that if a rule is on WAN, you cannot use a source or destination IP to omit a client from the queue.

Just as a reminder: on FiOS you can have a 50/50 line, but the cable boxes' video on demand does not count towards the limit. Example: with no limiters, while watching a 16 Mbit stream on a cable box, speed tests will still give 50 Mbit. But with a 50 Mbit limiter on everything and the same 16 Mbit stream, the desktop will only get 34 Mbit. Only LAN-based rules seem to work for me to exclude the cable boxes from the limiter.

I also have separate limiters that give guest Wi-Fi clients 20 Mbit, and unfortunately this also has to be done with LAN floating rules.

I wonder if there could be a tiered form of queues that feed into each other.

Example: a main fq_codel queue of 50, with guests under it on a simple limiter of 20, instead of needing two separate queues of 50 and 20 whose traffic is not combined.

-

Can you use the fq_codel in/out limiters in conjunction with the standard traffic shaping?

-

You could, but not on the same interface. For example, I think a shaper on WAN and a limiter on LAN would work.

-

I'm using shapers together with limiters on LAN. Before fq_codel, I was using plain limiters to keep guests on Wi-Fi from hogging the connection with their Apple updates.

With fq_codel, I kept the same limiter speeds and just changed the scheduler. I also added an fq_codel limiter for the clients that aren't throttled, and excluded the cable boxes, as they aren't counted in the ISP speed limit (FiOS).

Unfortunately, I cannot use a single fq_codel limiter for everyone this way, but it doesn't seem to affect bufferbloat.

Limiters I have:

    Schedulers:
    00001: 27.000 Mbit/s 0 ms burst 0
    q65537 50 sl. 0 flows (1 buckets) sched 1 weight 0 lmax 0 pri 0 droptail
     sched 1 type FQ_CODEL flags 0x0 0 buckets 0 active
     FQ_CODEL target 5ms interval 80ms quantum 300 limit 10240 flows 1024 NoECN
       Children flowsets: 1
    00002: 28.000 Mbit/s 0 ms burst 0
    q65538 50 sl. 0 flows (1 buckets) sched 2 weight 0 lmax 0 pri 0 droptail
     sched 2 type FQ_CODEL flags 0x0 0 buckets 0 active
     FQ_CODEL target 5ms interval 80ms quantum 300 limit 10240 flows 1024 NoECN
       Children flowsets: 2
    00004: 30.000 Mbit/s 0 ms burst 0
    q65540 50 sl. 0 flows (1 buckets) sched 4 weight 0 lmax 0 pri 0 droptail
     sched 4 type FQ_CODEL flags 0x0 0 buckets 0 active
     FQ_CODEL target 5ms interval 80ms quantum 300 limit 10240 flows 1024 NoECN
       Children flowsets: 4
    00005: 47.000 Mbit/s 0 ms burst 0
    q65541 50 sl. 0 flows (1 buckets) sched 5 weight 0 lmax 0 pri 0 droptail
     sched 5 type FQ_CODEL flags 0x0 0 buckets 0 active
     FQ_CODEL target 5ms interval 80ms quantum 300 limit 10240 flows 1024 NoECN
       Children flowsets: 5
    00006: 48.000 Mbit/s 0 ms burst 0
    q65542 50 sl. 0 flows (1 buckets) sched 6 weight 0 lmax 0 pri 0 droptail
     sched 6 type FQ_CODEL flags 0x0 0 buckets 0 active
     FQ_CODEL target 5ms interval 80ms quantum 300 limit 10240 flows 1024 NoECN
       Children flowsets: 6
    00007: 29.000 Mbit/s 0 ms burst 0
    q65543 50 sl. 0 flows (1 buckets) sched 7 weight 0 lmax 0 pri 0 droptail
     sched 7 type FQ_CODEL flags 0x0 0 buckets 0 active
     FQ_CODEL target 5ms interval 80ms quantum 300 limit 10240 flows 1024 NoECN
       Children flowsets: 3

    Queues:
    q00001 50 sl. 0 flows (1 buckets) sched 1 weight 0 lmax 0 pri 0 droptail
    q00002 50 sl. 0 flows (1 buckets) sched 2 weight 0 lmax 0 pri 0 droptail
    q00003 50 sl. 0 flows (1 buckets) sched 7 weight 0 lmax 0 pri 0 droptail
    q00004 50 sl. 0 flows (1 buckets) sched 4 weight 0 lmax 0 pri 0 droptail
    q00005 50 sl. 0 flows (1 buckets) sched 5 weight 0 lmax 0 pri 0 droptail
    q00006 50 sl. 0 flows (1 buckets) sched 6 weight 0 lmax 0 pri 0 droptail

HFSC traffic shaping queues:

    QUEUE BW SCH PRI PKTS BYTES DROP_P DROP_B QLEN BORRO SUSPE P/S B/S
    root_em0 64M hfsc 0 0 0 0 0 0 0 0
    qInternetOUT 48M hfsc 0 0 0 0 0 0 0
    qACK 8640K hfsc 12043K 703M 0 0 0 3 215
    qP2P 960K hfsc 42429K 45636M 0 0 0 64 83005
    qVoIP 512K hfsc 0 0 0 0 0 0 0
    qGames 9600K hfsc 3576K 592M 0 0 0 0 0
    qOthersHigh 9600K hfsc 2463K 665M 0 0 0 4 811
    qOthersLow 2400K hfsc 0 0 0 0 0 0 0
    qDefault 7200K hfsc 1815K 1170M 0 0 0 8 975
    qNoLimiterSTB 4000K hfsc 0 0 0 0 0 0 0
    qLink 640K hfsc 0 0 0 0 0 0 0
    root_em1 490M hfsc 0 0 0 0 0 0 0 0
    qLink 98M hfsc 0 0 0 0 0 0 0
    qInternetIN 47M hfsc 0 0 0 0 0 0 0
    qACK 8460K hfsc 838180 106M 0 0 0 6 374
    qP2P 940K hfsc 27924K 14986M 0 0 0 24 2656
    qVoIP 512K hfsc 0 0 0 0 0 0 0
    qGames 9400K hfsc 1334 581495 0 0 0 0 0
    qOthersHigh 9400K hfsc 8497K 5911M 0 0 0 4 618
    qOthersLow 2350K hfsc 5315K 7804M 0 0 0 0 0
    qDefault 7050K hfsc 18785K 17996M 0 0 0 32 6931
    qNoLimiterSTB 320M hfsc 55549 78583K 0 0 0 0 0

-

@uptownvagrant Thanks for the guide; it is working perfectly for me on my cable WAN (very bad bufferbloat). I'm trying to use it for my VDSL WAN as well but am running into an odd issue. If I have the out floating rule enabled, my upload speed is exceptionally slow, no matter how the limiter is configured. If I disable the out floating rule and leave only the in rule, upload speed is fine (but the bufferbloat is back). The in rule works fine and gets rid of the download bufferbloat.

http://www.dslreports.com/speedtest/45277068 - this shows it with the out floating rule enabled

http://www.dslreports.com/speedtest/45277501 - and this is it with it disabled

Any ideas?

TIA