Intel X520-DA2 low throughput

-

I tried running two separate iperf (1) sessions in parallel. The bandwidth just splits between them, which tells me the card won't push much more traffic. I do not believe it is CPU usage: I also ran iperf between two servers connected to these gateways, one on VLAN 1 and the other on VLAN 2, and I get 1.4 Gbit/s with almost no CPU usage on the gateway.

    [2.4.4-RELEASE][admin@g2]/root: iperf -c 10.70.70.1 -p 5001
    ------------------------------------------------------------
    Client connecting to 10.70.70.1, TCP port 5001
    TCP window size: 64.2 KByte (default)
    ------------------------------------------------------------
    [  3] local 10.70.70.2 port 34912 connected with 10.70.70.1 port 5001
    [ ID] Interval       Transfer     Bandwidth
    [  3]  0.0-10.0 sec  1.62 GBytes  1.39 Gbits/sec

    [2.4.4-RELEASE][admin@g2]/root: iperf -c 10.70.70.1 -p 5002
    ------------------------------------------------------------
    Client connecting to 10.70.70.1, TCP port 5002
    TCP window size: 64.2 KByte (default)
    ------------------------------------------------------------
    [  3] local 10.70.70.2 port 3782 connected with 10.70.70.1 port 5002
    [ ID] Interval       Transfer     Bandwidth
    [  3]  0.0-10.0 sec  1.59 GBytes  1.36 Gbits/sec

I am afraid to test NAT speed.

-

Ok but you're running iperf on pfSense which will always give worse results. You need to test through the firewall.

You should be using iperf3. You can install that in pfSense from the command line:

    pkg install iperf3; rehash

if you need to test from there. You can just use the -P switch to increase the process count.

Steve

-

Iperf vs iperf3 in my case provides the exact same results.

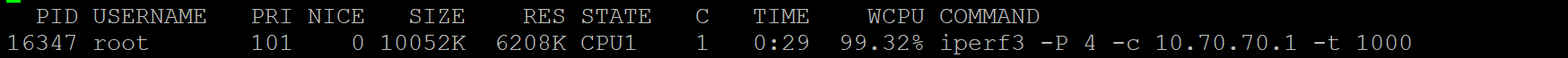

Also, running -P does not seem to increase the process count, just the number of parallel connections; I can see there is still only one thread.

Leaving the gateways aside, this is the speed I am getting between host salt (vlan1) and host smtp (vlan2) with the troublesome gateways in between doing the routing and some filtering. Both are linux machines.

    [root@salt salt]# iperf3 -c smtp
    Connecting to host smtp, port 5201
    [  4] local 192.168.1.118 port 45280 connected to 192.168.2.25 port 5201
    [ ID] Interval           Transfer     Bandwidth       Retr  Cwnd
    [  4]   0.00-1.00   sec   142 MBytes  1.19 Gbits/sec    4    460 KBytes
    [  4]   1.00-2.00   sec   147 MBytes  1.23 Gbits/sec    0    660 KBytes
    [  4]   2.00-3.00   sec   181 MBytes  1.52 Gbits/sec    0    844 KBytes
    [  4]   3.00-4.00   sec   209 MBytes  1.75 Gbits/sec    0   1021 KBytes
    [  4]   4.00-5.00   sec   168 MBytes  1.41 Gbits/sec   19    868 KBytes
    [  4]   5.00-6.00   sec   180 MBytes  1.51 Gbits/sec    0   1015 KBytes
    [  4]   6.00-7.00   sec   186 MBytes  1.56 Gbits/sec    0   1.12 MBytes
    ^C[  4]   7.00-7.56   sec   126 MBytes  1.89 Gbits/sec    0   1.20 MBytes
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bandwidth       Retr
    [  4]   0.00-7.56   sec  1.31 GBytes  1.49 Gbits/sec   23             sender
    [  4]   0.00-7.56   sec  0.00 Bytes  0.00 bits/sec                  receiver
    iperf3: interrupt - the client has terminated

    [root@salt salt]# traceroute smtp
    traceroute to smtp (192.168.2.25), 30 hops max, 60 byte packets
     1  g1 (192.168.1.1)  0.091 ms  0.072 ms  0.059 ms
     2  192.168.2.25 (192.168.2.25)  0.397 ms  0.348 ms  0.340 ms
    [root@salt salt]#

iperf3 running on pfSense just shows me the same as I get running between devices and so on. I don't think it's the CPU. Probably the driver, or whatever setting the now one_foot_in_the_grave FreeBSD wants for a 10G card to work at more than 20% of its speed.

-

The ix driver can load multiple queues to process multiple connections through it using multiple cores. It does that by default and you should see that in the top output. If you run iperf with more connections it can use that.
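As a rough illustration of why a single iperf stream tends to load only one queue (and so one core): the NIC picks a receive queue by hashing each connection's addresses and ports, so every packet of one connection lands on the same queue. The sketch below is a toy model only. It uses `cksum` as a stand-in for the real RSS hash, assumes 8 queues, and the port numbers are made up for the example.

```sh
#!/bin/sh
# Toy model only: real NICs use an RSS hash computed over the packet headers,
# not cksum. The point is just that one flow always maps to one queue.
NUM_QUEUES=8   # assumption: 8 queues, as reported later in this thread

queue_for_flow() {
    # $1 = flow description, e.g. "src_ip:src_port->dst_ip:dst_port"
    hash=$(printf '%s' "$1" | cksum | cut -d ' ' -f 1)
    echo $(( hash % NUM_QUEUES ))
}

# One connection: the same flow always yields the same queue number.
echo "single flow -> queue $(queue_for_flow "10.70.70.2:34912->10.70.70.1:5001")"

# Eight parallel connections differ in source port (hypothetical ports here),
# so they can spread across queues. Collisions are possible, so this does not
# guarantee that every queue gets used.
port=40000
while [ "$port" -lt 40008 ]; do
    echo "port $port -> queue $(queue_for_flow "10.70.70.2:${port}->10.70.70.1:5201")"
    port=$(( port + 1 ))
done
```

That is the reason for testing with several parallel connections: a single stream cannot show what the card and all the cores can do together.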

The CPU is pretty ancient but I would still expect to see more than that through it.

Steve

-

If I boot up a live linux on the same machine with ancient cpu, I get full speed with almost no cpu usage. That is my problem right now, unfortunately.

If some people can get at least half of that 10G via pfSense, I am curious how.

-

Did you have any sort of firewall running in Linux when you tested that?

If you disable pf in pfSense and are testing through it instead of to it I would expect to see 10G line rate from iperf.

With pf enabled you won't but I'd expect to see 5-6Gbps without changing anything. Something is not right on your system there.

I think last time I tested I was seeing 3-4Gbps using a Q6600 and that's quite a lot more ancient!

Steve

-

I will disable PF and check that way but with low expectations. Will have to be done after hours on these.

That was just plain Linux with iptables disabled. However, that is obviously a Linux kernel with Linux drivers. For example, we have another set of gateways in the other office with even crappier CPUs (L5640) but running RouterOS (Linux kernel and drivers) with many more firewall rules, and yet we get around 7G.

I am also not sure, for example, how pf limits the performance on these ix0 interfaces, which are used exclusively for CARP. There is just one rule there that allows everything on that specific interface, since it's connected straight to the other one. Even running through that "unfiltered" interface does not get more than 2.3 Gb/s.

-

Just having pf enabled on there introduces significant overhead. But I think you're right that it's not just pf at work here; you should be seeing more throughput. I think you need to check the ix driver is creating multiple queues and using them. Try running

    vmstat -i

during a test.

Linux will almost always perform better to various extents on given hardware. But not that much better.

Steve

-

It seems it does create about 8 queues (this is another set of boxes, with just one CPU). Still, I have not been able to exceed 2.5 Gb/s, and this is on a straight connection: no switch in between, no NAT or filtering.

A little bit ridiculous...

-

That's iperf3 directly between the boxes with pfSense on? With multiple connections? 8 to use all the queues.

Steve

-

Yes, unfortunately. I've been playing with all sorts of ifconfig options for the ix0 NIC, to no avail. Some of them make matters worse but none of them better.

I believe I must accept my fate and blame FreeBSD. Unfortunately I can't spend days troubleshooting this; the card/system should work out of the box.

-

I would certainly expect more from that CPU. You could try FreeBSD directly and see what performance you're seeing. Could be some obscure hardware/driver compatibility issue.

Steve

-

Alright, more digging has been done.

Setting the MTU to 9000 gets me 9.90 Gbits/sec. Of course jumbo frames should make a difference, but honestly I did not expect that much.

So I went on with my investigation and created two VMs on the same server and installed pfSense with the configs from the troublesome ones. Using virtio for networking, I was initially getting pretty much the same. However, enabling TSO and LRO on vtnet0, which was the "carp" interface, pushed it to 28 GB/s WITHOUT jumbo frames (because they are on the same machine, on a local software bridge).

However, this introduced new issues such as "iperf3: error - unable to write to stream socket: Permission denied", which I am sure is down to some default limits that need tweaking.
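One way to read the MTU result is to convert the iperf figures into packets per second. The sketch below is back-of-the-envelope arithmetic, not a measurement: it assumes 52 bytes of IPv4 and TCP headers per packet (20 + 20, plus 12 for TCP timestamps) and takes the 2.3 Gb/s and 9.90 Gbits/sec figures reported in this thread. The function name is made up for the example.

```sh
#!/bin/sh
# Rough packets-per-second estimate from an iperf rate and the interface MTU.
# Assumption: 52 bytes of IPv4 + TCP headers per packet (20 + 20 + 12 for
# TCP timestamps), so the payload carried per packet is MTU - 52 bytes.
packets_per_second() {
    goodput_bps="$1"   # iperf-reported rate in bits per second
    mtu="$2"           # interface MTU in bytes
    payload_bytes=$(( mtu - 52 ))
    echo $(( goodput_bps / (payload_bytes * 8) ))
}

echo "MTU 1500 at 2.3 Gbit/s: $(packets_per_second 2300000000 1500) pps"
echo "MTU 9000 at 9.9 Gbit/s: $(packets_per_second 9900000000 9000) pps"
```

Under those assumptions the default-MTU test works out to roughly 200k packets per second and the jumbo-frame test to roughly 140k, so the jumbo run moves about four times the data with fewer packets. That would be consistent with a per-packet processing limit rather than a bandwidth limit, although it does not say where in the stack that cost sits.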