Increased Memory and CPU Spikes (causing latency/outage) with 2.4.5

-

@muppet Yes! You are entirely right. pfblocker is victim not villain.

The question I would like answered is whether this problem exists on all 2.4.5 x64 installs or just some, and on clean installs or just upgraded ones. The timing of this stinks. I don't expect a lot of movement concerning this for some time.

I really have no one to blame other than myself. I thought that given the extended time between releases and the time 2.4.5 spent in RC status that it would be rock solid out of the gate. I was wrong and I should have known better than to do this upgrade now.

I'll do a clean install and restore my config when I can do that without taking myself or the kids offline for an extended time. Remote work, remote school.

-

@jwj Yes, I feel the same in that I wish I hadn't upgraded. I could have easily rolled back (I took a backup of my VM before I pressed go) but I've been meaning for ages to try out Vyos at home and this was the final push I needed.

I'm sure this will be fixed, I expect it's an underlying FreeBSD issue, probably something to do with workarounds for Spectre/Meltdown or similar.

It was bad timing, but then the joke's on us really: who upgrades their key infrastructure during a crisis like the world's current one (for future readers, COVID-19)? The release notes even warn us. So we've no one to blame but ourselves.

I also regret not having run a 2.4.5-RC build where I could have helped diagnose this and fix it before production. It's the old thing of "I'm sure someone else has done that". Alas.

Onwards and upwards though, I love pfSense!

-

@muppet said in Increased Memory and CPU Spikes (causing latency/outage) with 2.4.5:

I'm sure this will go unread, but the problem is nothing to do with pfBlocker

Let's see if I can write that bigger

Irrespective of size or color of font, yours appears to be an absolute claim in the negative, which cannot be proven by definition. To the contrary, there is a base of evidence pointing to cause/effect combinations of pfblocker, unbound, and pfctl that others proved out on their respective systems (which appear to be mostly x86_64 based). If you have substantive data to offer that may establish different cause/effect sets, that may be helpful to all in isolating the issue(s) and hopefully fixing them.

-

@t41k2m3 I played around and tested from a fresh install, with no imported config, no packages, and only two NICs (WAN/LAN), on ESXi 6.5 and on VirtualBox on Windows 10 (completely different AMD/Intel machines), and the problem still appears when playing around and changing settings randomly. pfblocker and other packages do aggravate the issue, making it very visible.

-

@t41k2m3 I have no packages installed and it's still a problem. Therefore it's easy to prove it's not pfBlockerNG.

As I posted, anything like pfBlockerNG etc seem to exacerbate the problem, but are not the cause of it.

-

Hello all,

I'm here because I ran into the same issue.

On Friday I updated to 2.4.5 on a baremetal system (Netgate D1541 with 32GB RAM).

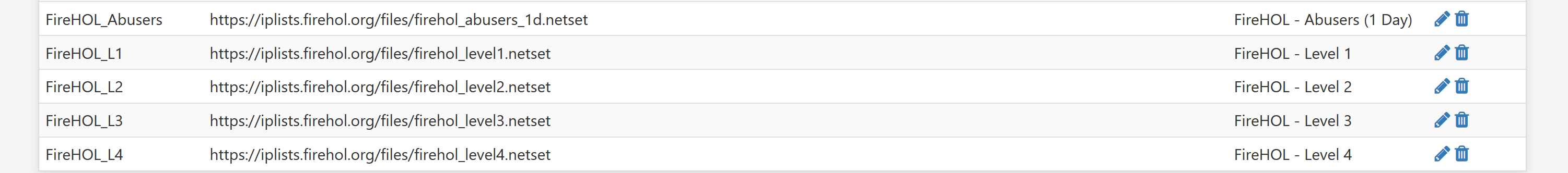

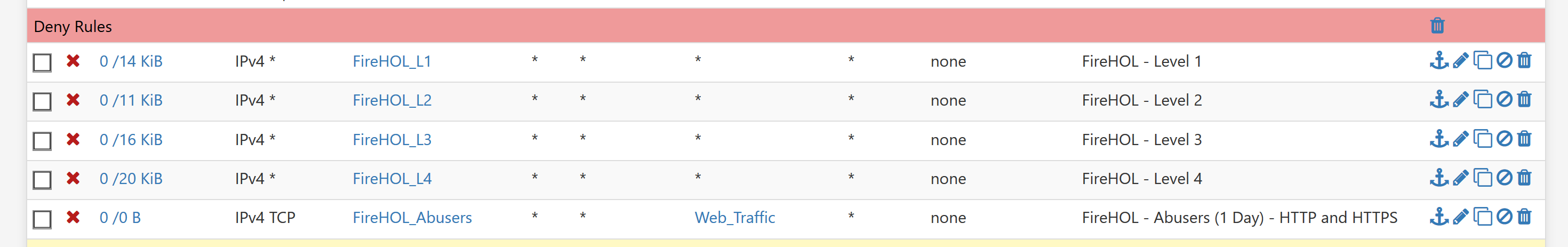

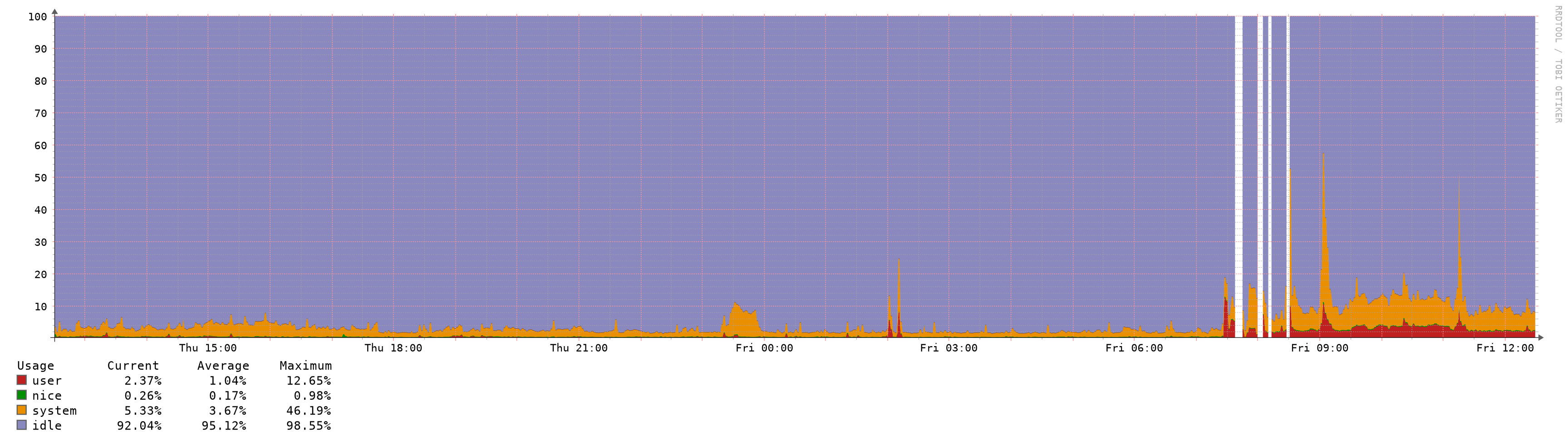

Additionally I updated pfBlockerNG to the devel version (2.2.5_30). I see the same issues as described above, including high system load up to 23, pfctl eating 180% CPU, and related problems like Nginx gateway timeouts, VPN interrupts, and broken internet connections.

Additionally I had a broken GEOM mirror after the update process and reboot (I did not switch it off or similar). The system was not usable after the rebuild; I saw a lot of missing PHP files. Nothing was working, and the network was also broken. To get it running again I had to reinstall the system. This is also new for me. The S.M.A.R.T. status was and is without any issue. The update process did not show an error.

Does anybody have any hint what could be the root cause of such behavior?

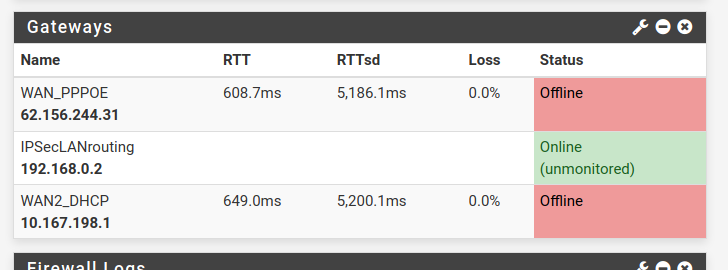

To me it looks like the mirror was broken while the update process was running, and after the reboot it copied from the wrong SSD to the other. I have no clue how this can happen.

If the system is under load, the WAN gateways have high latency but no packet loss, which I never saw before.

The system is not accessible for minutes if anything changed.

When I added some new VLANs it never came back; I had to go onsite for a reboot, which is not so easy at the moment because everybody is working from home.

It is not only the pfctl process; I also saw ntopng, resolver, and php-fpm with high CPU usage.

In the meantime I don't believe that the problem is only pfBlockerNG-devel; it is more likely one or more problems somewhere in the system.

What is the best solution for now? Waiting for a fix is not an option with COVID-19.

Has anybody tested a clean install of 2.4.4 P3 and restored the settings of the 2.4.5 version?

Is this working, or should I discard my work of the last few days and restore a backup of 2.4.4 P3?

Ralf

-

As I wrote earlier, I am now on Hyper-V Server 2019 with 2 CPU cores assigned to pfSense 2.4.5. I made a clean install on a new VHDX storage file and restored the config from the old patched system. I set Kernel PTI: Disabled and MDS Mitigation: Inactive, then made a clean pfBlockerNG config and reinstalled pfBlockerNG-devel, and after that manually restored all settings in the GUI. System uptime is 4 days so far, with no lags or abnormal CPU usage, just slightly higher RAM usage (~20%).

p.s.

Once per hour the system freezes for a few seconds when pfblocker runs its updates; I will have to set updates to once per day.

-

@getcom I exported the config from my 2.4.5 system, did a fresh install of 2.4.4 p3 and restored the config back. Everything works as expected for me.

-

@Gektor That once per hour is what we are talking about. The pfSense becomes completely unresponsive. If you are running VOIP traffic, your call is dropped. If you are collaborating in a video call, you lose the call. Setting pfBlocker to only update once a day during off hours is a nice workaround, but it's not a fix.

-

Here's my test:

Hyper-V on Windows Server 2019

2 dedicated NICs (both Intel gigabit) without host sharing, all acceleration disabled. (I also tried it with everything enabled and the 3 permutations.)

4 cores, 4 gigs of ram

Internet NIC plugged into a bridged ISP fiber modem that uses PPPoE.

pfSense 2.4.3 clean install: 2% cpu, saving settings doesn't cause any issue. Initial configuration is slow until the PPPoE connection is established; once that's set, it's fast. (It appears pfSense waits a REALLY long time for a DHCP WAN address.)

pfSense 2.5 clean install: 20% cpu, 15x longer while PPPoE not setup between saves and initial configuration, after setup, every save of settings causes cpu spike, and system becomes completely non-responsive including pings to the local lan address of the router.

Both tests were done with no restore of settings, nothing installed, only the initial wizard run. That's it.

I tried disabling AES-NI, hardware offload of everything, and it made no difference, 2.4.3 is fine on hyper-v, 2.5 is a disaster.

Should be very easy to reproduce. Just install the free hyper-v server per above, and setup pfsense on hyper-v and it should have the issues. Don't know if PPPoE is part of the problem or not, but just plug the internet wan adapter into a switch without a dhcp server on it to reproduce.

-

@JohnGalt1717 I noticed that some are saying that symptoms disappear by dropping to 1 core for the VM - have you tried that?

-

Too many threads, too many ways to look at the issue.

VM's and bare metal both have the problem. How badly the problem is felt is dependent on how capable your system is. Anything that causes pfctl to run will cause the problem in my experience. I'm on good hardware so it's not a show stopper, more of an annoyance.

pf_blocker is NOT the problem. Issue with mime types was identified and fixed.

Putting the 2.4.4-p3 download links back up would be a good idea IMHO.

-

@snarfattack said in Increased Memory and CPU Spikes (causing latency/outage) with 2.4.5:

@getcom I exported the config from my 2.4.5 system, did a fresh install of 2.4.4 p3 and restored the config back. Everything works as expected for me.

It would be interesting to see what output a diff -Bur produces between a freshly installed pfS with restored configs and an upgraded one.
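A comparison like the one proposed here can also be scripted. The following is only a minimal sketch, not a replacement for diff -Bur: it does a byte-for-byte check and lists which files differ or exist on one side only, without showing line-level changes or ignoring blank lines. The function name `tree_diff` and the mount points in the usage note are hypothetical.

```python
import filecmp
import os


def tree_diff(left, right):
    """Compare two directory trees.

    Returns three sorted lists of relative paths:
    (only in left, only in right, present in both but different).
    """
    only_left, only_right, changed = [], [], []

    def walk(cmp, rel):
        only_left.extend(os.path.join(rel, n) for n in cmp.left_only)
        only_right.extend(os.path.join(rel, n) for n in cmp.right_only)
        # dircmp's own diff_files relies on a shallow, stat-based check,
        # so re-compare the common files by content instead.
        _, mismatch, errors = filecmp.cmpfiles(
            cmp.left, cmp.right, cmp.common_files, shallow=False
        )
        # Files that could not be compared are reported as changed too.
        changed.extend(os.path.join(rel, n) for n in mismatch + errors)
        for name, sub in cmp.subdirs.items():
            walk(sub, os.path.join(rel, name))

    walk(filecmp.dircmp(left, right), "")
    return sorted(only_left), sorted(only_right), sorted(changed)
```

Assuming both disk images are mounted read-only at, say, /mnt/fresh and /mnt/upgraded (made-up paths), `tree_diff("/mnt/fresh", "/mnt/upgraded")` gives a short list of candidates to inspect with diff afterwards.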

This has to be done to find out whether the problem is a database/config item issue. As I'm using only baremetal firewalls, I would have to dump the SSD and copy it to one of my Proxmox hosts.

Then I would have to reconfigure the ethernet devices and setups and try to reproduce it. It may work like that or not. It would be much easier for somebody who already has a running VM.

-

@getcom I've not seen any indication that a fresh install of 2.4.5 is different than an updated one. I would do a fresh install of 2.4.5 if I had some indication that fresh installs don't have the issue. Otherwise going back to 2.4.4-p3 is, sadly, the way to go.

-

@jwj said in Increased Memory and CPU Spikes (causing latency/outage) with 2.4.5:

VM's and bare metal both have the problem. How badly the problem is felt is dependent on how capable your system is. Anything that causes pfctl to run will cause the problem in my experience. I'm on good hardware so it's not a show stopper, more of an annoyance.

Mmh., me too, an eight core D1541 Xeon CPU with 32GB RAM is a lot of power, but nevertheless the system has load peaks up to 23 with 100% CPU and up to 80% memory consumption.

This is way too much. The response times are at their worst in such a case. There is zero packet loss but 5 seconds of latency. It sounds like a service which is necessary for e.g. packet inspection is blocked or overloaded. This would then explain a) high CPU usage, b) memory consumption and c) no packet loss. More traffic causes a higher load. This is the case when pfB runs its updates, but it can also happen when user payload is higher. In my regular setup a pfSense can have 5-12 or more VLANs and the pfS is the gateway for all. Normally I'm using trunk ports over 10GbE SFP+ laggs as parent interfaces for VLANs directly connected to Cisco core switches. If pfS hangs, the complete infrastructure which is using the pfS route has a problem, and this is what I have at the moment. It is traffic related, not hardware or packet related. Some tests in a lab with a stress test tool like Gatling shooting at endpoints in different subnets and a debugging-enabled pfS should enlighten the darkness.

The FreeBSD changes of 11.3 have a lot of potential candidates for such issues: https://www.freebsd.org/releases/11.3R/relnotes.html#kernel-general.

-

@getcom said in Increased Memory and CPU Spikes (causing latency/outage) with 2.4.5:

@jwj said in Increased Memory and CPU Spikes (causing latency/outage) with 2.4.5:

VM's and bare metal both have the problem. How badly the problem is felt is dependent on how capable your system is. Anything that causes pfctl to run will cause the problem in my experience. I'm on good hardware so it's not a show stopper, more of an annoyance.

Mmh., me too, an eight core D1541 Xeon CPU with 32GB RAM is a lot of power, but nevertheless the system has load peaks up to 23 with 100% CPU and up to 80% memory consumption.

This is way too much. The response times are at their worst in such a case. There is zero packet loss but 5 seconds of latency. It sounds like a service which is necessary for e.g. packet inspection is blocked or overloaded. This would then explain a) high CPU usage, b) memory consumption and c) no packet loss. More traffic causes a higher load. This is the case when pfB runs its updates, but it can also happen when user payload is higher. In my regular setup a pfSense can have 5-12 or more VLANs and the pfS is the gateway for all. Normally I'm using trunk ports over 10GbE SFP+ laggs as parent interfaces for VLANs directly connected to Cisco core switches. If pfS hangs, the complete infrastructure which is using the pfS route has a problem, and this is what I have at the moment. It is traffic related, not hardware or packet related. Some tests in a lab with a stress test tool like Gatling shooting at endpoints in different subnets and a debugging-enabled pfS should enlighten the darkness.

The FreeBSD changes of 11.3 have a lot of potential candidates for such issues: https://www.freebsd.org/releases/11.3R/relnotes.html#kernel-general.

Similar situation here as well: on a bare metal 4 core Xeon D 1518 based system with 16GB RAM running 2.4.5, latencies spike up into the 1000's of milliseconds for a few seconds, with load spiking before it finally drops. This happens every time I, for example, change or update a firewall rule (and a reload occurs). No such issues observed on 2.4.4 P3 or prior versions.
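For anyone who wants to put numbers on spikes like these and line them up with rule reloads, here is a minimal sketch. It assumes latency samples have already been collected elsewhere (for example from timestamped pings to the LAN address) as `(timestamp_seconds, latency_ms)` pairs; that input format, the function name `find_spikes`, and the 1000 ms default threshold are all assumptions for illustration.

```python
def find_spikes(samples, threshold_ms=1000.0):
    """Group consecutive samples at or above threshold_ms into spikes.

    samples: iterable of (timestamp_seconds, latency_ms), sorted by time.
    Returns a list of (start, end, peak_ms) tuples, one per spike.
    """
    spikes = []
    current = None  # [start, end, peak] of the spike being built
    for ts, latency in samples:
        if latency >= threshold_ms:
            if current is None:
                current = [ts, ts, latency]
            else:
                current[1] = ts
                current[2] = max(current[2], latency)
        elif current is not None:
            # A sample below the threshold closes the running spike.
            spikes.append(tuple(current))
            current = None
    if current is not None:
        spikes.append(tuple(current))
    return spikes


if __name__ == "__main__":
    demo = [(0, 2), (1, 1500), (2, 3000), (3, 4), (4, 1200)]
    for start, end, peak in find_spikes(demo):
        print(f"spike from t={start}s to t={end}s, peak {peak} ms")
```

Comparing the start times against the moments a rule was saved would show whether every reload produces a spike, and how long each one lasts.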

-

@jwj said in Increased Memory and CPU Spikes (causing latency/outage) with 2.4.5:

@getcom I've not seen any indication that a fresh install of 2.4.5 is different than an updated one. I would do a fresh install of 2.4.5 if I had some indication that fresh installs don't have the issue. Otherwise going back to 2.4.4-p3 is, sadly, the way to go.

I see no indication of that either, but it could be done that way to be sure. As it also happens on freshly installed systems, the evidence points in another direction.

It is more likely that this is either a bug or a wrong/too small sysctl value in conjunction with a specific circumstance like a high TCP payload or traffic.

-

Just did a fresh install of 2.4.5. No difference. Supermicro 5018D-FN4T.

What's left to determine is whether it is a tunable or something more fundamentally broken in FreeBSD 11.3, as mentioned by @getcom.

It's time for Netgate to put the 2.4.4-p3 images back online. Amazing, given the number of times it was stated that 2.4.5 would be ready when it was ready. Better late than untested and so on. I trusted them that when it was out it would be ready. I defended them when others whined about the time between releases. I will not make that mistake again.

-

I currently have no dog in this fight since I don't run pfBlockerNG-devel and I have not yet upgraded my SG-5100 to 2.4.5 (but I do plan to).

I found this Errata Notice in the Release Notes for FreeBSD 11/STABLE -- https://www.freebsd.org/security/advisories/FreeBSD-EN-20:04.pfctl.asc. Since several of you, and some posters in other threads, are complaining about high utilization from pfctl, the patch this errata notice talks about might be of interest.

-

@bmeeks Thanks! I will add that the issue exists without pfblocker. pfblocker just adds a bunch of large aliases that exacerbate the issue.
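To reproduce the large-alias effect in a lab without installing pfblocker, one option is to generate a big synthetic address list and load it as a table or alias. The sketch below only produces the list file, one address per line; the function names, the 10.0.0.0 starting address, and the output filename are hypothetical, and how the file is then loaded (for example via a URL table alias, or pfctl's table replace command) is left to the tester and should only be tried on a non-production box.

```python
import ipaddress


def make_alias_entries(count, start="10.0.0.0"):
    """Return `count` consecutive, unique IPv4 addresses as strings."""
    first = ipaddress.IPv4Address(start)
    return [str(first + i) for i in range(count)]


def write_alias_file(path, count, start="10.0.0.0"):
    """Write the generated addresses to `path`, one per line."""
    with open(path, "w") as fh:
        for entry in make_alias_entries(count, start):
            fh.write(entry + "\n")


if __name__ == "__main__":
    # 100,000 entries is an arbitrary size picked for illustration.
    write_alias_file("big_alias.txt", 100_000)
```

Repeating the test with different entry counts would show whether the length of the stall scales with table size.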