PC Engines apu2 experiences

-

@sToRmInG good that the cause is found

-

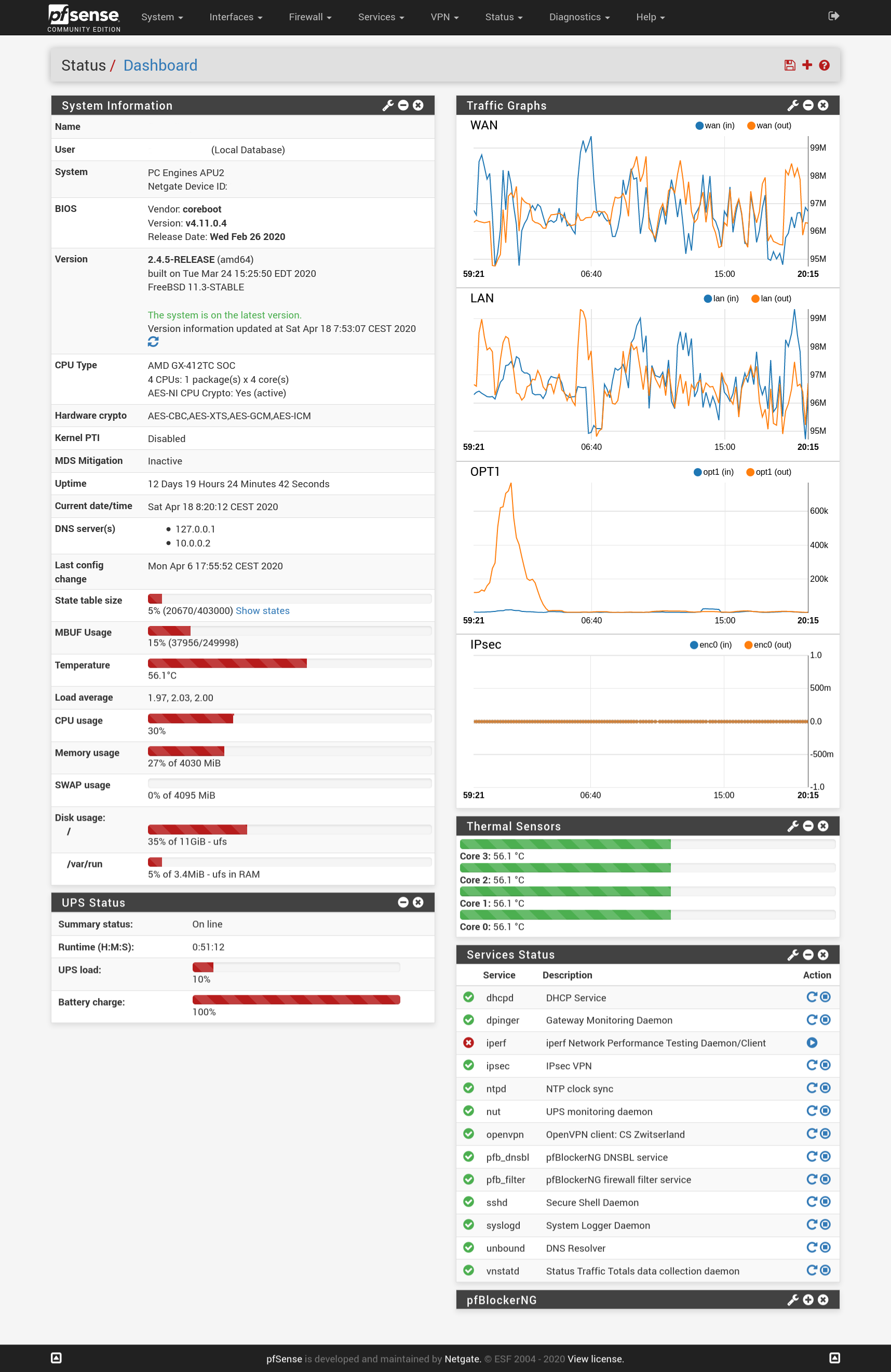

I'm wondering what kind of performance and CPU load you guys have with an APU2C4. I want to know if I should lower my expectations or tweak my settings.

I have a 500/500 fiber line.

The ~100 Mbit/s load is mostly from my Tor relay in a Docker container, which is hard-capped at 100 Mbit/s. The CPU load is already quite high at this throughput. If I raise the cap to 300 Mbit/s the network becomes unusable (DNS timeouts, etc.). Most CPU time goes to igb queue interrupts.
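To put a number on how much of that interrupt load each NIC accounts for, the per-queue WCPU figures can be totalled. The following is a minimal Python sketch, assuming the `top -aSH` thread lines look like the ones in this post; the function name and the regular expression are illustrative and not part of any pfSense tooling. The sample lines are copied from the 100 Mbit snapshot.

```python
import re
from collections import defaultdict

# Matches the WCPU column and the NIC name of an igb interrupt thread, e.g.
# "... 19.57% [intr{irq258: igb0:que 2}]"
QUEUE_RE = re.compile(r"(\d+(?:\.\d+)?)%\s+\[intr\{irq\d+: (igb\d+):que \d+\}\]")


def interrupt_load_per_nic(top_lines):
    """Sum the WCPU percentage of all igb queue threads, grouped by NIC."""
    totals = defaultdict(float)
    for line in top_lines:
        match = QUEUE_RE.search(line)
        if match:
            totals[match.group(2)] += float(match.group(1))
    return dict(totals)


# Interrupt thread lines from the 100 Mbit snapshot in this post.
SAMPLE = """\
12 root -92 - 0K 544K WAIT 2 48.8H 19.57% [intr{irq258: igb0:que 2}]
12 root -92 - 0K 544K WAIT 2 58.3H 11.25% [intr{irq268: igb2:que 2}]
12 root -92 - 0K 544K WAIT 0 69.2H 10.17% [intr{irq266: igb2:que 0}]
12 root -92 - 0K 544K WAIT 1 52.1H 9.09% [intr{irq267: igb2:que 1}]
12 root -92 - 0K 544K WAIT 3 61.5H 8.20% [intr{irq269: igb2:que 3}]
12 root -92 - 0K 544K WAIT 0 69.2H 8.14% [intr{irq256: igb0:que 0}]
12 root -92 - 0K 544K WAIT 1 67.4H 6.37% [intr{irq257: igb0:que 1}]
12 root -92 - 0K 544K WAIT 3 63.1H 5.00% [intr{irq259: igb0:que 3}]
"""

totals = interrupt_load_per_nic(SAMPLE.splitlines())
for nic, pct in sorted(totals.items()):
    print(f"{nic}: {pct:.2f}% of one core")
```

WCPU is reported per core, so dividing the combined total by the four cores gives a figure that should land roughly in line with the "interrupt" percentage in the top header.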

Load with 100mbit throughput:

last pid: 79066; load averages: 1.38, 2.08, 2.50 up 12+20:03:08 08:58:38
230 processes: 6 running, 190 sleeping, 34 waiting
CPU: 6.4% user, 0.0% nice, 4.5% system, 20.5% interrupt, 68.6% idle
Mem: 401M Active, 209M Inact, 798M Wired, 296M Buf, 2498M Free
Swap: 4096M Total, 4096M Free
PID USERNAME PRI NICE SIZE RES STATE C TIME WCPU COMMAND
11 root 155 ki31 0K 64K CPU0 0 127.4H 73.22% [idle{idle: cpu0}]
11 root 155 ki31 0K 64K RUN 3 129.5H 71.20% [idle{idle: cpu3}]
11 root 155 ki31 0K 64K CPU1 1 133.2H 66.98% [idle{idle: cpu1}]
11 root 155 ki31 0K 64K RUN 2 139.1H 57.81% [idle{idle: cpu2}]
12 root -92 - 0K 544K WAIT 2 48.8H 19.57% [intr{irq258: igb0:que 2}]
12 root -92 - 0K 544K WAIT 2 58.3H 11.25% [intr{irq268: igb2:que 2}]
12 root -92 - 0K 544K WAIT 0 69.2H 10.17% [intr{irq266: igb2:que 0}]
12 root -92 - 0K 544K WAIT 1 52.1H 9.09% [intr{irq267: igb2:que 1}]
12 root -92 - 0K 544K WAIT 3 61.5H 8.20% [intr{irq269: igb2:que 3}]
12 root -92 - 0K 544K WAIT 0 69.2H 8.14% [intr{irq256: igb0:que 0}]
58124 root 20 0 97404K 39156K accept 0 0:56 6.76% php-fpm: pool nginx (php-fpm)
12 root -92 - 0K 544K WAIT 1 67.4H 6.37% [intr{irq257: igb0:que 1}]
45047 root 41 0 97468K 39804K piperd 0 1:25 6.28% php-fpm: pool nginx (php-fpm)
12 root -92 - 0K 544K WAIT 3 63.1H 5.00% [intr{irq259: igb0:que 3}]
15231 stfn 20 0 9868K 4624K CPU2 2 0:00 1.56% top -aSH
20 root -16 - 0K 16K pftm 3 149:55 0.69% [pf purge]
59099 root 20 0 23680K 9460K kqread 0 1:16 0.47% nginx: worker process (nginx)
12 root -60 - 0K 544K WAIT 0 24:23 0.16% [intr{swi4: clock (0)}]
50468 root 20 0 6404K 2464K select 1 13:01 0.12% /usr/sbin/syslogd -s -c -c -l /var/dhcpd/var/run/log -P /var/run/syslog.pid -f /etc/syslog.conf -b 10.0.0.2
72979 root 20 0 13912K 11596K select 3 0:01 0.12% /usr/local/sbin/clog_pfb -f /var/log/filter.log
86683 root 20 0 10304K 6168K select 0 0:03 0.09% /usr/local/sbin/openvpn --config /var/etc/openvpn/client2.conf
39603 dhcpd 20 0 16460K 11008K select 1 0:01 0.06% /usr/local/sbin/dhcpd -user dhcpd -group _dhcp -chroot /var/dhcpd -cf /etc/dhcpd.conf -pf /var/run/dhcpd.pid igb2
25 root 20 - 0K 32K sdflus 1 3:06 0.05% [bufdaemon{/ worker}]
1722 root 20 0 6292K 1988K select 0 7:22 0.04% /usr/sbin/powerd -b hadp -a hadp -n hadp

Load with 300mbit throughput:

last pid: 82661; load averages: 7.41, 5.28, 4.66 up 14+05:05:29 18:00:59
229 processes: 12 running, 188 sleeping, 29 waiting
CPU: 8.1% user, 0.0% nice, 17.0% system, 59.9% interrupt, 14.9% idle
Mem: 272M Active, 353M Inact, 796M Wired, 292M Buf, 2486M Free
Swap: 4096M Total, 4096M Free
PID USERNAME PRI NICE SIZE RES STATE C TIME WCPU COMMAND
12 root -92 - 0K 544K CPU2 2 53.9H 47.76% [intr{irq258: igb0:que 2}]
12 root -92 - 0K 544K CPU0 0 75.1H 32.51% [intr{irq266: igb2:que 0}]
12 root -92 - 0K 544K WAIT 3 66.2H 30.62% [intr{irq269: igb2:que 3}]
12 root -92 - 0K 544K WAIT 3 67.6H 27.98% [intr{irq259: igb0:que 3}]
12 root -92 - 0K 544K CPU0 0 74.4H 25.48% [intr{irq256: igb0:que 0}]
12 root -92 - 0K 544K WAIT 1 57.4H 22.66% [intr{irq267: igb2:que 1}]
12 root -92 - 0K 544K RUN 2 62.7H 21.08% [intr{irq268: igb2:que 2}]
11 root 155 ki31 0K 64K RUN 0 144.0H 18.86% [idle{idle: cpu0}]
11 root 155 ki31 0K 64K RUN 1 148.7H 18.59% [idle{idle: cpu1}]
12 root -92 - 0K 544K CPU1 1 73.9H 15.59% [intr{irq257: igb0:que 1}]
92651 root 52 0 95420K 37880K piperd 1 1:26 14.63% php-fpm: pool nginx (php-fpm)
11 root 155 ki31 0K 64K RUN 3 146.5H 14.17% [idle{idle: cpu3}]
11 root 155 ki31 0K 64K RUN 2 155.8H 11.19% [idle{idle: cpu2}]
73008 root 52 0 56396K 42476K piperd 2 13:31 1.61% /usr/local/bin/php_pfb -f /usr/local/pkg/pfblockerng/pfblockerng.inc filterlog
20 root -16 - 0K 16K pftm 3 165:40 0.97% [pf purge]
38833 root 52 0 95356K 38180K accept 2 3:22 0.85% php-fpm: pool nginx (php-fpm)
93443 stfn 20 0 9868K 4516K CPU3 3 0:00 0.69% top -aSH
95491 root 20 0 55856K 19000K select 1 0:38 0.25% /usr/local/libexec/ipsec/charon --use-syslog{charon}
86683 root 20 0 10304K 6216K select 0 14:08 0.24% /usr/local/sbin/openvpn --config /var/etc/openvpn/client2.conf

-

A couple of suggestions:

- Intel NIC tuning. Remove igb RX processing limit by adding the following line to /boot/loader.conf.local (and rebooting):

hw.igb.rx_process_limit=-1

- Unfortunately there also appears to be a routing performance regression with pfSense 2.4.5 - likely resulting from the OS update to FreeBSD 11.3-STABLE. FreeBSD 11+ offers a routing path optimisation known as ip_tryforward(), which will be used to route IP packets (excluding IPSEC) as long as ICMP redirects are disabled. To disable ICMP redirects, under System / Advanced / System Tunables set sysctls net.inet.ip.redirect & net.inet6.ip6.redirect to 0.

This should result in 5-20% reduction of system CPU for routing (depending on workload).

-

@dugeem thank you for the suggestions!

-

Was already disabled as advised here: https://teklager.se/en/knowledge-base/apu2-1-gigabit-throughput-pfsense/

-

I now have disabled. Will let you know what the outcome is.

-

@dugeem said in PC Engines apu2 experiences:

A couple of suggestions:

- Intel NIC tuning. Remove igb RX processing limit by adding the following line to /boot/loader.conf.local (and rebooting):

hw.igb.rx_process_limit=-1

- Unfortunately there also appears to be a routing performance regression with pfSense 2.4.5 - likely resulting from the OS update to FreeBSD 11.3-STABLE. FreeBSD 11+ offers a routing path optimisation known as ip_tryforward(), which will be used to route IP packets (excluding IPSEC) as long as ICMP redirects are disabled. To disable ICMP redirects, under System / Advanced / System Tunables set sysctls net.inet.ip.redirect & net.inet6.ip6.redirect to 0.

This should result in 5-20% reduction of system CPU for routing (depending on workload).

Is #2 a known issue that has already been acknowledged by the pfSense team? I guess I can get away with not disabling ICMP redirects if this will be fixed in the next release anyway.

-

@stefanl Those Teklager guys make some very brave statements, like: "It turns out that internet is wrong :-) "

Simply put, the internet folks are not really wrong. But there is a very specific issue that breaks this brave statement in under a second:

PPPoE is a protocol that breaks multi-queue traffic, because the RSS algorithm cannot distribute the non-IP PPPoE traffic across multiple queues, and therefore across multiple CPU cores. So no matter how hard you try to download in parallel streams, if the WAN protocol does not allow multi-queueing, the whole magic breaks immediately. The result stays the same: you cannot reach 1 Gbit throughput on an APU if you have PPPoE internet. And we haven't even mentioned NAT and pf, which kill the throughput even more. The internet folks are not dumb people, dear Teklager.

Extra: throwing in some random sysctl values without explanation does not help to bust the myth of 1 Gbit on an APU either.
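The queue-distribution argument above can be illustrated with a toy model. This is only a sketch under stated assumptions: real NICs use a Toeplitz hash in hardware, while `zlib.crc32` is used here as a stand-in, and the function name, addresses and ports are made up for illustration. The four queues match the `que 0` to `que 3` threads visible in the top output earlier in the thread.

```python
import zlib

NUM_QUEUES = 4                    # que 0..3, as seen in the top output
ETHERTYPE_IPV4 = 0x0800
ETHERTYPE_PPPOE_SESSION = 0x8864  # PPPoE session frames are not plain IP frames


def pick_rx_queue(ethertype, flow, num_queues=NUM_QUEUES):
    """Toy RSS: hash the flow tuple of plain IPv4 frames, else fall back to queue 0."""
    if ethertype == ETHERTYPE_IPV4:
        key = "|".join(str(part) for part in flow).encode()
        return zlib.crc32(key) % num_queues
    # The NIC cannot see the IP header behind the PPPoE header in this model,
    # so every such frame lands on the same queue (and the same core).
    return 0


# 64 parallel downloads: (src ip, src port, dst ip, dst port), illustrative values.
flows = [("203.0.113.10", 443, "192.0.2.1", 40000 + i) for i in range(64)]

ip_queues = {pick_rx_queue(ETHERTYPE_IPV4, f) for f in flows}
pppoe_queues = {pick_rx_queue(ETHERTYPE_PPPOE_SESSION, f) for f in flows}

print("queues used for plain IPv4:", sorted(ip_queues))
print("queues used for PPPoE:     ", sorted(pppoe_queues))
```

In this model, parallel streams only help when the NIC can hash on the IP flow; with PPPoE on the WAN side, all inbound traffic is handled by a single queue regardless of how many streams are open.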

-

@soder So I did try those fixes (under System -> Advanced -> Networking and the bootloader) on both of my APU2C4s that are connected via site-to-site OpenVPN and I've had all sorts of issues with my Plex servers. I have two Plex servers (one in each network served by those two pfSense boxes). I can understand that these tweaks can have an effect when I'm accessing a remote Plex server but the weird thing is that I also had issues when I'm accessing my local one. Any thoughts?

-

Bit of a long answer ...

pfSense 2.3 (released back in 2016) was based on FreeBSD 10.3 ... which introduced a new optimised IP routing path feature known as ip_tryforward() which replaced the older ip_fastforward(). (Refer to OS section in https://docs.netgate.com/pfsense/en/latest/releases/2-3-new-features-and-changes.html). The tryforward path has been in use ever since.

However back in August 2017 a bug noting ICMP Redirects not working was raised upstream in FreeBSD (refer https://bugs.freebsd.org/bugzilla/show_bug.cgi?id=221137). This bug was finally fixed in around August 2018 in FreeBSD head (now FreeBSD 12) and was also MFCed back to 11-STABLE. Note that the patch was not applied to FreeBSD 11.1 (pfSense 2.4-2.4.3) or 11.2 (pfSense 2.4.4).

pfSense 2.4.5 updated to FreeBSD 11.3-STABLE and thus now incorporates the ICMP Redirect fix. The pfSense default for ICMP Redirects is enabled - which therefore disables use of the tryforward path.

I've raised this in Redmine (refer https://redmine.pfsense.org/issues/10465). JimP has indicated they'll consider adding a pfSense GUI option to simplify disabling ICMP Redirects. No timeframe yet.

For now the workaround is simple enough - and for most networks there is no concern for disabling ICMP Redirects (only really required for networks where there are multiple routers directly accessible from end hosts - refer https://en.wikipedia.org/wiki/Internet_Control_Message_Protocol#Redirect)
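To make the "multiple routers directly accessible from end hosts" condition concrete, here is a simplified Python sketch of when a router would bother sending an ICMP Redirect. It is an illustration only: the function name and addresses are hypothetical, and a real IP stack checks more conditions than this (for example that the packet leaves through the interface it arrived on).

```python
import ipaddress


def would_send_redirect(src_ip, next_hop_ip, lan_network, redirects_enabled=True):
    """Simplified rule: redirect only if the sender could reach the next hop directly."""
    if not redirects_enabled:
        return False
    lan = ipaddress.ip_network(lan_network)
    return (ipaddress.ip_address(src_ip) in lan
            and ipaddress.ip_address(next_hop_ip) in lan)


LAN = "192.168.1.0/24"

# Typical home network: the only next hop is the ISP gateway on the WAN side.
print(would_send_redirect("192.168.1.50", "198.51.100.1", LAN))

# Second router on the same LAN: a redirect would tell the host to use it directly.
print(would_send_redirect("192.168.1.50", "192.168.1.254", LAN))

# Same topology with redirects disabled, as in the workaround.
print(would_send_redirect("192.168.1.50", "192.168.1.254", LAN, redirects_enabled=False))
```

Only the second case loses anything when redirects are turned off, which is why the workaround is harmless on networks with a single router.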

-

Unfortunately some of the advice in the link is incorrect.

Firstly TSO & LRO should always be disabled on routers. Netgate recommend this (hence pfSense defaults) as do others. BSDRP have even tested this and found routing performance drop negligible from enabling TSO & LRO (see link below).

In terms of loader.conf.local suggestions:

- hw.igb.rx_process_limit=-1 is a standard tweak for Intel igb NICs. Performance boost on APU2 though is only ~1%.

- hw.igb.tx_process_limit already defaults to -1 so no need to change this.

- hw.igb.num_queues=1 is not for APU2 as stated. Default 0 allows driver to allocate maximum queues across CPU cores (i210AT has 4 queues; i211AT has 2 queues)

- kern.ipc.nmbclusters=1000000 is unnecessary - default on APU2 with 4GB RAM is ~250000 (mbuf is 2kB - so this represents maximum 12% of RAM). Possibly for high bandwidth routers 500000 mbufs would be prudent. However use the command netstat -m to verify mbuf use prior to changing.

- net.pf.states_hashsize=2097152 is ridiculous for an APU2. If you need to be tweaking this then you'll likely need better hardware.

- hw.igb.rxd=4096 & hw.igb.txd=4096. Increasing NIC descriptors on APU2 will actually decrease performance by 20%. And likely worsens buffer bloat. Default of 1024 is fine.

- net.inet.tcp.* sysctl tuning is for end clients (ie not routers).
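The mbuf arithmetic in the list above can be checked with a few lines of Python. This is a worked calculation, assuming 2 KiB per mbuf cluster and 4 GiB of RAM as stated in the post; the function name is illustrative.

```python
CLUSTER_BYTES = 2 * 1024   # "mbuf is 2kB" per the post
RAM_BYTES = 4 * 1024 ** 3  # APU2 with 4GB RAM


def cluster_ram_percent(nmbclusters, cluster_bytes=CLUSTER_BYTES, ram_bytes=RAM_BYTES):
    """Worst-case share of RAM used if every mbuf cluster were allocated."""
    return 100.0 * nmbclusters * cluster_bytes / ram_bytes


for n in (250_000, 500_000, 1_000_000):
    print(f"kern.ipc.nmbclusters={n}: up to {cluster_ram_percent(n):.1f}% of RAM")
```

The default of roughly 250000 clusters works out to about 12% of RAM, while the suggested 1000000 would allow close to half of the memory to be consumed by network buffers.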

Reference performance data for some of the above: https://bsdrp.net/documentation/technical_docs/performance#nic_drivers_tuning

Even longer version: https://people.freebsd.org/~olivier/talks/2018_AsiaBSDCon_Tuning_FreeBSD_for_routing_and_firewalling-Paper.pdf

The only caveat is that these BSDRP performance numbers were compiled in 2018 before the AMD CPB was enabled in APU2 BIOS - so performance should now exceed this.

My current APU2 performance tweak summary:

- Upgrade BIOS to enable CPB (mainline v4.9.0.2 or later, legacy v4.0.25 or later)

- Disable ICMP Redirects to enable tryforward routing path (under System / Advanced / System Tunables set net.inet.ip.redirect & net.inet6.ip6.redirect to 0)

- Add hw.igb.rx_process_limit=-1 to /boot/loader.conf.local

There may well be other tweaks but for our power efficient APU2 routers these tweaks should serve most well. And when my home internet evolves to 500Mb/s I'll worry some more
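For anyone wanting to verify the two software tweaks after a reboot, the following Python sketch compares saved `sysctl` output against the values from the summary. It assumes the usual `name: value` output format and that `hw.igb.rx_process_limit` can be read back through `sysctl` on the running version; the function names and the sample text are hypothetical, and the BIOS version is not covered.

```python
# Values taken from the tweak summary above.
EXPECTED = {
    "net.inet.ip.redirect": "0",
    "net.inet6.ip6.redirect": "0",
    "hw.igb.rx_process_limit": "-1",
}


def parse_sysctl(text):
    """Parse 'name: value' lines into a dict, ignoring anything else."""
    values = {}
    for line in text.splitlines():
        name, sep, value = line.partition(":")
        if sep:
            values[name.strip()] = value.strip()
    return values


def unmet_tweaks(sysctl_text, expected=EXPECTED):
    """Return the tunables whose current value differs from the expected one."""
    current = parse_sysctl(sysctl_text)
    return {name: current.get(name)
            for name, want in expected.items()
            if current.get(name) != want}


# Made-up sample, as if pasted from:
#   sysctl net.inet.ip.redirect net.inet6.ip6.redirect hw.igb.rx_process_limit
SAMPLE_OUTPUT = """\
net.inet.ip.redirect: 1
net.inet6.ip6.redirect: 0
hw.igb.rx_process_limit: 100
"""

for name, value in unmet_tweaks(SAMPLE_OUTPUT).items():
    print(f"{name} is {value}, expected {EXPECTED[name]}")
```

A tunable that is missing from the pasted output is reported with a value of `None`, so a typo in the name shows up instead of passing silently.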

-

@dugeem said in PC Engines apu2 experiences:

My current APU2 performance tweak summary:

- Upgrade BIOS to enable CPB (mainline v4.9.0.2 or later, legacy v4.0.25 or later)

- Disable ICMP Redirects to enable tryforward routing path (under System / Advanced / System Tunables set net.inet.ip.redirect & net.inet6.ip6.redirect to 0)

- Add hw.igb.rx_process_limit=-1 to /boot/loader.conf.local

There may well be other tweaks but for our power efficient APU2 routers these tweaks should serve most well. And when my home internet evolves to 500Mb/s I'll worry some more

May I ask what kind of Internet access you have? Is it PPPoE or something else?

Because here (I have Internet access via PPPoE) if I activate hw.igb.rx_process_limit=-1 in /boot/loader.conf.local and then disable TSO & LRO in the advanced settings, the PPPoE internet connection cannot be established. (PPPoE is bound on igb1).

Thanks,

fireodo

-

My primary internet is a 100Mb/s HFC service which terminates on Ethernet VLAN.

Your problem is interesting. Last year I had a secondary PPPoE service as well - and had no issues.

If you revert to pfSense defaults (TSO & LRO disabled - comment out anything in loader.conf.local etc) does the PPPoE connection work?

-

@dugeem said in PC Engines apu2 experiences:

@fireodo

If you revert to pfSense defaults (TSO & LRO disabled - comment out anything in loader.conf.local etc) does the PPPoE connection work?

Yes!

-

@dugeem said in PC Engines apu2 experiences:

Unfortunately some of the advice in the link is incorrect.

Firstly TSO & LRO should always be disabled on routers. Netgate recommend this (hence pfSense defaults) as do others. BSDRP have even tested this and found routing performance drop negligible from enabling TSO & LRO (see link below).

In terms of loader.conf.local suggestions:

- hw.igb.rx_process_limit=-1 is a standard tweak for Intel igb NICs. Performance boost on APU2 though is only ~1%.

- hw.igb.tx_process_limit already defaults to -1 so no need to change this.

- hw.igb.num_queues=1 is not for APU2 as stated. Default 0 allows driver to allocate maximum queues across CPU cores (i210AT has 4 queues; i211AT has 2 queues)

- kern.ipc.nmbclusters=1000000 is unnecessary - default on APU2 with 4GB RAM is ~250000 (mbuf is 2kB - so this represents maximum 12% of RAM). Possibly for high bandwidth routers 500000 mbufs would be prudent. However use the command netstat -m to verify mbuf use prior to changing.

- net.pf.states_hashsize=2097152 is ridiculous for an APU2. If you need to be tweaking this then you'll likely need better hardware.

- hw.igb.rxd=4096 & hw.igb.txd=4096. Increasing NIC descriptors on APU2 will actually decrease performance by 20%. And likely worsens buffer bloat. Default of 1024 is fine.

- net.inet.tcp.* sysctl tuning is for end clients (ie not routers).

Reference performance data for some of the above: https://bsdrp.net/documentation/technical_docs/performance#nic_drivers_tuning

Even longer version: https://people.freebsd.org/~olivier/talks/2018_AsiaBSDCon_Tuning_FreeBSD_for_routing_and_firewalling-Paper.pdf

The only caveat is that these BSDRP performance numbers were compiled in 2018 before the AMD CPB was enabled in APU2 BIOS - so performance should now exceed this.

My current APU2 performance tweak summary:

- Upgrade BIOS to enable CPB (mainline v4.9.0.2 or later, legacy v4.0.25 or later)

- Disable ICMP Redirects to enable tryforward routing path (under System / Advanced / System Tunables set net.inet.ip.redirect & net.inet6.ip6.redirect to 0)

- Add hw.igb.rx_process_limit=-1 to /boot/loader.conf.local

There may well be other tweaks but for our power efficient APU2 routers these tweaks should serve most well. And when my home internet evolves to 500Mb/s I'll worry some more

Thanks for the explanation again. I just applied these tweaks to my boxes. I just have a 300Mbps down/up Internet connection anyway but just want to optimize everything for my home networks.

-

@dugeem said in PC Engines apu2 experiences:

Bit of a long answer ...

pfSense 2.3 (released back in 2016) was based on FreeBSD 10.3 ... which introduced a new optimised IP routing path feature known as ip_tryforward() which replaced the older ip_fastforward(). (Refer to OS section in https://docs.netgate.com/pfsense/en/latest/releases/2-3-new-features-and-changes.html). The tryforward path has been in use ever since.

However back in August 2017 a bug noting ICMP Redirects not working was raised upstream in FreeBSD (refer https://bugs.freebsd.org/bugzilla/show_bug.cgi?id=221137). This bug was finally fixed in around August 2018 in FreeBSD head (now FreeBSD 12) and was also MFCed back to 11-STABLE. Note that the patch was not applied to FreeBSD 11.1 (pfSense 2.4-2.4.3) or 11.2 (pfSense 2.4.4).

pfSense 2.4.5 updated to FreeBSD 11.3-STABLE and thus now incorporates the ICMP Redirect fix. The pfSense default for ICMP Redirects is enabled - which therefore disables use of the tryforward path.

I've raised this in Redmine (refer https://redmine.pfsense.org/issues/10465). JimP has indicated they'll consider adding a pfSense GUI option to simplify disabling ICMP Redirects. No timeframe yet.

For now the workaround is simple enough - and for most networks there is no concern for disabling ICMP Redirects (only really required for networks where there are multiple routers directly accessible from end hosts - refer https://en.wikipedia.org/wiki/Internet_Control_Message_Protocol#Redirect)

By the way, why can't ICMP redirect be enabled at the same time as tryforward path?

Also, if tryforward was used ever since does that mean ICMP redirect was disabled by default back then?

-

@dugeem Kudos for the tweaks, could you explain what each of these tweaks will do/accomplish on an APU2C4?

Btw my speeds here are not high, as I am forced to use ADSL, which has a max of 10 Mb/s down; combined with 4G that gives a total of 60 down and 15 up.

Cheers Qinn

-

@Qinn said in PC Engines apu2 experiences:

@dugeem Kudos for the tweaks, could you explain what each of these tweaks will do/accomplish on an APU2C4?

Btw my speeds here are not high, as I am forced to use ADSL, which has a max of 10 Mb/s down; combined with 4G that gives a total of 60 down and 15 up.

Cheers Qinn

I thought he already did explain what the tweaks do on an APU2C4? It's in his post above.

-

@kevindd992002 said in PC Engines apu2 experiences:

@Qinn said in PC Engines apu2 experiences:

@dugeem Kudos for the tweaks, could you explain what each of these tweaks will do/accomplish on an APU2C4?

Btw my speeds here are not high, as I am forced to use ADSL, which has a max of 10 Mb/s down; combined with 4G that gives a total of 60 down and 15 up.

Cheers Qinn

I thought he already did explain what the tweaks do on an APU2C4? It's in his post above.

I know what number one does, but not the other two, and I can't find an explanation of what they do:

2. Disable ICMP Redirects to enable tryforward routing path (under System / Advanced / System Tunables set net.inet.ip.redirect & net.inet6.ip6.redirect to 0)

3. Add hw.igb.rx_process_limit=-1 to /boot/loader.conf.local

-

Weird. Has this issue been around for a while or has it appeared with 2.4.5?

Any hints from PPPoE logging? Also you could try reducing interface MTU (though normally PPPoE gets it right)

-

@dugeem said in PC Engines apu2 experiences:

@fireodo

Weird. Has this issue been around for a while or has it appeared with 2.4.5?

No. I had this issue only after adding hw.igb.rx_process_limit=-1 to /boot/loader.conf.local AND then disabling TSO & LRO in the advanced settings.

Any hints from PPPoE logging? Also you could try reducing interface MTU (though normally PPPoE gets it right)

The only thing that I found was: "ppp: can't lock /var/run/pppoe_wan.pid after 30 attempts"

As I said somewhere up in the thread, without those "tunings" everything works rock solid.

Have a nice Sunday,

fireodo