Log Archiving Plugin/Mailreport Package

-

@RobEmery said in Log Archiving Plugin/Mailreport Package:

l. I'm cu

Wouldn't you be better using syslog or syslog-ng and writing it elsewhere?

-

Use remote syslog. There is no other way to ensure you will actually get all of the log messages.

On 2.5.0 the logs switched from clog to plain text logs with rotation, so there you may be able to script something to grab the archives. It's still best to use remote syslog to ship the log entries off to an archival system directly.
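As a rough illustration of "script something to grab the archives", here is a minimal Python sketch that lists rotated log files in a directory. The naming pattern (`name.log.N`, optionally followed by a compression suffix) is an assumption based on how newsyslog-style rotation usually names files, and `find_rotated_logs` is a hypothetical helper, not part of pfSense. Check the actual file names on your install before relying on it.

```python
import re
from pathlib import Path

# Assumption: rotated files look like "filter.log.0" or "filter.log.1.bz2".
# The live file ("filter.log") is deliberately not matched.
ROTATED = re.compile(r"^(?P<base>.+\.log)\.(?P<index>\d+)(?:\.(?:bz2|gz|xz|zst))?$")


def find_rotated_logs(log_dir):
    """Return rotated log files in log_dir, sorted by base name, then rotation index."""
    found = []
    for path in Path(log_dir).iterdir():
        match = ROTATED.match(path.name)
        if path.is_file() and match:
            found.append((match.group("base"), int(match.group("index")), path))
    found.sort(key=lambda item: (item[0], item[1]))
    return [path for _, _, path in found]
```

Only the rotated copies are picked up, so a file that is still being written to is never archived half-finished.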

-

Yeah, I understand that's an approach; however, I don't have a syslog setup I can utilise for that purpose, so I'd have to provision some more servers to do it and build a stack on top of that to achieve the same thing.

I was leaning towards the plugin option as it keeps it all self-contained and can be "switched on" with a single click: no mess, no fuss, nice and easy for users to activate.

Under 2.5.0, does that basically mean that there's a logrotate.d system similar to most Linux distros? Normally I'd use something like that to archive logs off as required; it sounds like that might be a good seam for this if so.

-

It uses newsyslog, not logrotate, and the newsyslog config is automatically generated so manual edits may not be viable, but it's more likely to work there anyhow.

In this day and age "I don't have a syslog setup" isn't really a good excuse, though. You can find plenty of simple how-tos for doing one with a pi, a small VM, practically anything.

Making the firewall do something it shouldn't in the name of being "self-contained" is a dangerous path to go down.

-

@RobEmery Have you looked at something like https://www.papertrail.com/? It is a remote syslog server that I send my pfSense logs to, and they have a free option.

-

@IsaacFL Thank you for the suggestion, I'll have a look at that option

-

@RobEmery that plugin would be awesome.

We are looking to get all our logs archived in AWS S3 and GCP Storage, so in the event that our infrastructure is burned, we have 'out-of-band' access to our logging for forensic purposes. I don't particularly want the overhead of a set of highly available syslog servers at each site when we are able to get most of our infrastructure already archiving logs to cloud storage.

Thanks

.mt

-

pfSense :

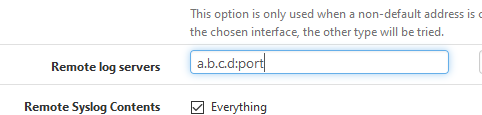

On the "AWS S3 and GCP Storage" side, set up a syslog-type service. Have it listen on its "Internet IP" (this is not activated by default), which should be reachable from pfSense. Be smart and put a firewall rule in front of that interface/IP that accepts only UDP traffic from YOUR pfSense WAN IP, on port 'port'.

Logging, using the Internet as link between the source and destination, is possible.

Whether it is advisable is another question.

-

Don't send syslog unencrypted over the Internet. If you do set it up at a cloud service, ensure you have a VPN tunnel carrying the traffic. Or go with syslog-ng and encrypted syslog.

-

I've had a go at using Papertrail. It's a rather clunky setup in my opinion, and I couldn't achieve what I was actually trying to. The pfSense syslog only supports UDP, so we need to use syslog-ng to repeat those logs over TCP/SSL. However, syslog-ng doesn't work properly with TLS: it ends up not being able to start if it is enabled. So I ended up having to use stunnel to handle the TLS setup into Papertrail as well. I've ended up with 2 extra packages installed to almost achieve what I was after, and I'm not there yet.

Just to reiterate, the problem I'm actually trying to solve with the package idea is that I want to be able to archive the logs from pfSense into encrypted zip files (or similar) on Azure Blob Storage, nightly or thereabouts. I'm not trying to get real-time log monitoring or analysis; it's simply a case of retaining these logs over longer time periods.
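To make the nightly archive idea concrete, here is a minimal Python sketch of just the bundling step: it packs a list of log files into one dated zip. `bundle_logs` and the `logs-YYYY-MM-DD.zip` naming are hypothetical, chosen for illustration. Note that Python's standard `zipfile` module cannot create encrypted archives, so encryption and the upload to Azure Blob Storage are left out here and would need a separate tool or library.

```python
import zipfile
from datetime import date
from pathlib import Path


def bundle_logs(log_files, out_dir, day=None):
    """Pack log_files into out_dir/logs-YYYY-MM-DD.zip and return the archive path."""
    day = day or date.today()
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    archive = out_dir / f"logs-{day.isoformat()}.zip"
    with zipfile.ZipFile(archive, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for log_file in log_files:
            log_file = Path(log_file)
            # Store only the file name, not the full path on the firewall.
            zf.write(log_file, arcname=log_file.name)
    return archive
```

Run from cron once a night, this would yield one archive per day, which suits retention rather than real-time analysis.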

-

What happens if the WAN circuit goes down? Do you have multiple WAN circuits?

-

Yeah, I have 2 internet connections. But again, it's archiving that I'm trying to achieve, so it can try again later and succeed then; I'm really not looking for real-time log streaming.
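The "try again later" behaviour can be sketched as a small spool directory: archives wait there until an upload succeeds, and anything that fails is simply left for the next run. This is a minimal Python sketch under stated assumptions: `flush_pending` is a hypothetical helper, and `upload` is a placeholder callable for whatever actually ships the file, assumed to raise `OSError` (which covers connection and timeout errors) on failure.

```python
from pathlib import Path


def flush_pending(pending_dir, upload):
    """Try to upload every file in pending_dir.

    Files that upload successfully are deleted; files whose upload raises
    OSError stay in place for the next run. Returns (sent, kept) name lists.
    """
    sent, kept = [], []
    for path in sorted(Path(pending_dir).iterdir()):
        if not path.is_file():
            continue
        try:
            upload(path)  # hypothetical uploader supplied by the caller
        except OSError:
            kept.append(path.name)
            continue
        path.unlink()
        sent.append(path.name)
    return sent, kept
```

If the WAN is down at the scheduled time, nothing is lost: the next run picks up whatever is still in the directory.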