PfSense Custom Load Balancer Monitoring Returning "check http code use ssl"

-

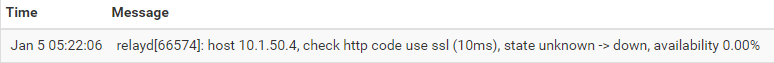

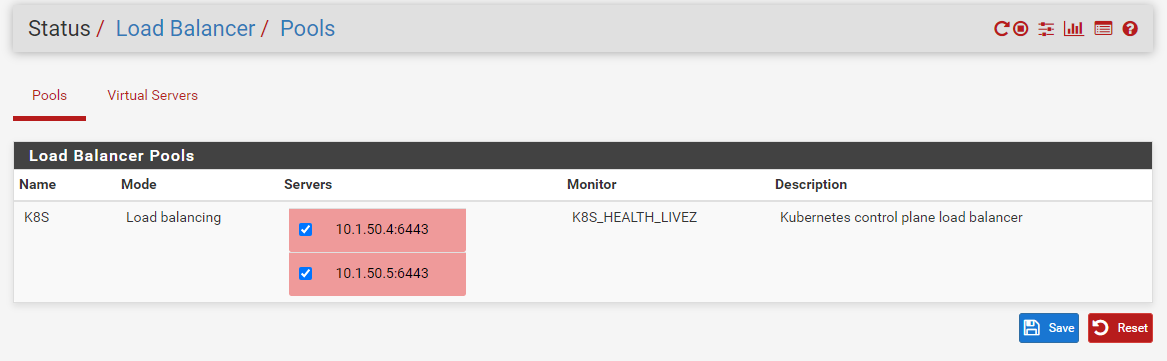

Status of the Load Balancer. Both show down.

I'm not familiar with HAProxy. Is there some setting that needs to be updated to make use of that instead of relayd?

-

HAProxy is a separate package that needs to be installed:

https://docs.netgate.com/pfsense/en/latest/packages/haproxy.html

Steve

-

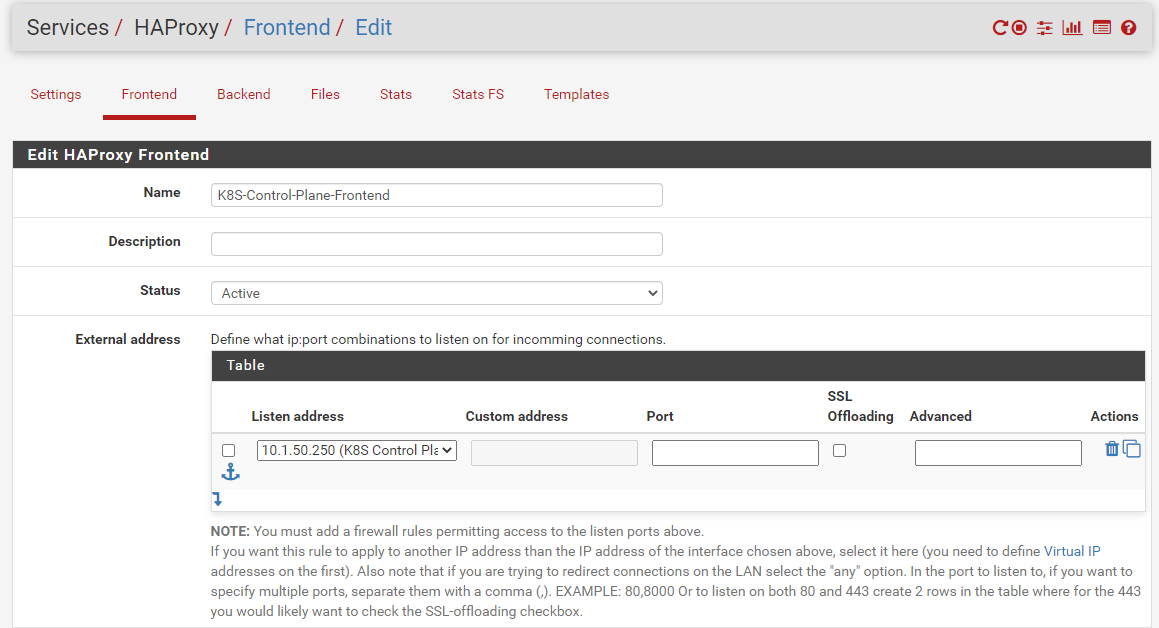

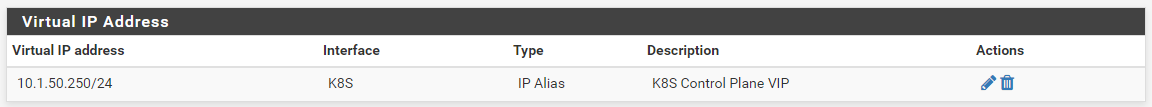

@stephenw10 Been playing around with HAProxy for a bit since my last post. Is there a way to use a "Virtual Server" or "Virtual IP" like in the pfSense Load Balancer service?

I would want a request to come in on 10.1.50.250 (which is not an actual server) and then get load balanced between two servers (assuming both are up).
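To make the desired behaviour concrete, here is a minimal Python sketch of round-robin selection that skips backends marked down. It is only an illustration of the idea, not how HAProxy or relayd implement it; the class name `RoundRobinPool` and the backend addresses (the .4 and .5 machines on port 6443 mentioned elsewhere in the thread) are assumptions chosen for the example.

```python
from itertools import cycle
from typing import Dict, Iterator, List, Optional


class RoundRobinPool:
    """Hand out backends in turn, skipping any that are marked down."""

    def __init__(self, backends: List[str]) -> None:
        self._backends = list(backends)
        # Every backend starts out as "up" until a health check says otherwise.
        self._up: Dict[str, bool] = {b: True for b in self._backends}
        self._ring: Iterator[str] = cycle(self._backends)

    def mark(self, backend: str, up: bool) -> None:
        """Record the result of a (hypothetical) health check."""
        self._up[backend] = up

    def pick(self) -> Optional[str]:
        """Return the next healthy backend, or None if all are down."""
        # Look at each backend at most once per call.
        for _ in range(len(self._backends)):
            candidate = next(self._ring)
            if self._up[candidate]:
                return candidate
        return None
```

With both backends up, `pick()` alternates between them; once one is marked down, every request goes to the remaining one, and `None` is returned when nothing is healthy.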

-

@stephenw10

I created a Firewall->Virtual IP and then assigned it to the external addresses table; that, however, did not seem to pass a connection through (I do have the .4 machine showing green in HAProxy Stats).

I also see under the table there is a hyperlink mentioning "Virtual IP" which directs you to /haproxy/firewall_virtual_ip.php; however, that page shows a 404. I have a feeling that an HAProxy-specific Virtual IP needs to be set instead of a pfSense Virtual IP, correct?

-


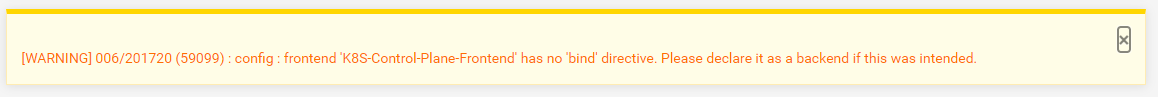

@jimp @stephenw10 @PiBa

Also I notice I am getting this warning but don't see any sections that would mention "bind" in this context.

-

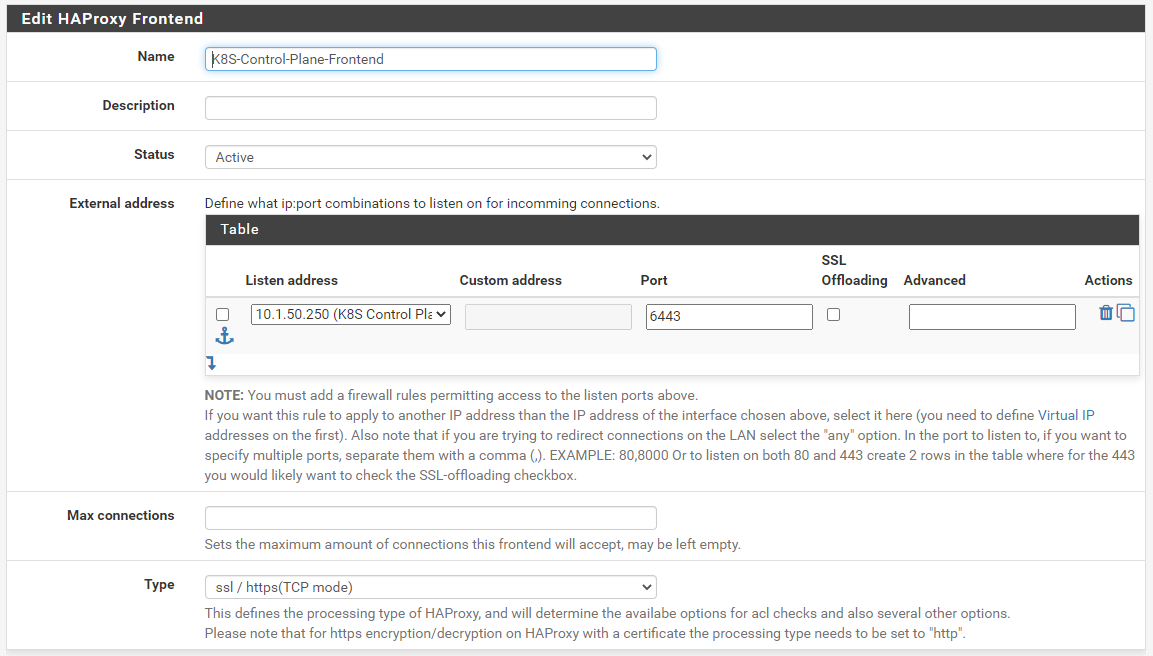

@thepiemonster

Your frontend should have a port configured, the 6443 one? Or perhaps just 443.
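The warning in the screenshot is not reproduced in the text, so the following is only a guess at what it points to: a frontend with no listening address and port. As a rough sketch, this Python helper scans HAProxy configuration text and lists `frontend` sections that contain no `bind` line. The function name and the sample section names are hypothetical, and the parser is deliberately simplified (it only knows the `global`, `defaults`, `frontend`, `backend` and `listen` section keywords and treats everything after `#` as a comment).

```python
from typing import List, Optional

# Simplified: real HAProxy configs can contain other section types as well.
SECTION_KEYWORDS = {"global", "defaults", "frontend", "backend", "listen"}


def frontends_without_bind(config_text: str) -> List[str]:
    """Return names of frontend sections that contain no 'bind' line."""
    missing: List[str] = []
    current: Optional[str] = None  # name of the frontend being scanned, if any
    has_bind = False

    def close_section() -> None:
        # Called whenever a section ends; records a frontend that never bound.
        if current is not None and not has_bind:
            missing.append(current)

    for raw in config_text.splitlines():
        words = raw.split("#", 1)[0].split()
        if not words:
            continue
        if words[0] in SECTION_KEYWORDS:
            close_section()
            is_frontend = words[0] == "frontend" and len(words) > 1
            current = words[1] if is_frontend else None
            has_bind = False
        elif words[0] == "bind" and current is not None:
            has_bind = True
    close_section()
    return missing
```

Run against a config where one frontend has `bind 10.1.50.250:6443` and another has no `bind` at all, it returns only the name of the second one.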

As for the virtual IP used, it's the regular pfSense one. HAProxy doesn't have its own virtual IPs, but the pages were moved to a /haproxy/ folder in the website structure, and the link was apparently never corrected.

-

@piba

These settings got it working.

As for the hyperlink issue, is there a bug out so the owners can fix that or should I submit something somewhere?

-

Pull Request to fix above mentioned issue.

https://github.com/pfsense/FreeBSD-ports/pull/1023

-

@thepiemonster

Good that you got it working, and thanks for fixing the link with a GitHub pull request including a version bump. That's the way to fix things, if you can. The other option is to create a Redmine issue, but I'm not looking there too often myself, and for a text/link issue I would probably just forget to do it again. Though there are more people looking, of course...

-

@piba

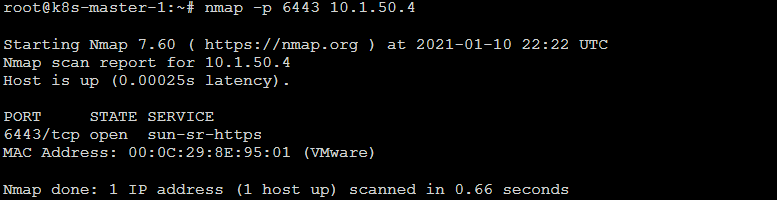

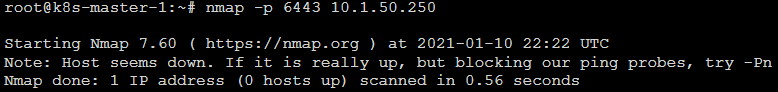

So I'm having some network issues with trying to join a second node to the cluster. Per the kubeadm log below there is an error when trying to reach out to the virtual IP. Seems like the request is not being passed to the host. However, when running kubectl commands from my PC, which is pointing to the virtual IP, I am able to get responses. So I know the VIP is working, but perhaps there are certain requests that HAProxy is not passing through?

root@k8s-master-1:~# kubeadm join 10.1.50.4:6443 --token 9f5ciy.94mbxmf3385xbxg3 --discovery-token-ca-cert-hash sha256:7xxx9979cace02a9f1e98d82253ef9a8c1594c80ea0860aba6ef628cda7103fb --control-plane --certificate-key 19a3022e07555x9222xx74a969158bf9e2ca10f38d6879166aef4bf4b70b4b4c --v=5

I0110 22:31:51.351625 8951 join.go:395] [preflight] found NodeName empty; using OS hostname as NodeName
I0110 22:31:51.352158 8951 join.go:399] [preflight] found advertiseAddress empty; using default interface's IP address as advertiseAddress
I0110 22:31:51.352604 8951 initconfiguration.go:104] detected and using CRI socket: /var/run/dockershim.sock
I0110 22:31:51.353207 8951 interface.go:400] Looking for default routes with IPv4 addresses
I0110 22:31:51.353442 8951 interface.go:405] Default route transits interface "ens160"
I0110 22:31:51.353953 8951 interface.go:208] Interface ens160 is up
I0110 22:31:51.354176 8951 interface.go:256] Interface "ens160" has 2 addresses :[10.1.50.5/24 fe80::20c:29ff:fe2d:674d/64].
I0110 22:31:51.354337 8951 interface.go:223] Checking addr 10.1.50.5/24.
I0110 22:31:51.354454 8951 interface.go:230] IP found 10.1.50.5
I0110 22:31:51.354570 8951 interface.go:262] Found valid IPv4 address 10.1.50.5 for interface "ens160".
I0110 22:31:51.354685 8951 interface.go:411] Found active IP 10.1.50.5
[preflight] Running pre-flight checks
I0110 22:31:51.355035 8951 preflight.go:90] [preflight] Running general checks
I0110 22:31:51.355209 8951 checks.go:249] validating the existence and emptiness of directory /etc/kubernetes/manifests
I0110 22:31:51.355406 8951 checks.go:286] validating the existence of file /etc/kubernetes/kubelet.conf
I0110 22:31:51.355536 8951 checks.go:286] validating the existence of file /etc/kubernetes/bootstrap-kubelet.conf
I0110 22:31:51.355653 8951 checks.go:102] validating the container runtime
I0110 22:31:51.452205 8951 checks.go:128] validating if the "docker" service is enabled and active
[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/
I0110 22:31:51.587806 8951 checks.go:335] validating the contents of file /proc/sys/net/bridge/bridge-nf-call-iptables
I0110 22:31:51.587896 8951 checks.go:335] validating the contents of file /proc/sys/net/ipv4/ip_forward
I0110 22:31:51.587954 8951 checks.go:649] validating whether swap is enabled or not
I0110 22:31:51.588025 8951 checks.go:376] validating the presence of executable conntrack
I0110 22:31:51.588087 8951 checks.go:376] validating the presence of executable ip
I0110 22:31:51.588143 8951 checks.go:376] validating the presence of executable iptables
I0110 22:31:51.588210 8951 checks.go:376] validating the presence of executable mount
I0110 22:31:51.588265 8951 checks.go:376] validating the presence of executable nsenter
I0110 22:31:51.588299 8951 checks.go:376] validating the presence of executable ebtables
I0110 22:31:51.588334 8951 checks.go:376] validating the presence of executable ethtool
I0110 22:31:51.588369 8951 checks.go:376] validating the presence of executable socat
I0110 22:31:51.588411 8951 checks.go:376] validating the presence of executable tc
I0110 22:31:51.588448 8951 checks.go:376] validating the presence of executable touch
I0110 22:31:51.588486 8951 checks.go:520] running all checks
I0110 22:31:51.718877 8951 checks.go:406] checking whether the given node name is reachable using net.LookupHost
I0110 22:31:51.719390 8951 checks.go:618] validating kubelet version
I0110 22:31:51.877366 8951 checks.go:128] validating if the "kubelet" service is enabled and active
I0110 22:31:51.902537 8951 checks.go:201] validating availability of port 10250
I0110 22:31:51.902826 8951 checks.go:432] validating if the connectivity type is via proxy or direct
I0110 22:31:51.902926 8951 join.go:465] [preflight] Discovering cluster-info
I0110 22:31:51.902986 8951 token.go:78] [discovery] Created cluster-info discovery client, requesting info from "10.1.50.4:6443"
I0110 22:31:51.923885 8951 token.go:116] [discovery] Requesting info from "10.1.50.4:6443" again to validate TLS against the pinned public key
I0110 22:31:51.941107 8951 token.go:133] [discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "10.1.50.4:6443"
I0110 22:31:51.941186 8951 discovery.go:51] [discovery] Using provided TLSBootstrapToken as authentication credentials for the join process
I0110 22:31:51.941226 8951 join.go:479] [preflight] Fetching init configuration
I0110 22:31:51.941272 8951 join.go:517] [preflight] Retrieving KubeConfig objects
[preflight] Reading configuration from the cluster...
[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'
I0110 22:31:51.962083 8951 interface.go:400] Looking for default routes with IPv4 addresses
I0110 22:31:51.962125 8951 interface.go:405] Default route transits interface "ens160"
I0110 22:31:51.962698 8951 interface.go:208] Interface ens160 is up
I0110 22:31:51.962843 8951 interface.go:256] Interface "ens160" has 2 addresses :[10.1.50.5/24 fe80::20c:29ff:fe2d:674d/64].
I0110 22:31:51.962891 8951 interface.go:223] Checking addr 10.1.50.5/24.
I0110 22:31:51.962919 8951 interface.go:230] IP found 10.1.50.5
I0110 22:31:51.963085 8951 interface.go:262] Found valid IPv4 address 10.1.50.5 for interface "ens160".
I0110 22:31:51.963362 8951 interface.go:411] Found active IP 10.1.50.5
I0110 22:31:51.974786 8951 preflight.go:101] [preflight] Running configuration dependant checks
[preflight] Running pre-flight checks before initializing the new control plane instance
I0110 22:31:51.975248 8951 checks.go:577] validating Kubernetes and kubeadm version
I0110 22:31:51.975405 8951 checks.go:166] validating if the firewall is enabled and active
I0110 22:31:51.998043 8951 checks.go:201] validating availability of port 6443
I0110 22:31:51.998416 8951 checks.go:201] validating availability of port 10259
I0110 22:31:51.998619 8951 checks.go:201] validating availability of port 10257
I0110 22:31:51.998813 8951 checks.go:286] validating the existence of file /etc/kubernetes/manifests/kube-apiserver.yaml
I0110 22:31:51.998961 8951 checks.go:286] validating the existence of file /etc/kubernetes/manifests/kube-controller-manager.yaml
I0110 22:31:51.999125 8951 checks.go:286] validating the existence of file /etc/kubernetes/manifests/kube-scheduler.yaml
I0110 22:31:51.999305 8951 checks.go:286] validating the existence of file /etc/kubernetes/manifests/etcd.yaml
I0110 22:31:51.999442 8951 checks.go:432] validating if the connectivity type is via proxy or direct
I0110 22:31:51.999592 8951 checks.go:471] validating http connectivity to first IP address in the CIDR
I0110 22:31:51.999752 8951 checks.go:471] validating http connectivity to first IP address in the CIDR
I0110 22:31:51.999895 8951 checks.go:201] validating availability of port 2379
I0110 22:31:52.000115 8951 checks.go:201] validating availability of port 2380
I0110 22:31:52.000316 8951 checks.go:249] validating the existence and emptiness of directory /var/lib/etcd
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
I0110 22:31:52.123542 8951 checks.go:839] image exists: k8s.gcr.io/kube-apiserver:v1.20.1
I0110 22:31:52.214173 8951 checks.go:839] image exists: k8s.gcr.io/kube-controller-manager:v1.20.1
I0110 22:31:52.299598 8951 checks.go:839] image exists: k8s.gcr.io/kube-scheduler:v1.20.1
I0110 22:31:52.399299 8951 checks.go:839] image exists: k8s.gcr.io/kube-proxy:v1.20.1
I0110 22:31:52.489050 8951 checks.go:839] image exists: k8s.gcr.io/pause:3.2
I0110 22:31:52.575446 8951 checks.go:839] image exists: k8s.gcr.io/etcd:3.4.13-0
I0110 22:31:52.663021 8951 checks.go:839] image exists: k8s.gcr.io/coredns:1.7.0
[download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
[certs] Using certificateDir folder "/etc/kubernetes/pki"
I0110 22:31:52.674828 8951 certs.go:45] creating PKI assets
I0110 22:31:52.675278 8951 certs.go:474] validating certificate period for etcd/ca certificate
[certs] Generating "etcd/server" certificate and key
[certs] etcd/server serving cert is signed for DNS names [k8s-master-1 localhost] and IPs [10.1.50.5 127.0.0.1 ::1]
[certs] Generating "etcd/peer" certificate and key
[certs] etcd/peer serving cert is signed for DNS names [k8s-master-1 localhost] and IPs [10.1.50.5 127.0.0.1 ::1]
[certs] Generating "etcd/healthcheck-client" certificate and key
[certs] Generating "apiserver-etcd-client" certificate and key
I0110 22:31:54.827565 8951 certs.go:474] validating certificate period for ca certificate
[certs] Generating "apiserver-kubelet-client" certificate and key
[certs] Generating "apiserver" certificate and key
[certs] apiserver serving cert is signed for DNS names [k8s-master-1 kube.local kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.1.50.5 10.1.50.250]
I0110 22:31:55.501127 8951 certs.go:474] validating certificate period for front-proxy-ca certificate
[certs] Generating "front-proxy-client" certificate and key
[certs] Valid certificates and keys now exist in "/etc/kubernetes/pki"
I0110 22:31:55.647728 8951 certs.go:76] creating new public/private key files for signing service account users
[certs] Using the existing "sa" key
[kubeconfig] Generating kubeconfig files
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[kubeconfig] Writing "admin.conf" kubeconfig file
[kubeconfig] Writing "controller-manager.conf" kubeconfig file
[kubeconfig] Writing "scheduler.conf" kubeconfig file
[control-plane] Using manifest folder "/etc/kubernetes/manifests"
[control-plane] Creating static Pod manifest for "kube-apiserver"
I0110 22:31:56.359925 8951 manifests.go:96] [control-plane] getting StaticPodSpecs
I0110 22:31:56.360794 8951 certs.go:474] validating certificate period for CA certificate
I0110 22:31:56.361158 8951 manifests.go:109] [control-plane] adding volume "ca-certs" for component "kube-apiserver"
I0110 22:31:56.361299 8951 manifests.go:109] [control-plane] adding volume "etc-ca-certificates" for component "kube-apiserver"
I0110 22:31:56.361423 8951 manifests.go:109] [control-plane] adding volume "k8s-certs" for component "kube-apiserver"
I0110 22:31:56.361564 8951 manifests.go:109] [control-plane] adding volume "usr-local-share-ca-certificates" for component "kube-apiserver"
I0110 22:31:56.361784 8951 manifests.go:109] [control-plane] adding volume "usr-share-ca-certificates" for component "kube-apiserver"
I0110 22:31:56.376555 8951 manifests.go:126] [control-plane] wrote static Pod manifest for component "kube-apiserver" to "/etc/kubernetes/manifests/kube-apiserver.yaml"
[control-plane] Creating static Pod manifest for "kube-controller-manager"
I0110 22:31:56.376935 8951 manifests.go:96] [control-plane] getting StaticPodSpecs
I0110 22:31:56.377748 8951 manifests.go:109] [control-plane] adding volume "ca-certs" for component "kube-controller-manager"
I0110 22:31:56.377975 8951 manifests.go:109] [control-plane] adding volume "etc-ca-certificates" for component "kube-controller-manager"
I0110 22:31:56.378204 8951 manifests.go:109] [control-plane] adding volume "flexvolume-dir" for component "kube-controller-manager"
I0110 22:31:56.378387 8951 manifests.go:109] [control-plane] adding volume "k8s-certs" for component "kube-controller-manager"
I0110 22:31:56.378594 8951 manifests.go:109] [control-plane] adding volume "kubeconfig" for component "kube-controller-manager"
I0110 22:31:56.378762 8951 manifests.go:109] [control-plane] adding volume "usr-local-share-ca-certificates" for component "kube-controller-manager"
I0110 22:31:56.378910 8951 manifests.go:109] [control-plane] adding volume "usr-share-ca-certificates" for component "kube-controller-manager"
I0110 22:31:56.380857 8951 manifests.go:126] [control-plane] wrote static Pod manifest for component "kube-controller-manager" to "/etc/kubernetes/manifests/kube-controller-manager.yaml"
[control-plane] Creating static Pod manifest for "kube-scheduler"
I0110 22:31:56.381200 8951 manifests.go:96] [control-plane] getting StaticPodSpecs
I0110 22:31:56.382005 8951 manifests.go:109] [control-plane] adding volume "kubeconfig" for component "kube-scheduler"
I0110 22:31:56.383216 8951 manifests.go:126] [control-plane] wrote static Pod manifest for component "kube-scheduler" to "/etc/kubernetes/manifests/kube-scheduler.yaml"
[check-etcd] Checking that the etcd cluster is healthy
I0110 22:31:56.386079 8951 local.go:80] [etcd] Checking etcd cluster health
I0110 22:31:56.386308 8951 local.go:83] creating etcd client that connects to etcd pods
I0110 22:31:56.386536 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:32:00.513351 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:32:03.562540 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:32:06.632801 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:32:09.731111 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:32:12.741050 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:32:15.822008 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:32:18.888305 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:32:21.984626 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:32:25.073845 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:32:28.175733 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:32:31.187799 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:32:34.276609 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:32:37.342296 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:32:40.429410 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:32:43.482728 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:32:46.555632 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:32:49.666323 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:32:52.692295 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:32:55.762607 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:32:58.850890 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:33:01.943495 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:33:05.044608 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:33:08.059751 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:33:11.132062 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:33:14.249961 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:33:17.266901 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:33:20.404860 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:33:23.459946 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:33:26.514801 8951 etcd.go:177] retrieving etcd endpoints from "kubeadm.kubernetes.io/etcd.advertise-client-urls" annotation in etcd Pods
I0110 22:33:28.534646 8951 etcd.go:201] retrieving etcd endpoints from the cluster status
Get "https://10.1.50.250:6443/api/v1/namespaces/kube-system/configmaps/kubeadm-config?timeout=10s": dial tcp 10.1.50.250:6443: connect: no route to host
error execution phase check-etcd
k8s.io/kubernetes/cmd/kubeadm/app/cmd/phases/workflow.(*Runner).Run.func1
    /workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/cmd/kubeadm/app/cmd/phases/workflow/runner.go:235
k8s.io/kubernetes/cmd/kubeadm/app/cmd/phases/workflow.(*Runner).visitAll
    /workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/cmd/kubeadm/app/cmd/phases/workflow/runner.go:421
k8s.io/kubernetes/cmd/kubeadm/app/cmd/phases/workflow.(*Runner).Run
    /workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/cmd/kubeadm/app/cmd/phases/workflow/runner.go:207
k8s.io/kubernetes/cmd/kubeadm/app/cmd.newCmdJoin.func1
    /workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/cmd/kubeadm/app/cmd/join.go:172
k8s.io/kubernetes/vendor/github.com/spf13/cobra.(*Command).execute
    /workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/github.com/spf13/cobra/command.go:850
k8s.io/kubernetes/vendor/github.com/spf13/cobra.(*Command).ExecuteC
    /workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/github.com/spf13/cobra/command.go:958
k8s.io/kubernetes/vendor/github.com/spf13/cobra.(*Command).Execute
    /workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/vendor/github.com/spf13/cobra/command.go:895
k8s.io/kubernetes/cmd/kubeadm/app.Run
    /workspace/src/k8s.io/kubernetes/_output/dockerized/go/src/k8s.io/kubernetes/cmd/kubeadm/app/kubeadm.go:50
main.main
    _output/dockerized/go/src/k8s.io/kubernetes/cmd/kubeadm/kubeadm.go:25
runtime.main
    /usr/local/go/src/runtime/proc.go:204
runtime.goexit
    /usr/local/go/src/runtime/asm_amd64.s:1374

Network Tests - Requests are coming from 10.1.50.5
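The failing step in the log is a plain TCP connect to 10.1.50.250:6443 that ends in "no route to host". As a rough way to repeat that check from the node, here is a minimal Python sketch (not part of the original post) that attempts one TCP connection and classifies the outcome. The function name `probe_tcp` and the result strings are made up for this example; the errno values are the standard ones from Python's `errno` module.

```python
import errno
import socket


def probe_tcp(host: str, port: int, timeout: float = 3.0) -> str:
    """Try one TCP connection and classify the outcome."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "open"
    except socket.timeout:
        # No answer at all within the timeout (packets silently dropped).
        return "timeout"
    except ConnectionRefusedError:
        # The host answered, but nothing is listening on that port.
        return "refused"
    except OSError as exc:
        # EHOSTUNREACH is what Go reports as "no route to host".
        if exc.errno in (errno.EHOSTUNREACH, errno.ENETUNREACH):
            return "no route"
        return f"error: {exc}"
```

Calling `probe_tcp("10.1.50.250", 6443)` from the joining node and from the PC would show whether the two clients really see the VIP differently. On a directly connected subnet, a "no route" result often means the address could not be resolved at layer 2, which points at where the VIP is attached rather than at HAProxy itself.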

-

@thepiemonster said in PfSense Custom Load Balancer Monitoring Returning "check http code use ssl":

I0110 22:33:28.534646 8951 etcd.go:201] retrieving etcd endpoints from the cluster status

Get "https://10.1.50.250:6443/api/v1/namespaces/kube-system/configmaps/kubeadm-config?timeout=10s": dial tcp 10.1.50.250:6443: connect: no route to host

error execution phase check-etcd

It seems the virtual IP is inside the same network subnet as the node IP. Are you sure it's configured on the correct pfSense interface so it is reachable from the node without routing? Are the subnet sizes correctly configured as /24 everywhere?

-

@piba

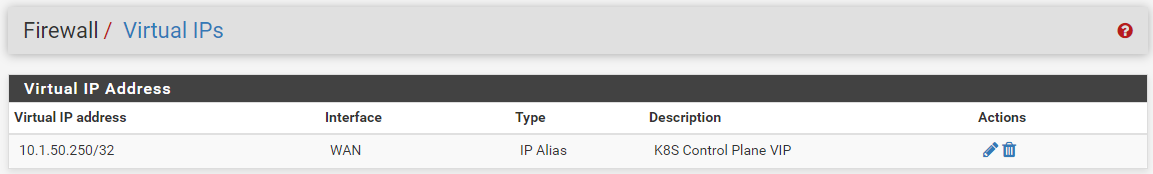

I have several interfaces in pfSense (interface name - interface subnet):

- WAN - NA

- LAN - 10.1.1.0/24

- K8S - 10.1.50.0/24

- Plus More - NA

The .4 and .5 machines are part of the K8S subnet.

The VIP is attached to the WAN interface currently.

Are you saying that the VIP interface should be on the same interface with the nodes?

-

@thepiemonster said in PfSense Custom Load Balancer Monitoring Returning "check http code use ssl":

Are you saying that the VIP interface should be on the same interface with the nodes?

If it is in the same subnet then it should be on the same network. So yes, in this case it needs to be on the K8S interface, and with a /24 subnet size.
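The "same subnet, same interface" rule can be checked mechanically. The sketch below uses Python's standard `ipaddress` module with the subnets listed earlier in the thread; WAN is left out because its subnet is not given, and the function name `interfaces_containing` is just an illustrative choice.

```python
import ipaddress
from typing import Dict, List


def interfaces_containing(ip: str, subnets: Dict[str, str]) -> List[str]:
    """Return the names of interfaces whose subnet contains ip."""
    addr = ipaddress.ip_address(ip)
    return [
        name
        for name, cidr in subnets.items()
        if addr in ipaddress.ip_network(cidr)
    ]


# Subnets as listed in the thread; WAN is omitted because its subnet is unknown.
SUBNETS = {"LAN": "10.1.1.0/24", "K8S": "10.1.50.0/24"}
```

Here `interfaces_containing("10.1.50.250", SUBNETS)` gives `["K8S"]`, which matches the advice to move the VIP from WAN to the K8S interface. An address that falls in none of the listed subnets gives an empty list.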

Edit:

You could also choose to put the virtual IP on a different unused subnet like 10.1.51.250/32 and then put it on the lo0 loopback interface.

-

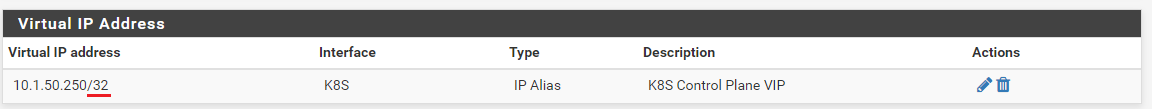

@piba said in PfSense Custom Load Balancer Monitoring Returning "check http code use ssl":

If it is in the same subnet then it should be on the same network. So yes, in this case it needs to be on the K8S interface, and with a /24 subnet size.

Are you saying that the VIP should be a /24 instead of a /32? Since it's just a single address that the nodes would be pointing to, shouldn't the VIP subnet be a /32 regardless of what the interface subnet size is?

-

An IPAlias can usually be either. If it's not in some existing subnet though it should have the real subnet mask.

Steve

-

@thepiemonster

The 10.1.50.4/24 host will send ARP requests for the 10.1.50.250 address, so IMHO 10.1.50.250 should have that same /24 to know it should respond to requests from that client. Maybe it works with /32, but I think that is 'technically wrong'. An IP alias is a 'real IP'; it's not like proxy ARP, where you would define a range of IPs to respond to without the IP stack of the OS even really knowing about them.

-

I agree, I would rather see the correct subnet set on every IPAlias, but it will work with /32 on there. If you see that, it's not normally the cause of a problem.

Steve
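The /24-versus-/32 point comes down to which peers the alias itself considers to be on its own network. A minimal sketch with Python's standard `ipaddress` module shows only that prefix arithmetic; it says nothing about how pfSense actually answers ARP, which, as noted above, works either way in practice. The helper name `on_link` is an assumption for this example.

```python
import ipaddress


def on_link(local_cidr: str, peer: str) -> bool:
    """True if peer falls inside the network implied by local_cidr."""
    iface = ipaddress.ip_interface(local_cidr)
    return ipaddress.ip_address(peer) in iface.network
```

`on_link("10.1.50.250/24", "10.1.50.4")` is true, while `on_link("10.1.50.250/32", "10.1.50.4")` is false, because a /32 network contains only the alias address itself.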

-

@piba @stephenw10

Ok you convinced me. Updated the subnet.


I'm still unsure if it's related to the virtual IP or not (I don't believe it is), but I have been running into an issue when I try to join a second K8S control-plane node to the cluster. It runs through its join process and then seems to lose a connection and ends up killing the cluster. You can see a post I made about it here on ServerFault if you want. Assuming the issue is not VIP related, then I would say this Netgate forum thread is closed. Thank you everyone again for your help in this matter!